We often get asked how Solace PubSub+ compares with alternative event streaming platforms and other technologies that help enterprises enable the adoption of event-driven architecture. From open-source software like Apache Kafka, Apache ActiveMQ, and RabbitMQ, to commercial event brokers like Confluent Cloud, Amazon Simple Queue Service (SQS), and Red Hat AMQ, and even to integration technologies like MuleSoft’s Anypoint Platform, people considering Solace want to know where we fit, how we stack up and, more broadly, how to think about our platform and the value it can help them realize.

The points of reference for comparing event streaming platforms and technologies for event-driven architecture can vary widely, so I’m here to offer a clear and honest perspective on the options that are out there, the specific problems and opportunities that can be addressed with our platform, and why I think Solace is leading the pack.

Our most unique, most differentiating, and most powerful value proposition is that we help enterprises adopt, manage, and leverage event-driven architecture (EDA). In my view we do it better than any company in the world, and in this post I’ll explain why I believe that.

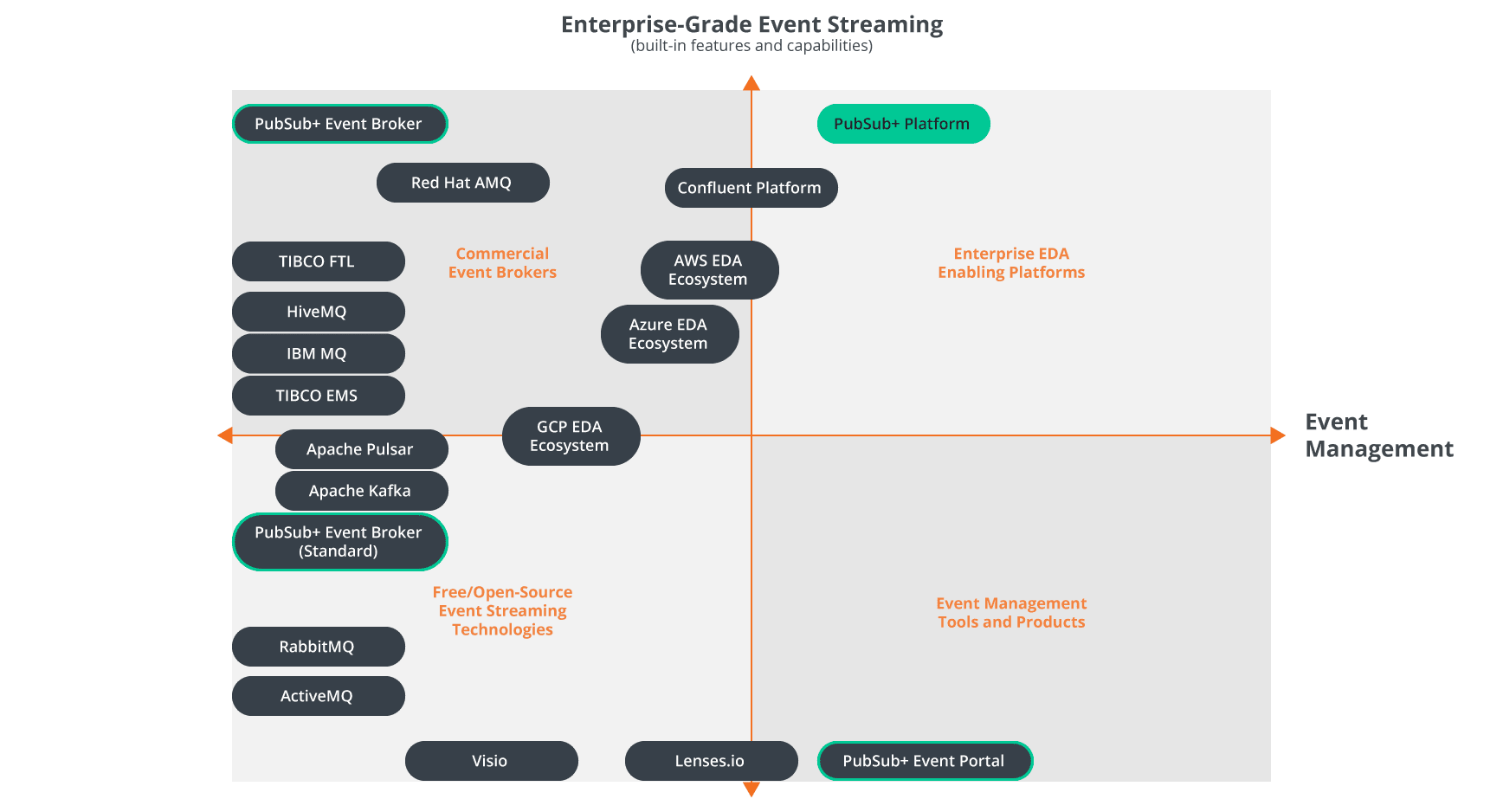

It all stems from our perspective on the two major capabilities enterprises need to adopt and leverage event-driven architecture at scale:

- Enterprise-grade event streaming.

- Event management.

From this perspective, here is my view of the market:

Now let me explain my rationale for evaluating EDA enabling technologies in this way, and why I have Solace PubSub+ leading on each axis.

What You Need to Adopt and Leverage Event-Driven Architecture at Scale

In a nutshell, you need two things: (1) enterprise-grade event streaming and (2) event management capabilities.

Let’s start with the rationale for the x-axis and the y-axis.

We know event-driven architecture is a rising priority for modern enterprises that want to be more real-time, agile, and resilient in this era of unprecedented business disruption, technological advancement, and rising consumer expectations. But event-driven architecture can be difficult to implement. Especially when:

- You have a variety of IT and OT distributed across hybrid-cloud, multi-cloud, and IoT environments.

- You have a legacy estate and architecture that was not designed for events.

- Tooling and best practices to design, deploy and manage event-driven architecture are scarce.

When I boil these challenges down and think about what enterprises are trying to achieve with event-driven architecture, I think they need two things:

- (Y) Enterprise-grade event streaming capabilities.

- (X) Event management capabilities.

Enterprise-Grade Event Streaming

By enterprise-grade event streaming, I mean the capabilities that enable an enterprise to stream events quickly, reliably, and securely (and here’s the tricky part) across hybrid-cloud, multi-cloud, and IoT environments, and across other elements that may be part of your supply/value chain (such as factories, stores, warehouses, and distribution centers).

If you’re thinking multi-cloud and IoT are not relevant to you today, ask yourself if they might be soon. Or ever. Chances are they’ll be relevant sooner rather than later. So you need an event streaming layer that can cover those complex environments.

You will need that event streaming layer to be performant, reliable, and resilient. Maybe you don’t need to move events at the speed of light. Maybe you don’t need five-nines availability. But if you want to unlock the benefits of event-driven architecture, you need events to be transmitted (produced and consumed) more or less as they happen, and in the correct temporal order. And you can’t afford downtime, or to lose data, or to have the transmission of an event delayed due to geography.

For these reasons, features like low latency, high availability, disaster recovery and WAN optimization are important. In many cases they are critical.

You also need events to be streamed as efficiently as possible. You will want to be specific about what data you stream to and from the cloud to minimize egress costs, and you do not want applications or people to have to sift through reams of data to get to what they want to consume. For these reasons, a rich topic hierarchy and fine-grained filtering are important.
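To make the idea of a topic hierarchy with fine-grained filtering concrete, here is a minimal sketch in Python. It is not any broker's actual implementation: the `topic_matches` function, the `acme/...` topic names, and the wildcard rules (`*` matches exactly one level, `>` matches one or more trailing levels) are assumptions chosen for illustration, loosely in the style of hierarchical-topic brokers.

```python
def topic_matches(subscription: str, topic: str) -> bool:
    """Return True if a hierarchical topic matches a wildcard subscription.

    Assumed rules for this sketch:
      "*" matches exactly one level
      ">" matches one or more trailing levels and must be the last level
    """
    sub_levels = subscription.split("/")
    topic_levels = topic.split("/")
    for i, sub_level in enumerate(sub_levels):
        if sub_level == ">":
            # Valid only as the final level, and at least one topic level must remain.
            return i == len(sub_levels) - 1 and len(topic_levels) > i
        if i >= len(topic_levels):
            return False  # subscription is deeper than the topic
        if sub_level != "*" and sub_level != topic_levels[i]:
            return False  # literal level does not match
    # No trailing ">" was seen, so depth must match exactly.
    return len(sub_levels) == len(topic_levels)


# Hypothetical topics shaped as company/domain/region/event.
published = [
    "acme/orders/eu/created",
    "acme/orders/us/created",
    "acme/orders/eu/cancelled",
    "acme/shipping/eu/dispatched",
]

# A consumer that only wants "created" order events, from any region.
created_anywhere = [t for t in published if topic_matches("acme/orders/*/created", t)]

# A consumer that wants every order event, whatever the region or type.
all_orders = [t for t in published if topic_matches("acme/orders/>", t)]
```

The point of the sketch is that each consumer describes what it wants in the subscription itself, so filtering can happen before data crosses a network or cloud boundary rather than in the consuming application.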

Here is a great 5-minute video on the importance of topic hierarchy:

Event Topics: Kafka vs. Solace for Implementation

Lastly, you will want your event streaming layer to support multiple open standard protocols and APIs, to connect with the variety of IT and OT you have or expect to have — including legacy applications, new microservices, serverless apps and third-party SaaS — all while avoiding technology and/or vendor lock-in.

Why have I not included capabilities for event stream processing or streaming analytics in my list of fundamental criteria for enterprise-grade event streaming? In short, that’s because I view those as use cases or things you can do with event-driven architecture rather than things that are fundamental to building and supporting event-driven architecture.

We believe event-driven architecture is a critical and foundational layer needed to support a wide variety of use cases, such as event-driven integration, microservices, IoT and streaming analytics, so what you have in place should enable and support those things, but they are not fundamental to it.

So that’s my rationale for the y-axis and the criteria that inform the relative positioning of the products and technologies along it (more on that below). Now for the x-axis.

Event Management

By event management, I mean the tools that help developers, architects, and other stakeholders work efficiently and collaboratively in designing, deploying and managing events and event-driven applications. Think of it like a toolset similar to those associated with API management, but for the real-time, event-driven world.

API Management, Meet Event Management: A breakdown of the value proposition for API management platforms and what API management means for events.

These capabilities become increasingly important as you scale your use of event-driven architecture. That’s when most organizations we work with start thinking about how they can expose and share event streams and event schemas so they can be used and reused across the enterprise. After that, they start sweating things like event governance and yearning for a way to visualize their topologies.

The truth is that most enterprises will make their way to some sort of event management toolset when architects and developers can’t answer basic questions like:

- What events already exist in a system? How can I subscribe to various events?

- Who created this or that event? And who can tell me more about it?

- What are my organization’s best practices and conventions for event stream definitions?

- What topic structure should I use to describe the events my application will produce?

- How is this event being used? By whom? By what applications? How often?

- Is the change I want to make to my application or event backward compatible?

- How can I control access to this or that event stream?

Enterprises can’t hope to adopt event-driven architecture at scale or maximize the business value of their events if stakeholders can’t answer those basic questions. And while Visio, Excel spreadsheets and Jira are great tools, they were not purpose-built to solve this problem.
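As a rough illustration of the kind of record that would let stakeholders answer the questions above, here is a small Python sketch. Everything in it is hypothetical: the `EventEntry` structure, the field names, the example values, and the compatibility rule are assumptions for illustration only, not how PubSub+ Event Portal or any other product models events.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class EventEntry:
    """One catalogued event: what it is, who owns it, and who consumes it."""
    name: str
    topic: str
    owner: str
    schema: Dict[str, str]  # field name -> type name
    consumers: List[str] = field(default_factory=list)


def is_backward_compatible(old: Dict[str, str], new: Dict[str, str]) -> bool:
    # Simplified rule (an assumption for this sketch): every existing field
    # must keep its name and type; adding new fields is allowed.
    return all(new.get(name) == type_name for name, type_name in old.items())


# A hypothetical one-entry catalog.
catalog = {
    "OrderCreated": EventEntry(
        name="OrderCreated",
        topic="acme/orders/eu/created",
        owner="orders-team",
        schema={"order_id": "string", "total": "number"},
        consumers=["billing-service", "analytics-pipeline"],
    )
}

entry = catalog["OrderCreated"]

# Proposed change 1: add a field. Existing consumers are unaffected.
added_field = dict(entry.schema, currency="string")

# Proposed change 2: drop a field that existing consumers may rely on.
removed_field = {"order_id": "string"}
```

With a record like this, "who created this event?" is `entry.owner`, "who uses it?" is `entry.consumers`, and a proposed schema change can be checked with `is_backward_compatible(entry.schema, added_field)` before it ships. Real schema formats define compatibility more carefully than this one-line rule does.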

By the way, analysts from Gartner, Forrester and other firms have noted the need for (and lack of) tooling in this area. Feel free to reach out and ask about it.

“For event-based interfaces, apply the principles of API management, but recognize that the tooling in this market is still emerging.”

So that’s my rationale for the x-axis and the criteria that inform the relative positioning of the products and technologies along it.

Now let me explain the relative ranking/positioning of the technologies.

Evaluating event management technologies

I have Solace PubSub+ Event Portal leading on the x-axis, so let me start with event management because I think that’s the more straightforward case to make.

Solace PubSub+ Event Portal is the first and only (as of publishing) product of its kind. It’s a purpose-built event management toolset that can be used to design, discover, catalog, visualize, secure and manage all the events and event-driven apps in an event-driven architecture ecosystem.

More precisely, developers and architects can use PubSub+ Event Portal to:

- Discover and audit runtime event streams.

- Manage and audit changes to events, schemas and apps.

- Visualize existing event flows and application interactions.

- Define and model event-driven systems.

- Develop consistent event-driven applications.

- Define and manage event API products.

- Govern event-driven systems.

PubSub+ Event Portal covers many of the requirements enterprises will need to adopt, manage, and leverage event-driven architecture at scale. Our vision is to have it be a universal event management toolset you can use with any of the popular open-source and commercial event brokers you may be using. At the time of writing, you can use it to scan event streams from PubSub+ event brokers, Apache Kafka brokers, and other Kafka distributions from Confluent and Amazon Managed Streaming for Apache Kafka (MSK).

Understanding the Concept of an Event Portal – An API Portal for Events: An event portal, like an API portal, provides a single place for an enterprise to design, create, discover, share, secure, manage, and visualize events.

There are alternatives for some of this stuff.

Confluent and Red Hat both have schema registries that provide a REST interface for storing and retrieving event schemas. But I believe both registries are meant more for enforcing the schema at runtime (to prevent runtime errors for events and APIs) and for data validation than they are about making events and schemas discoverable so they can be used, managed and re-used over time. My colleague and Solace’s Field CTO, Jonathan Schabowsky, wrote an interesting blog post on this topic.

Here is what Shawn McAllister, our CTO and CPO, said when I asked him about this:

“Schema registry is used by publisher apps to store the schema associated with an event stream, and it’s used by consumers to know how to decode events, so it’s used at runtime. Our Event Portal reads schemas from the schema registry as part of ‘discovery’ and then loads them into Event Portal so users can understand, manipulate and evolve them. So Event Portal is used at design time. Our Event Portal and these schema registries are truly different tools and completely complementary.”

IBM’s Cloud Pak for Integration version 2021.1.1 gets a bit closer to our vision for an event portal, as it includes functionality like the ability to “socialize your Kafka event sources” and “create an AsyncAPI document to describe your application that produces events to Kafka topics, and publish it to a developer portal.” But it all seems to be focused on Kafka, and these features are insular to IBM ecosystems/infrastructure.

Lenses.io is perhaps the closest to the value proposition of PubSub+ Event Portal in that it provides tooling to view data lineage flows and microservices so you can “drill down into applications or topics to view partitioning, replication health, or consumer lag.” It also includes a data catalog and a “self-serve administration and governance portal” where you can “view and evolve schemas.” But this toolset is focused on Kafka and Kafka distributions (such as MSK); it’s similar to Confluent Control Center but with more capabilities. It feels more like a data operations tool for accessing and leveraging data streams for things like event stream processing.

Given the purpose, capabilities and our vision for PubSub+ Event Portal, and in light of the alternatives currently available, I have PubSub+ Event Portal leading along the x-axis. I don’t have it on the far right of the x-axis because the full vision of the product has yet to be realized.

Now for the y-axis.

Evaluating enterprise-grade event streaming platforms and technologies

I have Solace PubSub+ Event Broker leading on the y-axis, so let me start by explaining why I have all the free and open-source technologies (yes, even Kafka) below their commercial alternatives on the enterprise-grade event streaming spectrum.

Why not go the open-source route for enterprise EDA?

Mainly because few (if any) of the features I listed above as important for enterprise-grade event streaming come out-of-the-box (OOTB).

You have to manually build them in. In some cases, you’re aided by great documentation and a vibrant developer community. In other cases, not so much. And the vibrancy of that community and the quality of that documentation can change over time. For sure the people driving and overseeing them will.

All that manual DIY work is time consuming and often brittle. And if the DIY team leaves, where does that leave you?

It has also been our experience that what starts out as a very efficient and manageable open-source initiative can quickly turn into the exact opposite as the project grows in scale and complexity, at which point you end up paying a commercial service provider to help. For enterprise-scale event-driven architecture, it’s often better to think ahead and buy a product that has the features you need now and may need in the future.

Another point here relates to the perception that open-source gives you more freedom, flexibility, and agility. In many ways, that’s true. But in one important way, it’s not. Some open-source technologies are based on their own unique protocols and APIs, so it can take a lot of work to get them to work well with other technologies. It also becomes increasingly difficult to switch out that open-source technology as your deployment grows and becomes more complex and more entrenched.

I appreciate and see the value in open-source technologies for many enterprise projects. With respect to specific event streaming use cases like streaming data analytics or event stream processing, if you have the development talent and resources, maybe it makes sense to go the open-source route. But for enterprises looking to adopt and leverage event-driven architecture at scale, or use event-driven architecture as the foundation for many modern use cases such as event-driven integration, microservices, IoT and analytics, I don’t think the DIY/open-source route makes as much sense.

So those are the reasons I have open-source below the commercial products for enterprise-grade event streaming.

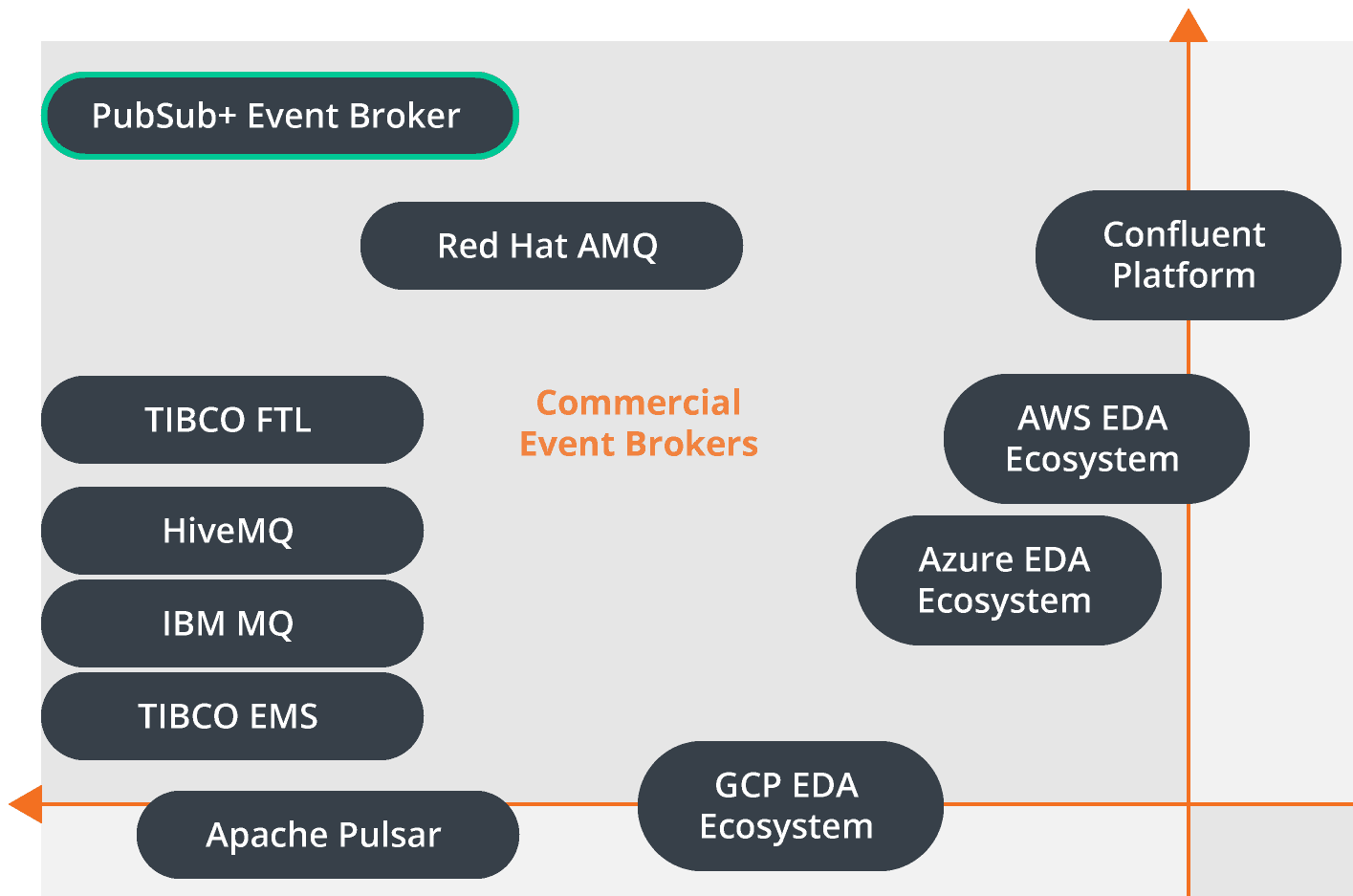

Comparing commercial event brokers

I will now explain the relative positioning of the commercial event streaming platforms and technologies in the top left quadrant.

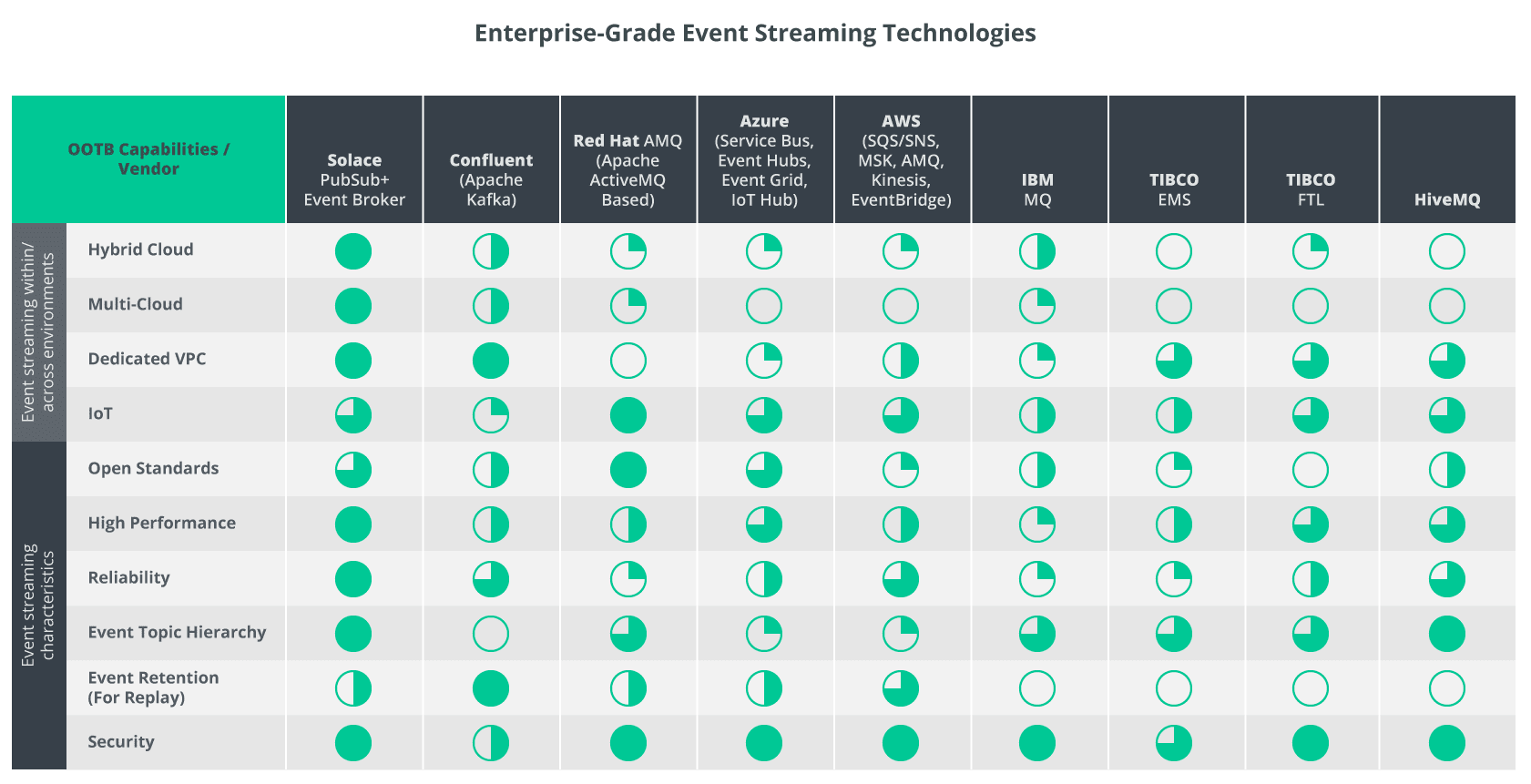

You will recall the capabilities that I’m associating with enterprise-grade event streaming — those that enable an enterprise to stream events across hybrid, multi-cloud, and IoT environments, and do so quickly, efficiently, reliably and securely.

So I did some thinking, spoke to our sales engineers, and came up with criteria to evaluate technologies along these lines. Then I reviewed our internal competitive intelligence and did some desk research (product and solution web content and documentation) to fill knowledge gaps. Then I spoke to our CTO team and three industry analysts from different firms. Then I circled back with specific technology leaders on our team to revise and hone our thinking.

This table is the result of that process:

Is it 100% correct? Probably not. Is it 80% correct? I’m confident it is in that ballpark.

The point is really to give you a sense of the relative capabilities of event streaming technologies that can support enterprise event-driven architecture. I’ve tried to do that as honestly and objectively as possible. That said, if you’re deep into evaluating these technologies, you’ll have questions.

You may even have a raised eyebrow if something felt off to you based on your own research or experience. I understand that the best way to evaluate products is based on the functional requirements of the use case(s) you intend to solve. In this evaluation, I looked at a wide range of use cases that I think are/will be relevant to the modern enterprise.

All that said, I’ve done my best to forecast your questions and I’ve answered/provided my rationale below.

Answers to questions you may have:

- Our experience in the field, including years of experience working with many of these technologies and with customers who have shared their experiences.

- A review of online product content, including product pages and documentation.

- Conversations with industry analysts. While I can’t cite an analyst endorsing this table, I can say that it was reviewed with many analysts and adjusted with their feedback.

- We did not include event stream processing and streaming data analytics because they do not contribute to enterprise-grade event streaming, which I argue is a core capability you need to do EDA well. Event stream processing doesn’t help you do EDA well; it is a product of, or one of the things enabled by, EDA (as are integration, microservices, and IoT). I will say very clearly here: if you are looking for a tool to do event stream processing or streaming data analytics, you need to integrate/augment your EDA infrastructure with an event stream processor like Apache Spark/Databricks, Flink, Beam/Dataflow, or ksqlDB.

- Am I skewing the criteria to position Solace in the best light? I don’t think so. That the capabilities I list as critical for enterprise event-driven architecture align so well with the capabilities you get with PubSub+ Platform is because our products and platform were purpose-built to support enterprise EDA. I don’t believe any of our direct competitors have been as intentional in wanting to solve the exact same set of problems, so it makes sense that we would edge them out on this use case.

- I don’t think Solace is the only game in town for hybrid and multi-cloud event streaming, but I do think we are the best in town, and by a considerable margin. Core to that thinking is an insider’s view into our event mesh capabilities. Simply put, you can use our cloud-based Mesh Manager to create a hybrid and multi-cloud event mesh that will stream a variety of events from a variety of endpoints across these environments, and you can do so quickly, dynamically, reliably and securely, with built-in WAN optimization and more. And the time it will take you to do that is minutes, not hours or days.

- In PubSub+ Mission Control, you can deploy our cloud event brokers into a variety of different public and virtual private cloud environments, and into Kubernetes environments on-premises, and then basically click to connect them to create a hybrid and multi-cloud event mesh — without add-on components and with full dynamic routing using our DMR technology.

- You can do some/most of that with other technologies listed in this table, but not as quickly or as easily. You’ll likely need to manually create custom bridges and do a lot of the configuration and tuning of the brokers yourself to meet specific needs. And in the end, for a vast majority of scenarios, we would argue the end product wouldn’t be as efficient, reliable or as easy to manage as a PubSub+ deployment.

- Because while you can deploy Confluent Cloud into multiple different public clouds, and IaaS services integration is solid, streaming events between different clouds requires manual/static configuration (whereas with Solace it is self-learning/dynamic). Remote administration and hybrid capabilities require a Confluent CLI and Replicator. The Confluent CLI does not work with customer-managed cloud-based Kafka installations.

- Because there are a lot of moving pieces and a lot of processes to secure in a typical Kafka deployment; it’s not just the Kafka broker. If you need to do REST, if you need to do MQTT, if you’re doing enterprise-grade authorization and authentication with LDAP, and if you are combining these pieces with ZooKeeper, where you store your ACLs, you have so many moving pieces to secure. Additionally, how secure is the code of a Kafka broker? And even if Confluent Cloud abstracts a lot of that complexity away, it still suffers from the same fundamental problems and vulnerabilities.

- No fine-grained ACLs for publishers or subscribers (coarse-grained filtering means coarse-grained ACLs).

- Because you have a lot of work to do to create a hybrid and/or multi-cloud event streaming layer that is performant and reliable, and there is no ability to make the event/message routing intelligent/dynamic (as you can with a Solace PubSub+ powered event mesh – see answer to question 3).

- To create a hybrid/multi-cloud event mesh with Red Hat you’re going to need to work with Red Hat AMQ Broker, AMQ Interconnect, and AMQ Clients, and to create an event mesh that spans geographies over WANs you’ll likely have to work with much more tech (such as Ansible). It’s a lot of work. And maybe that’s an underlying reason why this DZone blog by Red Hat on the topic of event mesh fails to use any of the technologies above, and instead relies on Camel and Kafka.

- AMQ Interconnect is like a broker. It doesn’t do persistence, but it does a form of dynamic event routing where clients can connect to Interconnect and it will route their request to the right AMQ broker for persistence. Importantly, AMQ Interconnect only supports AMQP 1.0, so we are not talking about an event mesh that you can easily plug all kinds of applications and things into.

- Long story short, you’ll need to invest time and resources to really get a good understanding of the broker and how to tune it (way points, base stations, etc.) with other tech to deploy an event mesh that works well (with persistence, reliability, management and monitoring). And then you’ll need to manage all of that.

- Similar to above, because you need to invest time and resources to build it in.

- Reliability is also challenging because of its failover model.

- Because they are all designed to be able to work together within their respective cloud environments.

- Azure’s Service Bus Relay is used to connect Azure-based applications to other clouds or on-premises deployments, but to do so it creates application-to-application bridges that require bespoke development work on both sides. What’s more, Relay does not support pub/sub interactions, so every new publisher or subscriber requires more development work.

- Because you can’t run Azure Service Bus inside Azure’s virtual (private) networks (VNETs). Instead, traffic from VNETs to Premium Service Bus namespaces and Standard or Dedicated Azure Event Hubs namespaces must exit the VNET using the Azure backbone network. What’s more, Microsoft itself warns that implementing Virtual Networks integration can prevent other Azure services from interacting with Service Bus. Trusted Microsoft services are not supported when Virtual Networks are implemented. Here are some common Azure scenarios that don’t work with Virtual Networks (this list is NOT exhaustive):

- Azure Monitor

- Azure Stream Analytics

- Integration with Azure Event Grid

- Azure IoT Hub Routes

- Azure IoT Explorer

- Azure Data Explorer

- Yes, various Azure services support REST, AMQP, MQTT and WebSocket, but they have limited API support and you’ll likely have some work to do if you want to stream events between applications or things that leverage different protocols and APIs.

- Because a lot of the things you need for reliability (like HA and DR) are not built in. You need to create and execute a strategy for high availability and disaster recovery for each of the individual components you are using.

- HiveMQ has the best implementation of MQTT (it supports the entire MQTT specification), but it doesn’t support the other open standard protocols and APIs (JMS, AMQP, REST) that would enable an enterprise to easily plug into and distribute IoT event streams to cloud-native services, or to applications on-premises or in plants (such as SCADA, PLCs, MES, and ERP). This makes it less useful in IIoT use cases and in new and emerging use cases at the intersection of IoT, AI, ML and customer experience.

Still have questions? Consider contacting us directly. We would love to have a more in-depth conversation. I’d also encourage you to ask an analyst for their perspective.

Conclusion

To bring this home, we are seeing event-driven architecture become a mainstream architectural pattern. It is being leveraged by enterprises across all major industries to underpin modern technology patterns and use cases like event-driven integration, microservices, IoT, streaming analytics and more. It is being applied to improve a variety of business processes like:

- Preventative maintenance.

- Real-time product lifecycle management.

- Global track and trace/real-time supply chain visibility.

- Omnichannel retail experiences.

- Smart cities, smart utilities, smart transportation.

- Post-trade processing, streaming market data, FX trading, order management.

And many more.

We believe the enterprises that will lead their markets in the years to come will have a strong digital backbone for enabling event-driven architecture across their distributed enterprise so they can harness the full power and potential of events to maximize operational efficiencies, increase agility and accelerate innovation.

We believe a strong digital backbone for event-driven architecture includes two core elements:

- Enterprise-grade event streaming capabilities.

- Event management capabilities.

And we’re confident that Solace PubSub+ Event Broker and PubSub+ Event Portal represent best-in-class offerings for these two core capabilities. But here’s an important point that I’ve saved for the end.

Being able to leverage these core products/capabilities together, in one platform, can deliver additional (and incredibly significant) benefits. This was really emphasized by Jesse Menning, an architect in our CTO’s office, who upon review of this paper (pre-conclusion section) said:

“At this point, we need to emphasize that the Solace PubSub+ Platform is better than the sum of its (powerful) parts. To me, the full value of EDA isn’t realized with component-by-component architecture, even if you have great components. With Solace, discovering events on the event broker feeds crucial information into Event Portal, Event Portal pushes runtime configuration to the broker, while Insights gives you info across the enterprise. That cohesion of vision creates a virtuous cycle and sets Solace apart.”

Of course, I totally agree. There are so many synergies, so many time efficiencies, insights and quality-enhancing processes that exist at the intersection of these products. Especially when they are designed to work together. And that’s the case with PubSub+ Event Broker and PubSub+ Event Portal, the two core components of PubSub+ Platform.

These are all the reasons why I can truly and confidently say that Solace helps enterprises adopt, manage, and leverage event-driven architecture, and we do it better than any company in the world.

Chris Wolski
