For Western companies doing business in mainland China, digital connectivity can be a huge challenge when they have to contend with the “Great Firewall of China” restricting or slowing down cross-border Internet connectivity. Could a multi-cloud solution help? I decided to investigate…

What is the Digital Silk Road?

The “Silk Road” was an ancient network of trade routes that connected East and West, dating from around 114 BC. More recently, however, the term has been revived to represent the Chinese government’s massive $800 billion foreign investment program to strengthen international trade and connectivity, known as the Belt and Road Initiative.

Within that overall initiative, the Digital Silk Road is a wide-ranging $200 billion project focusing on digital aspects such as new undersea Internet cables, advancements in artificial intelligence and quantum computing, and even alternative satellite navigation systems.

The connectivity challenge

In spite of all this investment to foster and improve international trade, the “Great Firewall of China” that affects all cross-border Internet connectivity remains firmly in place. This firewall enables the censorship and blocking of certain traffic or content, and has the net effect of severely slowing down data flows in and out of mainland China. (As a tourist in Beijing in 2017, I had to put up with my wife’s constant frustration at how slow her social media access was!)

Now picture a hypothetical Western company wishing to do business in China: say, a European manufacturer of smart, Internet-connected cars that are now driving on the busy roads of Beijing. To support the digital services and applications in those vehicles, it needs healthy bidirectional connectivity to applications and services deployed in its existing on-prem datacenters. One hypothetical service re-routes your journey to avoid a known accident further ahead. For this to be effective, information such as the location of the accident and the real-time location of your vehicle must be processed without undue delay.

Some of those digital services may be delivered from a regional ‘hub’ datacenter close by in Asia, and others delivered from a datacenter in Europe closer to company headquarters. Having a digital presence within China – as a first stop before reaching the on-prem datacenters – would be required to effectively deliver responsive services to those connected cars.

Enter Alibaba, Tencent and Huawei Clouds

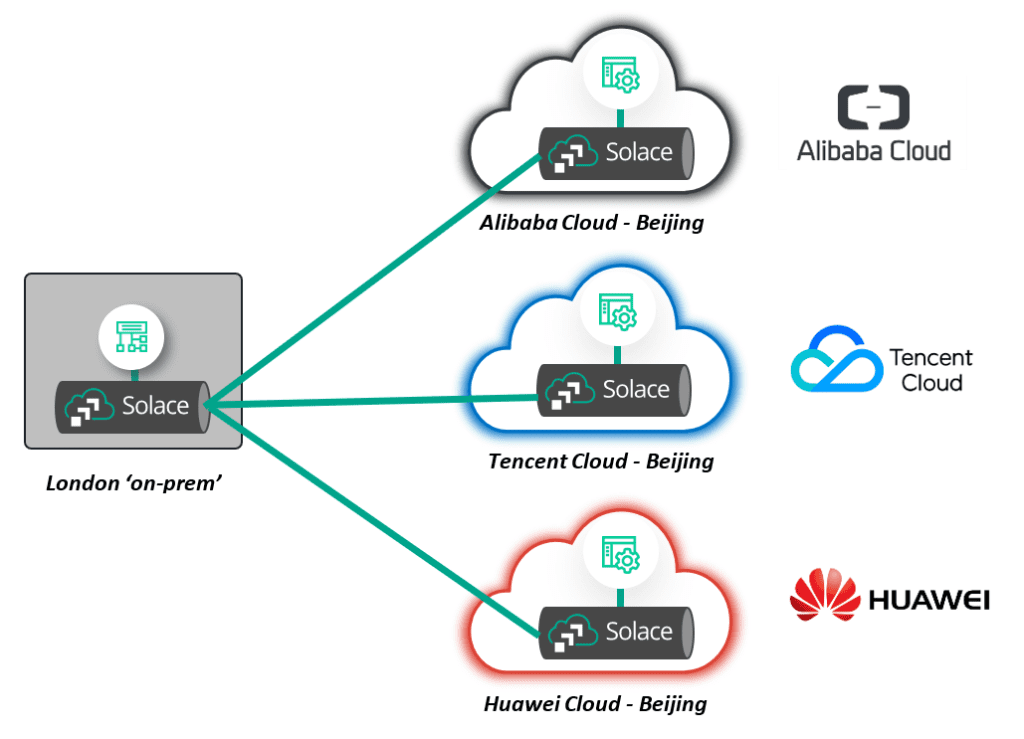

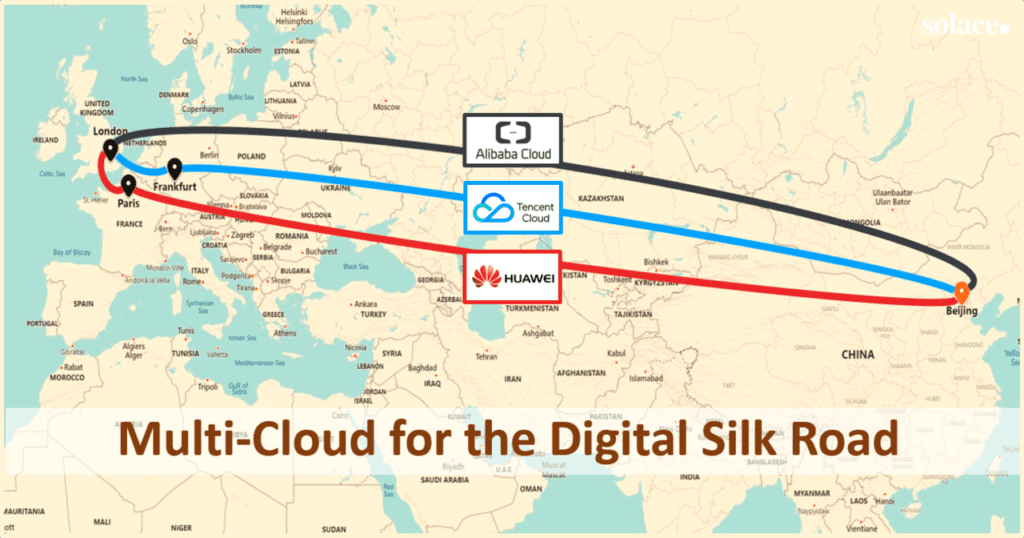

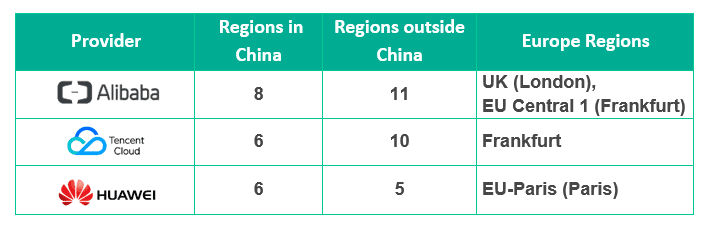

Just as Amazon Web Services (AWS), Microsoft Azure and Google Cloud are the dominant public cloud players in the West, Alibaba, Tencent and Huawei play the same role in China. With each of them maintaining datacenters (or “cloud regions”) in many international cities, facilitating digital access into mainland China for foreign companies is a ‘low-hanging fruit’ use case. Alibaba and Tencent are the largest players, forming a duopoly locked in a constant battle for overall dominance:

“Forget Google versus Facebook. Forget Uber versus Lyft. Forget Amazon versus…well, everybody. The technology world’s most bruising battle for supremacy is taking place in China.” – NY Times

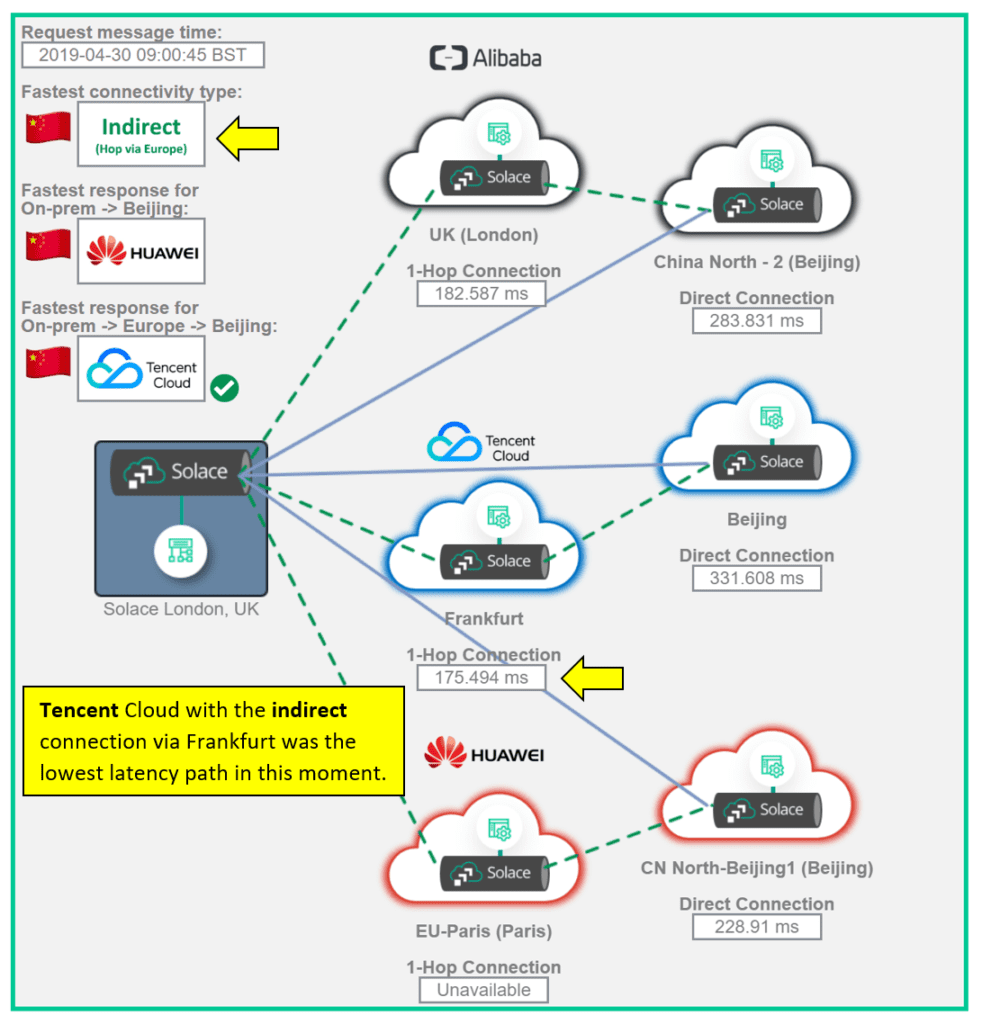

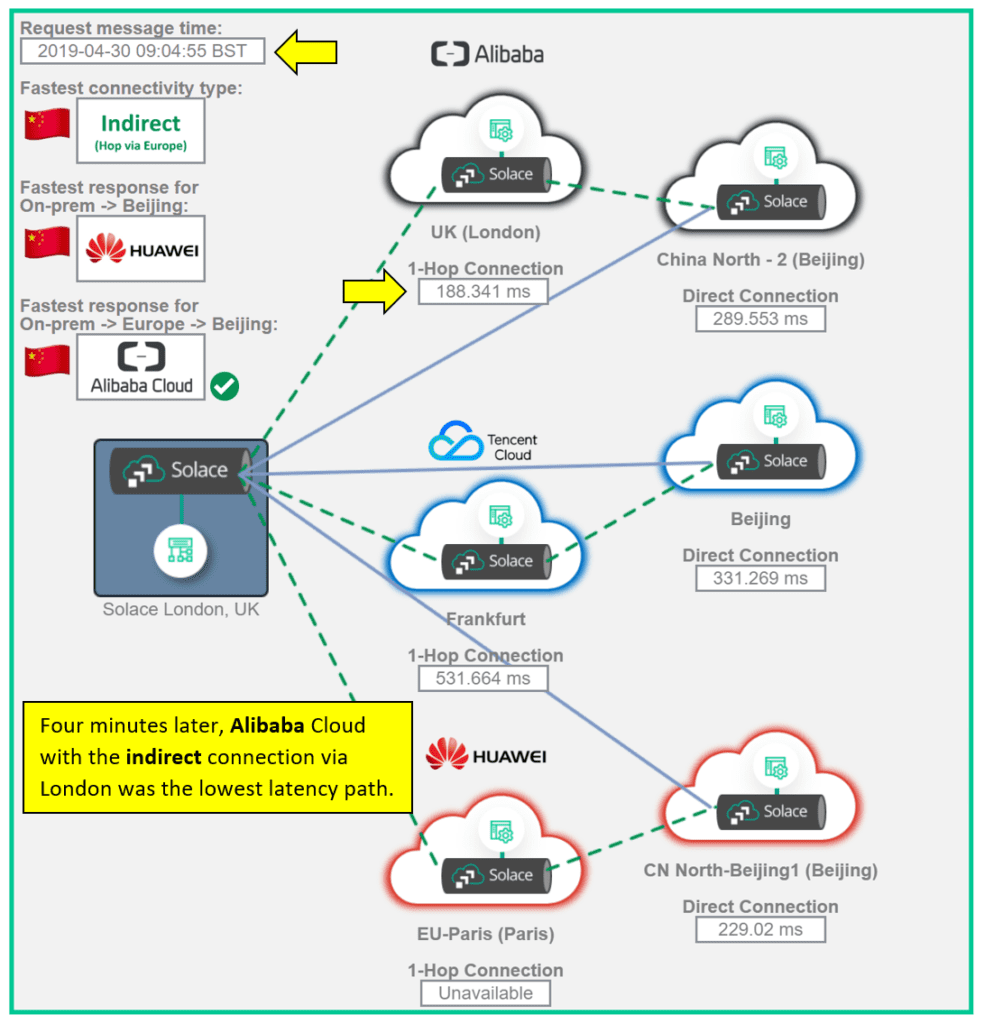

Since my focus here is connectivity from London to Beijing, Alibaba Cloud inches slightly ahead of Tencent. Alibaba Cloud has 11 regions outside of China versus Tencent’s 10. More importantly, Alibaba’s nearest European cloud region is in London itself, versus Frankfurt for Tencent and Paris for Huawei.

(When Alibaba launched their London region in late 2018, I performed an analysis on how they compared with AWS, Azure and Google. You can find that here: Alibaba Cloud – A Data-Driven Analysis.)

The Investigation Parameters

In looking at this issue, I was interested in the following questions:

1. What exactly does “slow” connectivity mean?

This is quantified by mimicking a simple application interaction using a popular communication pattern (request-response), stretched between a pair of services: one deployed in our London ‘on-prem’ datacenter and one in the Beijing ‘cloud’ datacenter. The interaction repeats at a regular 5-second interval to build up a long-term picture of how long each full ‘round trip’ takes to complete, i.e. the latency of the connection at the application level.
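To make that measurement concrete, here is a minimal Python sketch of one timed request-response exchange repeated on a fixed cadence. It is a simulation under stated assumptions, not the demo’s actual code: the remote service is replaced by a hypothetical `simulated_responder` coroutine that echoes the request id after a delay, and `measure_round_trip` and `measure_periodically` are illustrative names. A reply that misses the window is recorded as `None`.

```python
import asyncio
import time
from typing import Awaitable, Callable, List, Optional

Responder = Callable[[int], Awaitable[int]]

# Hypothetical stand-in for the remote 'responding' service: it echoes the
# request id back after a delay representing network + processing time.
async def simulated_responder(request_id: int, delay_s: float = 0.16) -> int:
    await asyncio.sleep(delay_s)
    return request_id

async def measure_round_trip(
    responder: Responder, request_id: int, timeout_s: float = 5.0
) -> Optional[float]:
    """Return the application-level round-trip latency in ms, or None on timeout."""
    sent_at = time.perf_counter()
    try:
        reply_id = await asyncio.wait_for(responder(request_id), timeout=timeout_s)
    except asyncio.TimeoutError:
        return None  # no reply inside the measurement window
    if reply_id != request_id:
        return None  # not the reply to this request
    return (time.perf_counter() - sent_at) * 1000.0

async def measure_periodically(
    responder: Responder, count: int, interval_s: float = 5.0
) -> List[Optional[float]]:
    """Send one request every interval_s seconds and collect the latencies."""
    latencies: List[Optional[float]] = []
    start = time.perf_counter()
    for request_id in range(count):
        latencies.append(
            await measure_round_trip(responder, request_id, timeout_s=interval_s)
        )
        # Sleep until the next tick so requests keep a regular cadence.
        next_tick = start + (request_id + 1) * interval_s
        await asyncio.sleep(max(0.0, next_tick - time.perf_counter()))
    return latencies
```

For example, `asyncio.run(measure_periodically(simulated_responder, count=3))` sends three requests 5 seconds apart. Using the interval as the timeout keeps one request in flight at a time. That is a simplification: a real messaging client would keep listening for late replies, which matters later in this article.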

Furthermore, with the help of a multi-cloud “event mesh” architecture for this connectivity, the ‘responding’ service in Beijing can be deployed in triplicate across the three cloud providers, one in each provider’s Beijing cloud region. This effectively ‘races’ the interaction across the three in parallel, so we can also assess which provider offered the fastest connectivity at any given moment and determine the arrival ranking in that race.

2. Does indirect connectivity via the cloud provider add value?

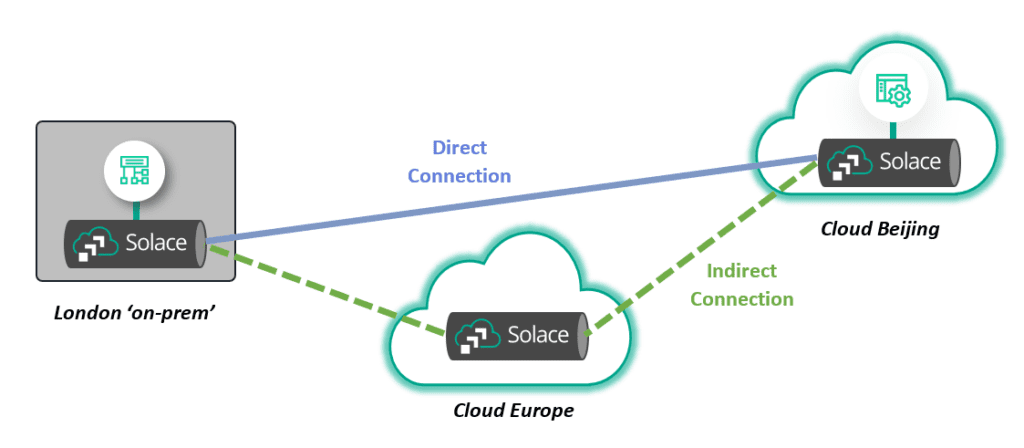

There are two different ways we can connect our London application with the applications in Beijing:

- The first is a direct connection from our London on-prem network to the Beijing cloud provider’s network.

- The second is an indirect path by adding an extra interim hop to the cloud provider’s “Europe” region first, then connecting onwards to their Beijing region.

To use an airplane analogy, the options here are either a direct flight to Beijing or one with a change in Europe first.

Counter-intuitively, the extra hop may actually reduce the latency of the overall communication and provide a more predictable service. Instead of the traffic being treated as cross-border Internet traffic straight away, the hop may route it via a different, more optimised path across the border.

This adds a new dimension to the event mesh architecture: as well as competing service deployments across three cloud providers, we can also compete across the two connectivity paths for each provider.
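Three providers times two routes gives six traffic paths. The following Python sketch spells them out as data. The hop labels are descriptive strings built from the regions named in this article, not real provider region identifiers, and `traffic_paths` is a hypothetical helper.

```python
from itertools import product
from typing import Iterator, List, Tuple

PROVIDERS = ("Alibaba", "Tencent", "Huawei")
ROUTES = ("direct", "indirect")
# Nearest European region per provider, as named in this article.
EUROPE_HOP = {"Alibaba": "London", "Tencent": "Frankfurt", "Huawei": "Paris"}

def traffic_paths() -> Iterator[Tuple[str, str, List[str]]]:
    """Yield (provider, route, hops) for every provider/route combination."""
    for provider, route in product(PROVIDERS, ROUTES):
        hops = ["London on-prem"]
        if route == "indirect":
            # The 'change of flight' in the provider's European region.
            hops.append(f"{provider} {EUROPE_HOP[provider]}")
        hops.append(f"{provider} Beijing")
        yield provider, route, hops
```

Each request published from London is replicated onto all six paths, so every race has up to six competitors.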

3. Do increases in latency affect all 6 traffic paths to Beijing at the same time?

This is the crux of the overall investigation. If dealing with the ‘Great Firewall’ is an unavoidable aspect of doing digital business with China, can a multi-cloud approach help to protect (or “hedge”) against it?

To put it another way, if one connectivity path and deployed service suffers a sudden slowdown, would an alternate path and service be performing better at that same moment? If the slowdowns do not correlate across paths, particularly latency-sensitive enterprises can choose to operate a “multi-path” approach to their connectivity into mainland China.
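The pay-off from hedging can be sketched in a few lines of Python. This assumes the latencies of the same request over each path are recorded side by side, and that the application acts on whichever reply arrives first. `hedged_latency` and `worst_case` are hypothetical helpers, and the sample numbers in the usage note are invented for illustration, not measured.

```python
import math
from typing import Optional, Sequence

# One sample = the latencies (ms) of the same request over each path;
# None means that path's reply never arrived.
Sample = Sequence[Optional[float]]

def as_ms(value: Optional[float]) -> float:
    # Treat a missing reply as infinitely slow.
    return math.inf if value is None else value

def hedged_latency(sample: Sample) -> float:
    """Latency the application sees if it acts on the first reply from any path."""
    return min(as_ms(v) for v in sample)

def worst_case(samples: Sequence[Sample], path: Optional[int] = None) -> float:
    """Worst latency over time for one path (by index), or for all paths hedged if None."""
    if path is None:
        return max(hedged_latency(s) for s in samples)
    return max(as_ms(s[path]) for s in samples)
```

With the made-up samples `[[160, 170, 400], [14000, 180, 390], [165, 9000, 410]]`, the worst single path reaches 14,000 ms, while the hedged latency never exceeds 180 ms. If every path spikes at the same moment, `hedged_latency` is still the slowest of the spikes, which is why correlation is the crux of the question.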

The Live Results

The results of the continuous application ‘request-response’ interaction described above, along with the event-mesh architecture with multiple clouds and multiple paths, can be viewed in a live demo here: http://london.solace.com/multi-cloud/digital-silk-road.html

Example results with the actual round-trip latency in milliseconds are available below:

The Findings

1. Even snails move faster than this!

This investigation and live demo build on earlier work measuring AWS, Azure and Google with global communications spanning the UK, US and Singapore. When I deployed it against China, the latency was at times so high that it broke the charting logic of my demo! Embarrassingly, the high latency exposed a logic bug. It was easily fixed, but it is a great example of how previously working applications can fail in strange ways when faced with such challenging connectivity conditions.

The logic of the application was as follows:

- Send a single request message that the PubSub+ Event Brokers replicate across the multiple paths and cloud providers

- Wait up to 5 seconds for the multiple responses to arrive

- Calculate the latency of each response and determine an overall ranking of the order in which they arrived

- Repeat with a new request message
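The four steps above can be simulated with Python’s asyncio. This is a sketch under stated assumptions, not the demo’s code. Each path is modeled as a hypothetical async responder rather than a PubSub+ subscription, and `simulated`, `run_round` and `run` are illustrative names. Unlike real replies travelling through an event broker, the outstanding replies here can simply be cancelled when the window closes, so this sketch does not reproduce the late-arrival problem described next.

```python
import asyncio
import time
from typing import Awaitable, Callable, Dict, List, Tuple

Responder = Callable[[int], Awaitable[int]]

def simulated(delay_s: float) -> Responder:
    """Hypothetical responder that echoes the request id after delay_s seconds."""
    async def respond(request_id: int) -> int:
        await asyncio.sleep(delay_s)  # stand-in for network + processing time
        return request_id
    return respond

async def run_round(
    responders: Dict[str, Responder], request_id: int, window_s: float = 5.0
) -> List[Tuple[int, str, float]]:
    """Send one request over every path, wait up to window_s, rank the replies."""
    sent_at = time.perf_counter()
    arrivals: List[Tuple[str, float]] = []  # filled in arrival order

    async def timed(path: str, responder: Responder) -> None:
        await responder(request_id)
        arrivals.append((path, (time.perf_counter() - sent_at) * 1000.0))

    tasks = [asyncio.create_task(timed(p, r)) for p, r in responders.items()]
    _, pending = await asyncio.wait(tasks, timeout=window_s)
    # Replies still outstanding when the window closes are abandoned.
    for task in pending:
        task.cancel()
    await asyncio.gather(*pending, return_exceptions=True)
    # (rank, path, latency_ms), with rank 1 for the first arrival.
    return [(rank, path, ms) for rank, (path, ms) in enumerate(arrivals, start=1)]

async def run(
    responders: Dict[str, Responder], rounds: int, window_s: float = 5.0
) -> List[List[Tuple[int, str, float]]]:
    """Repeat the round with a new request id each time."""
    return [await run_round(responders, rid, window_s) for rid in range(rounds)]
```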

What I failed to anticipate was response messages arriving more than 5 seconds late. In all my experience so far with the other global regions, latency rarely broke the 1-second mark, let alone 5.

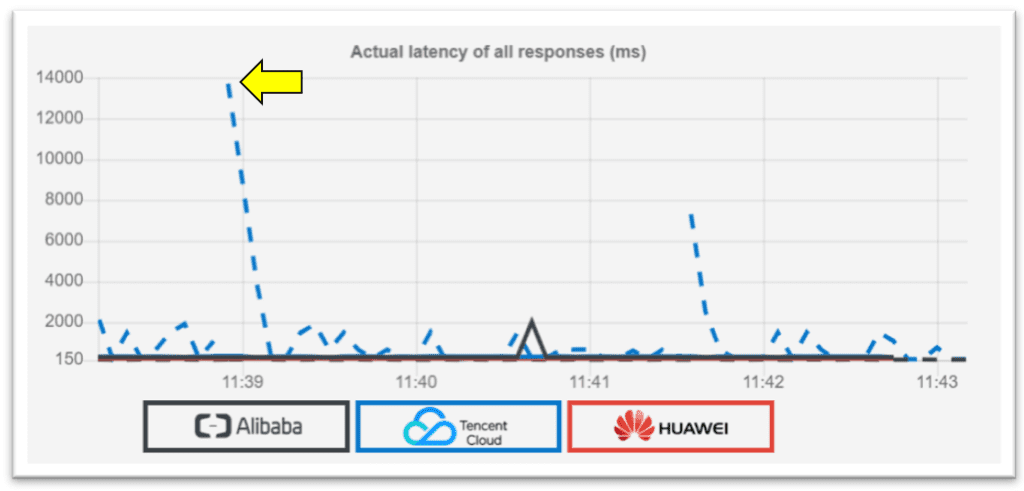

The fastest responses seen from China were around the 160-millisecond mark (less than a fifth of a second). At the upper end, however, responses can be around 100 times slower, in the tens of seconds, like the 14-second example below:

We had the London Marathon just last weekend, so I will use it to help explain the logic bug. With responses arriving so far past the 5-second measurement window, the effect was like a marathon runner who starts at the weekend but only arrives to finish the race the following month. By then all the other participants have gone, so when he reaches the finish line he is the only one present. When put into an ordered ranking of arrivals, he appears to be “first” simply because no one else is there!

The simple fix also borrows from the marathon analogy. Each race has a “pacer” response that is always present. When determining the final ranking, a response is only assigned a rank if the pacer is still present when it arrives.

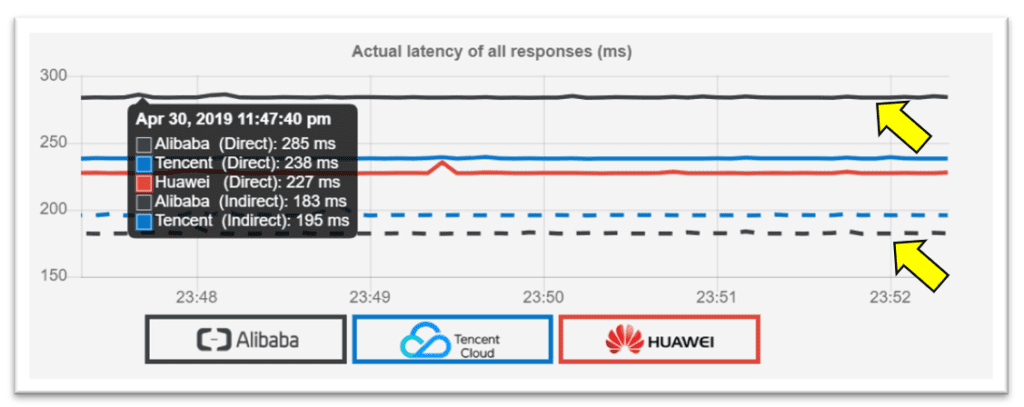
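One way to express the pacer rule in Python, as a sketch. Here the pacer is modeled as a race’s entry in a dictionary. The entry exists from the moment a request is sent until its measurement window closes. This is one interpretation of the analogy, not the demo’s actual code, and `RaceTracker` with its `start`, `finish` and `close` methods is hypothetical. A naive tracker that opened a fresh, empty race for any incoming reply would rank the month-late runner first. Here he gets no rank at all.

```python
from typing import Dict, List, Optional

class RaceTracker:
    """Assigns arrival ranks only while a race's pacer is still at the finish line."""

    def __init__(self) -> None:
        # request id -> paths that finished while the pacer was present, in order
        self._open: Dict[int, List[str]] = {}

    def start(self, request_id: int) -> None:
        # A new request is sent: its pacer takes up position at the finish line.
        self._open[request_id] = []

    def finish(self, request_id: int, path: str) -> Optional[int]:
        """Record a reply and return its 1-based rank, or None if it is too late."""
        finishers = self._open.get(request_id)
        if finishers is None:
            return None  # the pacer has left: no rank for this straggler
        finishers.append(path)
        return len(finishers)

    def close(self, request_id: int) -> List[str]:
        # The measurement window has ended: the pacer leaves and the race is final.
        return self._open.pop(request_id, [])
```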

2. Don’t take the direct flight

One result looks unanimous: the direct connection from the London ‘on-prem’ datacenter to Beijing is by far the slowest. In the latency chart below, the dashed line represents the indirect connectivity and the solid line the direct connectivity. For both Alibaba and Tencent, the best connectivity path adds the hop via the provider’s nearest cloud region in Europe. Latency can be up to 36% lower with this approach.

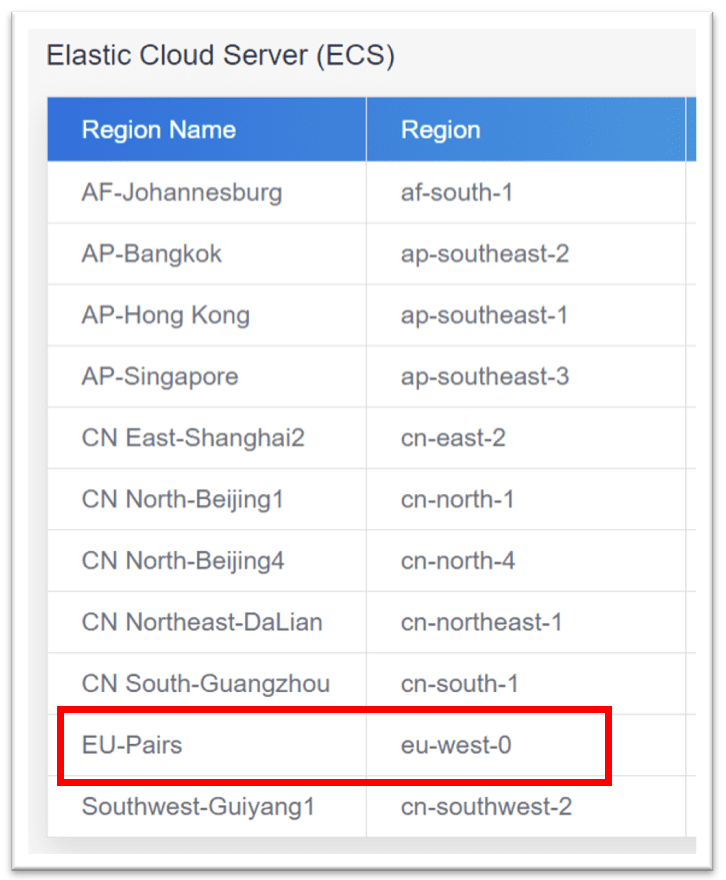

Note that for Huawei, this direct vs. indirect comparison could not be made. While Huawei’s public website lists Paris as an available region for its “Elastic Cloud Server”, upon inquiry I was told this region is not yet fully available. The demo therefore retains a placeholder for Huawei connectivity via Paris, should it become available.

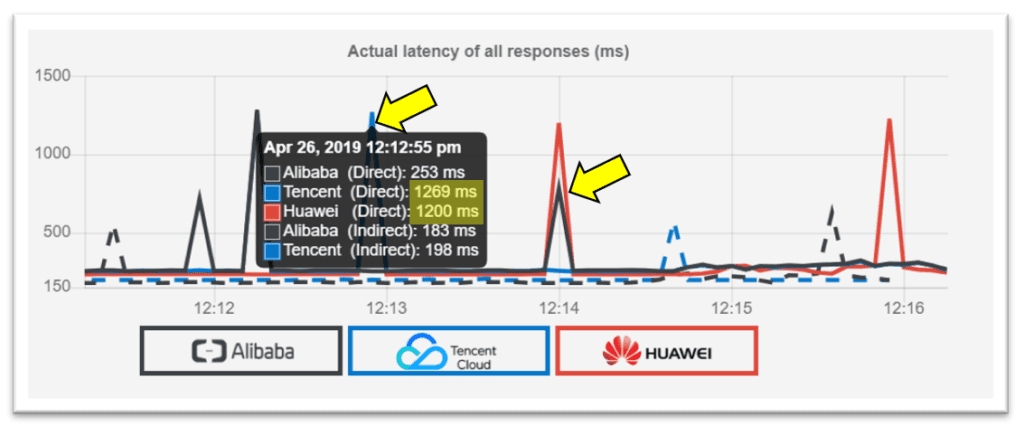

3. It’s Yes to Multi-Cloud

The main finding here is that while some connection slowdowns (latency spikes) align perfectly across multiple providers, for the most part they do not correlate. In other words, when one cloud provider experiences a spike, the others often appear to be fine at that same moment.
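Whether spikes line up can be checked with a simple co-occurrence count. This Python sketch assumes the latency series for each provider are aligned request by request, and it treats anything above a threshold as a “spike”. The 1,000 ms default is an arbitrary choice for illustration, not the criterion used in the demo, and `spike_cover` is a hypothetical helper. For each provider, it returns the share of that provider’s spikes during which at least one other provider was below the threshold.

```python
from typing import Dict, List

def spike_cover(series: Dict[str, List[float]], threshold_ms: float = 1000.0) -> Dict[str, float]:
    """For each provider, the share of its spikes during which another provider was fine."""
    if len({len(v) for v in series.values()}) > 1:
        raise ValueError("series must be aligned sample-for-sample")
    result: Dict[str, float] = {}
    for name, latencies in series.items():
        spikes = [i for i, ms in enumerate(latencies) if ms > threshold_ms]
        if not spikes:
            continue  # no spikes, nothing to hedge against
        covered = sum(
            1
            for i in spikes
            if any(other[i] <= threshold_ms for o, other in series.items() if o != name)
        )
        result[name] = covered / len(spikes)
    return result
```

A value near 1.0 means a provider’s spikes were almost always hedged by another provider. A value near 0.0 means its spikes coincided with everyone else’s, as in the Huawei and Tencent example that follows.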

An example of a high-latency spike seen across Huawei and Tencent at the same time:

You can review this recording of a 3-hour replay of latency results and decide for yourself:

You can also view live results and trigger your own replay window here: http://london.solace.com/multi-cloud/digital-silk-road.html

Next Steps

These are preliminary findings, and the investigation can go further. One pending step is adding Huawei Cloud’s Paris region when it becomes available. Once that is in place, the three providers will be on a fairer footing for trying additional services they offer, mainly the ability to purchase dedicated, low-latency bandwidth between cloud regions to further improve performance and connectivity.

In the meantime, I hope you found this an interesting read. Please share your thoughts on the LinkedIn version of this post.

If you liked this article, you might like this one too: Comparing Machine Learning through BBC News Analysis

Jamil Ahmed