In previous blog posts, we’ve talked about one way to use Ansible to automate the configuration of Solace PubSub+ Event Broker, and how to automate the configuration of a hybrid IoT event mesh. In this post we’ll describe how to stream sensor data from critical infrastructure assets across geographies into central data lakes and analytics applications.

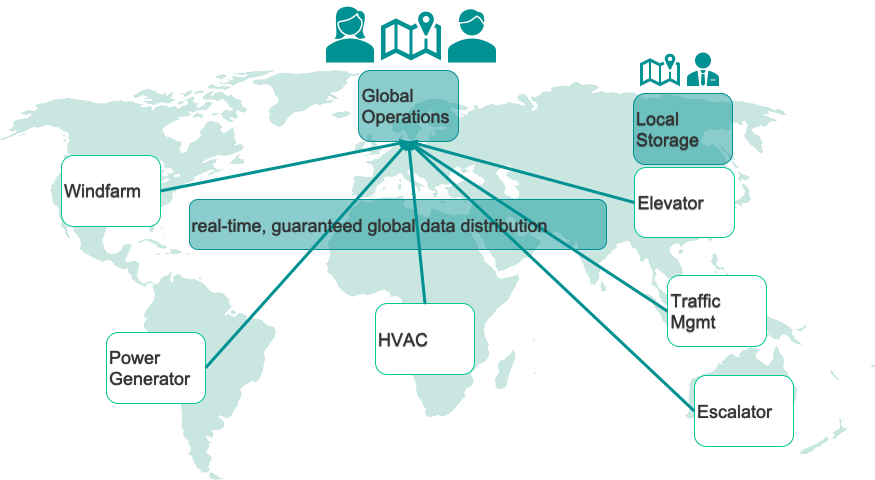

A few example projects we have worked on to develop this common approach include assets such as power generation systems like windmills and diesel generators, assets in buildings such as HVACs, escalators and elevators, and traffic management systems.

All of these systems have one thing in common: the companies building and managing them are looking to shift their business models from selling the assets themselves to offering rentals and subscriptions, and, in the most forward-looking cases, charging for output of the asset such as energy produced, miles driven, or number of people moved.

It takes very manageable, predictable SLAs to enable such business models, which demands that the assets be fitted with sensors, and that sensor data be streamed into analytics systems and data lakes. Since these companies often operate on a global basis, streaming sensor data across the world, across unreliable networks, they need an industrial-strength data distribution platform to do so.

Here are a few key technical requirements this data distribution platform must meet:

- Guaranteed data delivery from end to end, i.e. from the sensor to the central analytics platform with built-in fail-safes across every node in the delivery chain

- Real-time data distribution. Especially if the asset is safety-critical, sensor data must arrive in the analytics systems as it happens, not 10 minutes later, an hour after the fact, or the next day.

- Flexibility and extensibility. As the business evolves and grows, more assets are deployed in more locations. It is therefore imperative that the platform can be deployed in a variety of environments, including different cloud providers and data centers.

- Certain jurisdictions have stringent data export policies in place, often including a requirement to store the raw sensor data in-country, with on-demand access for the authorities, before it leaves the country.

The following picture summarizes the physical setup:

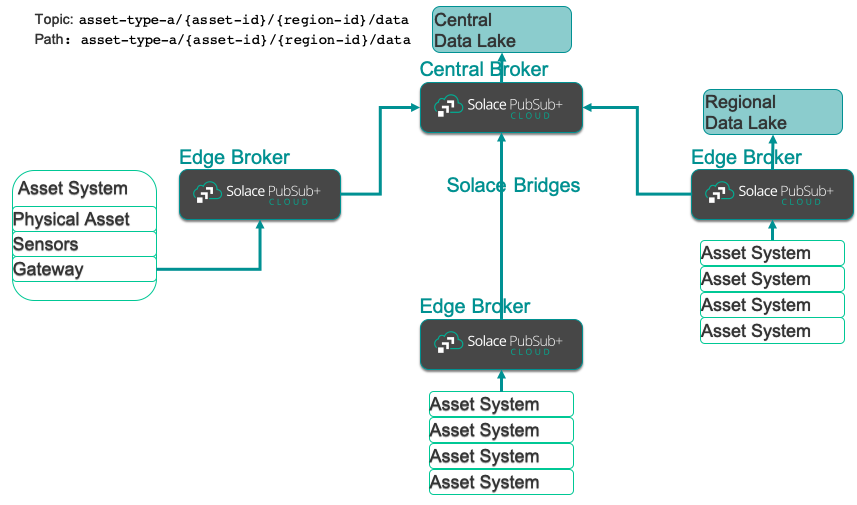

Now, let’s turn to the system architecture of such a hybrid event distribution platform using Solace PubSub+ event brokers and how they are connected into a hybrid event mesh.

The main components and links are:

- The asset systems

- The event mesh including the edge brokers and the central broker

- The central and regional data lakes

The asset system is equipped with sensors which in turn communicate with a local gateway. The gateway collects the sensor data, buffers it locally and sends it to the nearest edge PubSub+ Event Broker. The edge event brokers are connected via Solace bridges (over public or private networks) to the central event broker. Each event broker is set up in HA mode and buffers data locally, so data delivery is guaranteed in case of network glitches or unavailability of the recipient.
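The buffering behaviour described above can be pictured as a store-and-forward loop: a reading is only dropped from the local buffer once the next hop has confirmed it. The following is a minimal sketch of that idea, not the gateway or broker implementation; the class name and the `send` callback are hypothetical and stand in for whatever client the gateway uses.

```python
from collections import deque
from typing import Callable, Deque


class StoreAndForwardBuffer:
    """Keeps readings until the next hop confirms receipt (hypothetical helper)."""

    def __init__(self, send: Callable[[bytes], bool]) -> None:
        # `send` must return True only when the next hop acknowledged the message.
        self._send = send
        self._pending: Deque[bytes] = deque()

    def publish(self, reading: bytes) -> None:
        """Buffer the reading first, then try to drain the buffer."""
        self._pending.append(reading)
        self.flush()

    def flush(self) -> int:
        """Send buffered readings in order and stop at the first failure."""
        sent = 0
        while self._pending:
            if not self._send(self._pending[0]):
                break  # link is down: keep the reading and retry later
            self._pending.popleft()  # drop only after acknowledgement
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._pending)
```

Because the oldest reading stays at the head of the buffer until it is acknowledged, ordering is preserved across an outage and nothing is lost while the link is down.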

The central event broker then calls an integration function that:

- Receives the data

- Maps the topic to a data lake path

- Stores the data in the data lake

- Acknowledges success so the data is removed from the event broker’s storage
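The four steps above could look roughly like the following handler. This is a sketch under stated assumptions rather than the function used in the projects: `handle_post`, `topic_to_lake_path` and the injected `store` callback are hypothetical names, and it assumes that a 2xx response is what allows the broker to treat the event as delivered while any other status leaves it on the queue for redelivery.

```python
from typing import Callable, Tuple


def topic_to_lake_path(url_path: str) -> str:
    """Map the request path (which mirrors the topic) to a data lake folder."""
    # Drop empty segments so leading, trailing, or doubled slashes are harmless.
    segments = [s for s in url_path.split("/") if s]
    return "/".join(segments)


def handle_post(url_path: str, body: bytes,
                store: Callable[[str, bytes], None]) -> Tuple[int, str]:
    """Receive, map, store, acknowledge. Returns (HTTP status, message)."""
    lake_path = topic_to_lake_path(url_path)
    if not lake_path:
        return 400, "no topic in request path"
    try:
        store(lake_path, body)
    except Exception as exc:  # any storage failure means "do not acknowledge"
        return 500, f"store failed: {exc}"
    # Success: the 2xx status is the acknowledgement back to the broker.
    return 200, lake_path
```

Passing the storage call in as `store` keeps the sketch independent of any particular data lake SDK; in a real function it would wrap the object storage client of the chosen cloud.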

As you can imagine, flexible deployment across multiple clouds and data centers is important when realizing this architecture. For example, in Europe and the US, Amazon and Azure are pervasive. However, looking at Latin America, the Middle East and China, the choices are quite different. In China, for example, Alibaba Cloud and Huawei Cloud are better bets to fulfill the regulatory requirements than, say, Azure.

The Drivers for Automation

When we started working on our early projects, building out such a hybrid IoT event mesh, we quickly realized that we needed a consistent, repeatable, and portable approach to stand up the event brokers and to create the user profiles, the access control lists for the gateways, the Solace bridges and their topic subscriptions, and the entire integration into the data lake using the event broker’s REST Delivery Point (RDP) feature. Different data lakes also require slightly different integration and set-up of the RDP. For example, in Huawei Cloud the object storage (OBS) works differently from Azure Storage Gen2 (Blobs).

The environments we needed to automatically create and destroy ranged from a dev environment for every developer to integration, QA, and production environments. And if you want to run multiple versions in parallel, the configuration effort multiplies with every additional version.

Hence, we decided to use Ansible and the ansible-solace modules to cope with this variety. A single set of playbooks with a variety of inventories is now sufficient. Creating and destroying an environment is now a matter of minutes (a good coffee break opportunity) instead of error-prone scripting or even manual configuration. It also saves costs in terms of resource usage: if you don’t work on an environment, even for a day, simply destroy it, and stand it up again when you come back.

The Technical Details

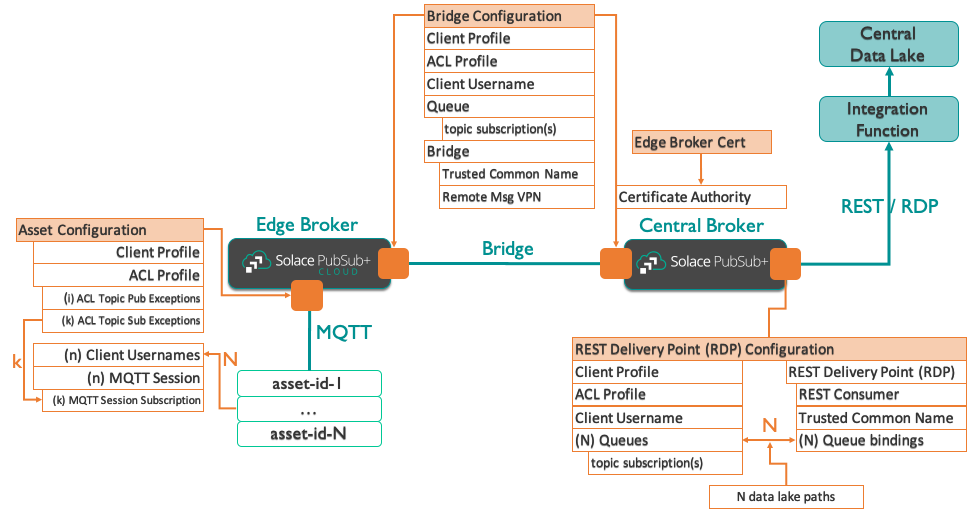

So, what are the detailed configuration requirements of the event mesh? Let’s go through the steps, starting with the PubSub+ event brokers. For each full environment, we need to create the event broker services in the Solace Cloud account: the various edge brokers and the central broker, each one with the correct settings. The next step is to create the Solace bridges between the edge brokers and the central broker.

Broker Configurations

Each gateway has its own unique ID, a type ID, an asset ID, and certificate.

For the edge event broker that means:

- Deploy the root certificate

- Create the system wide user profile for gateways

- Create the access control lists for each gateway type

- Create a client username for each gateway

- Create the MQTT session with the correct subscriptions

- Create the queues including the correct topic subscriptions

- Add the topic subscriptions to the Bridge to the central broker

At this point, we are able to connect the assets’ gateways to the edge brokers, and data is flowing from the assets to the central broker.

Note: People have asked us why we create the MQTT session with subscriptions on the broker. Firstly, all the projects required a path back to the gateway to send configuration and invoke management functions such as upgrades. Hence, the gateways need to subscribe to these topics. This article does not explore this back path.

Secondly, pre-creating the MQTT session adds a layer of security and eliminates dependencies between the gateway and the management applications. The pre-created session is the equivalent of using cleanSession = false in the MQTT API.

Data Lake Integration Configuration

Our preferred mechanism is to use a serverless function in the chosen cloud (for example, Azure Functions, Huawei Function Graphs, AWS Lambda Functions) which is triggered via an HTTP POST request through the broker’s REST delivery point (RDP) feature.

Here is another complication though:

The RDP feature (at the time of writing) does not propagate the event topic, which we need to populate the data lake, because the topic maps to the path in the data lake.

So, here is the pattern we used to overcome this limitation:

- Set up 1 queue per data lake path

- Add the topic subscription to the queue

- Set up 1 RDP which always calls the same serverless function

- Add 1 queue binding per queue/topic which passes a different URL path to the function

- The URL path is exactly the same as the topic subscription on the queue

Now we have created a way to pass the topic information for every event to the function, which in turn uses the URL path to determine the data lake path for the event. With possibly thousands or even millions of assets, each one with its own topic (or set of topics), mapping every topic would simply not be feasible. Hence, we use wildcards in both the queue subscription and the URL path when calling the function. A typical project has between 100 and 1,000 queues and queue bindings.
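To make the pattern concrete, here is a small sketch that expands a list of wildcard topic subscriptions into one queue and one queue binding each. It only builds the data; it is not taken from the sample project. The queue naming rule, the function names and the example values are hypothetical, and the target string simply follows the same shape as the postRequestTarget setting in the playbook extract (base, then the subscription, then the query parameters).

```python
from typing import Dict, List


def queue_name_for(subscription: str) -> str:
    """Derive a queue name from a topic subscription (hypothetical naming rule)."""
    # Replace separators and the Solace wildcards (* and >) with name-safe text.
    return "dl_" + subscription.replace("/", "_").replace("*", "any").replace(">", "rest")


def build_queue_bindings(subscriptions: List[str], target_base: str,
                         target_params: str) -> List[Dict[str, str]]:
    """Return one queue/binding definition per data lake path."""
    bindings = []
    for subscription in subscriptions:
        bindings.append({
            "name": queue_name_for(subscription),
            "subscr_topic": subscription,
            # The URL path is exactly the topic subscription on the queue.
            "post_request_target": f"{target_base}/{subscription}?{target_params}",
        })
    return bindings
```

For example, `build_queue_bindings(["assets/*/telemetry/>"], "/api/ingest", "code=abc")` yields a single entry whose target is `/api/ingest/assets/*/telemetry/>?code=abc`. Whether the wildcard characters need URL encoding depends on the receiving function and is left out of this sketch.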

Now take a minute and imagine configuring these manually for every environment and for every change.

Alternatively, the function could open the payload and use fields within the payload to determine the data lake path. We typically stay away from this, as it adds unnecessary complexity and dependencies between the gateway and the function. The resource requirements of the function (and therefore its running costs) would also increase dramatically if we needed to open and parse each event’s payload. At a rate of many thousands of events per second, we don’t feel this is a viable option.

The following picture shows the configuration required from an asset system to the edge broker to the central broker to the data lake:

Looking at the configuration items in the previous picture, we can map them to modules in ansible-solace:

| Configuration item | ansible-solace module |
| --- | --- |
| Client Profile | solace_client_profile |
| ACL Profile | solace_acl_profile |
| ACL topic pub exceptions | solace_acl_publish_topic_exception |
| ACL topic sub exceptions | solace_acl_subscribe_topic_exception |

And so on…you get the pattern.

The Implementation

For a working project, have a look at this repo on GitHub:

IoT Assets Analytics Integration by solace-iot-team

A few notes on ansible-solace:

- The modules support configuration of a broker in an idempotent manner. That means running the same playbook twice will leave the system in exactly the same state.

- Ansible has the concept of hosts (obviously) to run against in the inventory file. We have hijacked the host concept – here a host is either a Solace PubSub+ Event Broker, or a Solace PubSub+ Event Broker: Cloud account.

- This also means that none of the normal setup and fact gathering Ansible provides works. Hence, we need to tell Ansible this and set `ansible_connection` to `local` so it doesn’t try to log in. To gather Solace PubSub+ Event Broker facts, special modules exist: `solace_gather_facts` and `solace_cloud_account_gather_facts`.

- The `ansible-solace` module structure follows the convention of the Solace SEMP V2 API. For example, `solace_client_username` maps to the SEMP client username resource, `solace_queue` maps to the queue resource, and so on.
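The idempotency mentioned in the first note can be pictured as a comparison between the current and the desired state of a broker object, followed by the smallest action that closes the gap. The sketch below illustrates that general idea only; it is not how ansible-solace is implemented, and `ensure_present` is a hypothetical name.

```python
from typing import Any, Dict, Optional, Tuple


def ensure_present(current: Optional[Dict[str, Any]],
                   desired: Dict[str, Any]) -> Tuple[str, Dict[str, Any]]:
    """Decide which action brings `current` in line with `desired`.

    Returns (action, payload) where action is "create", "update" or "none".
    """
    if current is None:
        return "create", dict(desired)
    # Only settings that differ need to be sent to the broker.
    delta = {k: v for k, v in desired.items() if current.get(k) != v}
    if delta:
        return "update", delta
    return "none", {}
```

Running the decision a second time against the state produced by the first run returns `("none", {})`, which is why repeating a playbook leaves the system unchanged.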

The sample project uses a number of input files which are configured for your particular environment, for example:

- `central-broker.yml`

- `lib/vars.bridge.yml`

- `lib/vars.mqtt.yml`

- `lib/vars.rdp.yml`

- …

In the playbooks we make extensive use of Jinja2 to read variables either from the inventories or the vars yaml files. This approach means you only have to change the input files and can leave the playbooks as they are when moving to different environments.

To try the sample project, follow the instructions in the README.

Here is an extract of the playbook to create a Solace PubSub+ Event Broker REST delivery point:

    # Load the RDP settings into the "rdp" variable
    - include_vars:
        file: "./lib/vars.rdp.yml"
        name: rdp

    - name: "RDP: Client Profile"
      solace_client_profile:
        name: "{{ rdp.name }}"
        settings:
          # TODO: figure out the correct settings for most restrictive setup
          allowBridgeConnectionsEnabled: false
          allowGuaranteedMsgSendEnabled: true
          allowGuaranteedMsgReceiveEnabled: true
          maxEndpointCountPerClientUsername: 10
        state: present

    - name: "RDP: ACL Profile"
      solace_acl_profile:
        name: "{{ rdp.name }}"
        settings:
          # TODO: figure out the correct settings for most restrictive setup
          clientConnectDefaultAction: "allow"
          publishTopicDefaultAction: "allow"
          subscribeTopicDefaultAction: "allow"
        state: present

    # …

    - name: "RDP TLS Trusted Common Name: Add to REST Consumer"
      solace_rdp_rest_consumer_trusted_cn:
        rdp_name: "{{ rdp.name }}"
        rest_consumer_name: "{{ rdp.name }}"
        name: "{{ rdp.trusted_common_name }}"
        state: present

    # One binding per queue; the topic subscription becomes the URL path
    - name: "RDP: Queue Bindings"
      solace_rdp_queue_binding:
        rdp_name: "{{ rdp.name }}"
        name: "{{ item.name }}"
        settings:
          postRequestTarget: "{{ rdp.post_request_target_base }}/{{ item.subscr_topic }}?{{ rdp.post_request_target_params }}"
        state: present
      loop: "{{ rdp.queues }}"
      when: result.rc|default(0)==0

Summary

In this article we have explored a specific use case, looked at the drivers for automation, and discussed in detail the automation requirements and their implementation using ansible-solace. The sample project implementation should provide a good template to start your own project.

Ricardo Gomez-Ulmke

Swen-Helge Huber