If your company has started to embrace event-driven architecture (EDA), you’ve probably been considering or struggling with how to manage your “events” with some semblance of governance, reuse and visibility. If that’s you, then read on!

In 2020, Solace introduced PubSub+ Event Portal to make it easier for enterprises to efficiently visualize, model, govern and share events across their organization. Why?

While organizations are embracing EDA, many are finding it hard to realize the intended benefits of better customer experiences, more efficient operations, and greater agility due to a lack of tools that help them manage the lifecycle of events, integrate with software development tools, and expose event flows for reuse.

Forrester advises that organizations should “implement governance and lifecycle management for event streams. As they grow in importance, you can include enterprise business events in the portfolio management you should already have in place for APIs. Establish an event portal where users of event streams can discover events, understand their functionality, and subscribe.”(1)

If you agree that there is a need for a toolset that helps you manage events, the next question is – what should you look for in such a toolset? And which one should you use?

I caught up with Shawn McAllister, Solace’s chief technology and product officer, to get his take on what enterprises should look for in a tool to manage their events, how our own offering, PubSub+ Event Portal, meets those needs, and how it compares to alternatives like Confluent’s Stream Governance product.

Sandra: Let’s start by setting the stage – what does “event management” mean?

Shawn: First of all, it’s not about hosting a conference or a wedding. Event management is like API management for your event-driven system. Many companies are building event-driven microservices and/or integrating real-time data. To achieve either of these significant initiatives, there are certain things architects and developers need to be able to do. One is to have an event broker to route your events where they need to go as they stream across your enterprise in real-time. That need has been met by event brokers like Apache Kafka and our own PubSub+ Event Broker, but there’s been a lack of tools to manage your events like you do your RESTful APIs.

Without such tooling, there’s no way for your architects and developers to collaborate, making it hard to find events to reuse, to know what events are available where, to keep documentation up to date, and to make complex model changes without impacting upstream and/or downstream applications. There’s no way to manage the lifecycle of events and other assets that are part of an event-driven system. Having a solution to this problem was a critical factor in making the API economy so successful. Filling the same gap for the event-driven world, a gap that still existed in 2020, is what we set out to do, and we call it event management.

Can you describe the key functions an event management tool should perform?

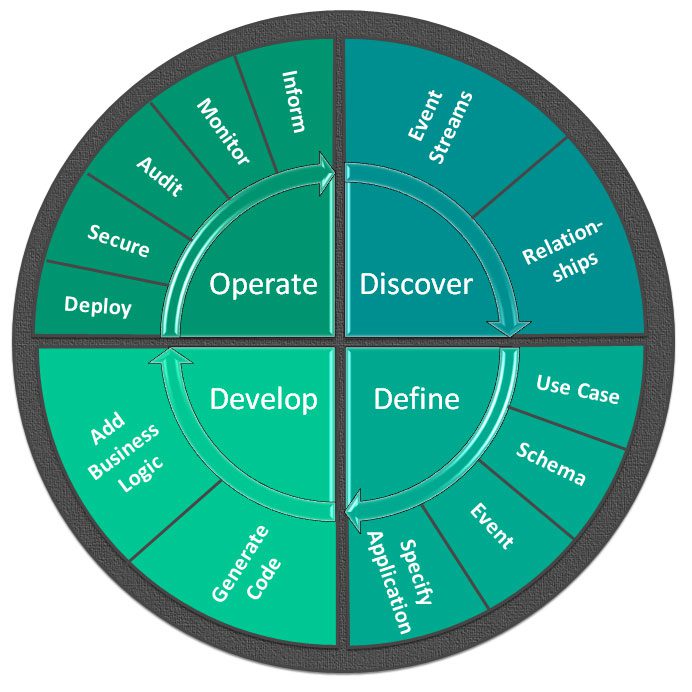

We believe that an event management tool must accelerate and simplify the entire event lifecycle, from design and development right through operation and retirement. It’s innovative, disruptive, and customer-centric, and it’s the right thing to do to ensure EDA can become mainstream!

There are some core functions we think every event management tool should perform. Get ready, it’s a long list:

- Event Design – architects & developers need to be able to define their events, schemas and applications…describe them, annotate them, enforce governance, and foster best practices and consistency in their design before they’re deployed. Event streams as reusable assets and interfaces need to be thought through and well described just like RESTful APIs – or it makes reuse really hard.

- Runtime Discovery – Many enterprises have event streams now, but in my experience most don’t have a holistic understanding of what all their streams are, which apps are consuming them, the business moment they represent – and they certainly don’t know all of that across various pre-prod and prod environments. So, you need event management to “learn” this from your broker cluster and show you what’s really there vs. what you think is there.

- Runtime Audit – once you design or discover the flows in your system, you need an ongoing audit to compare your design intent (in event management) to the deployed runtime (in your brokers) and flag any discrepancies for resolution. Just because something is deployed doesn’t mean it’s right.

- Lifecycle Management – you need to be able to manage different versions of different events across different environments (pre-prod, prod) from creation to release to new versions to retirement of the event. You rarely build something and don’t evolve it – handling “day 2” concerns is just as important.

- Catalog & Search – now that you have an inventory of your event streams across environments, you need to ensure they can be found and reused by consumer apps in a self-service manner since this is the key to agility for new application creation. This is where a rich search capability across your many clusters in many environments is critical – otherwise, you don’t get reuse.

- Digital Products – for use cases like data mesh and other real-time integration use cases, you need to define, curate and manage your event streams as digital products, often managed by a product manager. You need to be able to bundle associated events, maybe from different producers, into digital products that we call event API products for sharing, either within your organization or outside.

- Graphical Visualization – for event driven microservices, you want to see which microservice is producing which events and who is consuming them in a visual manner that helps you understand the choreography and architecture of your distributed application or data pipeline and the events used as interfaces between them.

- Tooling Integration – no new tool can exist in a silo. Event management needs to be API first and integrate well with existing tooling such as Git, Confluence, IntelliJ, and Slack to support your development and GitOps processes.

- Support for Several Event Brokers – most enterprises have several message or event brokers, but they do not want multiple tools to manage the information flowing through them. So having one event management tool that handles many brokers and, more importantly, can be extended by you to support more is key.
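
To make the Runtime Audit item in the list above more concrete, here is a minimal sketch of the underlying comparison. It is not how Event Portal implements its audit; the `audit` function, the `AuditReport` fields, and the topic and schema names are all hypothetical, and each side is reduced to a simple topic-to-schema map.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AuditReport:
    undeployed: set[str]    # designed, but not found on the broker
    undesigned: set[str]    # found on the broker, but never designed
    schema_drift: set[str]  # present on both sides with different schemas


def audit(designed: dict[str, str], discovered: dict[str, str]) -> AuditReport:
    """Compare design intent with the runtime; both maps are topic -> schema id."""
    designed_topics = set(designed)
    discovered_topics = set(discovered)
    shared = designed_topics & discovered_topics
    return AuditReport(
        undeployed=designed_topics - discovered_topics,
        undesigned=discovered_topics - designed_topics,
        schema_drift={t for t in shared if designed[t] != discovered[t]},
    )


# Hypothetical sample data: what was designed vs. what discovery found.
designed = {"orders.created": "order-v2", "orders.shipped": "shipment-v1"}
discovered = {"orders.created": "order-v1", "orders.cancelled": "order-v1"}
report = audit(designed, discovered)
print(report)
```

Each of the three sets is a different kind of discrepancy to resolve: deploy what is missing, document or remove what was never designed, and reconcile the schemas that have drifted.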

It was with those capabilities in mind that we created PubSub+ Event Portal, which we launched in 2020.

Can PubSub+ Event Portal do all of these things?

Event Portal can do most of them today and will be able to do all of them by early next year. It’s always been our vision to deliver a product that provides all this functionality, and since it’s been on the market for two years we’ve learned a lot from our customers on how it can be improved and what else they need it to do.

Since you brought PubSub+ Event Portal to the market, Confluent introduced Stream Governance. How do they compare?

While I am not an expert on Stream Governance, I’d say that while at first glance they seem to serve a similar purpose, in reality they are quite different. Stream Governance only works with Confluent Cloud, and really only adds value in the area of understanding your live operational environment. Its catalog interrogates a particular Confluent Cloud environment to show you what’s happening in its clusters at that time – which is after an application has been designed, developed and deployed – at a stream and stream processing level.

This is useful for operational reasons once an application or a stream is deployed, but it doesn’t help developers create new applications or streams or modify existing ones, it doesn’t guide best practices or help with governance at design time, and it does not provide a “design vs actual” comparison to ensure what you have deployed is what was intended. This is all because it provides visibility of what is deployed – not what you are designing. It is tightly integrated with Confluent Cloud and combines other functionality to provide runtime lineage and data quality capabilities – again both are operationally focused.

Event Portal, on the other hand, helps architects and developers across all phases of the software development lifecycle, not just after deployment, and is designed to support many types of brokers so you don’t need to have a different tool for each event broker you use. We view Event Portal as the central point that helps you design, discover, catalog, manage, govern, visualize and share your event driven assets. It has specific functionality to help you build event driven microservices applications and to define event driven interfaces/APIs for real-time data integration, data meshes and analytics.

Event Portal works not only with Solace brokers, but with Apache Kafka, including Confluent and MSK brokers. It discovers and imports topics, schemas and consumer groups from these brokers. The open source Event Management Agent, a component of Event Portal, has a pluggable architecture so you can add your own plugin to perform discovery from other brokers too.
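
A pluggable discovery architecture of the kind described here can be sketched roughly as follows. This is an illustration of the general pattern only, not the actual Event Management Agent API: `DiscoveryPlugin`, `InMemoryKafkaPlugin`, `register` and `run_discovery` are hypothetical names, and the plugin returns canned data instead of querying a real cluster.

```python
from typing import Protocol


class DiscoveryPlugin(Protocol):
    """Hypothetical plugin contract; not the real Event Management Agent API."""

    broker_type: str

    def discover(self) -> dict[str, list[str]]:
        """Return discovered asset names grouped by kind."""
        ...


class InMemoryKafkaPlugin:
    """Stand-in plugin that returns canned data instead of querying a cluster."""

    broker_type = "kafka"

    def discover(self) -> dict[str, list[str]]:
        return {
            "topics": ["orders.created"],
            "schemas": ["order-v1"],
            "consumer_groups": ["billing-service"],
        }


# One plugin per broker type; supporting a new broker means registering one more.
REGISTRY: dict[str, DiscoveryPlugin] = {}


def register(plugin: DiscoveryPlugin) -> None:
    REGISTRY[plugin.broker_type] = plugin


def run_discovery(broker_type: str) -> dict[str, list[str]]:
    if broker_type not in REGISTRY:
        raise LookupError(f"no discovery plugin registered for {broker_type!r}")
    return REGISTRY[broker_type].discover()


register(InMemoryKafkaPlugin())
print(run_discovery("kafka"))
```

The point of the design is that the discovery loop never needs to know which broker it is talking to; only the plugin does.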

So, I say use the right tool for the right job – these tools serve a complementary purpose. Operational visibility into your Confluent Cloud cluster stream architecture is best done with Stream Governance. But if you want a tool to support cradle-to-grave, design-time management of your event driven assets across environments and with different types of event brokers, then Event Portal is what you want. If you are a Confluent Cloud user, then maybe you want both.

Can you elaborate on design vs operational concerns?

In terms of design concerns, here are a few examples of what people want to do at design time in event-driven systems that they need tools for:

- Design and Share Events and Digital Products – Developers want to produce events that others can consume. We find more organizations with product managers creating digital products for internal or external consumption. To do this, you need to collaborate to define the specifics of the streams for your domain. Say for a data mesh, you want to describe these streams, annotate them and have them in a catalog with custom fields, descriptions, owners, state so others can find and consume them. You want to version them as they change, and deprecate them at end of life while doing change impact assessments. You want to see which topics use which schemas, which applications are consuming which topics. You want to bundle events into an event API to describe all order management events or all fulfillment events and make them available to consumers as a managed digital asset.

- Consume Events – Other developers want to find the stream or streams they need to consume to build their application, and they’d ideally like to do so using a rich Google-like search of a catalog that spans all environments and clusters and contains all the metadata that owners have added to annotate topics, schemas, applications. They want to know which cluster they can consume it from whether in pre-prod or prod and which version is where, whether security policies allow them to consume it, whether it is being deprecated and who to talk to if they have questions.

- Define Applications and Generate Code – You want to define event-driven applications, describe what they do and who owns them, and link out to Confluence pages or import this data into Confluence. When a developer has defined their microservices and the events that each one produces and consumes, you want to visualize the interactions at design time and afterwards to better understand your system. Being able to generate code stubs using AsyncAPI code generators is a big productivity boost.

- Govern Event-Driven Applications and Information – Enterprise architects want to decompose their enterprise for democratized data ownership, create and enforce a topic structure associated with domains that makes ownership and governance clear, enforce role-based access controls (RBAC) uniformly across teams at design time – not just runtime – and define and enforce conventions and best practices. And an increasing number of enterprise architects I’ve talked to want to integrate their API management solution or developer portal with their event management services so they can offer a “one stop shop” developer experience for synchronous and asynchronous APIs.
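
As one small example of what enforcing a domain-based topic structure at design time could look like, here is a sketch of a naming check. The `<domain>/<entity>/<action>/v<major>` convention, the registered domain names and the `check_topic` function are assumptions made up for illustration; they are not a Solace or Kafka standard.

```python
import re

# Hypothetical convention: <domain>/<entity>/<action>/v<major>
TOPIC_PATTERN = re.compile(
    r"^(?P<domain>[a-z]+)/(?P<entity>[a-z]+)/(?P<action>[a-z]+)/v(?P<major>\d+)$"
)

# Hypothetical set of domains that have a registered owner.
KNOWN_DOMAINS = {"orders", "fulfillment"}


def check_topic(topic: str) -> list[str]:
    """Return a list of violations; an empty list means the topic conforms."""
    match = TOPIC_PATTERN.match(topic)
    if match is None:
        return [f"{topic!r} does not follow <domain>/<entity>/<action>/v<major>"]
    if match["domain"] not in KNOWN_DOMAINS:
        return [f"{topic!r} uses unregistered domain {match['domain']!r}"]
    return []


for candidate in ("orders/order/created/v1", "Orders.Created", "billing/invoice/issued/v2"):
    print(candidate, "->", check_topic(candidate) or "ok")
```

Running a check like this before a topic is ever deployed is what makes the governance design-time rather than runtime.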

Here are some of the areas in which Stream Governance does not address design time concerns:

- Stream Governance provides some of this information, but only for streams currently available in a particular Confluent Cloud environment. This means that if you are designing or updating a stream but have not deployed it yet, you can’t see it.

- Role-based access controls do not let you control information sharing in as fine-grained a way and can’t be controlled by data owners.

- Stream Governance supports tags on schemas, records and fields, but no other metadata on other objects (e.g. user-defined attributes, owners, etc.).

- Since it is not a design tool, promotion of a given artefact between environments and AsyncAPI import/export are not supported. You are shown what is in your environment.

However, from an operational point of view, Stream Governance is better suited for managing schema registries, validating schemas at runtime, determining runtime data lineage and resolving operational issues involving the flow of streams in Confluent Cloud.

Can you tell me how the lifecycle management capabilities of the two solutions differ?

Lifecycle management is defined as the people, tools, and processes that oversee the life of a product/application/event from conception to end of life.

Most enterprise software development teams follow a software development lifecycle management process in delivering high value applications to their organization. We believe that event management must integrate with these development processes and tools to facilitate and provide added governance and visibility into EDA application development and deployment. As a result, new application and event versions can be created efficiently and without negative impacts to downstream applications.
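
To illustrate how lifecycle rules can protect downstream applications, here is a minimal sketch of a state machine for event versions. The state names, the allowed transitions and the rule that a version cannot be retired while it still has consumers are assumptions for illustration, not a description of Event Portal’s behavior.

```python
# Assumed lifecycle states and transitions, for illustration only.
ALLOWED_TRANSITIONS = {
    "draft": {"released"},
    "released": {"deprecated"},
    "deprecated": {"retired"},
    "retired": set(),
}


def transition(state: str, target: str, consumers: list[str]) -> str:
    """Move an event version to a new state, or raise ValueError if unsafe."""
    if target not in ALLOWED_TRANSITIONS[state]:
        raise ValueError(f"cannot move from {state} to {target}")
    # Simple change impact check: do not retire a version that is still consumed.
    if target == "retired" and consumers:
        raise ValueError(f"still consumed by: {', '.join(sorted(consumers))}")
    return target


state = transition("draft", "released", consumers=[])
state = transition(state, "deprecated", consumers=["billing-service"])
print(state)
```

In this sketch, retiring the deprecated version would fail until `billing-service` has moved to a newer version, which is the kind of guard that keeps a new release from breaking consumers.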

This graphic shows the typical stages of development of an event driven application or an event driven API, and Event Portal plays a role in each of these stages: supporting the definition of streams and microservices and the visualization of their interactions during the design phase, versioning as you evolve artefacts on day 2, managing environments and promotion between them, and auditing design intent against what is actually deployed. Open-source integrations we have created connect Event Portal with tools like Confluence for design, IntelliJ for development and Git for GitOps, and you can use Event Portal’s API to integrate its catalog and repository with your own favorite developer tools, using our open-source integrations as examples.

In comparison, Stream Governance provides visibility into what is deployed in Confluent Cloud clusters – and it does give you great visibility of that – but not across the entire development lifecycle and not beyond Confluent Cloud deployments.

Conclusion

Event Portal and Stream Governance solve different problems in overlapping situations. Event Portal focuses on cradle-to-grave design, discovery, reuse, audit and lifecycle management of your event streams, schemas and applications across Solace brokers and Kafka brokers including Confluent Platform and Confluent Cloud along with Apache Kafka, MSK and others that can be added via the open-source Event Management Agent. Stream Governance focuses on visibility of your runtime streams in Confluent Cloud deployments. Which tool is best for you depends on the problem you are trying to solve.

I hope this Q&A with Shawn has helped you understand the difference between PubSub+ Event Portal and Stream Governance. You can learn more about Event Portal at https://solace.com/products/portal/kafka/

(1) Source: Forrester Research, “Use Event-Driven Architecture in Your Quest for Modern Applications”, David Mooter, April 9, 2021