Preface

Over the last few years Apache Kafka has taken the world of analytics by storm, because it’s very good at its intended purpose of aggregating massive amounts of log data and streaming it to analytics engines and big data repositories. This popularity has led many developers to also use Kafka for operational use cases which have very different characteristics, and for which other tools are better suited.

This is the third in a series of four blog posts in which I’ll explain the ways in which an advanced event broker, specifically PubSub+, is better for use cases that involve the systems that run your business. The first was about the need for filtering and in-order delivery, the second was about the importance of flexible filtering, and this one is focused on securing Apache Kafka. My goal is not to dissuade you from using Kafka for the analytics use cases it was designed for, but to help you understand the ways in which PubSub+ is a better fit for operational use cases that require the flexible, robust and secure distribution of events across cloud, on-premises and IoT environments.

- Part 1: The Need for Filtering and In-Order Delivery

- Part 2: The Importance of Flexible Event Filtering

- Part 4: Streaming with Dynamic Event Routing

Summary

Architecture

A Kafka-based eventing solution can consist of several components beyond the Kafka brokers: a metadata store like Zookeeper or MDS, the Connect framework, protocol proxies, and replication clusters like MirrorMaker or Replicator. Each of these components has its own security model and capabilities, and some are far more mature and robust than others. This mix of components, each with its own security features and configuration, introduces the “weak link in the chain” problem and makes it harder than it should be to centrally manage the security of your system. It’s so hard to secure Kafka that securing Apache Kafka installations is a big part of the business models of Kafka providers like Confluent, AWS, Red Hat, Azure, IBM and smaller players, each one with a unique approach and set of tools.

Solace handles everything in the broker, with a centralized management model that eliminates these problems and makes it easy to ensure the security of your system across public clouds, private clouds and on-premises installations – which is critical for the operational systems that run your business.

Performance

The centerpiece of the Kafka architecture is a simple topic for publish and subscribe, and Kafka derives a lot of its performance and scalability from this simplistic view of data. This causes challenges in implementing the security features it takes to ensure that a user is authenticated and authorized to receive only the data they are entitled to. Kafka security features are too coarse, and too complicated to implement because of the many components in a Kafka system, some of which are not very secure.

Solace has a full set of embedded, well integrated, security features that impose a minimal impact on performance and operational complexity.

Connection Points

Beyond the Apache Kafka security concerns mentioned above, supporting different types of users (native Kafka, REST, MQTT, JMS) makes the task of securing Apache Kafka-based systems immensely more complicated. Each type of user has different connection points (proxies, broker) to the eventing system, every connection point has different security features, and it is the task of the operator to coordinate authentication and authorization policies between these entry points where possible. Adding new user types to the cluster is a complicated task that likely introduces new licensed products and greatly affects operational tasks.

Solace has a single entry point to a cohesive event mesh for all user types (of varying protocols and APIs) and applies consistent authentication and authorization policies to all users without changing product costs or significantly changing operations.

Simple Example of Securing Apache Kafka

Adding Security to Eventing Systems

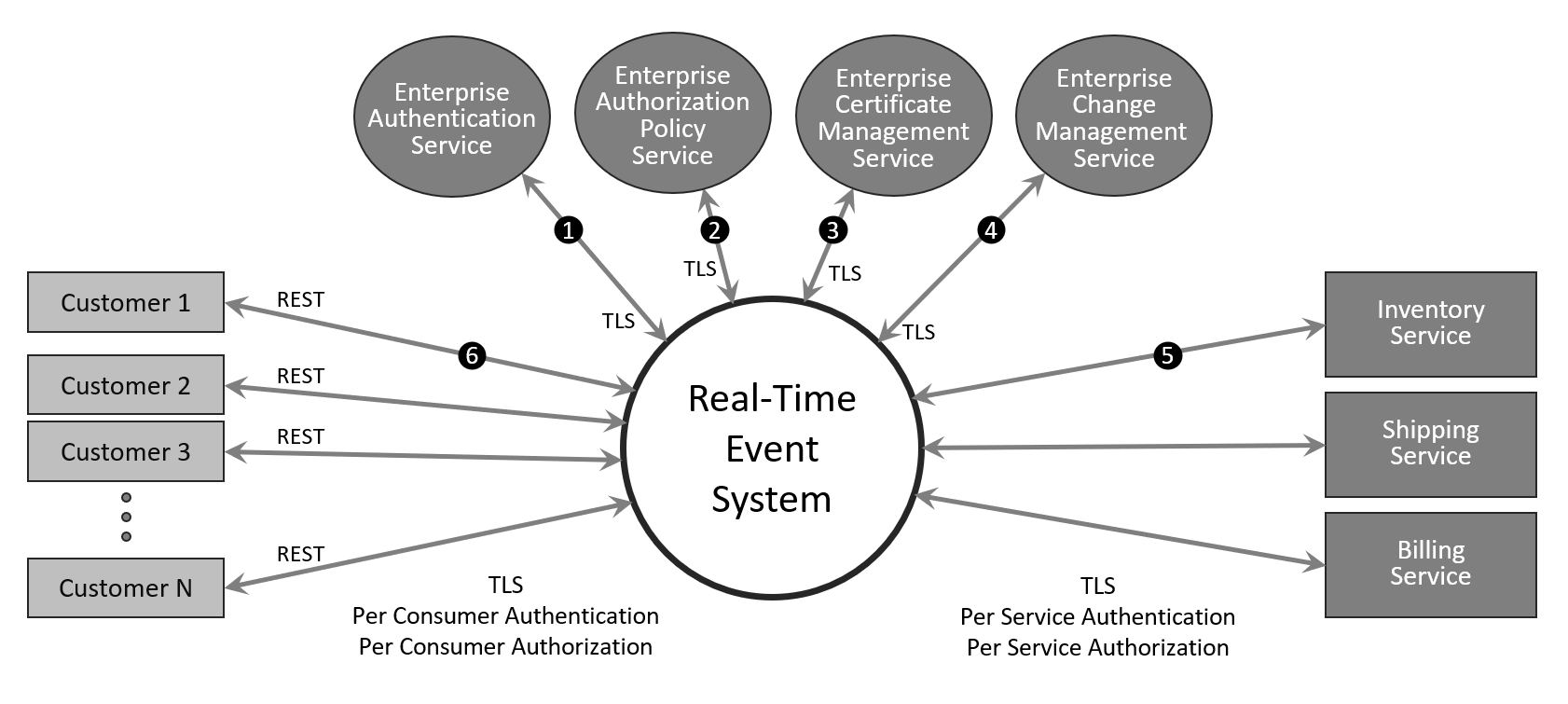

In the first article in this series, I discussed an event-based infrastructure used to process orders for an online store. In the scenario, you are asked to imagine that you’ve been tasked with splitting the infrastructure across your datacenter and a public cloud, and now must ensure that all interactions happen securely. This would involve:

- Authenticating all users (administrative users and applications), likely integrated with existing enterprise authentication mechanisms.

- Authorizing authenticated users to produce and consume specific data using access control lists (ACLs), again likely integrated into existing enterprise policy groups.

- Securing all data movement, likely with TLS on all links.

- Securing configurations and monitoring any changes to the system that might compromise security.

At a component level, this would look like this:

- Ability to authenticate all connections, including internal system connections, back-end services and remote front-end clients. Have the Event System validate authentication with a centralized enterprise service to reduce administrative complexity. Note that in this simple diagram the Realtime Event System could contain things like firewalls, API gateways or other components seen as necessary to securely terminate the front-end client connections.

- Ability to enforce fine-grained authorization policies which are centrally managed.

- Ability to distribute and revoke security certificates from a centralized service.

- Ability to push configuration change notifications to a centralized audit service that looks for the introduction of vulnerabilities. The ability to make changes should be scoped by role to allow different levels of administration.

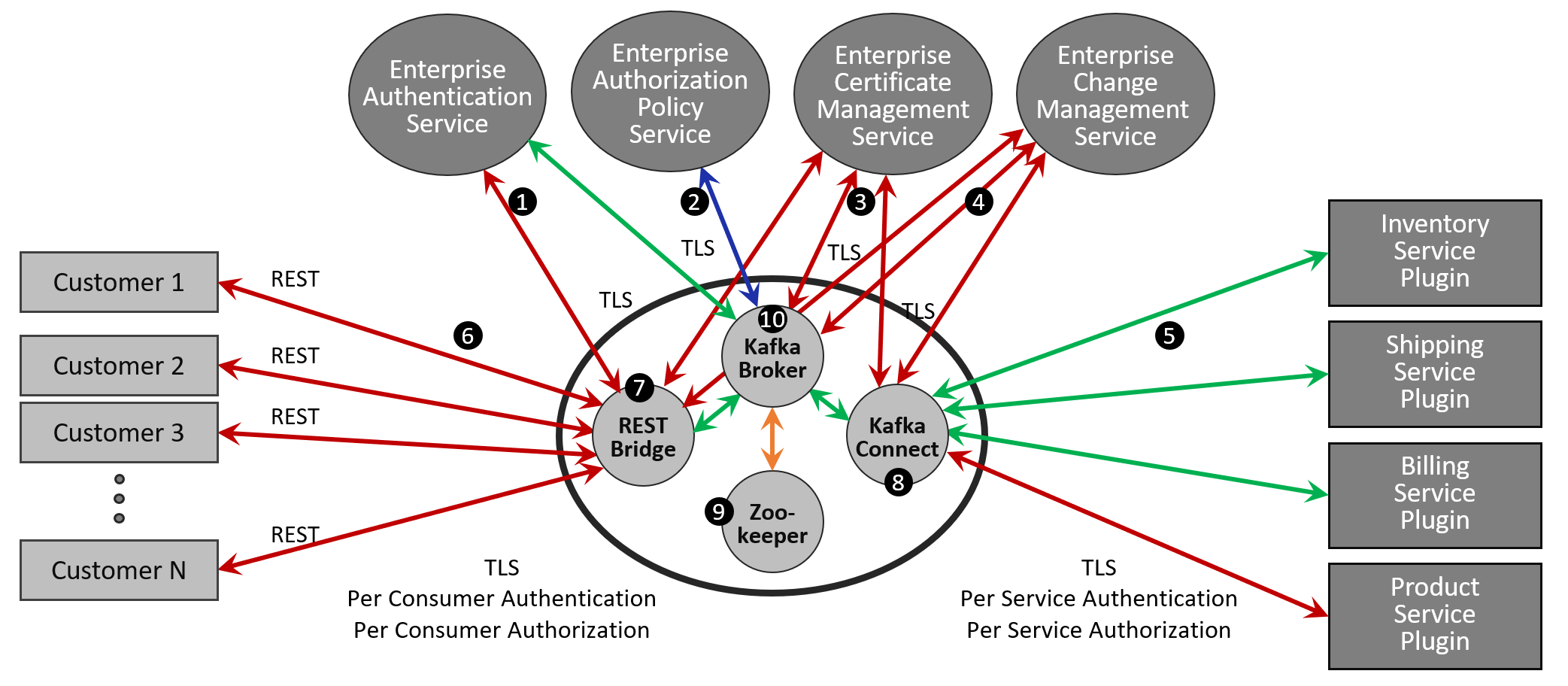

Securing Apache Kafka-based Event Systems

If you look at how to overlay security on a system built with Apache Kafka/Confluent, you can see there are several required components. Each component needs to be addressed when considering an enterprise security posture, because things like security principals and certificate validation are spread across these components. In the diagram below I show the basic components that make up a Kafka eventing system and add lines for interactions within the system or with enterprise security services.

The green lines indicate components or interactions that are fairly easy to secure, and to manage in a secure way. Blue lines mean security is available for additional cost, and orange means security has not historically been available, but was added with the latest release. The red lines show areas where security is either lacking in functionality, complicated, or simply left to administrators to come up with their own solution.

1. Enterprise Authentication: Supported in some places and not in others.

The Apache Kafka broker supports unmanaged (see 4 below) JAAS file-based authentication with SSL, SASL/PLAIN and SCRAM. It also supports more enterprise-oriented solutions, including Kerberos and OAuth2.

If you use SASL/PLAIN instead of an enterprise authentication solution, you need to restart the brokers every time you add or delete a user.

If you use SCRAM instead of an enterprise authentication solution, usernames and password-derived SCRAM credentials are stored in Zookeeper, which raises several security concerns (see 9 below).

The Confluent broker extends authentication with LDAP, but not all components support this. For example, Zookeeper only supports Kerberos and DIGEST-MD5.

The Confluent REST Proxy does not integrate with standards-based OAuth2 OpenID Connect, and instead relies on client/server certificates with no certificate management (see 3 below).
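To make the SCRAM concern in item 1 more concrete, here is a minimal sketch of the key derivation defined in RFC 5802, using SHA-256. It is not Kafka's code; the class and function names are hypothetical, and password normalization (SASLprep) is skipped for brevity. It shows the kind of per-user record a SCRAM server keeps, and why read access to wherever that record lives matters: the values are not cleartext, but anyone who can read them can test password guesses offline.

```python
import hashlib
import hmac
from dataclasses import dataclass


@dataclass(frozen=True)
class ScramCredential:
    """Per-user values a SCRAM server stores instead of the password itself."""
    salt: bytes
    iterations: int
    stored_key: bytes
    server_key: bytes


def derive_scram_credential(password: str, salt: bytes,
                            iterations: int = 4096) -> ScramCredential:
    # SaltedPassword = Hi(password, salt, i), i.e. PBKDF2 with HMAC-SHA-256.
    salted = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"),
                                 salt, iterations)
    client_key = hmac.new(salted, b"Client Key", hashlib.sha256).digest()
    server_key = hmac.new(salted, b"Server Key", hashlib.sha256).digest()
    stored_key = hashlib.sha256(client_key).digest()
    return ScramCredential(salt, iterations, stored_key, server_key)


def password_matches(candidate: str, cred: ScramCredential) -> bool:
    """Offline guess check that needs nothing but the stored record."""
    guess = derive_scram_credential(candidate, cred.salt, cred.iterations)
    return hmac.compare_digest(guess.stored_key, cred.stored_key)
```

The iteration count is what slows down guessing, so the protection of the store and the strength of the passwords both matter.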

2. Enterprise Authorization: Supported in some releases and not in others.

Apache Kafka SimpleAclAuthorizer stores ACLs in Zookeeper with no integration with enterprise policy services.

Confluent does sell an LDAP authorizer plugin for the Kafka broker that supports LDAP group based mapping of policies.
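As a rough illustration of what topic-level ACLs can and cannot express, the sketch below models literal and prefixed topic rules with allow and deny outcomes. It is a simplified model written for this post, not the SimpleAclAuthorizer implementation; the `TopicAcl` type, the principal names and the topic names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class TopicAcl:
    principal: str          # e.g. "User:alice"
    operation: str          # "READ" or "WRITE"
    topic: str              # full topic name, or a prefix when prefixed=True
    prefixed: bool = False  # False = literal match, True = prefix match
    allow: bool = True      # False models a DENY rule


def _covers(acl: TopicAcl, principal: str, operation: str, topic: str) -> bool:
    if acl.principal != principal or acl.operation != operation:
        return False
    return topic.startswith(acl.topic) if acl.prefixed else topic == acl.topic


def is_authorized(acls: Iterable[TopicAcl], principal: str,
                  operation: str, topic: str) -> bool:
    matching = [a for a in acls if _covers(a, principal, operation, topic)]
    # Any matching DENY wins; with no matching rule at all, access is refused.
    if any(not a.allow for a in matching):
        return False
    return any(a.allow for a in matching)
```

In this model the topic is the smallest unit of control: once two customers are both allowed to read a shared topic such as `orders.status`, nothing in the rule set can restrict each of them to their own records within it.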

3. Enterprise Certificate Management: Not supported.

Both Apache Kafka and Confluent brokers use HTTPS/TLS authentication, but Kafka does not conveniently support blocking authentication for individual brokers or clients that were previously trusted. This means there is no way to manage the life cycle of certificates through revocation.

4. Enterprise Change Management: Not supported.

Configurations and JAAS files are spread throughout the solution on brokers, proxies and Zookeeper nodes, but there is no native Kafka solution for tracking changes to these files. It is up to the administration team to build a solution for this concern. This file-based configuration also means it is not possible to apply RBAC rules to the parts of the configuration each administrator is allowed to manage. Confluent Control Center helps here in that it allows some level of configuration validation, but it cannot back up and restore configurations. It also does not provide a complete authenticated and authorized interface for controlling configuration changes, and instead points you to unauthenticated command-line tools to perform these tasks because “There are still a number of useful operations that are not automated and have to be triggered using one of the tools that ship with Kafka”.

5. Services run as standalone apps or plugins within the Kafka Connect Framework.

Applications can be written to work securely as long as they do not take advantage of data-filtering patterns as described here. If they use this pattern, then centralized authorization policies will be defeated, and you will not be able to tell where the data has been disseminated.

6. REST Clients: Can make requests, but cannot receive events or notifications

The Confluent REST proxy clients can create events such as a new purchase order, but cannot receive per-client notifications, even with polling. This is because filtering is not fine-grained enough to ensure that each consumer receives only its own data and not data meant for other consumers. So even if a back-end service receives an event from a REST request, there is no natural way to assign a reply-to topic address that only the correct client can consume. The application development team will have to build or buy another solution to allow REST consumers to interact with the online store securely while ensuring interoperability with enterprise authentication, authorization, auditing and certificate management systems.

7. REST Proxy: Single Kafka user and loss of granularity in the request

By default, the Confluent REST proxy connects to the Kafka broker as a single user. This means that the Kafka broker sees all requests into the REST proxy as though they came from a single user, and a single ACL policy is applied to all requests regardless of the actual originator. It is possible to purchase a security plugin that extracts the principal from each request and maps it to the principal of the message being sent to Kafka. This principal mapping allows per-user ACLs to be applied by the Kafka broker, but it does mean authenticating twice and authorizing once on every REST request. You will need to keep configurations in sync across proxies and brokers for this to work, and it adds overhead since each publish request requires a connection setup, TLS handshake and authentication to the Kafka broker.

Each REST request URL path is mapped to a Kafka topic based on a matching regex pattern held in a local file. This reduces functionality and strips away the ability to implement fine-grained controls.

For these reasons most people build or buy a proper REST termination interface instead of using the REST proxy.
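The loss of granularity in item 7 can be pictured with a small sketch of regex-based path-to-topic mapping. The mapping table, paths and topic names below are invented for illustration and do not reflect the REST proxy's actual configuration format.

```python
import re
from typing import Optional

# Hypothetical mapping table standing in for the local file described above.
PATH_TO_TOPIC = [
    (re.compile(r"^/orders/.*$"), "orders"),
    (re.compile(r"^/inventory/.*$"), "inventory"),
]


def topic_for_path(path: str) -> Optional[str]:
    """Return the topic for the first pattern that matches the URL path."""
    for pattern, topic in PATH_TO_TOPIC:
        if pattern.match(path):
            return topic
    return None  # no pattern matched, so the request has nowhere to go
```

Under these assumed patterns, `/orders/customer-17/new` and `/orders/customer-42/new` both land on the single topic `orders`. Whatever per-customer detail the URL carried is gone by the time a topic-level ACL is evaluated.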

8. Kafka Connect: Connect Cluster REST interface exposes a new attack vector

The producer and consumer interfaces for the embedded plugins and Kafka broker connections pose no additional security risks, but the Connect Cluster REST interface exposes the inner workings of the Connect framework such that someone could glean sensitive information about other plugins and application credentials. This REST interface cannot be secured via HTTPS or Kerberos, and there are no RBAC-like role policies governing which endpoints can be accessed once a REST connection is made to the Connect Cluster.

9. Zookeeper: Security issues

Until recently, Zookeeper nodes and the interactions with them were not easily securable due to a lack of TLS support. Any deployment that does not use Zookeeper 3.5 or above cannot be secured this way. Apache Kafka 2.5 was the first to use Zookeeper 3.5.

10. Kafka Broker: Performance degradation with TLS enabled

The key to Kafka performance is the broker’s ability to forward events on simple topics in kernel space. Enabling TLS disables this ability, so Kafka can’t deliver events directly from the kernel write cache, which means it spends far more CPU cycles processing every event.

Securing Apache Kafka entails using the Java Cryptography Architecture (JCA) for encryption and decryption, which means encryption behavior and performance will vary based on the underlying JDK. This makes it difficult to know exactly what to expect for performance degradation when enabling encryption, but most tests show about a 50-60% increase in broker CPU utilization for the same message rates and sizes, and some users have seen degradation as high as 80%.
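For capacity planning, the figures in item 10 can be turned into a back-of-the-envelope calculation. The sketch below assumes the quoted increase is relative to the pre-TLS utilization and that load spreads evenly across brokers; the function names and the sample cluster are hypothetical.

```python
import math


def cpu_with_tls(baseline_cpu_pct: float, increase_pct: float) -> float:
    """Per-broker CPU utilization after TLS, given a relative increase."""
    return baseline_cpu_pct * (1 + increase_pct / 100)


def brokers_needed(current_brokers: int, baseline_cpu_pct: float,
                   increase_pct: float, target_cpu_pct: float) -> int:
    """Brokers required to keep per-broker CPU at or below the target."""
    total_cpu = current_brokers * cpu_with_tls(baseline_cpu_pct, increase_pct)
    return math.ceil(total_cpu / target_cpu_pct)
```

Under those assumptions, a broker running at 40% CPU would sit somewhere around 60% to 64% once TLS is enabled, and a six-broker cluster that wants to stay at or below 50% per broker would need about eight brokers. Real numbers depend on the JDK, cipher suites and message sizes, so measure before committing to a plan.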

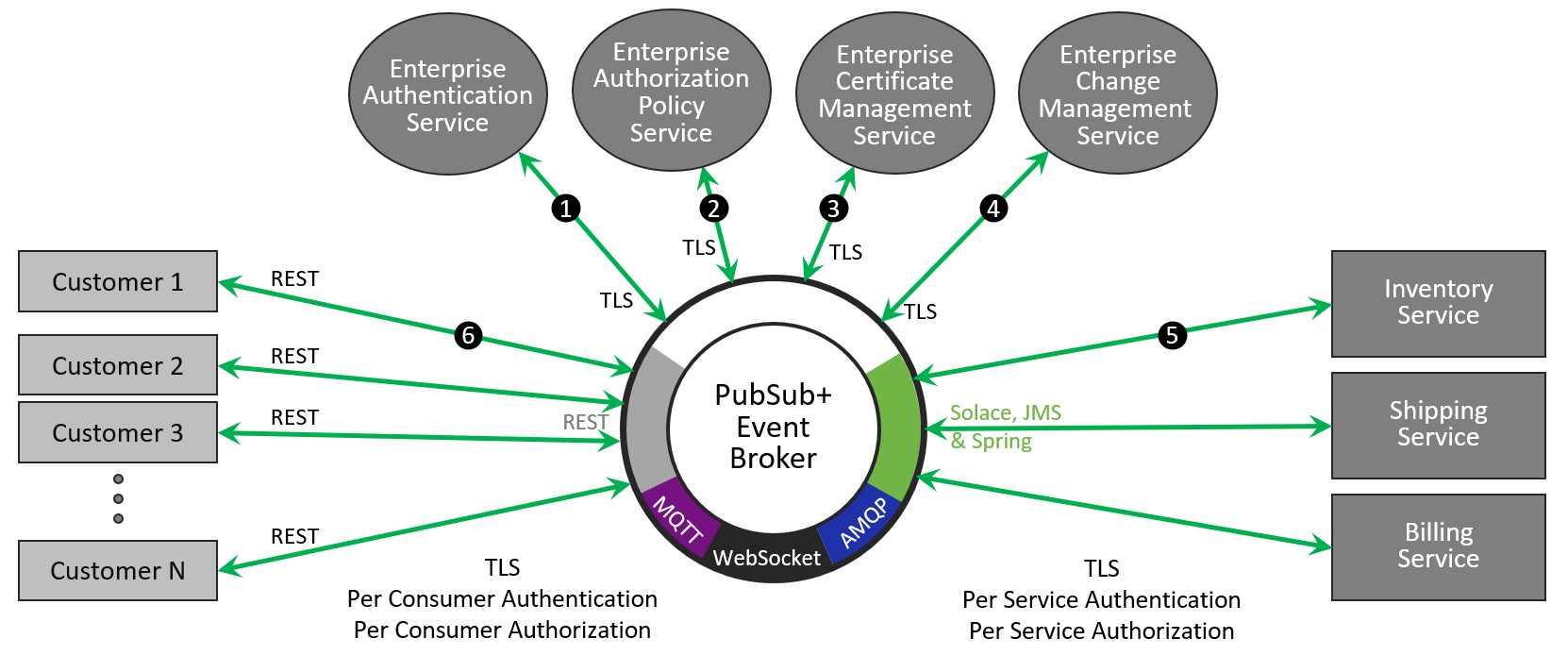

Securing an Event Mesh Built with PubSub+

When you compare securing Apache Kafka to overlaying security on an event mesh built on top of Solace PubSub+, you see the beauty of an integrated solution that has been designed from day one to be the backbone of an enterprise eventing system. The Solace broker supports clients using multiple open protocols like REST, MQTT and AMQP, as well as a Solace protocol and JMS. Each of these clients is authenticated and authorized the same way, with the same interactions with enterprise authentication and authorization tools. The configuration and policies are all stored in an internal database where changes can be controlled through RBAC policies and are traceable via logging infrastructure.

1. Enterprise Authentication: Consistent integration with enterprise authentication services

All protocols, including those on the management interface, uniformly support mutual certificate authentication, Kerberos, RADIUS and LDAP. Integration into enterprise authentication services is easy and secure, the configuration options are comprehensive, and configuration is possible through our WebUI. MQTT, mobile and web clients also support OAuth2 OpenID Connect. From an authentication perspective it does not matter which protocol you use to connect to the Solace broker to produce and consume events: producers and consumers are authenticated in a consistent manner, which ensures security and is easy to administer.

2. Enterprise Authorization: Consistent integration with enterprise authorization services

Administrative users are authorized against role-based (RBAC) policies, and these users can have their roles set via enterprise authorization groups. This shows the biggest difference between what Kafka and Solace mean by multi-tenant. When the concepts for securing Apache Kafka were introduced in Kafka 0.9, the result was described as multi-tenant, but it is really multi-user. With Solace, an administrator can be given administrative permissions to manage a single-tenant application domain (called a VPN), and in that domain they can create named users, queues, profiles and ACLs without worrying about conflicts with other administrators in other domains. All objects within their application domain are namespace separated, much like being in your own Docker container. With Kafka there is no application domain level to administer. All topics and users live in a single flat namespace.

On the producer and consumer side, authorization ACL policies can be managed via enterprise authorization groups, meaning individual producers and consumers can be added to or removed from policy groups, which determines which ACLs are applied. The ACL rules themselves, and the application of ACLs to an application, are uniform and independent of the protocol the application uses to connect with the broker.

3. Enterprise Certificate Management: Able to control lifecycle of certificates

Solace supports TLS with mutual authentication for all protocols, with strong binding of the certificate Common Name (CN) to the security principal used for authorization. Solace supports proper life cycle management of certificates, with the ability to revoke certificates in place.
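The idea of binding a certificate's Common Name to a principal, and refusing certificates that have since been revoked, can be sketched in a few lines. This is not how the Solace broker implements it. The input here is the dictionary shape returned by Python's `ssl.SSLSocket.getpeercert()`, and the in-memory set of revoked serial numbers is a stand-in for a real CRL or OCSP check.

```python
from typing import Optional, Set


def common_name(peer_cert: dict) -> Optional[str]:
    """Extract the subject CN from a getpeercert()-style dictionary."""
    for rdn in peer_cert.get("subject", ()):
        for key, value in rdn:
            if key == "commonName":
                return value
    return None


def principal_for(peer_cert: dict, revoked_serials: Set[str]) -> Optional[str]:
    """Map a validated client certificate to a principal, unless revoked."""
    if peer_cert.get("serialNumber") in revoked_serials:
        return None  # previously trusted, now blocked
    return common_name(peer_cert)
```

The point of the second function is that revocation takes effect at authentication time, without reissuing every other certificate or replacing the trust store.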

4. Enterprise Change Management: Able to push notify configuration changes as well as secure config

Solace configuration data is stored in an internal database that can be manually or automatically backed up periodically, exported for safekeeping, and restored. Configuration change notifications can be pushed to a change management system and are locally journaled for verification. Change logs include who made the change, when they made it, and where they connected from.

5. Application connections: Securely connect and send/receive data

Solace integrates authentication, authorization, accounting and certificate management so applications can securely connect, and send and receive only the data they are entitled to produce and consume. Fine-grained filtering and ACLs allow for strict governance of the flow of data.

6. REST/MQTT application connections: Securely connect and send/receive data

The description written for back-end applications (see 5 above) also applies here because Solace’s broker natively supports open protocols, so it doesn’t rely on an external proxy. Solace’s broker was designed to directly authenticate and authorize hundreds of thousands of connections, allowing a consistent application of these policies across all connection protocols. Solace is able to apply ACLs directly to MQTT topics and subscriptions, and also to apply dynamic subscriptions to allow per-user topics so a single customer can only produce and consume data related to themselves. The brokers also include advanced ACL capabilities, called substitution variables, that prevent applications from generating events that impersonate other users by validating the application’s authentication name within the topics it produces to. Similar capabilities exist on the consumer side.
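A minimal sketch of how substitution variables in item 6 can stop one client from publishing as another is shown below. The variable token `$client-username`, the simplified wildcard rules (`*` for exactly one level, a trailing `>` for one or more levels) and the topic names are assumptions made for illustration, not a reproduction of the broker's matching logic.

```python
from typing import Iterable


def expand(pattern: str, client_username: str) -> str:
    """Replace the substitution variable with the authenticated username."""
    return pattern.replace("$client-username", client_username)


def topic_matches(pattern: str, topic: str) -> bool:
    p_levels = pattern.split("/")
    t_levels = topic.split("/")
    for i, p in enumerate(p_levels):
        if p == ">" and i == len(p_levels) - 1:
            return len(t_levels) > i  # needs at least one remaining level
        if i >= len(t_levels):
            return False
        if p != "*" and p != t_levels[i]:
            return False
    return len(p_levels) == len(t_levels)


def may_publish(allowed_patterns: Iterable[str], client_username: str,
                topic: str) -> bool:
    """True if any ACL pattern, expanded for this client, covers the topic."""
    return any(topic_matches(expand(p, client_username), topic)
               for p in allowed_patterns)
```

With a single rule such as `orders/$client-username/>`, a client authenticated as `alice` may publish to `orders/alice/new` but not to `orders/bob/new`, and no per-user rule has to be provisioned.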

7. Solace Broker: TLS has smaller impact on performance.

Enabling TLS will have some performance impact, but Solace has minimized this by using highly optimized natively compiled C libraries.

Security Documentation

I’ve talked here at a fairly high level for the sake of brevity. If you want more information about any of the capabilities I’ve touched on, I suggest you reference the actual vendor documentation:

- Apache Kafka security feature overview

- Confluent security features overview

- Solace security feature overview

Conclusion

For the operational use cases that run your business, it is essential that you can trust that data has not been corrupted or tampered with, and that it has not been observed by unauthorized users.

Securing Apache Kafka is difficult because its lightweight broker architecture doesn’t provide everything it takes to implement a complete event system, so you must surround the broker with complementary components like Zookeeper, proxies and data-filtering applications. If you are using Kafka for analytics-centric use cases, the security models around these components might be enough, but for operational use cases, these components, with their varying ability to be integrated with enterprise security services, will probably not meet your security requirements.

Solace event brokers were designed to be multi-user, and have evolved to be truly multi-tenant and multi-protocol. This has provided a platform for integrating enterprise security services in a consistent way that lets you more easily and completely secure your system and the information that flows through it.

Specifically:

- Securing Apache Kafka is complex because of the multiple components needed to build out a complete solution. Solace makes securing an eventing solution easy across all channels (web, mobile and IoT clients as well as back-end services) and for all users, both applications and administrators.

- Kafka does not provide the level of fine-grained authorization needed in purchase order and other operational systems, so another set of third-party tools will need to be added to make a complete solution. Solace’s fine-grained ACLs allow simplified per-client authentication and authorization.

- Kafka is not optimized for encryption, and on some links encryption is not supported. Solace is optimized for end-to-end encrypted connections.

In the next post in this series, I’ll explain the importance of replication in operational use cases.


Ken Barr
