When dealing with any technology, one of the most important things to understand is its terminology. Every technology has its own set of words and phrases that define what it is and how it operates, and event-driven technology is no different. If you work with event-driven technology, you’ll often hear terms like “EDA,” “integration,” “event broker,” and “iPaaS” come up in conversation. I am frequently asked “What is the difference between event-driven architecture and event-driven integration?” so I thought it was a good time to publish an answer!

What is Event-Driven Architecture?

Event-driven architecture (known in the industry as “EDA”) is a software design pattern in which decoupled applications asynchronously publish and subscribe to events via an event broker. EDA focuses on the loosely coupled, real-time interplay of publishers, event brokers, and consumers, so that the business value becomes the star of the show. If you use events to communicate between the microservices that make up a new application, whether it runs entirely on-premises, entirely in the cloud, or in a hybrid deployment, that is EDA.
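To make the publisher/broker/consumer relationship concrete, here is a minimal in-memory sketch. `InMemoryBroker` and `Event` are hypothetical names for illustration only; this is not Solace’s or any other vendor’s API, and a real broker adds persistence, network protocols, and delivery guarantees. The point it shows is decoupling: the publisher only names a topic and never learns who consumes the event, and each subscriber gets its own queue so it can read at its own pace.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import Any


@dataclass
class Event:
    topic: str
    payload: dict[str, Any]


class InMemoryBroker:
    """Hypothetical toy broker: fans each published event out to every
    subscriber queue registered on that topic. Not a real broker API."""

    def __init__(self) -> None:
        self._queues: dict[str, list[deque[Event]]] = defaultdict(list)

    def subscribe(self, topic: str) -> deque[Event]:
        # Each subscriber gets its own queue, so consumers read independently.
        q: deque[Event] = deque()
        self._queues[topic].append(q)
        return q

    def publish(self, event: Event) -> int:
        # The publisher only knows the topic, never the consumers.
        subscribers = self._queues.get(event.topic, [])
        for q in subscribers:
            q.append(event)
        return len(subscribers)  # number of subscriber queues that received a copy


# Usage: two independent services subscribe to the same topic.
broker = InMemoryBroker()
billing = broker.subscribe("orders/created")
shipping = broker.subscribe("orders/created")
broker.publish(Event("orders/created", {"order_id": 42}))
```

Adding a third consumer later requires no change to the publisher, which is the loose coupling EDA relies on.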

In a truly event-driven system, teams building services must assume that they are responsible for data transformation, schema validation, and advanced filtering of payload data. They are also responsible for communicating with the event broker over a supported protocol, which is either vendor-specific (like Solace’s SMF for Solace or the Kafka wire protocol for Confluent) or industry-standard (like JMS or MQTT).
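As an illustration of that burden, the sketch below shows the kind of logic each consuming service would carry itself in strict EDA: decoding the payload, validating it against a schema, filtering on content, and transforming it into the service’s internal shape. The schema, field names, and `handle_raw_event` function are hypothetical, and broker connectivity is deliberately left out.

```python
import json
from typing import Any, Optional

# Hypothetical schema for an "order created" event: field name -> required type.
ORDER_SCHEMA: dict[str, type] = {"order_id": int, "region": str, "amount": float}


def validate(payload: dict[str, Any], schema: dict[str, type]) -> list[str]:
    """Return a list of problems; an empty list means the payload is valid."""
    problems = []
    for name, expected in schema.items():
        if name not in payload:
            problems.append(f"missing field: {name}")
        elif not isinstance(payload[name], expected):
            problems.append(f"{name}: expected {expected.__name__}")
    return problems


def handle_raw_event(raw: bytes, wanted_region: str) -> Optional[dict[str, Any]]:
    """Decode, validate, filter, and transform one raw message body."""
    payload = json.loads(raw)                # decode the wire payload
    if validate(payload, ORDER_SCHEMA):      # schema validation
        return None                          # a real service might dead-letter it
    if payload["region"] != wanted_region:   # content-based filtering
        return None
    # Transformation into the shape this service uses internally.
    return {"id": payload["order_id"], "total_cents": round(payload["amount"] * 100)}
```

Multiply this by every consuming service and it becomes clear why strict EDA asks a lot of development teams.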

Strict EDA is really only possible in greenfield scenarios (with few or no legacy applications and services) and with mature development teams that can manage the complexities of EDA without supporting tooling.

What is Event-Driven Integration?

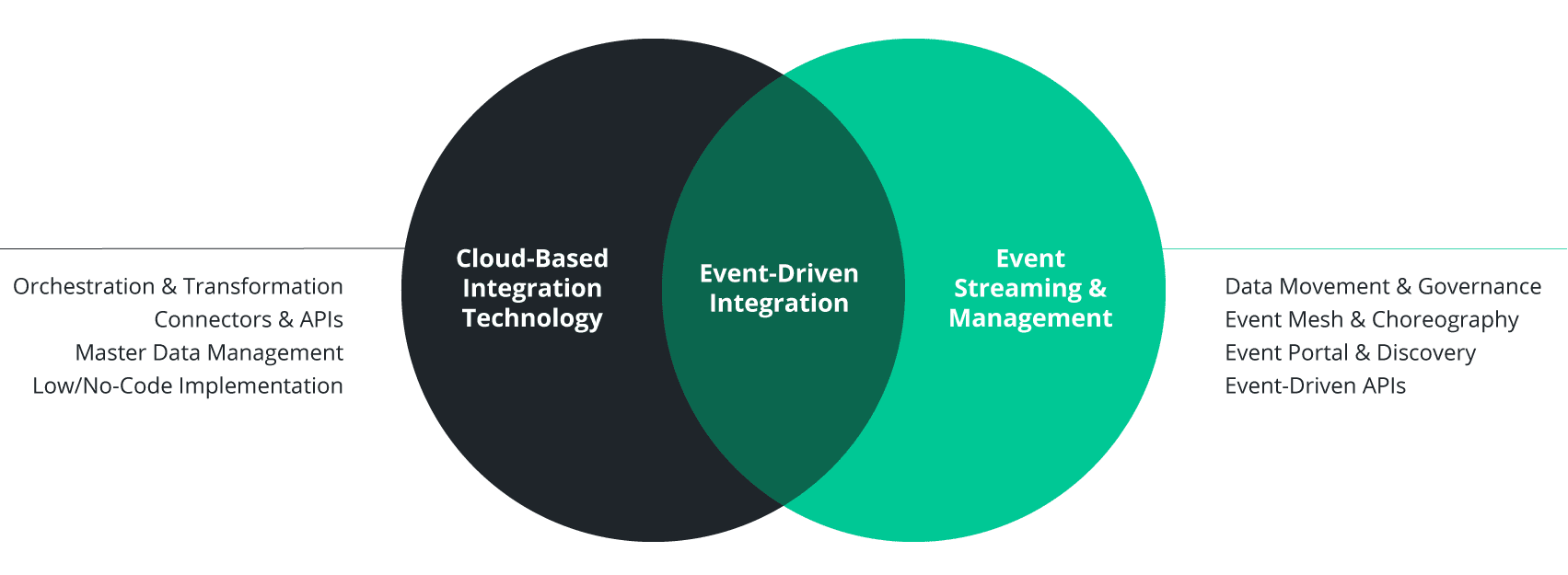

Integration in the synchronous world typically relies on point-to-point polling, request-reply, and batch-based data movement, which most integration platform as a service (iPaaS) offerings, like Boomi and MuleSoft, can handle. In the asynchronous world, event-driven integration focuses on integrating data from multiple applications within an event-driven ecosystem. Integrations (application, data, B2B, process automation) are triggered and supported by event-driven data movement patterns (publish-subscribe, queuing, streaming) via an event broker.
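The three data movement patterns differ mainly in who receives a message and whether it can be re-read. The toy classes below are a hypothetical sketch of those delivery semantics only; they are not modeled on any particular broker, and the round-robin dispatch in `WorkQueue` is an assumption for illustration (real brokers use their own dispatch policies).

```python
from itertools import cycle


class Topic:
    """Publish-subscribe: every subscriber receives its own copy."""

    def __init__(self) -> None:
        self.subscribers: list[list[str]] = []

    def subscribe(self) -> list[str]:
        inbox: list[str] = []
        self.subscribers.append(inbox)
        return inbox

    def publish(self, msg: str) -> None:
        for inbox in self.subscribers:
            inbox.append(msg)


class WorkQueue:
    """Queuing: each message goes to exactly one of the competing consumers
    (round-robin in this sketch)."""

    def __init__(self, consumers: list[list[str]]) -> None:
        self._next = cycle(consumers)

    def send(self, msg: str) -> None:
        next(self._next).append(msg)


class Stream:
    """Streaming: an append-only log; each consumer tracks its own offset
    and can re-read history from any position."""

    def __init__(self) -> None:
        self.log: list[str] = []

    def append(self, msg: str) -> int:
        self.log.append(msg)
        return len(self.log) - 1  # offset of the new record

    def read_from(self, offset: int) -> list[str]:
        return self.log[offset:]
```

Publish-subscribe suits fan-out to many interested applications, queuing suits load-balanced work, and streaming suits consumers that need to replay history.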

Event-driven integration allows one application to take a subset of what it does and share it with other applications. You need to consider that data in one application may not look the same as data in another. The applications that need to share data are likely a mix of new and old, each built by a different team, with different ways to store and transmit data and their own set of external interfaces. You also need to account for the fact that the way one application accesses its data may differ from the way another does. One application may use REST, another may use a vendor-specific API, while another may simply store a nightly batch file on a shared drive. Additionally, you may need to aggregate unrelated data from one application for a task in another.
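Here is a small sketch of the “data doesn’t look the same” problem. It assumes two hypothetical applications: one exposes customers over REST with camelCase fields, the other drops a nightly CSV with different column names. Both are mapped into one canonical event shape; all field and column names here are invented for illustration.

```python
import csv
import io
from typing import Any, Iterator


def from_rest(body: dict[str, Any]) -> dict[str, Any]:
    # Hypothetical app A: REST response with camelCase fields and numeric IDs.
    return {"customer_id": str(body["customerId"]), "email": body["emailAddress"].lower()}


def from_nightly_batch(csv_text: str) -> Iterator[dict[str, Any]]:
    # Hypothetical app B: nightly CSV with its own column names and stray spaces.
    # Each row becomes one event in the same canonical shape as app A's.
    for row in csv.DictReader(io.StringIO(csv_text)):
        yield {"customer_id": row["CUST_NO"].strip(), "email": row["MAIL"].strip().lower()}
```

Once both sources emit the same shape, downstream consumers no longer need to know which application the data came from or how it was originally accessed.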

All these considerations translate into classic integration requirements: data transformation, application connectivity, data enrichment, and schema validation. Event-driven integration uses supporting technologies such as iPaaS, microservices frameworks, management capabilities, and more to expand on EDA and provide standardized, enterprise-grade solutions to integration challenges.
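One common way to organize these requirements is as a chain of steps applied to each event. The sketch below composes transformation, enrichment, and validation; the step names, fields, and the `CUSTOMER_TIERS` lookup table are hypothetical, and application connectivity is left out. An iPaaS typically provides such steps as configurable, managed components rather than hand-written functions.

```python
from typing import Any, Callable, Optional

Event = dict[str, Any]
Step = Callable[[Event], Optional[Event]]  # a step returns None to drop the event

# Hypothetical reference data held by another application, used for enrichment.
CUSTOMER_TIERS = {"17": "gold", "18": "silver"}


def transform(e: Event) -> Optional[Event]:
    # Map the source shape to a canonical shape.
    return {"customer_id": str(e["custId"]), "amount": float(e["amt"])}


def enrich(e: Event) -> Optional[Event]:
    # Add data the source application does not have.
    return {**e, "tier": CUSTOMER_TIERS.get(e["customer_id"], "standard")}


def validate_amount(e: Event) -> Optional[Event]:
    # Reject events that break a simple business rule.
    return e if e["amount"] >= 0 else None


def run_pipeline(event: Event, steps: list[Step]) -> Optional[Event]:
    for step in steps:
        result = step(event)
        if result is None:
            return None  # stop processing as soon as a step drops the event
        event = result
    return event
```

For example, `run_pipeline({"custId": 17, "amt": "12.5"}, [transform, enrich, validate_amount])` yields a canonical event tagged with the `"gold"` tier.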

So, what’s the difference?

In essence, event-driven integration is a specific application of EDA: applying EDA to the requirements that arise when solving integration problems. You can have EDA without event-driven integration, but you can’t have event-driven integration without EDA.

Explore other posts from category: For Architects

Rey Riel