From Main Street to Wall Street and their international equivalents around the world, financial institutions of all kinds are working hard to improve efficiency, regulatory compliance and customer experience.

The financial services sector is remarkably diverse, and although the challenges faced by different kinds of banks, capital market participants, insurance companies and payment processors vary, they all need to get real-time data flowing between back-office, front-office and customer-facing systems across geographies and operating environments.

In recent years many of them have turned to Apache Kafka – an open source tool initially built by LinkedIn’s engineers to log data about activity within their platform. Its functionality has evolved since then, to the point that many try to use it as the foundation of their event streaming. That’s not always the right path though…

“Apache Kafka is not designed to be an enterprise message broker acting as the central nervous system for streaming event data throughout your organization.” – David Menninger, SVP and Research Director, Ventana Research

Here I’ll explain four common challenges enterprise IT teams face when implementing Kafka, how you could address them, and how you should address them.

1. Troublesome Troubleshooting

- Challenge: You’re struggling to troubleshoot outages and identify instances of performance degradation in your Kafka infrastructure

- Impact: Your business, whether that’s trades, transfers, payments, risk management or post-trade activities, grinds to a halt, which can lead to regulatory penalties, fees for SLA violations and a damaged reputation with your customers and in the marketplace.

- What you could do: Hire more people to sift through logs and add more tools like Datadog to monitor your Kafka clusters

- What you should do: Choose a system that lets you govern and track the data lineage of your event streams even as they span hybrid and multi-cloud environments.
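One common signal of the performance degradation described above is consumer lag: how far a consumer group's committed offset trails the end of each partition. The sketch below is only an illustration of that arithmetic, not a monitoring tool. The function names, topic names, offsets and alert threshold are all hypothetical, and in practice the offsets would come from your Kafka tooling rather than hard-coded dictionaries.

```python
from typing import Dict, List, Tuple

# A partition is identified by (topic, partition number).
Partition = Tuple[str, int]


def consumer_lag(end_offsets: Dict[Partition, int],
                 committed: Dict[Partition, int]) -> Dict[Partition, int]:
    """Return per-partition lag: log end offset minus committed offset.

    Simplification: a partition with no committed offset is treated as if
    the consumer were at offset 0, i.e. everything is still unread.
    """
    return {
        tp: max(end - committed.get(tp, 0), 0)
        for tp, end in end_offsets.items()
    }


def lagging_partitions(lag: Dict[Partition, int],
                       threshold: int) -> List[Partition]:
    """List partitions whose lag exceeds a (hypothetical) alert threshold."""
    return sorted(tp for tp, behind in lag.items() if behind > threshold)


# Hypothetical snapshot; topic names and offsets are made up for illustration.
end_offsets = {("trades", 0): 1500, ("trades", 1): 1480, ("payments", 0): 900}
committed = {("trades", 0): 1495, ("trades", 1): 700}

lag = consumer_lag(end_offsets, committed)
alerts = lagging_partitions(lag, threshold=100)
print(alerts)
```

Even a check this simple has to be run, tuned and interpreted per topic and per consumer group, which is part of why troubleshooting effort tends to grow with the size of the Kafka estate.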

2. Counting on Kafka Experts

- Challenge: You’re facing a backlog of compliance and feature requests, and they all require the direct involvement of your Kafka team

- Impact: Slower release cadence and unwanted bureaucracy

- What you could do: Hire more Kafka Ops people to perform tasks like re-balancing topic partitions and updating the allocation of topics.

- What you should do: Implement an event management solution that lets you catalog all of your business events and frees your team from menial tasks like topic allocation and partition management. This democratizes your events so application development teams and partners can securely use services and accelerate innovation and delivery
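To make the "menial tasks" above more concrete, here is a rough sketch of the kind of bookkeeping that partition allocation involves: spreading each partition's replicas across brokers. It is a deliberately simplified round-robin scheme for illustration, not Kafka's actual assignment algorithm, and the function name, broker IDs and counts are assumptions.

```python
from typing import Dict, List


def assign_partitions(num_partitions: int,
                      brokers: List[int],
                      replication_factor: int) -> Dict[int, List[int]]:
    """Spread partition replicas across brokers in round-robin order.

    Simplified illustration only; real clusters also weigh racks,
    disk usage and existing placement when moving partitions.
    """
    if replication_factor > len(brokers):
        raise ValueError("replication factor cannot exceed broker count")
    plan: Dict[int, List[int]] = {}
    for partition in range(num_partitions):
        # Start each partition on the next broker, then wrap around
        # so that no broker holds two replicas of the same partition.
        plan[partition] = [
            brokers[(partition + replica) % len(brokers)]
            for replica in range(replication_factor)
        ]
    return plan


# Hypothetical cluster: three brokers, four partitions, two replicas each.
plan = assign_partitions(num_partitions=4, brokers=[1, 2, 3],
                         replication_factor=2)
for partition, replicas in plan.items():
    print(partition, replicas)
```

Every time a broker is added or removed, or a topic grows, a plan like this has to be recomputed and applied, which is the sort of recurring work that ends up queued behind the Kafka team.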

3. Popularity-Based Pervasiveness

There’s an old saying “If the only tool you have is a hammer, you will start treating all your problems like a nail.”

- Challenge: Kafka has become so popular with your developers that you’re afraid it’s being used to meet all of your event streaming needs, even those it’s not well suited for.

- Impact: As Kafka becomes entrenched in your organization, it’s being used to address use cases it isn’t well suited for.

- What you could do: Add more resources and tools to keep your existing system from unraveling, and to fill the gaps between what Kafka does and what you really need as new use cases and requirements emerge.

- What you should do: Build an event mesh that lets you manage your Kafka event streams while still being able to use the best event broker or integration tool for whatever use cases come your way.

4. Surprising Cost and Complexity

Longtime Sun Microsystems CEO Scott McNealy once said “Open source is free like a puppy is free,” and many find that applies in spades to Kafka.

- Challenge: It’s expensive to run and scale your Kafka system because of all the supporting components it needs, such as ZooKeeper instances, proxies and schema registries.

- Impact: The inability to quickly scale up to handle seasonal or volatility-driven bursts of activity can lead to failed trades, slower apps and a poor user experience

- What you could do: Reallocate budget and resources from other projects to over-provision ZooKeeper instances and other components, or try out a managed Kafka service.

- What you should do: Build your apps on a system that can easily scale horizontally or vertically as needed, and supports a wide range of APIs and protocols so you can dynamically shift workloads into the cloud.

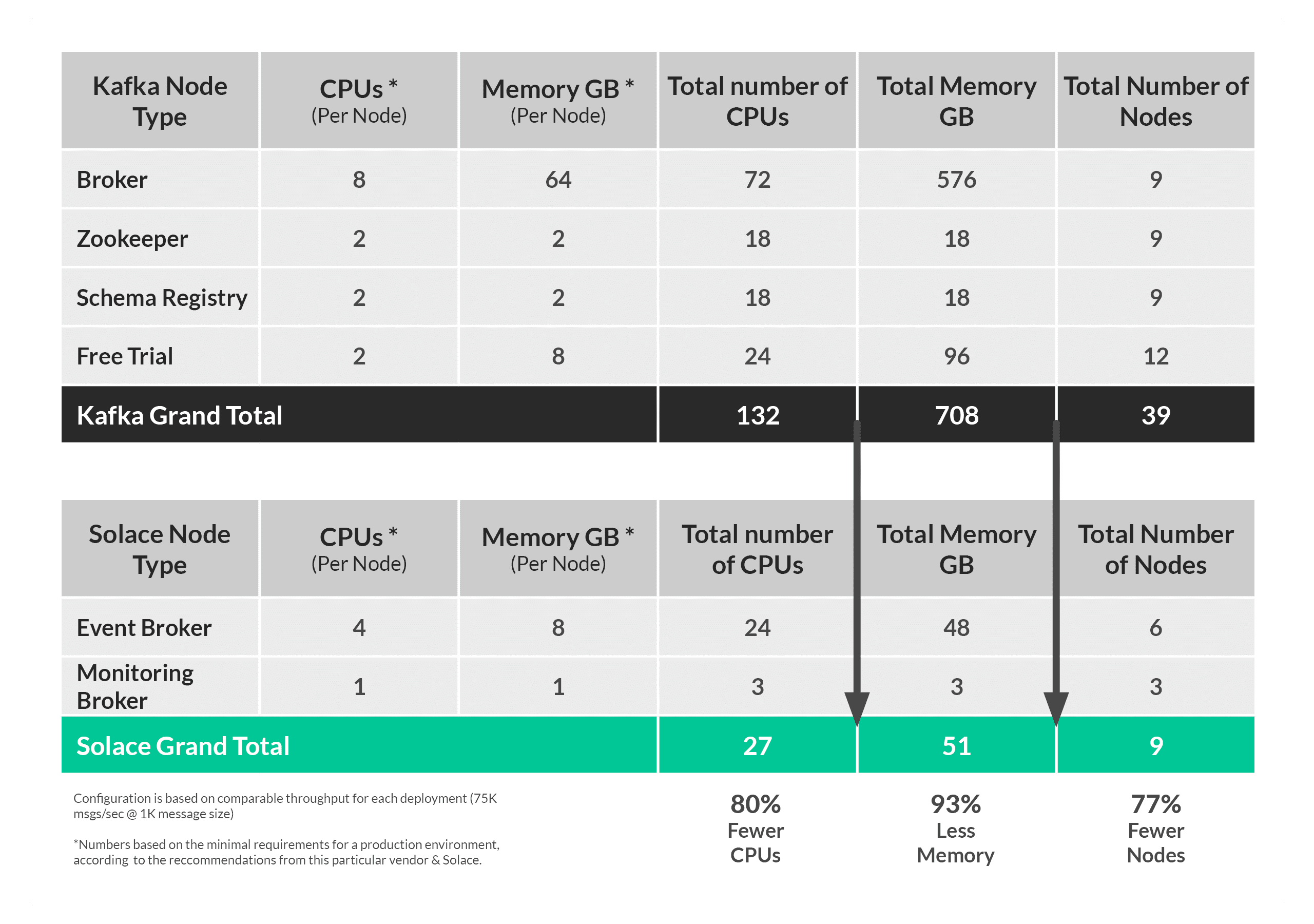

Any IT pro worth their salt knows that open source tools aren’t really “free” when they’re being used to meet real-world enterprise computing requirements. You already know (or will soon realize) that you can’t do it all yourself, so you might look for a “managed” flavor. While cloud providers come with the obvious risk of lock-in, a publicly listed managed Kafka provider creates unnecessary bloat in your infrastructure. Here is an example of how that looks for size and price.

Summary and Next Steps

The bottom line is that it’s difficult and expensive to get Kafka to meet the scalability and management needs of most enterprises. As such it can quickly evolve from being an enabler of efficiency and innovation to being a burden that hampers your ability to innovate, make smarter decisions, and better service your customers.

Solace has the tools and experience to help you avoid these pains, so consider Solace PubSub+ Platform to leave your Kafka-rooted challenges behind and innovate faster and better.

Contact us to book a consultation and start to evaluate your Kafka estate so you can chart a path to Kafka right-sizing at your business.


Gaurav Suman
