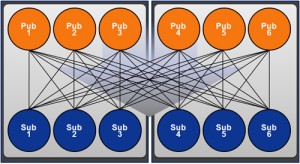

Much has been written about algorithmic traders squeezing microseconds of latency out of their trading infrastructure, but latency-obsessed high-frequency traders have sharpened their pencils and now talk in nanoseconds. They can think at these speeds because they run multiple applications, such as feed handlers and algo engines, on different cores of powerful multi-core servers, so those applications can communicate through shared memory instead of over a network.

Architecturally, the applications work just as they would if they were sharing information across a network, but without the latency that the network adds. To move data between applications running on different cores, developers can either write their own inter-process communication (IPC) protocols or keep familiar messaging semantics while using IPC in place of network protocols.

2009: Introducing Solace IPC

In September 2009, we announced a shared-memory IPC protocol transport for our client-side API that provides inter-core messaging capability for high-frequency trading application developers. We became the first messaging vendor to demonstrate IPC with average latency under a microsecond when we published a report showing average latency of 618 nanoseconds at a rate of one million messages per second.

2010: New Benchmarks on Solace IPC and Cisco Unified Computing System

Fast-forward a year, and we figured it was time to update our numbers. During the summer, we worked with Cisco to benchmark our IPC on their latest Unified Computing System (UCS) servers.

All the gory details are available in a new white paper we published with Cisco on these benchmarks. We believe these are the fastest IPC benchmarks ever run by a wide margin, improving on our own record results from a year ago.

Truth in Advertising

While I am on the topic of benchmarks, I want to revisit a pet peeve I’ve blogged about in the past: benchmark transparency. The kinds of benchmarks most vendors publish give marketing people (like me) a bad name. Worse, they undermine the credibility of all benchmarks. Many vendors cherry-pick a few data points that make them look good and leave out the rest of the detail. They may publish a lowest-latency number and a highest-throughput number but fail to mention that these came from two different tests, each optimized for one metric.

Another favorite tactic is to publish only average latencies and “forget” to show the 99th percentile data if the jitter results are unflattering. Or to select the message sizes that make the results look good and cut the chart off where the results peak, before they reverse direction. In general, I suggest you approach any vendor-produced benchmark with skepticism, including ours. The published results tell a story based on which data is included, but what can you infer from what is left out?
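
To make the average-versus-99th-percentile point concrete, here is a minimal Python sketch. The function names, the choice of the nearest-rank percentile method, and both sets of latency samples are hypothetical illustrations, not data from the Solace/Cisco white paper. It shows how two runs with the same average latency can have very different tails.

```python
import statistics


def nearest_rank_percentile(samples, pct):
    """Return the smallest sample such that at least pct% of samples are <= it.

    Uses the nearest-rank method with an integer pct in (0, 100].
    """
    if not samples or not 0 < pct <= 100:
        raise ValueError("need at least one sample and 0 < pct <= 100")
    ordered = sorted(samples)
    rank = (pct * len(ordered) + 99) // 100  # ceil(pct * n / 100), 1-based rank
    return ordered[rank - 1]


def summarize(samples_ns):
    """Report average and 99th percentile latency together, as the post argues."""
    return {
        "mean_ns": statistics.fmean(samples_ns),
        "p99_ns": nearest_rank_percentile(samples_ns, 99),
    }


# Two hypothetical runs of 1,000 samples each (synthetic numbers, not measured data).
steady = [600] * 1000                  # every message takes 600 ns
jittery = [500] * 980 + [5500] * 20    # mostly faster, with a 2% slow tail

print(summarize(steady))   # {'mean_ns': 600.0, 'p99_ns': 600}
print(summarize(jittery))  # {'mean_ns': 600.0, 'p99_ns': 5500}
```

Reporting only `mean_ns` would make the two runs look identical; the p99 value exposes the jitter. Other percentile definitions (for example, linear interpolation between ranks) can give slightly different values on small sample sets.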

Our philosophy is to earn your respect and trust by giving you all the data, the good and the bad. In this IPC white paper, you’ll find latency and rate published together. Average latency and 99th percentile published together. Where results peak, and where they reverse direction. How the server was configured and more.

Have more questions? Please ask us and we’ll tell you what we’ve learned. Serious about IPC and multi-core development? We can help you get results very similar to these in your own environment. A benchmark document should help you better understand performance characteristics; if it doesn’t, it isn’t helpful in my book.

In closing, I’d like to extend a special thanks to Cisco for inviting us into their labs to give our IPC product a workout, and for sharing their expertise in leading-edge server technologies.

Solace