Kafka has become the most prominent log broker and stream processing solution for message delivery in analytics use cases. Analytics implementations are evolving toward more distributed, hybrid-cloud-based solutions that are integrated with operations, span from factory to edge, and involve many thousands or even millions of connected devices.

As analytics becomes more global and more interconnected with operations, it runs into several limitations of Kafka, such as:

- Inefficient cluster-to-cluster message replication that doesn’t respect message order

- Request-reply restrictions that, combined with in-order delivery, often make Kafka operationally inefficient

- Lack of command and control support

- No support for message exchange patterns that rely on queuing

- Limited alignment to microservices architectures

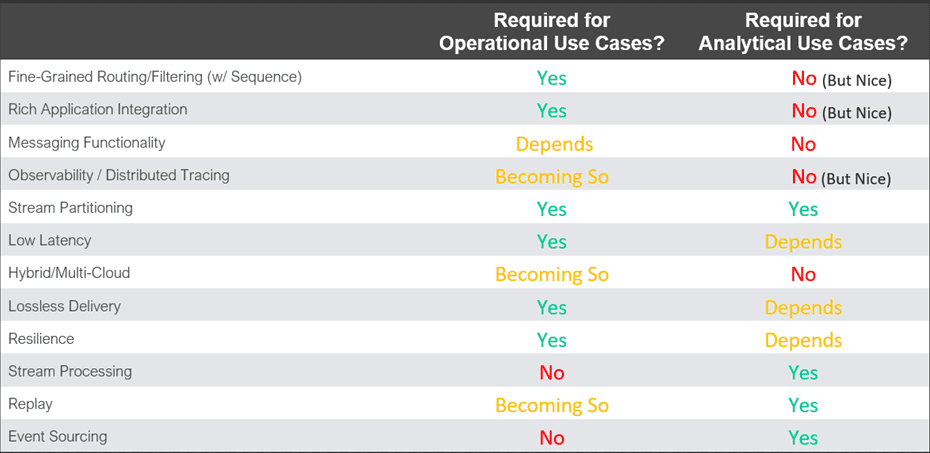

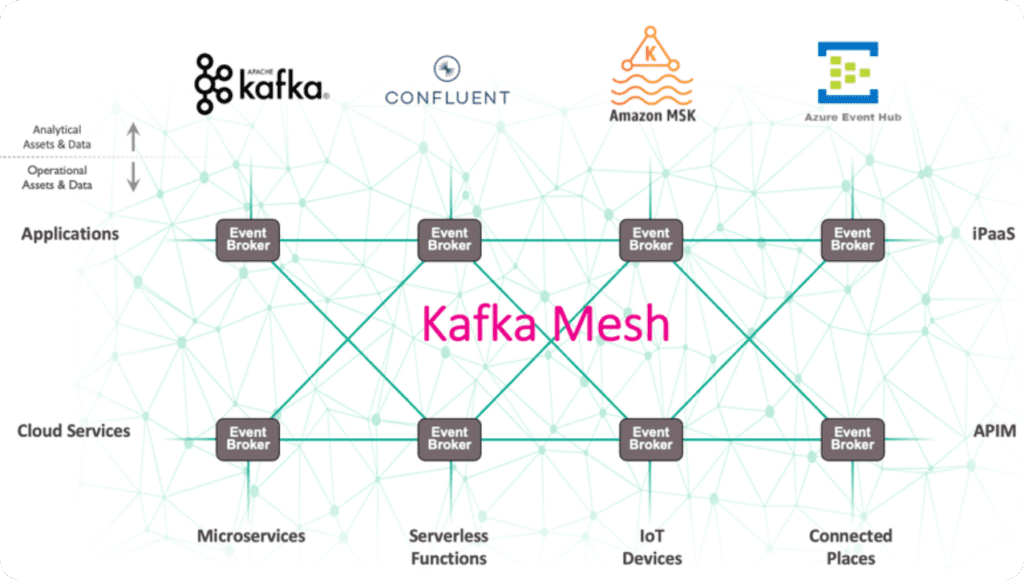

By building a Kafka mesh, you can make your Kafka infrastructure work well with enterprise operations. Comparing the historical requirements of event brokers and message brokers from both the operational and analytical perspectives makes it clear that combining the two offers a unified approach to these challenges.

A Kafka mesh links a Solace infrastructure to your Kafka infrastructure, allowing full integration of your analytics and operational environments!

Common Challenges when Replicating Kafka Messages Across Clusters

Several technologies can be used to replicate Kafka messages, but each of them brings inefficiencies and deficiencies, including:

- Unsophisticated filtering – While these technologies support filtering by Kafka topic, customers often want finer-grained filtering, for example to route messages based on metadata that indicates the destination region. Without intelligent filtering, unwanted events must cross your expensive WAN only to be discarded at the destination.

- Unidirectional or publish-to-local topologies – These technologies support unidirectional, two-cluster bidirectional, or many-to-one aggregation (publish-to-local) topologies, but most customers want to control the direction of replication across a meshed cluster topology. Being unable to define your own topology, and being forced into unidirectional or complicated aggregation topologies that pass messages through extra clusters before delivery, adds to your inter-cluster WAN costs.

- No or limited order preservation – While some replication technologies support order preservation, configuring it often reduces performance, is difficult to maintain, or adds licensing costs. For instance, it may require an aggregation cluster approach, or a Kafka Connect infrastructure that spreads replication across multiple systems working in parallel for performance. Many applications rely on in-order message delivery; for them, out-of-order delivery can require costly manual remediation or simply breaks the application.

- Limited multi-flavor support – Each commercial or open-source flavor of Kafka has its own replication technology, which either does not work with, or is not supported for, clusters of a different flavor.
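The order-preservation problem is easiest to see in a toy model. The Python sketch below assumes that a single ordered stream for one key is spread round-robin across parallel workers with different latencies. This is a hypothetical setup, not a description of how any particular replication tool assigns work, and it compares the result with a single ordered path. The names `replicate_in_parallel`, `replicate_single_path`, and `out_of_order_keys` are illustrative only.

```python
# Toy model of order loss when one ordered stream is fanned out to parallel
# workers. Hypothetical: not how any specific replication tool schedules work.
import heapq
from dataclasses import dataclass


@dataclass(frozen=True)
class Message:
    seq: int  # position in the source stream
    key: str  # ordering key, e.g. an order ID


def replicate_in_parallel(messages, worker_latency_ms):
    """Assign messages round-robin to workers; return them in arrival order.

    Each worker forwards its messages one after another, so every message
    waits for the ones assigned to that worker before it. Messages from
    different workers are merged only by arrival time at the destination.
    """
    arrivals = []  # min-heap of (arrival_time, seq, message)
    worker_clock = [0] * len(worker_latency_ms)
    for i, msg in enumerate(messages):
        w = i % len(worker_latency_ms)
        worker_clock[w] += worker_latency_ms[w]
        heapq.heappush(arrivals, (worker_clock[w], msg.seq, msg))
    return [heapq.heappop(arrivals)[2] for _ in range(len(arrivals))]


def replicate_single_path(messages):
    """One ordered path (for example, a single FIFO queue): order is kept."""
    return list(messages)


def out_of_order_keys(delivered):
    """Return the keys for which a message arrived after a later one."""
    highest_seen = {}
    broken = set()
    for msg in delivered:
        prev = highest_seen.get(msg.key, -1)
        if msg.seq < prev:
            broken.add(msg.key)
        highest_seen[msg.key] = max(prev, msg.seq)
    return broken


if __name__ == "__main__":
    msgs = [Message(i, "order-42") for i in range(6)]
    parallel = replicate_in_parallel(msgs, worker_latency_ms=[10, 3])
    print([m.seq for m in parallel])  # [1, 3, 5, 0, 2, 4]
    print(out_of_order_keys(parallel))  # {'order-42'}
    print(out_of_order_keys(replicate_single_path(msgs)))  # set()
```

Keeping order in the parallel case requires an extra constraint, such as pinning every message for a key to the same worker, and that constraint gives up some of the parallelism the workers were added for.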

How Solace Improves Message Replication Between Kafka Clusters

Solace Event Broker excels at event routing in operational use cases, with support for fine-grained filtering, in-order message delivery, mesh topologies, and integration with a wide variety of technologies, including cloud-native services and multiple Kafka flavors. Let’s look at how building a Kafka mesh with Solace Platform can help you replicate Kafka messages more effectively.

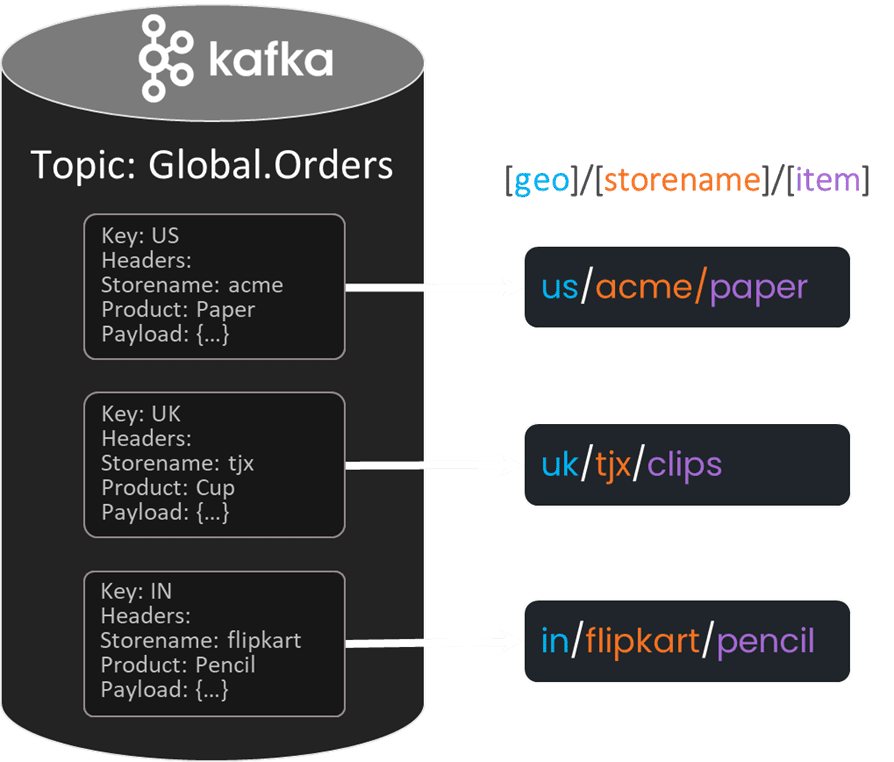

- Kafka Bridge’s Metadata Mapping – Solace Event Broker’s integrated Kafka bridge lets you dynamically map Kafka message metadata to Solace message metadata and vice versa, including into the fine-grained hierarchical structure of Solace topics.

- Solace Topics – Solace topics can filter on more than the Kafka topic, including any metadata you choose to put in the topic. Each destination Kafka cluster has one or more Solace queues that subscribe to a variety of Solace topics. A message may be subscribed to by multiple clusters (replication), a single cluster (routing), or none (filtering). This lets the publisher either identify, directly or indirectly, which clusters a message should be replicated to (given prior knowledge of the downstream clusters’ queue subscriptions), or describe attributes of the message that downstream Kafka clusters may want to subscribe to (e.g. destination zones).

- Kafka Mesh – Solace Event Brokers can be deployed with Kafka senders (each of which transmits events to a Kafka cluster) or Kafka receivers (each of which receives messages from topics on a particular Kafka cluster) to control the direction of message delivery. Further, each Kafka sender can subscribe to, and each Kafka receiver can publish to, the full Solace infrastructure. That infrastructure can comprise one broker or many, each supporting up to 200 bridges (each a Kafka sender or receiver), allowing full mesh connectivity.

- Exclusive Queues – As discussed above, Solace Event Broker uses queues to funnel messages from all Kafka and Solace sources into a given Kafka cluster. Using an exclusive queue for this purpose means that all messages the broker receives for that cluster are handled in strict first-in, first-out (FIFO) order.

- Kafka Bridge’s Kafka API – Solace Event Broker’s integrated Kafka bridge uses the ubiquitous Kafka client API. Because the broker acts as an ordinary client of the Kafka cluster, rather than as a special replication component or a log database that relies on Kafka Connect, it can communicate directly with all major flavors of Kafka and avoid the cost overhead and message-order limitations of running Kafka Connect on every source and destination cluster. Perhaps most importantly, Solace officially supports the Kafka bridge with all major flavors of Kafka.
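To make the replication, routing, and filtering behavior described under Solace Topics concrete, here is a minimal Python sketch. It models three things, all simplified and hypothetical. The first is a mapping from Kafka metadata to a topic of the form `kafka/<source-cluster>/<kafka-topic>/<region>/<key>`. The second is a simplified version of Solace's `*` and `>` wildcard matching. The third is one in-memory FIFO queue per destination cluster, standing in for an exclusive queue. The topic scheme, the `dest-region` header, the cluster names, and the helper functions are assumptions for illustration. This is not the Kafka bridge's actual configuration or API.

```python
# Hypothetical sketch: Kafka metadata -> hierarchical topic -> per-cluster FIFO
# queues. The topic scheme, header name, and cluster names are illustrative.
from collections import deque
from dataclasses import dataclass, field


def topic_matches(subscription: str, topic: str) -> bool:
    """Simplified Solace-style matching on '/'-separated levels.

    '*' as a whole level matches exactly one level; 'abc*' matches a level
    starting with 'abc'; '>' as the last level matches one or more levels.
    """
    sub_levels = subscription.split("/")
    topic_levels = topic.split("/")
    for i, sub in enumerate(sub_levels):
        if sub == ">" and i == len(sub_levels) - 1:
            return len(topic_levels) > i  # at least one level must remain
        if i >= len(topic_levels):
            return False
        if sub.endswith("*"):
            if not topic_levels[i].startswith(sub[:-1]):
                return False
        elif sub != topic_levels[i]:
            return False
    return len(sub_levels) == len(topic_levels)


def to_solace_topic(source_cluster: str, kafka_topic: str,
                    headers: dict, key: str) -> str:
    """Map Kafka metadata into topic levels (assumes no '/' in the values)."""
    region = headers.get("dest-region", "any")
    return f"kafka/{source_cluster}/{kafka_topic}/{region}/{key}"


@dataclass
class ClusterQueue:
    """In-memory stand-in for the exclusive queue feeding one Kafka cluster."""
    cluster: str
    subscriptions: list
    messages: deque = field(default_factory=deque)


def route(topic: str, payload: bytes, queues: list) -> list:
    """Append to every queue with a matching subscription (FIFO per queue)."""
    matched = []
    for q in queues:
        if any(topic_matches(s, topic) for s in q.subscriptions):
            q.messages.append((topic, payload))
            matched.append(q.cluster)
    return matched


if __name__ == "__main__":
    queues = [
        ClusterQueue("eu-cluster", ["kafka/*/orders/eu/>"]),
        ClusterQueue("us-cluster", ["kafka/*/orders/us/>",
                                    "kafka/*/payments/us/>"]),
        ClusterQueue("audit-cluster", ["kafka/*/payments/>"]),
    ]
    samples = [
        ("orders", {"dest-region": "eu"}, "42"),    # one cluster: routing
        ("payments", {"dest-region": "us"}, "7"),   # two clusters: replication
        ("orders", {"dest-region": "apac"}, "99"),  # no cluster: filtering
    ]
    for kafka_topic, headers, key in samples:
        topic = to_solace_topic("onprem", kafka_topic, headers, key)
        print(topic, "->", route(topic, b"payload", queues))
```

Run as a script, this prints each mapped topic with the clusters it lands on: `eu-cluster` only, then `us-cluster` and `audit-cluster`, then none. Each queue is appended to at the tail and would be drained from the head by a single consumer. As a result, messages reach each destination cluster in the order the broker accepted them, which is the FIFO property the Exclusive Queues point relies on.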

Conclusion

The software version of Solace Event Broker lets you build a Kafka mesh with Solace topics and subscriptions, leverage exclusive queues, and use an integrated Kafka bridge to deliver a WAN-optimized Kafka message replication solution with fine-grained filtering and in-order delivery across hybrid-cloud and multi-flavor Kafka environments.


Rob Tomkins
