As enterprises continue to expand their digital footprint and adopt hybrid cloud strategies, one challenge consistently emerges in boardroom discussions and architecture reviews: how to effectively distribute master data across increasingly complex IT landscapes.

Having worked with numerous enterprise customers implementing event-driven architecture (EDA) within SAP environments, I’ve seen firsthand the friction that traditional master data management (MDM) distribution approaches create for integration teams and enterprise architects.

The Master Data Distribution Challenge

Master data—the informational representations of assets and entities like customers, products, suppliers, and organizational hierarchies—forms the backbone of enterprise operations. But despite its importance, most organizations are saddled with distribution approaches and tools that haven’t meaningfully evolved in decades.

The Hidden Cost of Bad Master Data

Before diving into solutions, it’s important to understand what happens when master data goes wrong. Consider these real-world scenarios:

- Expired Chart of Accounts: When finance teams work with outdated account structures, every transaction posted to the wrong account requires manual correction. A single product launch using an expired cost center can result in weeks of reconciliation work, delayed financial reporting, and frustrated accounting teams scrambling to restate numbers before month-end close.

- Invalid Customer Addresses: When a customer record contains an outdated or incorrect shipping address, the ripple effects are immediate and costly. Orders get returned, customer service receives angry calls, replacement shipments must be expedited, and sales teams lose credibility. Each incident requires manual intervention from multiple departments—customer service to field the complaint, warehouse teams to process returns, and accounting to handle refunds or credits.

- Stale Product Information: When marketing launches a campaign using outdated product specifications or pricing, the downstream chaos is predictable. Sales teams quote wrong prices, customers receive incorrect product information, and order fulfillment teams struggle with mismatched inventory. The manual effort to correct orders, update systems, and manage customer expectations can consume days of productivity across multiple departments.

These scenarios highlight a fundamental truth: bad master data doesn’t just create IT problems—it creates business problems that require expensive manual rework across the entire organization.

Current State Challenges

Most integration teams face a similar set of problems:

- Batch-based synchronization processes create latency gaps where different systems operate with stale data, leading to inconsistent customer experiences and operational inefficiencies

- Point-to-point integrations proliferate as new applications join the landscape, creating maintenance difficulties and increasing the risk of data quality issues

- Manual intervention is required when master data changes occur, as the ripple effect across dependent systems often requires coordination between multiple teams

Enterprise architects encounter equally frustrating issues:

- Traditional hub-and-spoke architectures, while proven, become harder to maintain and extend over time as data volumes grow and real-time requirements increase

- Lack of event-driven capabilities means applications can’t react dynamically to master data changes, forcing organizations to build complex polling mechanisms or accept data staleness

- Scalability concerns emerge as the number of consuming applications grows, particularly in cloud-native environments where elastic scaling is expected

Perhaps most importantly, business stakeholders are increasingly demanding real-time capabilities. When a customer updates their information, or when a product launches in a new market, the expectation is that this change propagates instantly across all touchpoints—from e-commerce platforms to customer service systems to analytics dashboards. Traditional MDM solutions simply can’t deliver on this expectation.

A Business Analogy for EDA

To understand how EDA solves these problems, think of it like a modern news organization compared to old-fashioned newspaper delivery.

- Traditional MDM (Old School Newspaper Model): Imagine if your business ran like a daily newspaper. Every morning, someone collects all the changes that happened yesterday, prints them in a batch, and delivers them to every department. By the time accounting receives yesterday’s customer updates, sales has already quoted prices based on old information, and customer service is working with outdated contact details. If something urgent happens at 2 PM, everyone has to wait until tomorrow’s “edition” to find out about it.

- Event-Driven MDM (Breaking News Model): Now imagine your business operates like a modern news network with instant notifications. The moment a customer updates their address, every system that needs to know—shipping, billing, customer service—gets an immediate alert with the new information. When a product price changes, sales tools, e-commerce sites, and inventory systems all update simultaneously. No waiting, no batch processing, no stale information.

In technical terms, EDA works on a “publish-subscribe” model. When something important happens (an “event”), the system immediately notifies (“publishes”) the change to all interested parties who have “subscribed” to receive those updates. It’s like having a smart assistant who instantly tells everyone who needs to know whenever something changes, rather than waiting to compile a daily report.
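To make the publish-subscribe idea concrete, here is a minimal in-memory sketch. It is illustrative only: `InMemoryBroker` and the topic name are hypothetical, and a real event broker adds persistence, network transport, and access control. The point it shows is that the publisher names a topic and never holds a list of consumers.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Event = dict
Handler = Callable[[Event], None]


class InMemoryBroker:
    """Toy broker (hypothetical): maps each topic to its subscribed handlers."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        """Register interest in a topic; the publisher is never told."""
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: Event) -> int:
        """Deliver the event to every current subscriber and return the count."""
        handlers = list(self._subscribers.get(topic, []))
        for handler in handlers:
            handler(event)
        return len(handlers)


broker = InMemoryBroker()
shipping: List[Event] = []
billing: List[Event] = []
broker.subscribe("customer/address/updated", shipping.append)
broker.subscribe("customer/address/updated", billing.append)

# The publisher only names the topic; it has no list of consumers.
delivered = broker.publish(
    "customer/address/updated",
    {"customer_id": "C-1001", "city": "Berlin"},
)
print(delivered)  # 2
```

Because the publisher only addresses a topic, onboarding another consumer (say, a hypothetical Salesforce sync) would be one more `subscribe` call with no change to the publishing code.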

The Shift to Real-Time, Event-Driven Master Data

Modern master data distribution requires a shift from batch-oriented, request-response patterns to an event-driven, publish-subscribe, real-time approach. This transformation enables organizations to move from asking “what changed?” to automatically responding to “something changed.”

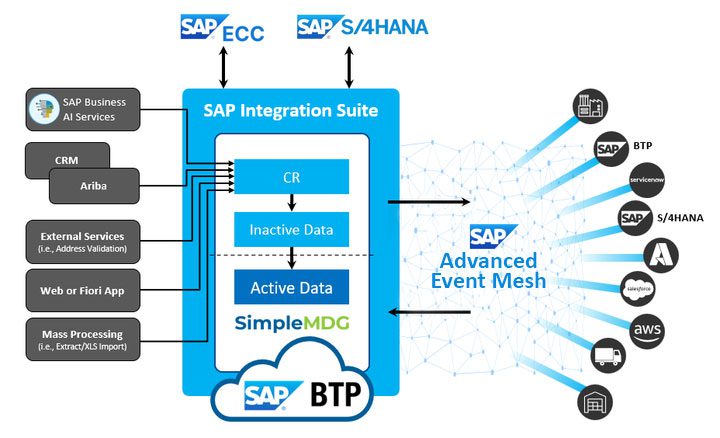

This architectural evolution is powered by robust event streaming infrastructure. Within SAP Business Technology Platform, SAP Integration Suite, advanced event mesh provides that foundation. Rather than treating master data changes as periodic synchronization events, advanced event mesh enables real-time event streaming that can instantly notify any number of consuming applications when master data updates occur.

The technical advantages of this event-driven distribution are significant:

- Eliminates the polling overhead that burdens traditional systems

- Reduces network traffic through targeted event delivery

- Provides natural decoupling between master data sources and consumers

- Lets applications subscribe to only the master data events they need, creating more efficient and focused integration patterns
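Targeted delivery usually relies on hierarchical topics and wildcard subscriptions. The sketch below assumes a simplified scheme loosely modeled on Solace-style topics: levels separated by `/`, a lone `*` matching exactly one level, and `>` as the final level matching one or more remaining levels. The topic names are hypothetical, and real brokers support more syntax (for example, prefix wildcards within a level), so treat this as an approximation rather than any broker's full rules.

```python
from typing import List


def topic_matches(subscription: str, topic: str) -> bool:
    """Return True if a concrete topic matches a subscription filter.

    Simplified rules (an assumption, not a specific broker's full syntax):
      '*'  matches exactly one level
      '>'  as the final level matches one or more remaining levels
    """
    sub_levels = subscription.split("/")
    topic_levels = topic.split("/")
    for i, sub in enumerate(sub_levels):
        if sub == ">" and i == len(sub_levels) - 1:
            # Trailing '>' needs at least one remaining topic level.
            return len(topic_levels) > i
        if i >= len(topic_levels):
            return False
        if sub != "*" and sub != topic_levels[i]:
            return False
    # Every subscription level matched; the level counts must agree exactly.
    return len(sub_levels) == len(topic_levels)


def route(topic: str, subscriptions: List[str]) -> List[str]:
    """Return the subscription filters that would receive this topic."""
    return [s for s in subscriptions if topic_matches(s, topic)]


subscriptions = [
    "masterdata/customer/>",         # e.g. a CRM: every customer event
    "masterdata/product/updated/*",  # e.g. a pricing engine: product updates, any region
    "masterdata/gl/costcenter/>",    # e.g. financial systems
]
print(route("masterdata/product/updated/DE", subscriptions))
# ['masterdata/product/updated/*']
```

Only the pricing engine's filter matches, so the CRM and finance consumers never see product traffic they did not ask for.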

From an operational perspective, advanced event mesh’s guaranteed message delivery and built-in replay capabilities ensure that no master data change is lost, even during system maintenance windows or unexpected outages. The platform’s ability to handle millions of events per second allows organizations to scale their master data distribution without performance degradation.
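Guaranteed delivery and replay are broker features, so the following is only a conceptual sketch, not how advanced event mesh implements them. It assumes an append-only log with one acknowledged position per consumer: a consumer that was down for maintenance resumes from its last acknowledged position, a replay rewinds that position, and a brand-new consumer starts at offset 0. `DurableLog` and its methods are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class DurableLog:
    """Append-only event log with one acknowledged position per consumer."""

    events: List[dict] = field(default_factory=list)
    positions: Dict[str, int] = field(default_factory=dict)  # consumer -> next offset

    def append(self, event: dict) -> int:
        """Persist an event and return its offset."""
        self.events.append(event)
        return len(self.events) - 1

    def pending(self, consumer: str, limit: int = 100) -> List[dict]:
        """Events not yet acknowledged by this consumer (reading does not advance)."""
        start = self.positions.get(consumer, 0)
        return self.events[start:start + limit]

    def ack(self, consumer: str, count: int) -> None:
        """Advance the consumer's position once it has processed `count` events."""
        start = self.positions.get(consumer, 0)
        self.positions[consumer] = min(start + count, len(self.events))

    def replay_from(self, consumer: str, offset: int) -> None:
        """Move a consumer's position so delivery restarts at `offset`."""
        self.positions[consumer] = max(0, min(offset, len(self.events)))


log = DurableLog()
log.append({"id": 1, "type": "CustomerUpdated"})
# Suppose the CRM consumer is down for maintenance while a second change arrives.
log.append({"id": 2, "type": "CustomerUpdated"})

batch = log.pending("crm")        # nothing was lost: both events are waiting
log.ack("crm", len(batch))
print([e["id"] for e in batch])   # [1, 2]

log.replay_from("crm", 0)         # e.g. after restoring the CRM from a backup
print(len(log.pending("crm")))    # 2
```

Separating `pending` from `ack` means an event is only considered delivered after the consumer confirms it processed the event, which is the property that keeps changes from being lost during an outage.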

Making Master Data Self-Service

One of the most compelling advantages of event-driven MDM is the ability to make master data consumption self-service. Consider a common scenario: an SAP customer wants to update Salesforce with SAP change data. Traditionally, this requires custom development, point-to-point integration, and ongoing maintenance.

When master data is always streaming, any new consumer that wants it simply subscribes. No code changes are needed on the source side. It’s as simple as tuning into a radio station or following an X (formerly Twitter) hashtag. This self-service model dramatically reduces integration complexity and time-to-value for new use cases.

Ensuring Data Quality and Compliance Through Governance

While real-time event streaming provides the necessary speed and agility, effective master data distribution also requires sophisticated data governance and quality management capabilities. This is where a dedicated governance platform like SimpleMDG creates compelling value for enterprise customers.

SimpleMDG brings advanced master data governance capabilities that complement advanced event mesh’s event streaming infrastructure. It provides data quality validation, workflow management, and governance controls that ensure only high-quality, approved master data changes trigger events through advanced event mesh. This combination addresses a critical gap in many event-driven implementations, where the speed of event distribution can amplify data quality issues across the enterprise.

Keeping the Core Clean

SimpleMDG aligns perfectly with SAP’s best practice of keeping the “core clean.” Running natively on SAP Business Technology Platform (BTP) rather than in the SAP core, SimpleMDG enables organizations to customize and govern master data distribution processes without touching the SAP core system. This approach ensures that core SAP systems remain stable, upgradeable, and compliant with SAP’s clean core strategy while providing the flexibility needed for sophisticated master data governance and distribution.

Preventing Costly Rework Through Governance

The integration between SimpleMDG and advanced event mesh creates a powerful feedback loop that prevents the costly rework scenarios described earlier:

- Chart of Accounts Protection: SimpleMDG’s workflow management ensures that accounting structure changes go through proper approval processes before being distributed. When a new cost center is created or an existing one is modified, the governance workflow validates the change against business rules and routes approvals to the appropriate finance managers. Only after approval does advanced event mesh distribute the change to all financial systems simultaneously, ensuring every application has the correct, current account structure.

- Customer Data Validation: Before a customer address change propagates across systems, SimpleMDG can validate the address against postal databases, check for completeness, and route changes through appropriate approval workflows when needed. This prevents the cascade of problems that occur when invalid addresses reach shipping, billing, and customer service systems.

- Product Information Accuracy: SimpleMDG’s data quality controls ensure that product specifications, pricing, and availability information meet business standards before distribution. This prevents marketing campaigns from launching with incorrect information and sales teams from quoting outdated prices.
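Rules like these are normally configured inside the governance platform rather than hand-coded. Purely as an illustration, and not SimpleMDG’s API, the sketch below expresses the three checks from the list as plain functions that return error messages; a change is eligible for distribution only if the combined list is empty. Field names and rules are hypothetical, and a real address check would query a postal reference service instead of only testing completeness.

```python
from datetime import date
from typing import Callable, Dict, List

# Each rule inspects a proposed change and returns a list of error messages.
Rule = Callable[[dict, date], List[str]]

REQUIRED_ADDRESS_FIELDS = ("street", "postal_code", "city", "country")


def check_cost_center(record: dict, as_of: date) -> List[str]:
    """Reject cost centers whose validity period has already ended."""
    valid_to = record.get("valid_to")
    if valid_to is None:
        return ["cost center has no validity end date"]
    if valid_to < as_of:
        return [f"cost center expired on {valid_to.isoformat()}"]
    return []


def check_customer_address(record: dict, as_of: date) -> List[str]:
    """Completeness only; a production rule would also verify against postal data."""
    return [f"missing address field: {f}" for f in REQUIRED_ADDRESS_FIELDS if not record.get(f)]


def check_product(record: dict, as_of: date) -> List[str]:
    """Basic product sanity checks before a launch is distributed."""
    errors = []
    price = record.get("list_price")
    if price is None or price <= 0:
        errors.append("list price must be a positive number")
    if not record.get("description"):
        errors.append("product description is empty")
    return errors


RULES: Dict[str, List[Rule]] = {
    "cost_center": [check_cost_center],
    "customer": [check_customer_address],
    "product": [check_product],
}


def validate(domain: str, record: dict, as_of: date) -> List[str]:
    """Run every rule for the domain; an empty result means the change may proceed."""
    if domain not in RULES:
        # Fail closed: unknown domains are never distributed unchecked.
        return [f"no rules registered for domain '{domain}'"]
    errors: List[str] = []
    for rule in RULES[domain]:
        errors.extend(rule(record, as_of))
    return errors


today = date(2025, 1, 15)
print(validate("cost_center", {"id": "CC-4711", "valid_to": date(2024, 12, 31)}, today))
# ['cost center expired on 2024-12-31']
```

Passing the reference date in explicitly, rather than reading the clock inside each rule, keeps the checks deterministic and easy to test.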

The seamless handoff between SimpleMDG’s governance processes and advanced event mesh’s distribution capabilities ensures that governance doesn’t become a bottleneck in real-time scenarios. When SimpleMDG’s workflows complete—whether approving a new customer record or validating a product hierarchy change—these approval events automatically trigger distribution through advanced event mesh.
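The handoff described above can be pictured as a small state machine in which the move to "approved" is the only path that emits a distribution event. This is a hypothetical sketch, not SimpleMDG’s workflow engine or an advanced event mesh client: the injected `publish` callable stands in for whatever broker client an implementation would use, and the states and topic name are assumptions.

```python
from enum import Enum
from typing import Callable, Dict, List, Set, Tuple


class State(Enum):
    DRAFT = "draft"
    SUBMITTED = "submitted"
    APPROVED = "approved"
    REJECTED = "rejected"


# Allowed workflow transitions; any other move is refused.
TRANSITIONS: Dict[State, Set[State]] = {
    State.DRAFT: {State.SUBMITTED},
    State.SUBMITTED: {State.APPROVED, State.REJECTED},
    State.REJECTED: {State.DRAFT},
    State.APPROVED: set(),
}

Publisher = Callable[[str, dict], None]


class ChangeRequest:
    """A governed master data change that is distributed only once approved."""

    def __init__(self, change_id: str, payload: dict, topic: str, publish: Publisher) -> None:
        self.change_id = change_id
        self.payload = payload
        self.topic = topic
        self.state = State.DRAFT
        self._publish = publish  # stand-in for a real broker client

    def move_to(self, target: State) -> None:
        if target not in TRANSITIONS[self.state]:
            raise ValueError(f"cannot move from {self.state.value} to {target.value}")
        self.state = target
        if target is State.APPROVED:
            # Approval is the single trigger for distribution.
            self._publish(self.topic, {"change_id": self.change_id, **self.payload})


sent: List[Tuple[str, dict]] = []
request = ChangeRequest(
    "CR-1", {"customer_id": "C-1001"}, "masterdata/customer/approved",
    lambda topic, event: sent.append((topic, event)),
)
request.move_to(State.SUBMITTED)
print(len(sent))  # 0 (nothing leaves governance while awaiting approval)
request.move_to(State.APPROVED)
print(sent)  # [('masterdata/customer/approved', {'change_id': 'CR-1', 'customer_id': 'C-1001'})]
```

Injecting the publisher keeps the workflow logic independent of the transport, so governance rules can be tested without a running broker.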

Additionally, SimpleMDG’s data lineage and impact analysis capabilities help enterprise architects understand the downstream effects of master data changes before they’re distributed. This visibility, combined with advanced event mesh’s event tracing and monitoring capabilities, provides end-to-end insight into how master data flows across the enterprise.

GDPR and Compliance Benefits

Real-time MDM brings significant value to GDPR compliance and broader data privacy requirements. When a data subject requests a change or deletion, it is reflected immediately across all systems—no more waiting for batch jobs or risking that some systems are missed. The complete traceability provided by SimpleMDG’s governance workflows combined with advanced event mesh’s audit capabilities ensures that every change is logged and can be demonstrated for regulatory compliance. This proactive approach to data accuracy and auditability reduces compliance risk while maintaining operational agility.
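One way to make "reflected across all systems" demonstrable is to fan an erasure request out to every consuming system and record a per-system confirmation, so the audit trail shows exactly which systems have completed it. The sketch below is an assumption-laden illustration: the in-process loop stands in for event delivery, and `ErasureCoordinator`, the system names, and the audit record format are hypothetical. A production design would rely on the broker’s delivery guarantees and the governance platform’s own audit log.

```python
from datetime import datetime, timezone
from typing import Callable, Dict, List

# An erase handler removes a data subject's records from one system
# and returns True once that system confirms the deletion.
EraseHandler = Callable[[str], bool]


class ErasureCoordinator:
    """Hypothetical fan-out of erasure requests with a per-system audit trail."""

    def __init__(self) -> None:
        self.systems: Dict[str, EraseHandler] = {}
        self.audit_log: List[dict] = []

    def register(self, system: str, erase: EraseHandler) -> None:
        self.systems[system] = erase

    def request_erasure(self, subject_id: str) -> List[str]:
        """Send the request to every system; return those that did not confirm."""
        unconfirmed: List[str] = []
        for system, erase in self.systems.items():
            try:
                confirmed = erase(subject_id)
            except Exception:
                # Record the failure instead of aborting the remaining systems.
                confirmed = False
            self.audit_log.append({
                "subject_id": subject_id,
                "system": system,
                "confirmed": confirmed,
                "at": datetime.now(timezone.utc).isoformat(),
            })
            if not confirmed:
                unconfirmed.append(system)
        return unconfirmed


crm_records = {"C-1001": {"name": "A. Muster"}}


def erase_from_crm(subject_id: str) -> bool:
    crm_records.pop(subject_id, None)  # an absent record counts as already erased
    return subject_id not in crm_records


coordinator = ErasureCoordinator()
coordinator.register("crm", erase_from_crm)
coordinator.register("analytics", lambda subject_id: True)  # e.g. holds no personal data
print(coordinator.request_erasure("C-1001"))  # []
print(len(coordinator.audit_log))             # 2
```

Returning the unconfirmed systems, rather than raising on the first failure, gives compliance teams a precise follow-up list while the audit log still records every attempt.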

Enabling Retail Innovation

A German supermarket chain demonstrates the real-world impact of event-driven master data distribution. Using advanced event mesh, the retailer streams master data across its stores and supply chain in real time. One particularly innovative application streams IoT temperature and humidity data that tells each store when to discount produce based on changes in predicted spoilage dates. This event-driven approach has delivered measurable ROI through reduced waste, a critical success metric in the retail industry, where margins are thin and sustainability is increasingly important.

This implementation showcases how event-driven architecture enables new business capabilities that weren’t possible with traditional batch-based systems. By making data immediately available across the enterprise, the retailer can respond dynamically to changing conditions and optimize operations in real time.

Popular Implementation Patterns

Successful implementations typically follow several common patterns that leverage the strengths of both platforms.

- Customer master data scenarios often implement a “golden record” pattern where SimpleMDG manages the authoritative customer record and its governance workflow, while advanced event mesh distributes approved changes to CRM systems, e-commerce platforms, and analytics environments in real time.

- Product master data implementations frequently use event-driven product launches, where SimpleMDG’s approval workflows trigger advanced event mesh events that simultaneously update inventory systems, pricing engines, and marketing platforms. This eliminates the traditional delays between product approval and market readiness.

- Financial master data, particularly chart of accounts and organizational hierarchies, benefits from the audit trail capabilities that both platforms provide. Changes flow through SimpleMDG’s approval process and are distributed via advanced event mesh with complete traceability, supporting compliance requirements while maintaining operational agility.
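How a golden record is assembled depends on survivorship rules that each organization defines. As one common, deliberately simple choice (an assumption here, not SimpleMDG’s logic), the sketch keeps, for each attribute, the most recently updated non-empty value across source records. The source system names and attribute values are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Dict, List, Optional


@dataclass
class SourceRecord:
    system: str                            # e.g. "ERP" or "CRM" (hypothetical)
    updated_at: datetime
    attributes: Dict[str, Optional[str]]


def build_golden_record(records: List[SourceRecord]) -> Dict[str, str]:
    """Most-recent-non-empty-value-wins survivorship, attribute by attribute."""
    golden: Dict[str, str] = {}
    # Process oldest first, so newer non-empty values overwrite older ones.
    for record in sorted(records, key=lambda r: r.updated_at):
        for name, value in record.attributes.items():
            if value:  # skip empty strings and None
                golden[name] = value
    return golden


erp = SourceRecord("ERP", datetime(2025, 1, 10),
                   {"name": "ACME GmbH", "city": "Berlin", "phone": ""})
crm = SourceRecord("CRM", datetime(2025, 2, 1),
                   {"city": "Hamburg", "phone": "+49 40 000000"})
print(build_golden_record([crm, erp]))
# {'name': 'ACME GmbH', 'city': 'Hamburg', 'phone': '+49 40 000000'}
```

Real survivorship policies are often richer, for example trusting the ERP for legal names but the CRM for contact details, which is why they belong in governed configuration rather than consumer code.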

Technical Considerations and Best Practices

Implementing event-driven master data distribution requires careful attention to several technical considerations:

- Event Schema Design becomes critical—events must carry sufficient context for consuming applications while remaining lightweight enough for high-volume scenarios. Both advanced event mesh and SimpleMDG support flexible schema evolution, allowing organizations to enhance event content over time without breaking existing consumers.

- Security and Access Control patterns must account for the distributed nature of event-driven architectures. Advanced event mesh’s integration with SAP BTP’s identity and access management, combined with SimpleMDG’s role-based governance controls, provides comprehensive security coverage from data creation through distribution.

- Monitoring and Observability require new approaches compared to traditional batch integration patterns. The combination of advanced event mesh’s real-time event monitoring and SimpleMDG’s governance dashboards provides the visibility needed to maintain operational excellence in dynamic environments.
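Backward-compatible schema evolution is often achieved by adding only optional fields and having consumers ignore fields they do not recognize (the "tolerant reader" approach). The sketch below assumes a JSON event envelope with `schema_version` and `data` fields; these names are hypothetical and are not the format used by advanced event mesh or SimpleMDG.

```python
import json
from dataclasses import dataclass
from typing import Optional


@dataclass
class CustomerChanged:
    customer_id: str
    city: str
    loyalty_tier: Optional[str] = None  # added in a later version; optional, so v1 events still parse


def parse_customer_changed(raw: str) -> CustomerChanged:
    """Tolerant reader: take the fields this consumer knows and ignore the rest."""
    envelope = json.loads(raw)
    data = envelope["data"]
    return CustomerChanged(
        customer_id=data["customer_id"],
        city=data["city"],
        loyalty_tier=data.get("loyalty_tier"),
    )


v1 = json.dumps({"schema_version": 1,
                 "data": {"customer_id": "C-1", "city": "Berlin"}})
v3 = json.dumps({"schema_version": 3,
                 "data": {"customer_id": "C-1", "city": "Berlin",
                          "loyalty_tier": "gold", "segment": "B2B"}})

print(parse_customer_changed(v1))
# CustomerChanged(customer_id='C-1', city='Berlin', loyalty_tier=None)
print(parse_customer_changed(v3).loyalty_tier)
# gold
```

The unknown `segment` field in the newer event is simply ignored. Removing or renaming a required field would still break this consumer, so such changes typically warrant a new event type or topic version instead.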

Looking Ahead

As enterprises continue their shift toward real-time, event-driven operations, master data distribution is becoming a critical proving ground for architectural modernization. What once required complex batch schedules and brittle point-to-point integrations can now be handled through publish-subscribe patterns that deliver immediate, reliable updates to any number of consumers.

This shift isn’t just about performance—it’s about enabling new capabilities. Real-time master data propagation opens the door to more responsive customer experiences, faster product launches, and AI-powered insights based on up-to-date reference data. As more organizations adopt event mesh technologies across domains like supply chain, finance, and customer experience, the same architectural principles that modernize MDM will form the foundation of a more agile, scalable enterprise.

With governance playing a central role in maintaining data quality at speed, we expect the combination of streaming infrastructure and governance platforms like SimpleMDG to become the new standard for enterprise-grade MDM.

Conclusion

Master data doesn’t have to be a bottleneck. By pairing the real-time capabilities of SAP Integration Suite, advanced event mesh with the governance controls of SimpleMDG, organizations can modernize their MDM architecture without compromising data quality or control.

This event-driven approach simplifies integration, reduces latency, and helps ensure consistent, trustworthy data across all systems and touchpoints. Most importantly, it eliminates the costly manual rework that occurs when bad master data propagates across the enterprise. It’s a proven path forward for enterprises looking to deliver the real-time responsiveness that modern business demands—without the complexity of legacy batch-based systems.

Michael Hilmen is senior director of technology in Solace's business development team. With nearly 30 years in the integration and middleware space, he’s worn just about every hat—professional services, sales, engineering, architecture, and business development—across companies ranging from scrappy startups to global giants.

For the past decade at Solace, he's helped enterprises embrace event-driven architecture to reduce the complexity of their systems and accelerate the performance of their business. He specializes in showing organizations how EDA forms the backbone of the complex environments it takes to operate efficiently on a global scale.
