What is event-driven integration?

Event-driven integration is an integration pattern in which independent IT components (applications, devices, etc.) communicate in a decoupled manner by publishing and subscribing to events. This pattern is enabled by event brokers, or by a network of event brokers called an event mesh.

Event-driven integration is the application of event-driven architecture to integration: integration functions such as connectivity, transformation and mapping are broken into smaller components and loosely coupled via the publish/subscribe exchange pattern, much like event-driven microservices. Event-driven integration complements API-led integration, meeting integration needs that are not well suited to synchronous or point-to-point integrations.
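To make the publish/subscribe exchange pattern concrete, here is a minimal in-memory sketch. The `InMemoryBroker` class, the topic names and the event fields are all hypothetical and chosen for illustration only; a real event broker adds persistence, security and network transport that this toy omits.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Handler = Callable[[dict], None]


class InMemoryBroker:
    """Toy stand-in for an event broker (hypothetical, illustration only)."""

    def __init__(self) -> None:
        # Maps a topic name to the handlers subscribed to it.
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> int:
        """Deliver the event to every subscriber of the topic.

        Returns the number of subscribers reached. The publisher never
        names its recipients, which is what decouples the two sides.
        """
        handlers = list(self._subscribers.get(topic, []))
        for handler in handlers:
            handler(event)
        return len(handlers)


broker = InMemoryBroker()
crm_inbox: List[dict] = []
analytics_inbox: List[dict] = []

# Two independent consumers subscribe to the same topic.
broker.subscribe("order/created", crm_inbox.append)
broker.subscribe("order/created", analytics_inbox.append)

delivered = broker.publish("order/created", {"order_id": "A-100", "total": 42.5})
unrouted = broker.publish("order/cancelled", {"order_id": "A-100"})

print(delivered, unrouted)
```

Adding a third consumer would only require another `subscribe` call; the publishing side would not change.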

What is the architecture of event-driven integration?

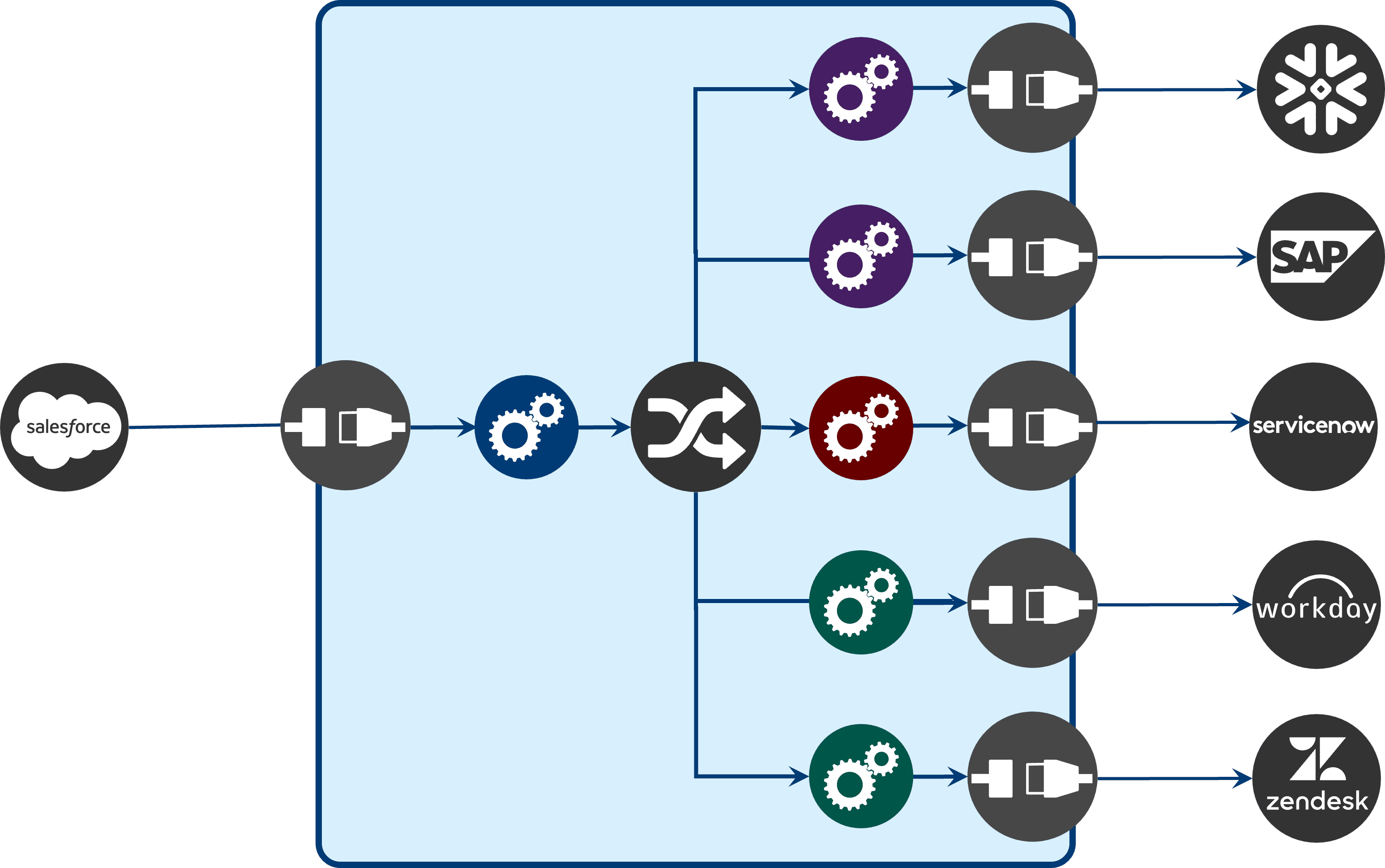

Event-driven integration turns conventional integration architecture inside-out — from a centralized system with connectivity and transformation in the middle to a distributed event-driven approach, whereby integration occurs at the edge of an event-driven core.

This approach requires three things:

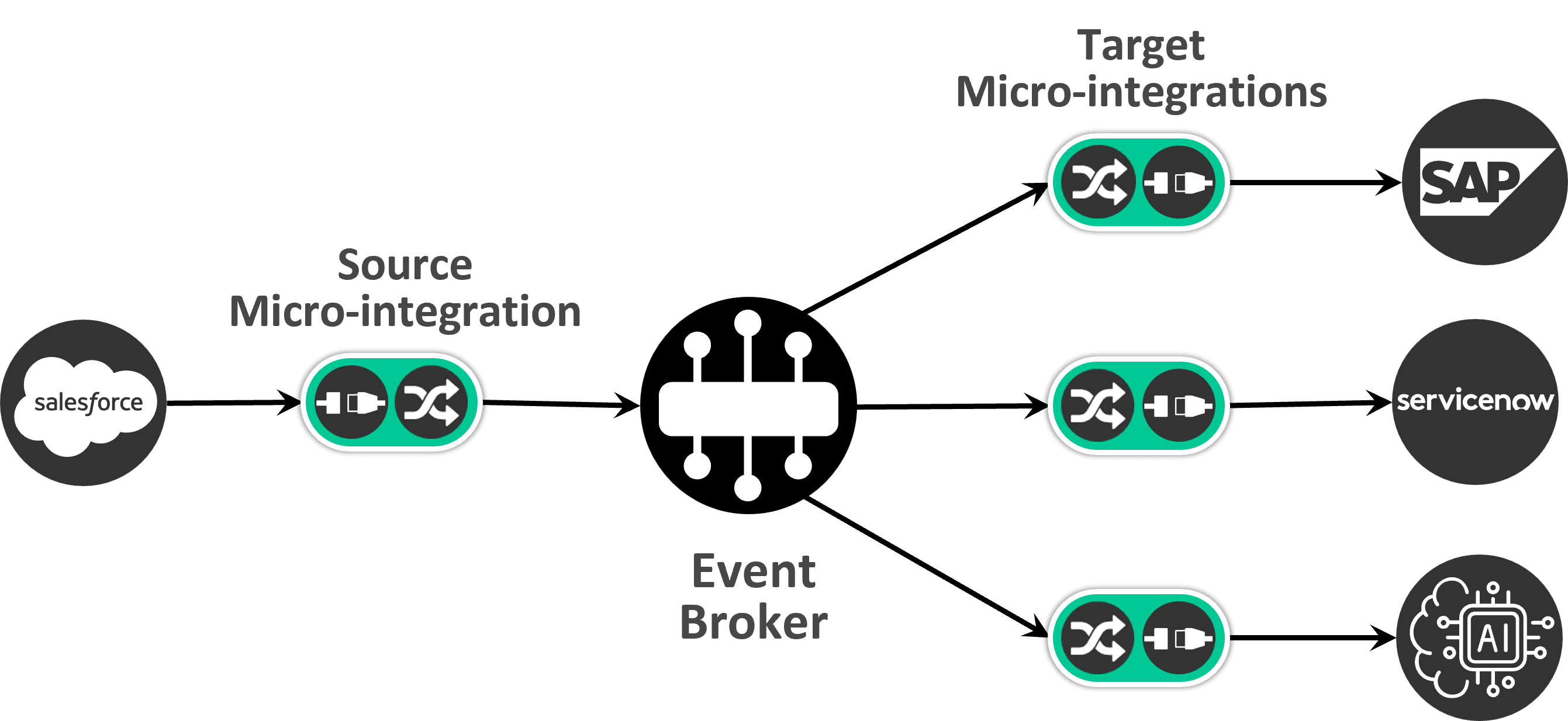

- Micro-integrations that connect and integrate applications (ERP, CRM, SCM, etc.) and infrastructure like messaging systems, databases, files, analytics and AI components with an event-driven data distribution layer (event brokers and event mesh). Source micro-integrations acquire changes from source systems and publish these changes as events into an event broker, whereas target micro-integrations consume events from an event broker and integrate them with a target system.

- An event broker (or event mesh) that supports the publish/subscribe messaging pattern to ensure the intelligent routing and delivery of information to potentially many target systems in a scalable, resilient, secure manner, whether that be locally or across diverse environments and geographies.

- Events that embody the digital change that has occurred. An event is produced by a source micro-integration from a change in a source system and published to an event broker, where it is consumed by one or more target micro-integrations, each of which ensures delivery to a given target system. Event definitions, often documented using AsyncAPI, are the well-defined interface (like an API defined by OpenAPI) of the change that has occurred.
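The division of labor between source micro-integrations, events and target micro-integrations described above can be sketched as follows. Everything here is an assumption made for illustration: the `CustomerUpdated` event, the ERP column names (`CUST_NO`, `EMAIL_ADDR`) and the two target functions are hypothetical, and a plain list stands in for the broker's topic so the focus stays on the edge components.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class CustomerUpdated:
    """Hypothetical event contract; in practice it might be described with AsyncAPI."""
    customer_id: str
    email: str


def source_micro_integration(erp_row: Dict[str, str]) -> CustomerUpdated:
    """Turn a change captured from the source system into an event."""
    return CustomerUpdated(
        customer_id=erp_row["CUST_NO"],
        email=erp_row["EMAIL_ADDR"].strip().lower(),
    )


def crm_target_micro_integration(event: CustomerUpdated, crm_store: Dict[str, dict]) -> None:
    """Map the event into the shape the (hypothetical) CRM expects."""
    crm_store[event.customer_id] = {"Email": event.email}


def mailing_target_micro_integration(event: CustomerUpdated, mailing_list: List[str]) -> None:
    """A second, independent target consuming the same event."""
    if event.email not in mailing_list:
        mailing_list.append(event.email)


# A plain list stands in for the broker topic in this sketch.
topic_log: List[CustomerUpdated] = []
topic_log.append(source_micro_integration({"CUST_NO": "C-7", "EMAIL_ADDR": " Ana@Example.com "}))

crm_store: Dict[str, dict] = {}
mailing_list: List[str] = []
for event in topic_log:
    crm_target_micro_integration(event, crm_store)
    mailing_target_micro_integration(event, mailing_list)

print(crm_store, mailing_list)
```

Each function has a single purpose and knows only the event contract, not the system on the other side.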

Architecturally, event-driven integration combines these three elements, as the diagram above shows.

Without an event-driven approach, on the other hand, you need to create a synchronous integration flow for each type of integration. As you add more target systems and more processing to such a flow, performance and reliability suffer, which leads to a degraded user experience. In system-to-system interactions, it can lead to timeouts, retransmissions, duplications, loss of updates and instability. Beyond that, tightly coupled integrations make the system brittle, i.e. more difficult and risky to change than event-driven systems, which facilitate change.
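A rough back-of-the-envelope calculation illustrates why a synchronous flow degrades as targets are added. The sketch below assumes the flow calls each target in sequence and that target failures are independent; the latency and availability figures are invented for illustration and are not measurements.

```python
import math

# Hypothetical per-target figures, chosen only for illustration.
targets = {
    "crm":       {"latency_ms": 120, "availability": 0.995},
    "billing":   {"latency_ms": 250, "availability": 0.995},
    "analytics": {"latency_ms": 400, "availability": 0.995},
}
publish_ack_ms = 5  # assumed time for a broker to acknowledge a publish


def synchronous_flow(targets: dict) -> tuple:
    """Caller waits for every target in turn and fails if any one fails."""
    latency_ms = sum(t["latency_ms"] for t in targets.values())
    success_rate = math.prod(t["availability"] for t in targets.values())
    return latency_ms, success_rate


sync_latency_ms, sync_success = synchronous_flow(targets)

print(f"synchronous caller waits {sync_latency_ms} ms, succeeds {sync_success:.4f} of the time")
print(f"event-driven caller waits about {publish_ack_ms} ms for the publish acknowledgement")
```

Under these assumptions every added target increases the caller's wait and lowers its success rate, whereas with publish/subscribe the caller's wait does not depend on the number of targets (broker availability is not modeled here).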

How does event-driven integration relate to API-led integration?

Similar to API-led integration, event-driven integration is a methodical way to connect applications through reusable components (in this case events instead of APIs) within an organization’s IT infrastructure. These events are distributed in real time and in a loosely coupled manner via publish/subscribe event brokers (versus synchronous orchestrated integration flows), making information available to many applications as soon as a change occurs. This gives business applications, SaaS, custom applications, analytics, AI, dashboards and more immediate access to up-to-date information, delivering a richer, more real-time and more robust user experience.

Solace sees events as crucial counterparts to synchronous APIs and API-led integration, with business solutions composed of a blend of the two. This “composable architecture” with both types of interfaces is widely promoted by analysts (here’s an example from Gartner) and other industry experts.

What are the key characteristics of event-driven integration?

- Small, modular components, deployed at the edge: The use of micro-integrations enhances performance because connectivity and transformations can be handled near the systems they link to the event mesh. Their single-purpose nature also improves agility and reduces system complexity.

- Events are the key API: Whereas traditional synchronous integrations define, discover and use APIs to integrate, event-driven integration uses events for that purpose. Events represent the asynchronous version of the data contract to integrate with.

- Asynchronous event-driven communication decouples applications and devices so they can both send and receive information without any sort of active connection with the system at the other end. Intended recipients can even receive information that was sent while they were offline or unable to keep up with the flow of information, without impacting the source or other target systems.
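The last characteristic, delivery to recipients that were offline when an event was sent, can be sketched with one queue per subscriber. The `QueueingBroker` class and the subscriber names are hypothetical; the queues here live in memory, whereas a real broker would typically persist them.

```python
from collections import deque
from typing import Deque, Dict, List, Optional


class QueueingBroker:
    """Toy broker that holds events per subscriber until they are consumed."""

    def __init__(self) -> None:
        self._queues: Dict[str, Deque[dict]] = {}

    def add_subscriber(self, name: str) -> None:
        self._queues[name] = deque()

    def publish(self, event: dict) -> None:
        # The publisher returns immediately; each subscriber gets its own copy
        # of the reference, queued independently of the others.
        for queue in self._queues.values():
            queue.append(event)

    def drain(self, name: str, max_events: Optional[int] = None) -> List[dict]:
        """Hand over queued events in arrival order, oldest first."""
        queue = self._queues[name]
        count = len(queue) if max_events is None else min(max_events, len(queue))
        return [queue.popleft() for _ in range(count)]


broker = QueueingBroker()
broker.add_subscriber("dashboard")   # online: consumes after every publish
broker.add_subscriber("warehouse")   # offline while events are published

dashboard_seen: List[dict] = []
for reading in (1, 2, 3):
    broker.publish({"reading": reading})
    dashboard_seen.extend(broker.drain("dashboard"))

# The warehouse system comes back online and catches up on what it missed.
warehouse_seen = broker.drain("warehouse")

print(dashboard_seen, warehouse_seen)
```

The slow or offline subscriber affects neither the publisher nor the dashboard, because each one reads from its own queue.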

What are the benefits of event-driven integration?

Augmenting your existing integration platform with event-driven integration offers several advantages.

- Improve user experience/responsiveness by reducing long-running operations, absorbing bursts and tolerating system failures so they don’t impact user interactions, which increases uptime.

- Enhance agility and accelerate innovation by democratizing access to real-time data, making it easy to build, change and add to the way applications and devices interact, and to incorporate new apps, cloud services, IoT devices, AI and future capabilities into existing business processes in a robust and scalable manner, thereby future-proofing your architecture. Changes at the edges have localized impact and risk, which further improves agility.

- Increase the speed and scalability of your integration platform by putting in place a flexible, high-performance publish/subscribe data distribution layer.

- Extend the useful life of legacy applications by enabling them to exchange information with new apps and cloud services in a real-time, event-driven manner. This frees them from constant polling and allows new capabilities to be developed in external systems based on up-to-date data, reducing risk and accelerating time-to-market for new ideas.

- Make your system simpler and more robust by replacing the many spaghetti point-to-point integrations that rely on synchronous communications with more flexible asynchronous, decoupled and one-to-many interactions that can better absorb bursts, deal with speed mismatches, and tolerate failure conditions to prevent cascading failures.

- Reduce cost by (i) removing the need for many applications to constantly poll for changes, which either impacts systems of record or requires deployment of caching and query offload technologies, and (ii) not needing to scale all integration components for peak capacity. Only the components that must keep up with peak need to be sized for it; queuing and deferred execution allow the other processing steps to be scaled for average loads, which tend to be much lower than peak.

- Technology Flexibility and Information Ubiquity. You can use different types of integration technologies to implement micro-integrations at the edge. This allows different choices of technologies for different reasons: performance, features, ease of use, cost, etc. This architecture also supports being the “integrator of integrators”, breaking down the information silos that result from the (often) many integration technologies in use at an enterprise. Use the right integration technology at the edge as needed or migrate from one integration technology/vendor to another.
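The cost point above about sizing deferred processing for average rather than peak load can be illustrated with a small simulation. The arrival figures and the capacity value are invented for illustration; the sketch assumes a queue in front of the consumer and a fixed processing capacity per time tick.

```python
from typing import List, Tuple


def simulate_backlog(arrivals_per_tick: List[int], capacity_per_tick: int) -> Tuple[int, int]:
    """Return (final backlog, largest backlog) for a queued consumer.

    Each tick, new events join the queue and the consumer removes up to
    capacity_per_tick of them.
    """
    backlog = 0
    peak_backlog = 0
    for arrivals in arrivals_per_tick:
        backlog = max(0, backlog + arrivals - capacity_per_tick)
        peak_backlog = max(peak_backlog, backlog)
    return backlog, peak_backlog


# Hypothetical traffic: a steady trickle with one short burst.
arrivals = [10, 10, 100, 100, 10, 10, 10, 10, 10, 10]
peak_rate = max(arrivals)
average_rate = sum(arrivals) / len(arrivals)

# Consumer sized a little above the average rate, well below the peak rate.
capacity = 40
final_backlog, peak_backlog = simulate_backlog(arrivals, capacity)

print(f"peak rate {peak_rate}, average rate {average_rate}, capacity {capacity}")
print(f"largest backlog {peak_backlog}, backlog at end {final_backlog}")
```

In this run the queue absorbs the burst and drains again, at the price of some events waiting a few ticks. The capacity still has to exceed the average arrival rate, otherwise the backlog grows without bound.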