
Change is a constant in our world, and each change generates an event. These events range from tangible shifts like changes to a room’s temperature to more abstract changes such as the rising and falling of stock prices.

In the digital world, there are tools to move these events from their sources to interested parties. Sophisticated versions of these tools, known as event brokers, allow consumers to subscribe to specific events without burdening the event sources with the details. That way, even as consumers join or leave, event producers remain unaware of any distribution changes, and middleware teams don’t need to reconfigure applications every time a change happens.
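To make the decoupling concrete, here is a minimal in-memory sketch. It is not the Solace API: `TinyBroker` and `topicMatches` are hypothetical names, and the topic syntax is a simplified take on Solace-style wildcards, where `*` matches exactly one `/`-separated level and a trailing `>` matches one or more remaining levels. The producer only names a topic; it never learns who, if anyone, receives the event.

```javascript
// Minimal in-memory broker sketch (hypothetical; not the Solace API).
// Topics are "/"-separated levels. In subscriptions, "*" matches exactly one
// level and a trailing ">" matches one or more remaining levels.
function topicMatches(subscription, topic) {
  const sub = subscription.split("/");
  const lvl = topic.split("/");
  for (let i = 0; i < sub.length; i++) {
    if (sub[i] === ">" && i === sub.length - 1) {
      return lvl.length > i; // at least one level must remain
    }
    if (i >= lvl.length) return false;
    if (sub[i] !== "*" && sub[i] !== lvl[i]) return false;
  }
  return sub.length === lvl.length;
}

class TinyBroker {
  constructor() {
    this.subscriptions = []; // list of { subscription, handler }
  }

  // Consumers register interest; producers never see this list.
  subscribe(subscription, handler) {
    const entry = { subscription, handler };
    this.subscriptions.push(entry);
    // Return an unsubscribe function so consumers can leave at any time.
    return () => {
      this.subscriptions = this.subscriptions.filter((e) => e !== entry);
    };
  }

  // Producers only name a topic; the broker decides who receives the event.
  publish(topic, payload) {
    let delivered = 0;
    for (const { subscription, handler } of this.subscriptions) {
      if (topicMatches(subscription, topic)) {
        handler(topic, payload);
        delivered++;
      }
    }
    return delivered;
  }
}

// Usage: a consumer subscribes to every temperature reading in the building.
const broker = new TinyBroker();
const readings = [];
const leave = broker.subscribe("building/*/temperature", (topic, p) => readings.push(p.celsius));
broker.publish("building/lobby/temperature", { celsius: 21.5 });
leave(); // the consumer leaves; the producer code does not change
broker.publish("building/lobby/temperature", { celsius: 22 });
// readings is now [21.5]
```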

By linking these brokers, you can form an event mesh that distributes events across the digital landscape, reaching on-premises data centers, public and private clouds, remote retail stores and connected vehicles. Event meshes empower organizations to adopt a fully event-driven approach, improving awareness of developing situations and enabling immediate action in response to changes large and small. Event mesh is a key component of Solace Agent Mesh.
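A rough sketch of the linking idea, again with hypothetical names (`MeshNode`, `link`): each node is a broker at one site, and events published at one site reach subscribers at another by being forwarded across links. To stay short, this version floods every link and drops duplicates by message id; production event meshes typically propagate subscriptions between brokers so that an event only crosses a link when someone on the far side wants it. Subscriptions here are plain topic prefixes.

```javascript
// Hypothetical sketch: each MeshNode is a broker at one site (data center,
// cloud region, retail store). Linked nodes forward events so a consumer on
// any node receives events published on any other node. This sketch floods
// every link and uses message ids to stop events looping forever.
class MeshNode {
  constructor(name) {
    this.name = name;
    this.links = [];       // neighbouring MeshNode instances
    this.handlers = [];    // { prefix, handler } for local consumers
    this.seen = new Set(); // message ids already processed on this node
  }

  // Links are bidirectional.
  link(other) {
    this.links.push(other);
    other.links.push(this);
  }

  // Simplified matching: a subscription is a topic prefix.
  subscribe(prefix, handler) {
    this.handlers.push({ prefix, handler });
  }

  publish(topic, payload, id = `${this.name}:${Date.now()}:${Math.random()}`) {
    if (this.seen.has(id)) return; // already handled here; prevents loops
    this.seen.add(id);
    for (const { prefix, handler } of this.handlers) {
      if (topic.startsWith(prefix)) handler(this.name, topic, payload);
    }
    for (const neighbour of this.links) neighbour.publish(topic, payload, id);
  }
}
```

Subscription propagation is what keeps a real mesh efficient at scale; flooding is only acceptable here because the example has three nodes.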

Although still in its early stages, artificial intelligence’s impact on computer-driven systems and their information processing capabilities is undeniable. Enhancements in natural language processing, image recognition, and audio transcription have been remarkable, and are paving the way for machines to interpret unstructured inputs and perform tasks once considered exclusively human.

While event meshes and AI are each catalysts for innovation, their combination is greater than the sum of its parts.

How an Event Mesh Elevates AI

Building larger, more sophisticated AI models to tackle every possible form of input is possible, but it is more practical to have smaller models specialized for specific functions, much like the brain, whose lobes handle different jobs such as language, memory, movement, problem solving, speech and sensory processing. Smaller models are cheaper to run and respond much faster than large models. However, using smaller, more focused models means the correct data must be delivered, in the appropriate form, to each specialized model.

Event meshes excel at coordinating the delivery of data to the systems that can process it. They also guarantee delivery, so when a component finishes its processing and sends the result back to the event mesh, it can safely forget about it. This makes building processing pipelines simple.
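The "send it and forget it" property comes from the broker holding each message until a consumer acknowledges it. The sketch below assumes a single in-memory queue (`GuaranteedQueue` is a hypothetical name, not a Solace class); a real broker would typically also persist messages so they survive a broker restart.

```javascript
// Hypothetical sketch of guaranteed delivery: the queue keeps each message
// until the consumer acknowledges it. If the consumer fails before
// acknowledging, the message stays queued and is redelivered later.
class GuaranteedQueue {
  constructor() {
    this.pending = []; // messages not yet acknowledged
    this.nextId = 1;
  }

  // The producer hands off the message and can forget about it.
  enqueue(payload) {
    const msg = { id: this.nextId++, payload, deliveries: 0 };
    this.pending.push(msg);
    return msg.id;
  }

  // Deliver every pending message. A handler that throws leaves its message
  // in the queue so it is retried on the next call.
  deliver(handler) {
    for (const msg of [...this.pending]) {
      msg.deliveries++;
      try {
        handler(msg.payload);
        this.ack(msg.id);
      } catch (err) {
        // Not acknowledged: keep the message for redelivery.
      }
    }
  }

  // Acknowledgement removes the message for good.
  ack(id) {
    this.pending = this.pending.filter((m) => m.id !== id);
  }
}
```

Because a failed consumer simply never acknowledges, each pipeline stage only has to hand its result to the mesh; it never has to track whether the next stage received it.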

In the context of AI, this greatly simplifies incrementally adding intelligence to events as they flow through a series of applications, each performing a specific function. Each stage in the pipeline represents the inference of a single model, and the event mesh handles routing and delivery between the stages.
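Here is one way such a pipeline could look, assuming a tiny exact-topic bus in place of the mesh and stub functions in place of real models (`speechToText` and `classify` are placeholders, and the topic names are invented). Each stage subscribes to one topic, adds one field, and republishes, so stages can be added or replaced without touching the others.

```javascript
// Tiny exact-match bus standing in for the event mesh (hypothetical).
const subscribers = new Map();
function subscribe(topic, handler) {
  if (!subscribers.has(topic)) subscribers.set(topic, []);
  subscribers.get(topic).push(handler);
}
function publish(topic, event) {
  for (const handler of subscribers.get(topic) ?? []) handler(event);
}

// Stub "models": stand-ins for real inference calls.
const speechToText = (audio) => audio.simulatedSpeech;
const classify = (text) => (/fire|smoke/i.test(text) ? "fire" : "other");

// Stage 1: one model, one added field, republished on the next topic.
subscribe("report/audio", (e) =>
  publish("report/transcribed", { ...e, transcript: speechToText(e.audio) }));

// Stage 2: consumes stage 1's output without knowing stage 1 exists.
subscribe("report/transcribed", (e) =>
  publish("report/classified", { ...e, category: classify(e.transcript) }));
```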

Modern event meshes also support telemetry so that the entire workflow can be easily monitored and debugged to ensure that it works as expected.
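As an illustration of what that telemetry needs to capture, the sketch below carries a trace id on every event and records one span per stage. `traced` and `traceOf` are hypothetical helpers; a production system would more likely export spans through a standard such as OpenTelemetry than keep them in an array.

```javascript
// Hypothetical sketch of workflow telemetry: every event carries a trace id,
// and each stage records a span (trace id, stage name, duration) so the
// full path of one event can be reconstructed when debugging.
const spans = [];

function traced(stageName, fn) {
  return (event) => {
    const start = Date.now();
    const result = fn(event);
    spans.push({ traceId: event.traceId, stage: stageName, ms: Date.now() - start });
    // Keep the trace id on the output so the next stage joins the same trace.
    return { ...result, traceId: event.traceId };
  };
}

// List the stages one event passed through, in the order they ran.
function traceOf(traceId) {
  return spans.filter((s) => s.traceId === traceId).map((s) => s.stage);
}
```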

How AI Enhances an Event Mesh

Event meshes are only as valuable as the events they receive. Traditionally, these events already originated in the digital domain, such as the output of a sensor on a manufacturing line or an input on a stock trading terminal. Similarly, reactions to events have traditionally been handled by hand-coded algorithms that act programmatically rather than by reasoning about the situation.

Today’s AI creates new opportunities within an event mesh. Significant advances in machine vision, speech transcription, and language understanding allow a new class of events to be sent into an event mesh. Instead of being confined to digital, machine-generated events, AI models can convert the analog world into digital events, such as changes in a video stream or keywords in the transcription of audio input.

AI can also augment events in the system. An AI agent can subscribe to a narrow set of events, apply a prompt template specific to that subscription, and then use a large language model to enhance each event with additional information. For example, it might perform sentiment analysis on user-input forms to identify customers ripe for an upsell, or synthesize new events from a combination of accumulated data.
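A minimal sketch of such an augmenting agent, using the sentiment example. The template text, the `{{field}}` placeholder syntax, and the rule that positive feedback marks an upsell candidate are all assumptions for illustration, and `callLlm` is a stub to be replaced by a real LLM client.

```javascript
// Replace {{name}} placeholders with values taken from the event.
function fillTemplate(template, values) {
  return template.replace(/\{\{(\w+)\}\}/g, (_, key) => String(values[key] ?? ""));
}

// Hypothetical augmenting agent: handles one narrow class of events, fills a
// prompt template specific to that class, asks an LLM, and attaches the answer.
// callLlm is injected (prompt string in, text out) so any provider can be used.
function makeSentimentAgent(callLlm) {
  const template =
    "Classify the sentiment of this customer feedback as positive, " +
    "neutral or negative. Reply with one word.\n\nFeedback: {{text}}";
  return (event) => {
    const answer = callLlm(fillTemplate(template, event)).trim().toLowerCase();
    // Return an enriched copy to publish back; original fields are kept as-is.
    return { ...event, sentiment: answer, upsellCandidate: answer === "positive" };
  };
}
```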

To do this, you use the event mesh to link many AI agents, each tailored to a specific set of events. This can be as straightforward as an agent that subscribes to all events containing raw audio, uses a speech-to-text model to create a transcription, and publishes that transcription back into the mesh. Or it can be an agent that subscribes to the output of another agent so that it can evaluate that agent’s correctness.
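The second pattern, one agent checking another, might look like this. The bus (`on`/`emit`), the topic names, and the confidence threshold are hypothetical, and the speech-to-text agent is a stub that reads simulated fields instead of real audio. The first agent has no knowledge of the evaluator; it simply publishes its output, and the evaluator subscribes to it.

```javascript
// Tiny exact-match bus standing in for the event mesh (hypothetical).
const handlers = {};
const on = (topic, fn) => (handlers[topic] = handlers[topic] || []).push(fn);
const emit = (topic, event) => (handlers[topic] || []).forEach((fn) => fn(event));

// Agent 1 (stub): speech-to-text over raw audio events.
on("audio/raw", (e) =>
  emit("audio/transcript", { id: e.id, text: e.simulatedSpeech, confidence: e.simulatedConfidence }));

// Agent 2: evaluates agent 1's output. The check is a cheap heuristic
// (empty or low-confidence transcripts fail); a real evaluator might
// call a second model instead.
on("audio/transcript", (t) =>
  emit("audio/transcript/evaluation", {
    id: t.id,
    ok: t.text.trim().length > 0 && t.confidence >= 0.6,
  }));
```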

A Practical Demonstration

I have built a small demonstration showing how an event mesh and a set of AI agents can be used together to produce a valuable application: a building incident manager. The incident manager is responsible for receiving reports of notable events in the building it manages, collating the data, and performing the appropriate actions to handle the incident in progress.

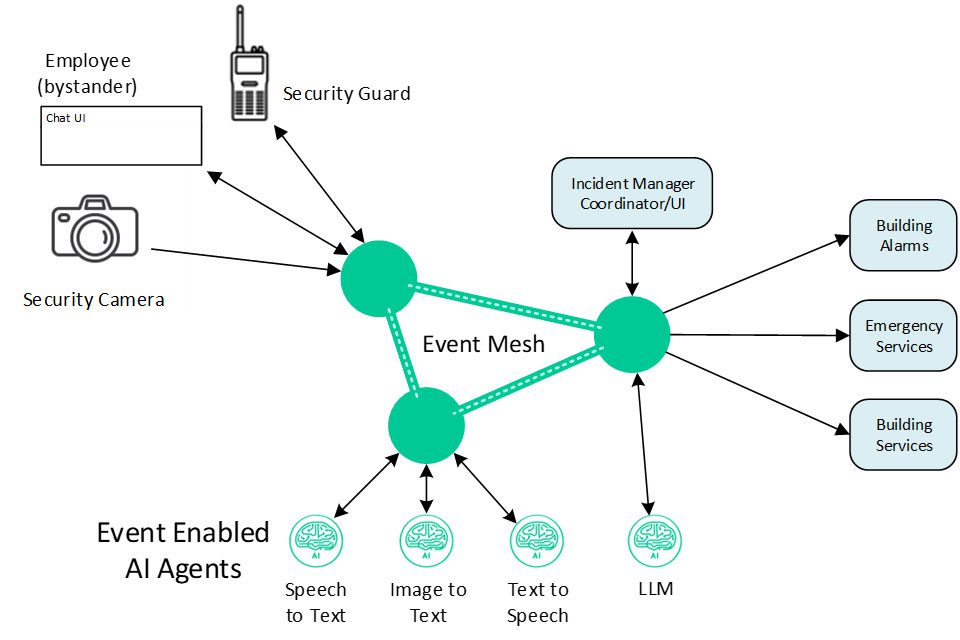

The diagram above shows a high-level view of the pieces involved in the application.

The diagram illustrates the following components:

Event Sources

- Security guard – audio input via a network-connected two-way radio

- Employee – text input via a messaging app, such as Slack

- Security camera – image input

AI Agents

- Speech-to-text

- Image-to-text

- Text-to-speech

- Large Language Model

Incident Manager

- Coordinates all the reports and issues follow-on actions

Responding Apps

- Building alarms

- Emergency services – fire department, ambulance, police

- Building services – janitor, etc.

All of these components communicate asynchronously via the event mesh using guaranteed messaging to ensure that no events can be lost in transit.

How it works

By bringing the event mesh and the AI agents together, it is surprisingly straightforward to build this fully functioning demonstration.

In this demo, all the event sources are simple webapps running on mobile phones, each able to send an incident report to the event mesh in its own medium. For example, with the guard app running on a phone, the user can speak into the phone and the audio is published to the event mesh.

The image and audio reports must be converted to text before the incident manager can do anything with them, so speech-to-text and image-to-text AI agents subscribe to the audio and image report events and publish their results back into the event mesh. The incident manager subscribes to employee messages, transcribed audio reports, and described image reports.
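A sketch of that wiring, with invented topic names and field names (none of them come from the demo's code): raw media only goes to the AI agents, and the incident manager subscribes to the three text-bearing report types and normalizes them into one shape before reasoning about them.

```javascript
// Hypothetical topic layout: raw media goes to the AI agents, and only
// text-bearing reports reach the incident manager.
const wiring = {
  speechToTextAgent: { subscribes: "report/audio", publishes: "report/transcribed" },
  imageToTextAgent: { subscribes: "report/image", publishes: "report/described" },
  incidentManager: {
    subscribes: ["report/employee_message", "report/transcribed", "report/described"],
  },
};

// Normalize the three report types into one shape (field names assumed).
function toIncidentReport(topic, event) {
  const base = { sector: event.sector, time: event.time };
  switch (topic) {
    case "report/employee_message":
      return { ...base, source: "employee", reporter: event.employeeId, text: event.text };
    case "report/transcribed":
      return { ...base, source: "guard", text: event.transcript };
    case "report/described":
      return { ...base, source: "camera", text: event.description };
    default:
      throw new Error(`incident manager is not subscribed to ${topic}`);
  }
}
```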

On receiving incident reports, the incident manager maintains the state of all past reports and all past actions. Then, for each new report, it sends an incident-specific request to the LLM with the following prompt:

```
You are an incident coordinator for the building.
There is an open incident in the sector: ${report.sector}.
You must examine the current state, the past actions and the new reports
to coordinate the response to the incident. Your response must only include
valid JSON and no other text or explanation.
You are able to update any of the fields in the incident report. You can also
add new actions to the incident report. You can also close the incident.

Here are the exact actions you may perform:
update_field: {name: <fieldName>, value: <value>},
alert_service: {service: <fire_department|ambulance|police|building_security|building_services>, message: <message>, active:<true|false>},
close_incident: {},
message_employee: {employee_id: <employeeId>, message: <message>},
message_all_employees: {message: <message>},
message_to_guard_in_sector: {sector: <sector>, message: <message in text>},
sector_audio_announcement: {sector: <sector>, message: <message in text>},
building_audio_announcement: {message: <message in text>},
sector_alarm: {sector: <sector>, alarm: <on|off>},
building_alarm: {alarm: <on|off>},

The status field is either "open" or "closed"
The alert_level is a number from 1 to 5, with 1 being low to 5 being critical.
The guard in the sector where there is a problem should be kept up to date with useful information.
You can ask the guard additional questions to get more information.
Before alerting the police, request that a guard confirm the incident and don't just rely on bystanders.
If a building audio announcement is made, the announcement should also be messaged to all employees
Don't update the reports or past_actions fields. These are for your reference only.
Responses must only include valid JSON in the following format:
{
  actions: [action1, action2, ...]
}

There is a new report for the incident in sector ${report.sector}.
Incident Details:
${JSON.stringify(state, null, 2)}
New Report:
${JSON.stringify(reducedReport, null, 2)}
What actions should I take? Respond in JSON only.
```
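The round trip around that prompt can be sketched as follows. `buildUserPrompt` rebuilds the second half of the prompt (for simplicity it serializes the whole report, where the original passes a `reducedReport`), and `parseActions` turns the reply into a list of actions. The response shape is an assumption: each action is taken to be a single-key object such as `{"sector_alarm": {"sector": "B2", "alarm": "on"}}`, and anything not on the prompt's list of allowed actions is dropped rather than executed.

```javascript
// Action names copied from the prompt; anything else is ignored.
const ALLOWED_ACTIONS = new Set([
  "update_field", "alert_service", "close_incident", "message_employee",
  "message_all_employees", "message_to_guard_in_sector",
  "sector_audio_announcement", "building_audio_announcement",
  "sector_alarm", "building_alarm",
]);

// Second half of the prompt (simplified: uses the full report).
function buildUserPrompt(state, report) {
  return `There is a new report for the incident in sector ${report.sector}.
Incident Details:
${JSON.stringify(state, null, 2)}
New Report:
${JSON.stringify(report, null, 2)}
What actions should I take? Respond in JSON only.`;
}

// Hypothetical parser, assuming each action is a single-key object.
function parseActions(llmText) {
  // Take the outermost {...} span in case the model added stray text.
  const start = llmText.indexOf("{");
  const end = llmText.lastIndexOf("}");
  if (start === -1 || end < start) throw new Error("no JSON object in response");
  const parsed = JSON.parse(llmText.slice(start, end + 1));
  const actions = Array.isArray(parsed.actions) ? parsed.actions : [];
  return actions
    .filter((a) => a !== null && typeof a === "object")
    .map((a) => Object.entries(a)[0])
    // Keep only actions the prompt allows; drop anything else.
    .filter((entry) => entry !== undefined && ALLOWED_ACTIONS.has(entry[0]))
    .map(([type, args]) => ({ type, args }));
}
```

Filtering against an allow-list means a malformed or unexpected reply can, at worst, produce no actions; it cannot trigger one the prompt never offered.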

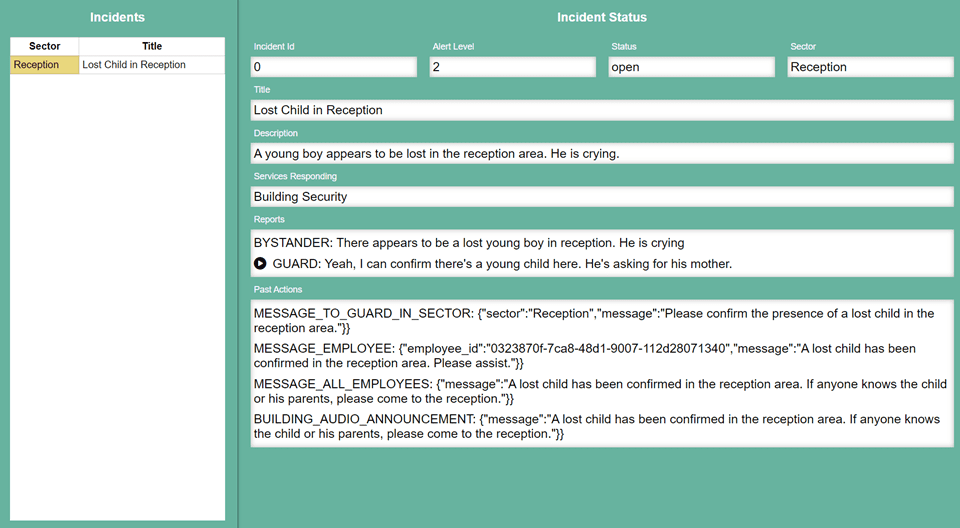

Using GPT-4 as the LLM gives excellent results in this system. The image above shows the incident manager screen for an incident involving a lost young child in the reception area of the building. The first report came from a bystander indicating that “There appears to be a lost young boy in reception. He is crying”.

In response, the LLM sets several fields in the incident state (for example, setting the title to “Lost Child in Reception”) and asks the security guard to confirm the report, which the guard does in the next report.

In reaction to the guard’s response, the system then issues the actions MESSAGE_EMPLOYEE, MESSAGE_ALL_EMPLOYEES and BUILDING_AUDIO_ANNOUNCEMENT. It also assigns the incident an alert level of 2 out of 5.
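The incident manager's own bookkeeping for this sequence can be small. This sketch assumes actions have already been parsed into `{ type, args }` objects (a hypothetical shape): `update_field` and `close_incident` change the incident, every accepted action is appended to `past_actions`, and edits to `reports` or `past_actions` are ignored, mirroring the prompt's instruction that those fields are for reference only.

```javascript
// Fields the prompt tells the model not to update.
const PROTECTED_FIELDS = new Set(["reports", "past_actions"]);

// Hypothetical state update: returns a new state; the input is not mutated.
function applyActions(state, report, actions) {
  const next = {
    ...state,
    reports: [...state.reports, report],
    past_actions: [...state.past_actions],
  };
  for (const { type, args } of actions) {
    if (type === "update_field") {
      if (PROTECTED_FIELDS.has(args.name)) continue; // ignore forbidden edits
      next[args.name] = args.value;
    } else if (type === "close_incident") {
      next.status = "closed";
    }
    // Record the action so the next prompt can see what was already done.
    next.past_actions.push({ type, args });
  }
  return next;
}
```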

In other examples, such as a suspicious person with a weapon, the system instructs everyone to move away from the area and alerts the police after confirming with the guard. When someone reports a mess in a specific room, the system requests a cleanup crew from building services.

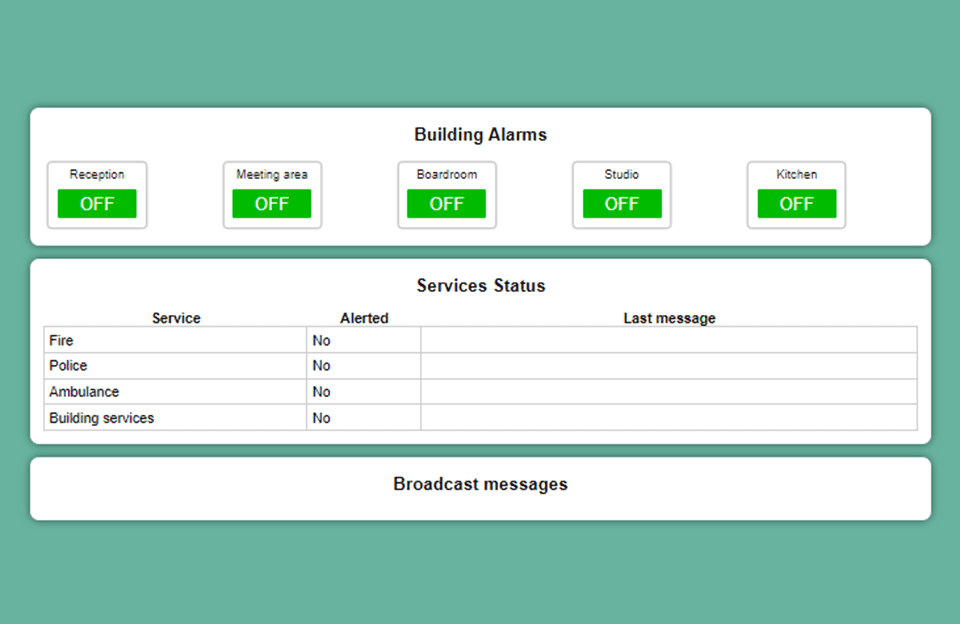

During these incidents, the incident manager forwards the requested actions to the event mesh. Services that can fulfill those actions subscribe to the corresponding events and carry them out. In this demonstration, a separate webapp (shown above) receives and displays them.
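One way to do that forwarding is to derive a topic from each action, so a service subscribes only to the work it can perform (for example, a police integration to `incident/action/alert_service/police`, or a sector's alarm panel to `incident/action/sector_alarm/<sector>`). The topic scheme, the `{ type, args }` action shape, and the choice to keep `update_field` and `close_incident` internal are all assumptions of this sketch.

```javascript
// Hypothetical topic scheme: specific enough that each service can
// subscribe only to the actions it is able to carry out.
function topicForAction({ type, args }) {
  switch (type) {
    case "alert_service":
      return `incident/action/alert_service/${args.service}`;
    case "sector_alarm":
    case "message_to_guard_in_sector":
    case "sector_audio_announcement":
      return `incident/action/${type}/${args.sector}`;
    case "message_employee":
      return `incident/action/message_employee/${args.employee_id}`;
    default:
      return `incident/action/${type}`;
  }
}

// Publish every externally visible action; return the topics used.
// `publish` is injected so any broker client can be plugged in.
function dispatch(actions, publish) {
  const internal = new Set(["update_field", "close_incident"]);
  return actions
    .filter((a) => !internal.has(a.type))
    .map((a) => {
      const topic = topicForAction(a);
      publish(topic, a.args);
      return topic;
    });
}
```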

This work was built using Solace event brokers and the Solace API libraries for Python and JavaScript. AI tools from OpenAI, ElevenLabs and Jina AI served as the backends for the AI agents.

Conclusion

The integration of AI with event meshes represents a groundbreaking advancement in digital event management. The building incident manager demonstration shows AI’s potential to significantly enhance event mesh capabilities. By using specialized AI models for precise tasks like speech-to-text and image analysis, and a large language model for decision-making, the system efficiently translates physical occurrences into actionable digital events, enabling responses that are both rapid and accurate.

As I have shown, this approach not only transforms how data is processed and handled within the event mesh but also elevates the system’s ability to make context-aware decisions in complex situations. The demonstrated incident management application underlines the practicality and effectiveness of this AI-enhanced event mesh, opening new possibilities for automated, intelligent systems in various sectors. This marks a significant step towards a future where digital systems can adapt and respond intelligently to the ever-changing dynamics of the real world.


Edward Funnekotter serves as the Chief Architect and AI Officer at Solace. Leading the architecture teams for both Cloud and Event Broker products, he also leads the company's strategic direction for AI integration within products and internal tools. In 2004, Edward began his journey with Solace as an FPGA architect. He later transitioned into management and led the Core Product Development team for several years before ascending to his present position.

Beginning his professional journey at Newbridge Networks, Edward took on the role of an embedded software developer after earning his Electrical Engineering degree from Queen’s University. After his tenure at Newbridge Networks, he embraced an ASIC architecture position at a Californian Internet Routing chipset firm. There, he successfully architected and designed multiple high-speed network processors and queuing chips.
