
The technology industry has a tendency to repeat the same architectural mistakes across different paradigms. We saw this clearly during the microservices era, and we’re witnessing it again today with agentic AI systems. The question is: will we learn from history, or are we destined to rebuild the same brittle, tightly-coupled systems we spent years untangling?

The Microservices Déjà Vu

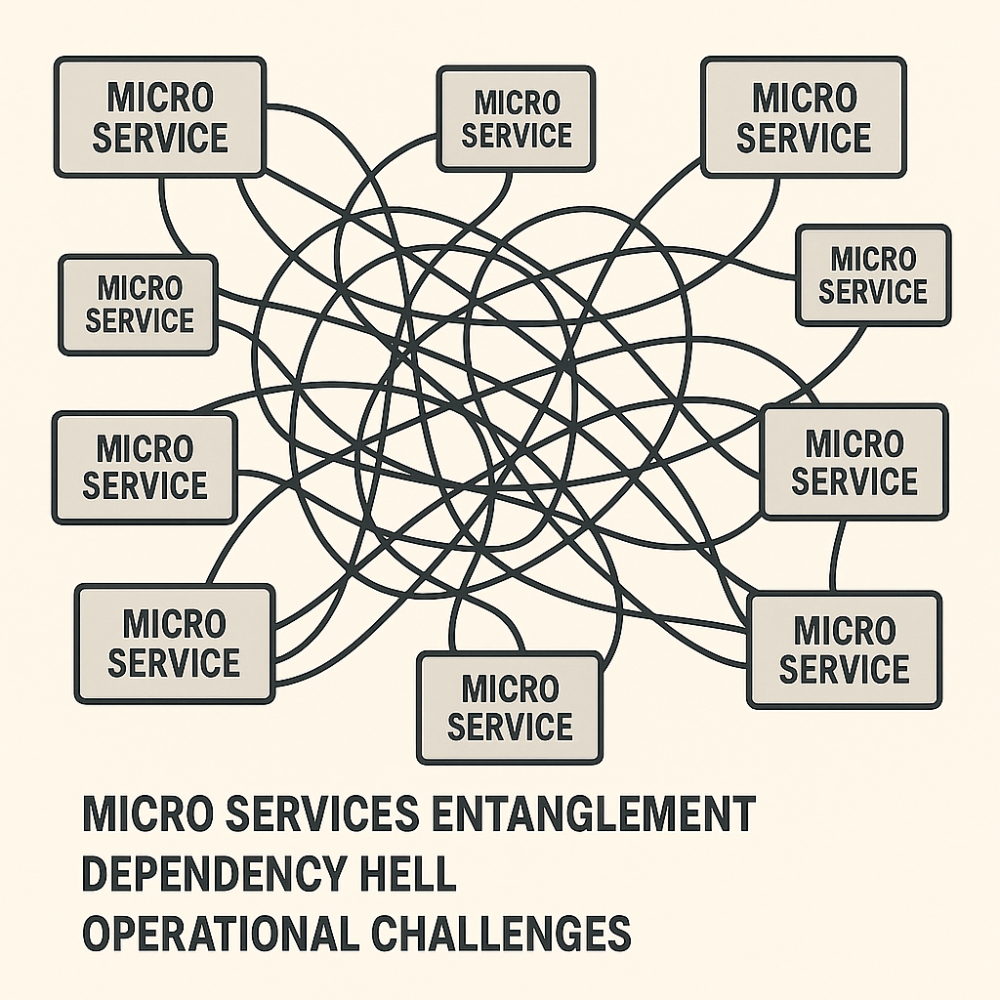

Remember the early days of microservices adoption? We rushed to decompose monolithic applications into smaller services, believing that simply breaking apart code would magically solve our scalability and maintainability challenges. The reality was far more sobering.

Microservices Spaghetti…not tasty at all!

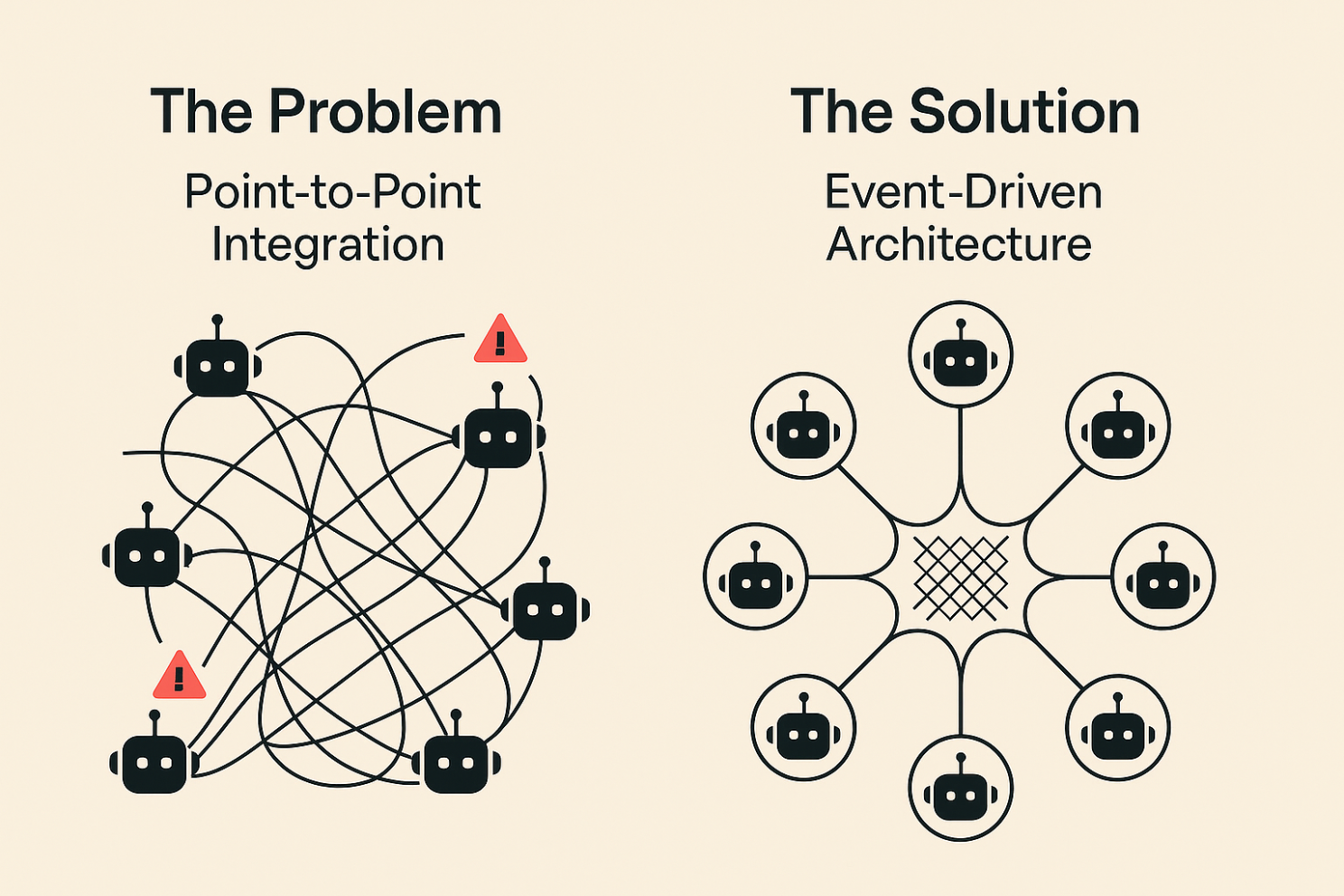

Those early microservices architectures relied heavily on synchronous, point-to-point communication. Service A called Service B, which called Service C, creating intricate webs of dependencies. What appeared to be a distributed system was actually a “distributed monolith” – technically separate services that were functionally inseparable. When one service experienced latency or failure, cascading effects rippled throughout the entire system. Teams found themselves coordinating deployments across dozens of services, and debugging became a nightmare spanning multiple systems.

The turning point came when we started decoupling services using event-driven architecture (EDA). Instead of calling each other directly, services began communicating through event brokers. This shift transformed rigid, fragile systems into resilient, scalable platforms: microservices could evolve independently, teams gained autonomy, and systems became more fault-tolerant.

History Repeating: The Agentic AI Challenge

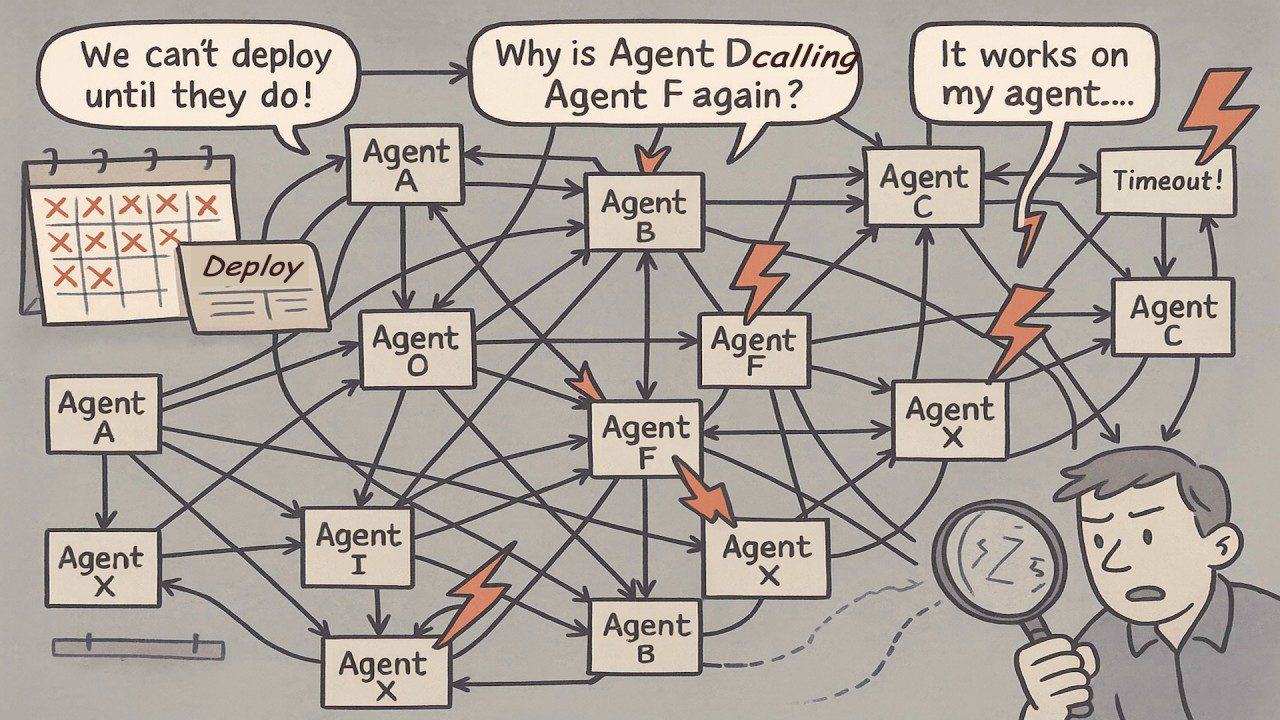

Today, we’re seeing the exact same patterns emerge in agentic AI development. Organizations are starting to build AI systems with multiple agents, but they’re connecting them through point-to-point integrations and client-server architecture patterns. Just as with early microservices, this approach creates the illusion of modularity while maintaining tight coupling under the hood.

Consider a typical enterprise AI assistant that needs to handle customer inquiries. It might involve a sentiment analysis agent, a knowledge retrieval agent, a decision-making agent, and a response generation agent. If these agents are orchestrated through synchronous calls or shared state, they create the same fragility we experienced with early microservices, and they quickly accumulate point-to-point connections that need to be configured, maintained, and managed.

The stakes are even higher with agentic AI systems because they introduce additional complexity: variable latency from LLM calls, unpredictable agent execution times, human-in-the-loop workflows, and the need for real-time data and adaptation of workflows based on intermediate results.

The Event-Driven Solution for Agentic AI

EDA isn’t just beneficial for agentic AI – it’s essential. Here’s why:

Enterprise-Ready Resilience

Production AI systems must be bulletproof. When a customer-facing AI assistant processes thousands of requests per hour, individual agent failures cannot bring down the entire system. EDA provides natural fault isolation – if a specialized analysis agent crashes, its events queue up while other agents continue processing. The system degrades gracefully rather than failing catastrophically.

Horizontal scaling becomes trivial. Need more capacity for document processing? Simply add more instances of document processing agents that consume from the same event stream. No reconfiguration, no service discovery complexity – just elastic scaling based on demand.
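To make the queueing and scaling behavior concrete, here is a minimal sketch that uses Python's standard `queue` and `threading` modules as a stand-in for an event broker. The topic strings, the `doc-agent-N` names, and the `drain_with_agents` helper are assumptions made up for illustration, not part of any real broker API.

```python
import queue
import threading


def drain_with_agents(event_queue, agent_count):
    """Start `agent_count` competing consumers and return (agent, event) pairs."""
    handled = []
    lock = threading.Lock()

    def agent(name):
        while True:
            try:
                event = event_queue.get_nowait()
            except queue.Empty:
                return  # nothing left to process
            with lock:
                handled.append((name, event))

    threads = [
        threading.Thread(target=agent, args=(f"doc-agent-{i}",))
        for i in range(agent_count)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return handled


# Events published while no document agent is running simply wait in the queue.
documents = queue.Queue()
for doc_id in range(12):
    documents.put(f"document.uploaded/{doc_id}")

# Scaling out means raising agent_count; the publishing side is unchanged.
handled = drain_with_agents(documents, agent_count=3)
print(len(handled), "events handled;", documents.qsize(), "left in queue")
```

Each event is handled exactly once no matter how many agents share the queue, which is the property that lets you add instances without touching publishers. A production broker would add persistence and acknowledgements on top of this idea.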

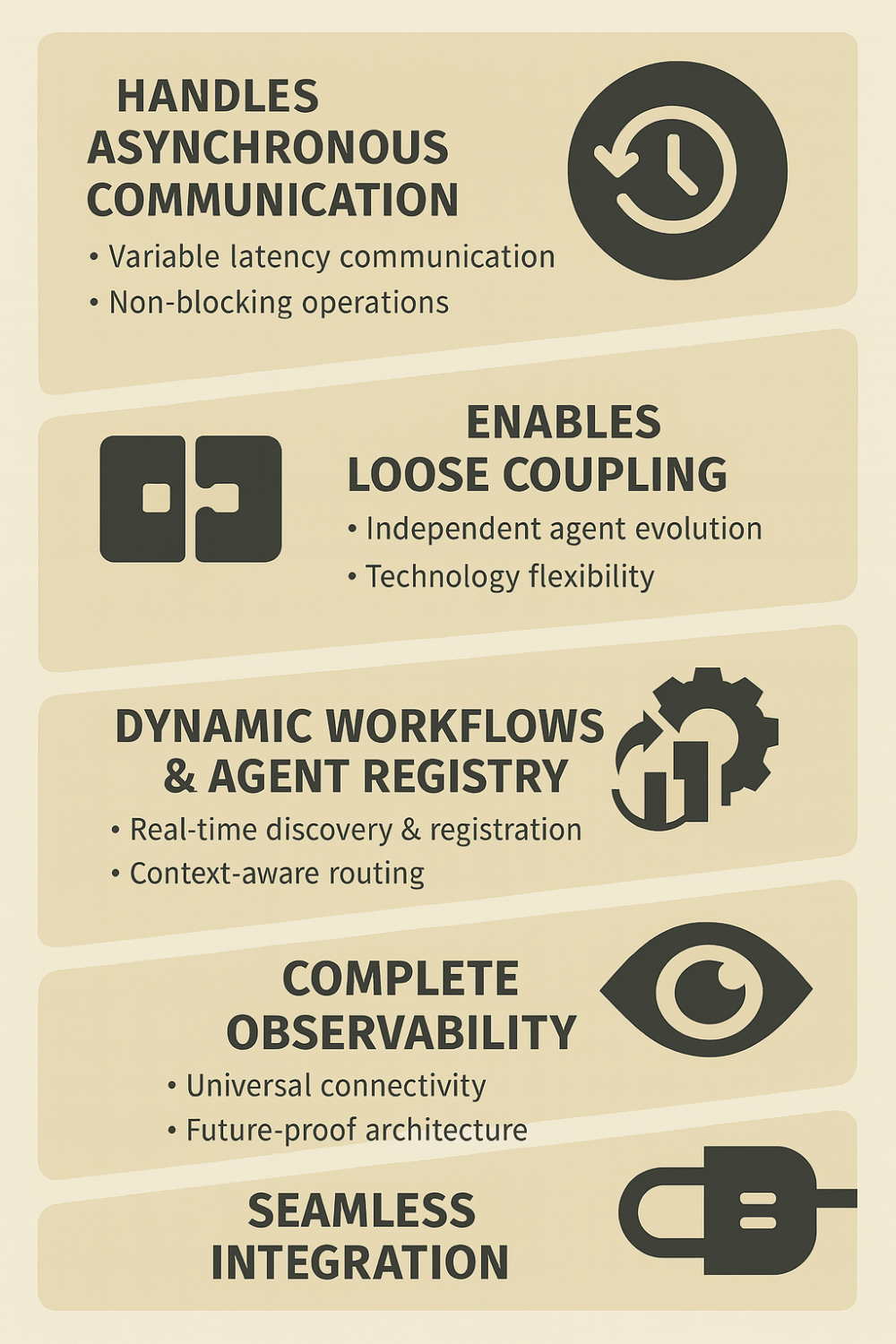

Handles Asynchronous Realities

Agentic AI systems are inherently asynchronous. LLM responses can vary from milliseconds to minutes depending on model load, query complexity, and model type. Agent tasks have vastly different execution times – a simple data lookup might complete instantly while a complex analysis could take several minutes. Human interactions operate on entirely unpredictable timelines.

EDA embraces this reality. Instead of blocking while waiting for responses, agents publish events when they complete tasks and subscribe to events they can process. This pattern makes sequential workflows more robust and enables parallel execution paths. A customer service AI system, for example, can simultaneously have one agent analyzing sentiment, another retrieving customer history, and a third generating response options – all working in parallel and coordinating through events.
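The customer service example can be sketched with `asyncio`. The `EventBus` class below is a hypothetical in-memory stand-in for a broker, and the topic names, agent functions, and payload fields are assumptions chosen for illustration. The `asyncio.sleep` calls stand in for LLM and database latency.

```python
import asyncio


class EventBus:
    """In-memory stand-in for an event broker (hypothetical, for illustration)."""

    def __init__(self):
        self._handlers = {}
        self._tasks = set()

    def subscribe(self, topic, handler):
        self._handlers.setdefault(topic, []).append(handler)

    def publish(self, topic, payload):
        # Each subscriber runs as its own task, so the publisher never blocks.
        for handler in self._handlers.get(topic, []):
            task = asyncio.create_task(handler(payload))
            self._tasks.add(task)
            task.add_done_callback(self._tasks.discard)

    async def drain(self):
        # Wait until no handler is running, including ones triggered by others.
        while self._tasks:
            await asyncio.gather(*list(self._tasks))


async def handle_inquiry(text):
    bus = EventBus()
    findings = {}

    async def sentiment_agent(event):
        await asyncio.sleep(0.05)  # stands in for a slow LLM call
        label = "negative" if "refund" in event["text"].lower() else "neutral"
        bus.publish("inquiry.analyzed", {"sentiment": label})

    async def history_agent(event):
        await asyncio.sleep(0.05)  # stands in for a database lookup
        bus.publish("inquiry.analyzed", {"open_tickets": 2})

    async def response_agent(event):
        findings.update(event)  # collects results as they arrive

    bus.subscribe("inquiry.received", sentiment_agent)
    bus.subscribe("inquiry.received", history_agent)
    bus.subscribe("inquiry.analyzed", response_agent)

    bus.publish("inquiry.received", {"text": text})
    await bus.drain()
    return findings


findings = asyncio.run(handle_inquiry("I would like a refund"))
print(findings)
```

Neither analysis agent knows the other exists, and the response agent only knows the `inquiry.analyzed` topic. Adding a third analysis agent would mean one more `subscribe` call rather than a change to the existing agents.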

Enables Loose Coupling

Just as with microservices, loose coupling is critical for agentic AI systems. Different teams often develop specialized agents using different frameworks, languages, and deployment strategies. Event-driven communication allows these diverse agents to collaborate without tight dependencies.

Consider an enterprise with agents built using different frameworks: some using Solace Agent Mesh, others using LangChain or CrewAI, and still others custom-built for proprietary systems. In an EDA, each agent simply publishes its capabilities and subscribes to relevant events, regardless of its underlying implementation.

Dynamic Workflows & Agent Registry

One of the most powerful aspects of event-driven agentic AI is the ability to support dynamic workflows. Unlike systems with hardcoded process flows, agents can register their capabilities at runtime, and orchestration can adapt based on available agents and changing requirements.

Imagine a document analysis system where new specialized agents are being added – perhaps a new agent for analyzing financial documents or another for processing legal contracts. In an event-driven system, these agents simply announce their capabilities, and the orchestrator agent can immediately incorporate them into relevant workflows without system changes or redeployments. In other words, the system incrementally and instantly becomes smarter.
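A rough sketch of runtime registration might look like the following. The `Orchestrator` class, the `agent.registered` event shape, and the capability names are all hypothetical; real frameworks define their own discovery formats.

```python
from dataclasses import dataclass, field


@dataclass
class Orchestrator:
    """Tracks which agents announced which capability (hypothetical design)."""

    registry: dict = field(default_factory=dict)

    def on_agent_registered(self, event):
        # Called whenever an "agent.registered" event arrives.
        for capability in event["capabilities"]:
            self.registry.setdefault(capability, []).append(event["agent"])

    def plan(self, required_capabilities):
        # Map each capability to the first agent that announced it,
        # or None when no agent currently offers it.
        return {
            cap: (self.registry.get(cap) or [None])[0]
            for cap in required_capabilities
        }


orchestrator = Orchestrator()
orchestrator.on_agent_registered(
    {"agent": "ocr-agent", "capabilities": ["extract_text"]}
)
workflow = ["extract_text", "analyze_financials"]
before = orchestrator.plan(workflow)

# A new specialized agent announces itself at runtime; nothing is redeployed.
orchestrator.on_agent_registered(
    {"agent": "finance-agent", "capabilities": ["analyze_financials"]}
)
after = orchestrator.plan(workflow)

print(before)
print(after)
```

The same workflow request yields a more complete plan once the financial agent has registered, which is the sense in which the system gains capabilities without code changes to the orchestrator.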

Complete Observability

Debugging distributed AI systems is notoriously difficult. Where did a request get stuck? Which agent made a particular decision? Why did a workflow take an unexpected path? Event-driven systems provide complete visibility because every interaction is captured as an event with full context, timestamps, and traceability.

This observability is crucial for compliance and auditability in enterprise AI systems. Every decision, every data access, and every agent interaction is traceable, enabling organizations to understand and verify AI behavior in production. Picture a visualizer that shows all of these interactions and flows, letting you see how the system works and trace the lineage of each output, which makes the system more explainable and trustworthy.
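One way to get this traceability is to give every event a common envelope. The sketch below assumes an envelope with `trace_id`, `event_id`, `source`, and `timestamp` fields; these names and the `trace` helper are illustrative assumptions, not a standard.

```python
import time
import uuid
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Event:
    topic: str
    source: str      # which agent or system emitted the event
    payload: dict
    trace_id: str    # shared by every event belonging to one request
    event_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    timestamp: float = field(default_factory=time.time)


def trace(event_log, trace_id):
    """Return the path one request took, in the order events were logged."""
    return [(e.source, e.topic) for e in event_log if e.trace_id == trace_id]


log = []  # stands in for a durable, append-only event log
request_a = uuid.uuid4().hex
request_b = uuid.uuid4().hex

log.append(Event("inquiry.received", "gateway", {"text": "refund?"}, request_a))
log.append(Event("inquiry.received", "gateway", {"text": "hours?"}, request_b))
log.append(Event("sentiment.analyzed", "sentiment-agent", {"label": "negative"}, request_a))
log.append(Event("response.generated", "response-agent", {"draft": "..."}, request_a))

path = trace(log, request_a)
for source, topic in path:
    print(f"{source} -> {topic}")
```

Because the trace identifier travels with each event, answering "which agent made this decision?" becomes a filter over the log rather than a search across service logs.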

Seamless Integration

Enterprise AI systems must integrate with existing infrastructure, data sources, and business processes. With EDA, any system can participate by publishing and subscribing to events, regardless of technology stack or deployment model.

A legacy CRM system can trigger AI workflows by publishing customer events. A modern data lake can feed real-time information to agents through event streams. External APIs can be wrapped with simple event adapters, making them available to the entire AI ecosystem without complex integration code.
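As a sketch of what such an adapter could look like, the code below translates a legacy CRM row into a topic and a JSON body. The column names (`CUST_NO`, `CUST_NAME`, `STATUS`), the topic layout, and the `publish` callback are assumptions for illustration; a real adapter would use the client library of whichever broker you run.

```python
import json
from datetime import datetime, timezone


def crm_record_to_event(record):
    """Translate a legacy CRM row into a (topic, JSON body) pair."""
    topic = f"crm/customer/{record['STATUS'].lower()}"
    body = {
        "customer_id": str(record["CUST_NO"]),
        "name": record["CUST_NAME"].strip().title(),
        "occurred_at": datetime.now(timezone.utc).isoformat(),
    }
    return topic, json.dumps(body)


def make_adapter(publish):
    """Wrap any publish(topic, body) callable as a CRM change handler."""

    def on_crm_change(record):
        topic, body = crm_record_to_event(record)
        publish(topic, body)

    return on_crm_change


published = []  # stands in for the broker connection
adapter = make_adapter(lambda topic, body: published.append((topic, body)))
adapter({"CUST_NO": 1042, "CUST_NAME": "  ADA LOVELACE ", "STATUS": "UPDATED"})

topic, body = published[0]
print(topic, body)
```

The legacy system never learns about agents, and agents never see the legacy column names. All of the translation lives in one small function that can be tested on its own.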

“Agentic AI will require event-driven data architectures to continuously provide high-quality, relevant, and contextual data products to support the dynamic nature of agentic business activities.” (IDC, “Agentic AI Impact on Enterprises”, March 2025)

Benefits of using Event-Driven Architecture for Agentic AI

The Path Forward

The architectural patterns that transformed microservices development are equally applicable to agentic AI systems. Organizations that embrace event-driven architecture early will build more resilient, scalable, and maintainable AI systems. Those that don’t will likely find themselves facing the same challenges we encountered with tightly-coupled microservices – brittle systems that become increasingly difficult to evolve and maintain.

- Getting started is about building on the right foundation from day one: As you embark on agentic AI initiatives, establish event-driven communication as a core architectural principle from the beginning. Start with your first multi-agent use case – whether it’s document processing, customer service automation, or conversational analytics – and design agent interactions through events rather than direct calls. Even with just two agents, this approach establishes the patterns and infrastructure that will scale as your AI capabilities grow. Invest early in event infrastructure and monitoring capabilities, because refactoring later is harder and more costly than building on the right foundation from the start.

- Establish success metrics from day one: Track your time-to-production for new agent capabilities (EDA should keep this consistent even as system complexity grows), monitor your success rate for integrating external systems and data sources (event-driven systems excel at cross-domain connectivity), measure your mean time to recovery and business impact when individual agents fail (event queuing should minimize business impact), and ensure comprehensive observability coverage across all agent interactions (every event should be traceable end-to-end). These baseline measurements will demonstrate the architectural advantages of your EDA foundation as your agentic AI capabilities expand.
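If agent health changes are themselves published as events, a metric such as mean time to recovery can be derived directly from the event stream. The sketch below assumes a hypothetical `(timestamp_seconds, agent, status)` tuple format with `failed` and `recovered` statuses.

```python
def mean_time_to_recovery(events):
    """Average seconds between each agent's 'failed' and next 'recovered' event."""
    down_since = {}
    outages = []
    for timestamp, agent, status in sorted(events):
        if status == "failed":
            # Keep the first failure time if an agent reports failure repeatedly.
            down_since.setdefault(agent, timestamp)
        elif status == "recovered" and agent in down_since:
            outages.append(timestamp - down_since.pop(agent))
    return sum(outages) / len(outages) if outages else None


health_events = [
    (100, "sentiment-agent", "failed"),
    (130, "sentiment-agent", "recovered"),
    (200, "history-agent", "failed"),
    (290, "history-agent", "recovered"),
]
mttr = mean_time_to_recovery(health_events)
print(mttr)  # (30 + 90) / 2 = 60.0
```

Capturing this number for your first two agents gives you a baseline to compare against as the system grows.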

The choice seems clear: we can either learn from the microservices journey and adopt event-driven architecture from the start, or we can repeat history and spend years untangling tightly-coupled agentic systems.

For organizations ready to build production-grade agentic AI systems, platforms like Solace and frameworks like Solace Agent Mesh provide event-driven foundations specifically designed for AI workloads, helping teams avoid the architectural pitfalls that plagued early microservices adoption. The question isn’t whether event-driven architecture will become the standard for agentic AI – it’s whether your organization will be among the early adopters or caught playing catch-up later.


Ali Pourshahid is Solace's chief engineering officer, leading the engineering teams at Solace. Ali is responsible for the delivery and operation of software and cloud services at Solace, and works closely with product management on product strategy. He leads a team of incredibly talented engineers, architects, and user experience designers in this endeavor. Since joining, he's been a significant force behind Solace Cloud, Solace Event Portal, and Solace Insights products. He also played an essential role in evolving Solace's engineering methods, processes, and technology direction. Most recently, Ali has played a pivotal role in shaping and driving the company’s AI strategy, products, and adoption.

Before Solace, Ali worked at IBM and Klipfolio, building engineering teams and bringing several enterprise and cloud-native SaaS products to the market. He enjoys system design, building teams, refining processes, and focusing on great developer and product experiences. He has extensive experience in building agile product-led teams.

Ali earned his Ph.D. in Computer Science from the University of Ottawa, where he researched and developed ways to improve processes automatically. He has several cited publications and patents and was recognized as a Master Inventor at IBM.
