In this post, I will introduce you to the concepts of blocking and non-blocking publish available in some of Solace’s APIs. You will also learn when and how to use these two modes in your applications. This feature is supported only in the Java RTO, C, and .NET Solace APIs; the Java API supports only a blocking publish model. For the purpose of this post we will use Solace’s C API to demonstrate code samples and concepts, with references to documentation on how the same can be achieved using other Solace APIs.

The post also assumes the following:

- You are familiar with Solace core concepts.

- You are familiar with fundamentals of the Solace C API.

Blocking and non-blocking publish can be used with both Solace’s Direct Messaging Delivery mode and Guaranteed (a.k.a. Persistent) Messaging Delivery mode. In this post we use Direct Messaging Delivery mode, and any differences with Persistent Delivery mode are highlighted where necessary.

What is Blocking vs. Non-Blocking Publish?

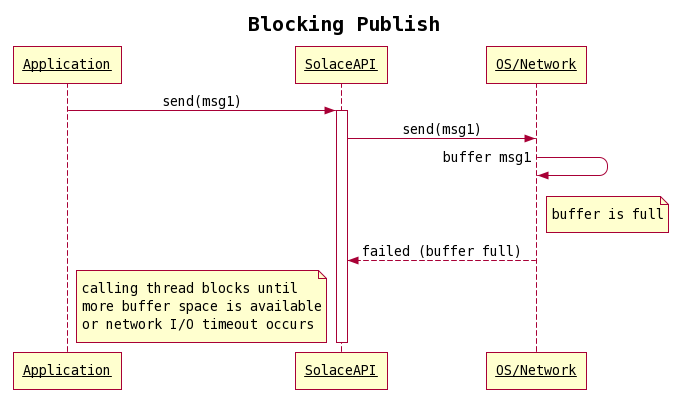

When an application publishes a message to a destination using the Solace API, it blocks on the send API method call until the underlying OS has accepted the complete message for sending on the network. Once that happens, the Solace API lets the waiting send call return, and the calling thread resumes and likely moves on to publish the next message. If the OS is unable to accept the message, for example because the network buffer on the host machine is full, the calling thread stays blocked in the Solace API send call until more buffer space becomes available or a socket timeout occurs. This is known as a blocking publish, a blocking send call, or a blocking network I/O model.

Sometimes applications would perform better if they did not block on network congestion. These applications could instead do useful work and resume sending when bandwidth is available again. For example, an application publishing indexes from a stock exchange like NYSE must handle the large message bursts common in markets and publish at high speed and low latency to keep up with how fast the stock indexes change. Such an application needs the send call to return as fast as possible and never wait when the network is unable to accept the complete message, so that it can perform other operations until the network can take messages again. In other words, the application requires a non-blocking send call. Note that a non-blocking send is not asynchronous: when buffer space is available, the call still occupies the calling thread until the complete message has been buffered by the underlying OS/network.

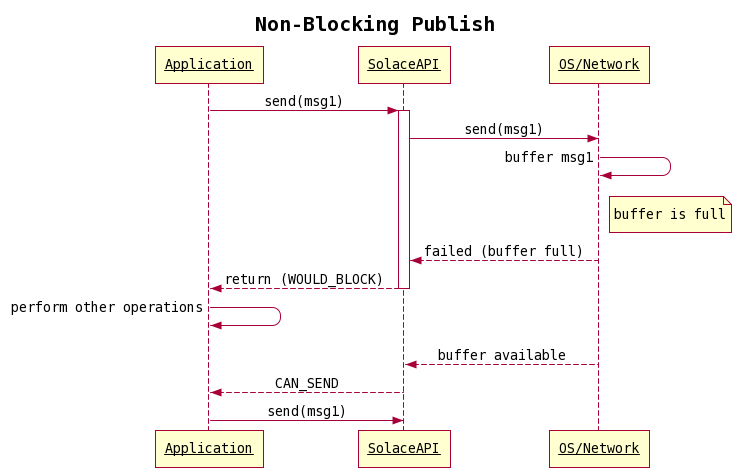

So, how does this differ from a blocking call? The difference shows up when the OS/network cannot accept the complete message on the socket without blocking, for example when the network buffer on the host machine is full. In that case the Solace API send call returns immediately with the return code WOULD_BLOCK, instead of blocking until space becomes available. This is known as a non-blocking publish, a non-blocking send call, or a non-blocking network I/O model.

In non-blocking publish mode, a WOULD_BLOCK return code therefore tells the application that the API did not send the message, because sending it would have blocked the calling thread.

Once more buffer space is available on the host machine, the API notifies the application with a CAN_SEND event on the session event callback. Upon receiving this event, the application can continue sending, starting with the message that was rejected with WOULD_BLOCK.

When to Use Blocking vs. Non-Blocking Publish Mode

Now that we know what blocking and non-blocking publish are and how they differ, let us see when to use each of these modes.

By default the API uses blocking publish mode, as it meets most application requirements and does not require extra application logic to handle the case when the calling thread would block. But there are reasons why you might choose non-blocking mode. To see why, let us take a real-world example. Suppose we are an investment bank, and our application is a Feed Bridge that receives stock ticks from an exchange like NASDAQ over multicast and publishes them internally to traders and other applications in the bank.

Now let’s say there is high volatility in the market and the bank is experiencing spikes in trading activity. During this time we cannot back pressure the multicast feed from the exchange. With bursts of trading activity on the network, our Feed Bridge host machine may not be able to push out stock ticks fast enough to keep up with the multicast feed. The I/O buffers on our host machine then fill up, the Solace API send call blocks waiting for buffer space, and this eventually back pressures our exchange feed.

This is one use case where non-blocking publish could help. Our Feed Bridge application could use the non-blocking network I/O model, and instead of waiting for the send call to return, it would be free to perform other operations until the network is ready to send more messages. In such scenarios the Feed Bridge could do either or both of the following:

- Discard older stock ticks for a symbol and publish only the latest tick for that symbol when it can send again.

- Build a vector of the messages it cannot publish in the meantime and, once the API notifies the application that it can send more messages, use the Solace API’s send-multiple feature to send the whole vector in one send API call instead of one message at a time.

This way we avoid back pressuring our exchange stock tick feed and can handle periods when network latency is too high or the traffic pattern is very bursty. But what if the vector send experiences the same problem? Should we build another vector for the vector? That would only send us around in circles. In such cases even non-blocking publish mode will not help, and you most likely have bigger problems to worry about; for example, your network may not be able to handle the rate at which the application is publishing.

How to Use the Non-Blocking Publishing Mode

Now that we know when to use these publishing modes, let us see how to enable non-blocking mode in our applications using the Solace C API and how to publish messages with it. Since the default publish mode is the blocking network I/O model, which does not require you to set any properties explicitly, we do not cover it in this post; refer to the Publish/Subscribe Getting Started tutorial for an example using the C API.

The code snippets below build upon the HelloWorldPub.c sample code provided in the Publish/Subscribe Getting Started tutorial and show only the properties and code required for non-blocking send operations. API initialization, session/context creation, etc. are out of scope for this post.

The send model is controlled by a session property. In the C API this property is SOLCLIENT_SESSION_PROP_SEND_BLOCKING, and it is enabled by default. Disable it to use the non-blocking send model, as shown below:

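```c
const char *sessionProps[20];
int propIndex = 0;

/* Other session properties are omitted here */

/* Switch the session to the non-blocking send model */
sessionProps[propIndex++] = SOLCLIENT_SESSION_PROP_SEND_BLOCKING;
sessionProps[propIndex++] = SOLCLIENT_PROP_DISABLE_VAL;

/* The property array passed to solClient_session_create() must be NULL-terminated */
sessionProps[propIndex] = NULL;
```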

To enable this in other Solace APIs, refer to their respective API documentation.

As we learned previously, in non-blocking publish mode, if the OS/network cannot send the message without blocking the calling thread, the Solace API send call returns WOULD_BLOCK to the application. The application learns that the network is ready to send more messages when it receives the CAN_SEND event, which is handled in the session event callback as shown below.

```c
/* Shared by the API context thread and the publishing thread:
   1 = OK to send, 0 = wait for CAN_SEND */
static int s_canSend = 1;

void
eventCallback ( solClient_opaqueSession_pt opaqueSession_p,
                solClient_session_eventCallbackInfo_pt eventInfo_p, void *user_p )
{
    switch ( eventInfo_p->sessionEvent ) {
        /* You can also handle other session events here */
        case SOLCLIENT_SESSION_EVENT_CAN_SEND:
            /* Notify the publishing thread that it can send messages again */
            s_canSend = 1;
            break;
        default:
            break;
    }
}
```

When the application receives the SOLCLIENT_SESSION_EVENT_CAN_SEND session event, it should notify the publishing thread that it can send messages again. On the publishing thread, if the send call returns SOLCLIENT_WOULD_BLOCK, the application should not publish more messages until it receives the SOLCLIENT_SESSION_EVENT_CAN_SEND session event. The message that returned SOLCLIENT_WOULD_BLOCK was not sent, so the application must keep it and send it again later.

```c
solClient_returnCode_t rc;

if ( s_canSend ) {
    if ( ( rc = solClient_session_sendMsg ( session_p, msg_p ) ) != SOLCLIENT_OK ) {
        if ( rc == SOLCLIENT_WOULD_BLOCK ) {
            /* msg_p was NOT sent: keep it for resending, and
               flag that we cannot send any more messages */
            s_canSend = 0;
        } else {
            printf ( "solClient_session_sendMsg() failed - ReturnCode = %s\n",
                     solClient_returnCodeToString ( rc ) );
        }
    }
} else {
    /* Buffer messages until we can send */
}
```

In the meantime, the application can buffer the messages waiting to be sent in a vector and use the Solace API call for sending multiple messages to publish them in a batch once it can send again.

Putting it All Together

Building the non-blocking publisher is simple. The following provides an example using Linux. For ideas on how to build on other platforms you can consult the README of the Solace C API library.

```shell
gcc -g -Wall -I ../include NonBlockingPublisher.c -o NonBlockingPublisher -L ../lib -lsolclient
```

The -I and -L paths must point to the include and lib directories of the downloaded C API library. If you start NonBlockingPublisher with a single argument, the Solace message router host address, it will connect and start publishing messages.

```
$ LD_LIBRARY_PATH=../lib:$LD_LIBRARY_PATH ./NonBlockingPublisher <<HOST_ADDRESS>>
NonBlockingPublisher initializing...
Connected.
Publishing messages in Non-Blocking mode...
** Send failed - WOULD_BLOCK - Num Msgs Sent = 20652
Session EventCallback() called: Ready to send (CAN_SEND) - Resuming publish
Done - Messages Sent = 50000. Exiting.
```

Summarizing

I have shown you that an application can use a blocking or non-blocking network I/O model for send operations. Both modes behave the same except when the OS/network cannot accept the whole message without blocking the calling thread until more buffer space is available. In that case, in non-blocking mode the send operation returns immediately with a WOULD_BLOCK return code. The message is not sent, and the application must wait for a CAN_SEND session event, which indicates that the network is ready to send again.

The non-blocking network I/O model is useful when your application cannot wait on send operations, for example when network latency is sometimes too high or the traffic pattern is very bursty. In these scenarios, the OS may at times be unable to send the message on the network. We have also seen how to enable this mode, check return codes on the send operation, and how the Solace API notifies the application that the network is ready to send messages.

More details on the two modes can be found in the Solace Messaging APIs Developer Guide and the API-specific documentation.


Dishant Langayan
