Adding machine learning (or artificial intelligence) capabilities to your application is easier than it has ever been. The major cloud computing providers offer these capabilities in an easy-to-consume manner, so you can start building applications quickly.

However, did you know the actual results across the providers can vary greatly?

I decided to build a demo application that uses two machine learning capabilities to analyze BBC News articles and compare results across the big three cloud providers: Amazon’s AWS, Microsoft’s Azure and Google’s Cloud Platform.

You can try the application yourself at http://london.solace.com/cloud-analytics/machine-learning.html

What is Machine Learning?

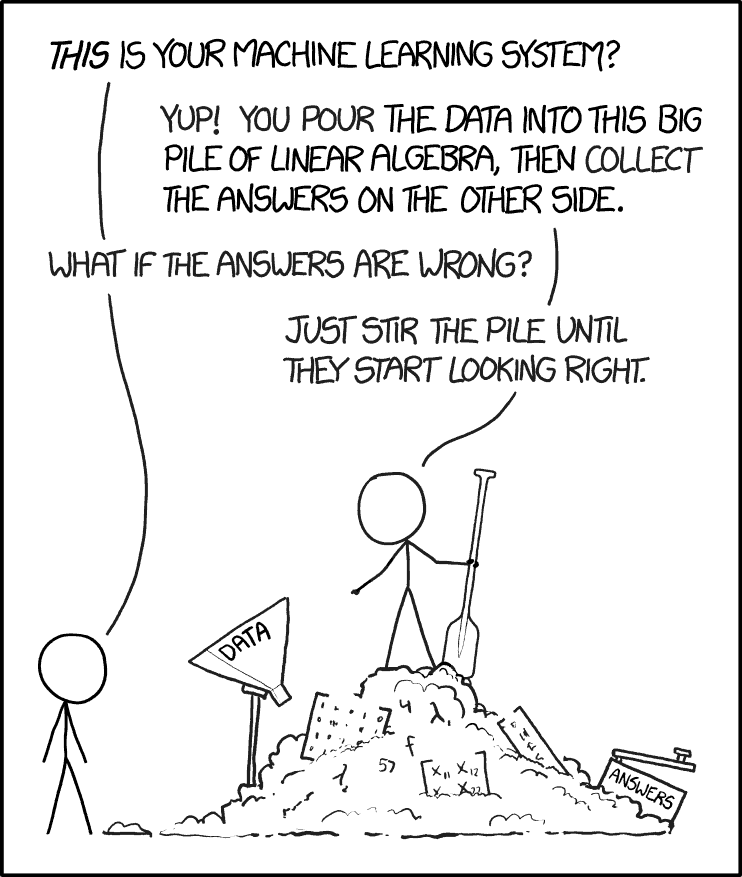

Machine Learning (ML) is a branch of Artificial Intelligence (AI), although the terms are often used interchangeably. It describes the ability of computers to “learn” how to perform a task without being explicitly told how to do it, as was necessary in the past. It is unlocking a whole range of new possibilities. I recently came across a description that sums up this step-change in computing very well:

“Computers have been able to read text and numbers for decades, but have only recently learned to see, hear and speak.”

For my demo application I focused on two ML capabilities: Image Analysis (a.k.a. Computer Vision) and Sentiment Analysis (an application of Natural Language Processing).

What is Image Analysis?

Put simply, and going back to the earlier quote, Image Analysis is the ability of computers to “see”. More concretely, this ‘Computer Vision’ is how faces are detected within photos, or how a self-driving car continually processes its surroundings to detect a pedestrian crossing the road ahead.

In my application I use this capability to produce ‘labels’ that annotate the main photo accompanying the BBC News article. In effect, the labels describe the contents of the photo to someone who cannot see it.

What is Sentiment Analysis?

Sentiment Analysis is simply categorising a piece of writing as positive, neutral or negative in its content. An example use would be an application that automatically processes product feedback left by a user and flags it for follow-up if it seems negative. A marketing department may also wish to monitor live tweets and have its team intervene if negative tweets are affecting its brand.
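The feedback-flagging idea can be sketched in a few lines. This is a minimal illustration, not code from the demo application: the `SentimentResult` type, the `needs_follow_up` function, the 0.6 confidence cut-off and the sample feedback are all hypothetical, and the results are written as if a sentiment service had already returned them.

```python
from dataclasses import dataclass


@dataclass
class SentimentResult:
    label: str         # "positive", "neutral" or "negative"
    confidence: float  # 0.0 to 1.0, as reported by whichever provider is used


def needs_follow_up(result: SentimentResult, min_confidence: float = 0.6) -> bool:
    """Flag feedback only when it is negative and the service is reasonably sure."""
    return result.label == "negative" and result.confidence >= min_confidence


# Hypothetical results, as if already returned by a sentiment service.
feedback = {
    "Arrived broken and support never replied.": SentimentResult("negative", 0.93),
    "Does what it says.": SentimentResult("neutral", 0.71),
    "Not sure how I feel about the new design.": SentimentResult("negative", 0.41),
}

# Keep only the feedback texts that should be routed to a human.
flagged = [text for text, result in feedback.items() if needs_follow_up(result)]
print(flagged)
```

The confidence cut-off is a design choice: a lower value catches more unhappy customers at the cost of more false alarms.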

In financial services, political and business news can move markets, so sentiment analysis of live news may be an input into automated trading engines that respond to world events in real-time.

In my application I use this capability to ‘read’ the headline and description of the BBC News article and ascertain its sentiment. In other words, is the news about a given event positive, neutral or negative?

Observations so far…

Not All Machines Are Created Equal

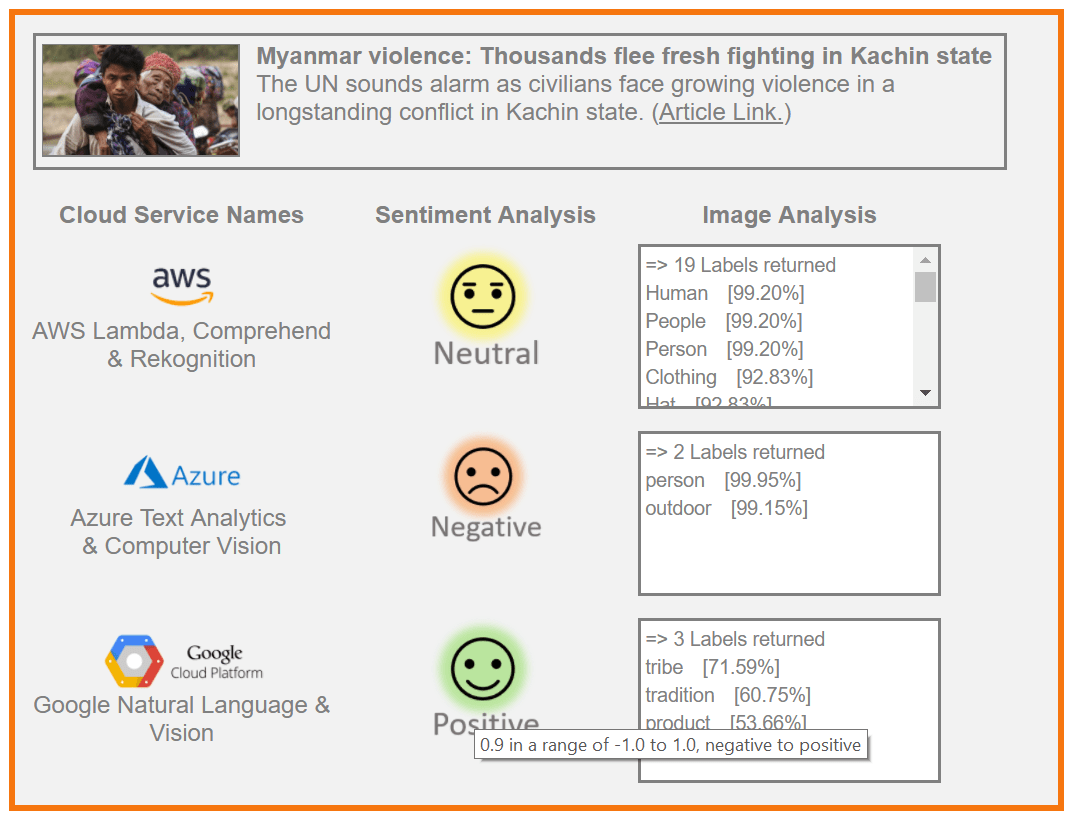

As mentioned at the beginning, I was surprised to see the differences in results across the three providers. Below is an example where the providers did not agree on the sentiment of the article:

As humans, it is quite clear to us that this is a sad and distressing piece of news, yet Google’s Natural Language service deemed it positive in sentiment. In fact, the score is 0.9, where 1.0 represents the maximum positive sentiment. It is surprising that phrases such as “fresh fighting”, “growing violence” and “conflict” have not led it firmly into negative sentiment territory. Azure’s Text Analytics did best in this example.

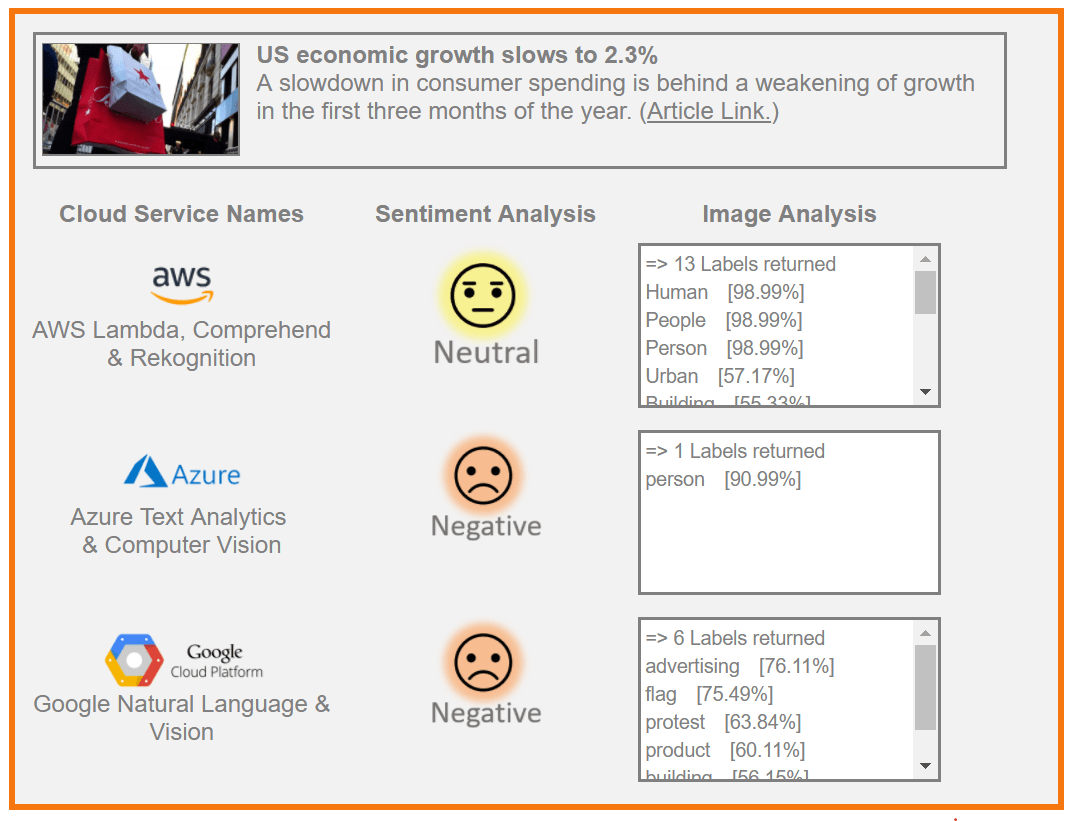
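To compare a numeric score with the positive/neutral/negative labels used elsewhere, the score has to be bucketed. Google’s service reports sentiment as a score between -1.0 and 1.0. The sketch below shows one way to turn such a score into a label; the function name `label_from_score` and the 0.25 threshold are my own assumptions, not values taken from Google or from the demo application.

```python
def label_from_score(score: float, threshold: float = 0.25) -> str:
    """Bucket a sentiment score in [-1.0, 1.0] into a three-way label.

    The threshold is an assumed cut-off: scores within +/- threshold of
    zero are treated as neutral.
    """
    if not -1.0 <= score <= 1.0:
        raise ValueError(f"score must be between -1.0 and 1.0, got {score}")
    if score >= threshold:
        return "positive"
    if score <= -threshold:
        return "negative"
    return "neutral"


# The 0.9 score discussed above lands firmly in the positive bucket.
print(label_from_score(0.9))
```

Whatever threshold is chosen, a score of 0.9 for an article about growing violence would still come out as positive, so the disagreement is not an artefact of the bucketing.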

The neutral sentiment determined by AWS’s Comprehend is also worth highlighting here. That service often seems to play it safe, with an ‘on-the-fence’ assessment of ‘neutral’ being its dominant stance:

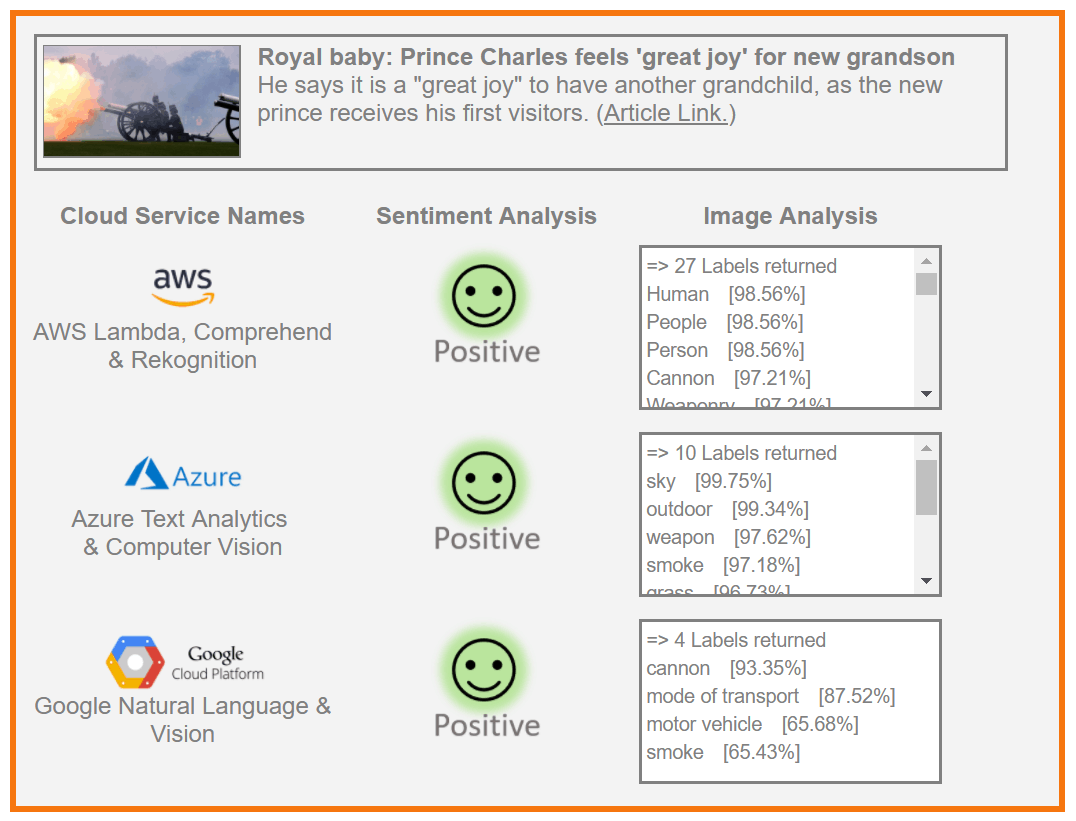
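Comprehend returns a confidence score for each of four classes (Positive, Negative, Neutral, Mixed) alongside its overall verdict, so it is possible to see how close a ‘neutral’ call really was. The sketch below picks the dominant class and its margin over the runner-up; the function name `dominant_sentiment` and the sample numbers are invented for illustration and are not results from the demo application.

```python
from typing import Mapping, Tuple


def dominant_sentiment(scores: Mapping[str, float]) -> Tuple[str, float]:
    """Return the highest-scoring class and its margin over the runner-up."""
    if len(scores) < 2:
        raise ValueError("need at least two classes to compute a margin")
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    (top_label, top_score), (_, second_score) = ranked[0], ranked[1]
    return top_label, top_score - second_score


# Hypothetical per-class scores shaped like a Comprehend SentimentScore.
sample = {"Positive": 0.02, "Negative": 0.41, "Neutral": 0.55, "Mixed": 0.02}

label, margin = dominant_sentiment(sample)
print(label, round(margin, 2))
```

A small margin, as in this made-up sample, would suggest the service was nearly as inclined towards ‘negative’ as towards ‘neutral’, which is useful context when the headline verdict looks overly cautious.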

On a happier note, it does seem quite universal, though, that everyone loves news of a new Royal Baby:

A Picture Paints How Many Words? Well I’ll Tell You…

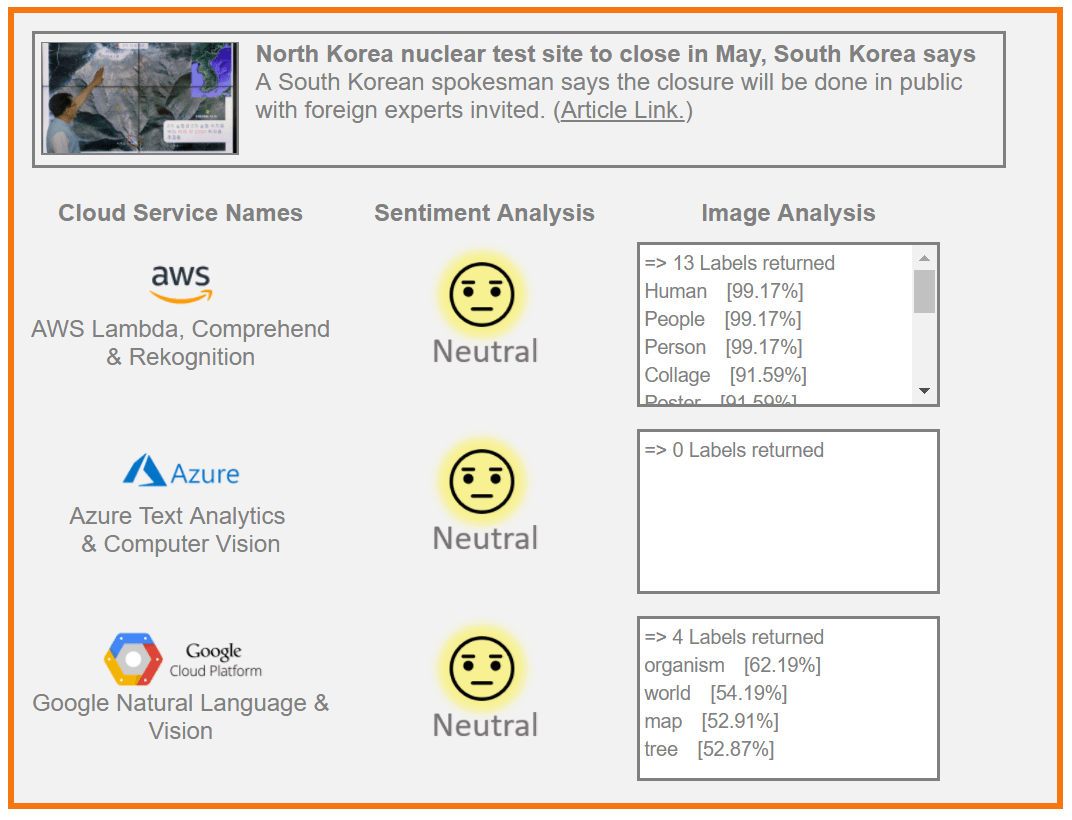

As you’ve probably noticed, the previous three examples also show quite a difference in the Image Analysis capability.

The trend appears to be that AWS’s Rekognition yields many more labels per image than Azure’s Computer Vision and Google’s Vision services. A quick scan shows most of the labels to be relevant to the image too. In fact, compared to the ‘on-the-fence’ approach of AWS’s Sentiment Analysis, its Image Analysis capability is a lot more aggressive and offers up more ‘inferred’ labels that are likely to be associated with the image.

For example, the Royal Baby article has an image of the Royal Horse Artillery firing a 41-gun salute. While just the fired gun, smoke plume and three service personnel can be seen, Rekognition produced a whopping 27 labels for the image:

“Human, People, Person, Cannon, Weaponry, Smoke, Airport, Weapon, Airfield, Engine, Locomotive, Machine, Motor, Steam Engine, Train, Transportation, Vehicle, Aircraft, Airplane, Jet, Military, Army, Military Uniform, Soldier, Team, Troop, Warplane”.
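To get a feel for how much of that list is inference rather than observation, the labels can be split programmatically. The sketch below parses the label string quoted above and separates out the labels that name trains, aircraft or airfields, none of which are in the photo as described. The choice of which labels count as off-target is my own hand-picked assumption, not something the service reports.

```python
# The label list exactly as quoted in the article.
RAW_LABELS = (
    "Human, People, Person, Cannon, Weaponry, Smoke, Airport, Weapon, "
    "Airfield, Engine, Locomotive, Machine, Motor, Steam Engine, Train, "
    "Transportation, Vehicle, Aircraft, Airplane, Jet, Military, Army, "
    "Military Uniform, Soldier, Team, Troop, Warplane"
)

# Assumption: a hand-picked set of labels that do not match a photo of
# a fired gun, a smoke plume and three service personnel.
OFF_TARGET = {
    "Airport", "Airfield", "Locomotive", "Steam Engine", "Train",
    "Aircraft", "Airplane", "Jet", "Warplane",
}

labels = [label.strip() for label in RAW_LABELS.split(",")]
off_target = [label for label in labels if label in OFF_TARGET]
remaining = [label for label in labels if label not in OFF_TARGET]

print(len(labels), len(off_target), len(remaining))
```

Under this admittedly subjective split, roughly a third of the labels describe things that are not in the picture, which is the trade-off of a service that volunteers many inferred labels.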

Azure and Google seem to be more comparable with each other in that fewer (more focused?) labels are produced. That is, if the service is not completely stumped and produces nothing, as Azure did here:

To Conclude…

This was a very high-level summary of my findings so far. It is in no way a comprehensive review. The demo application is available for you to submit your article choices for analysis and better form your conclusions at http://london.solace.com/cloud-analytics/machine-learning.html

As always, the correct selection of Machine Learning provider will come down to your own usage requirements. For instance, those requirements will determine if a verbose set of labels produced for an image is useful, or whether less is actually more.

One thing we can perhaps take from this is that Machine Learning has made great leaps forward, but we are a long way from intelligent robots taking all our jobs! The systems still need a little more stirring:

Please do let me know what you thought. I have a program on standby to perform sentiment analysis of the comments on this post and automatically reply with the relevant emoji.

***

Jamil Ahmed
