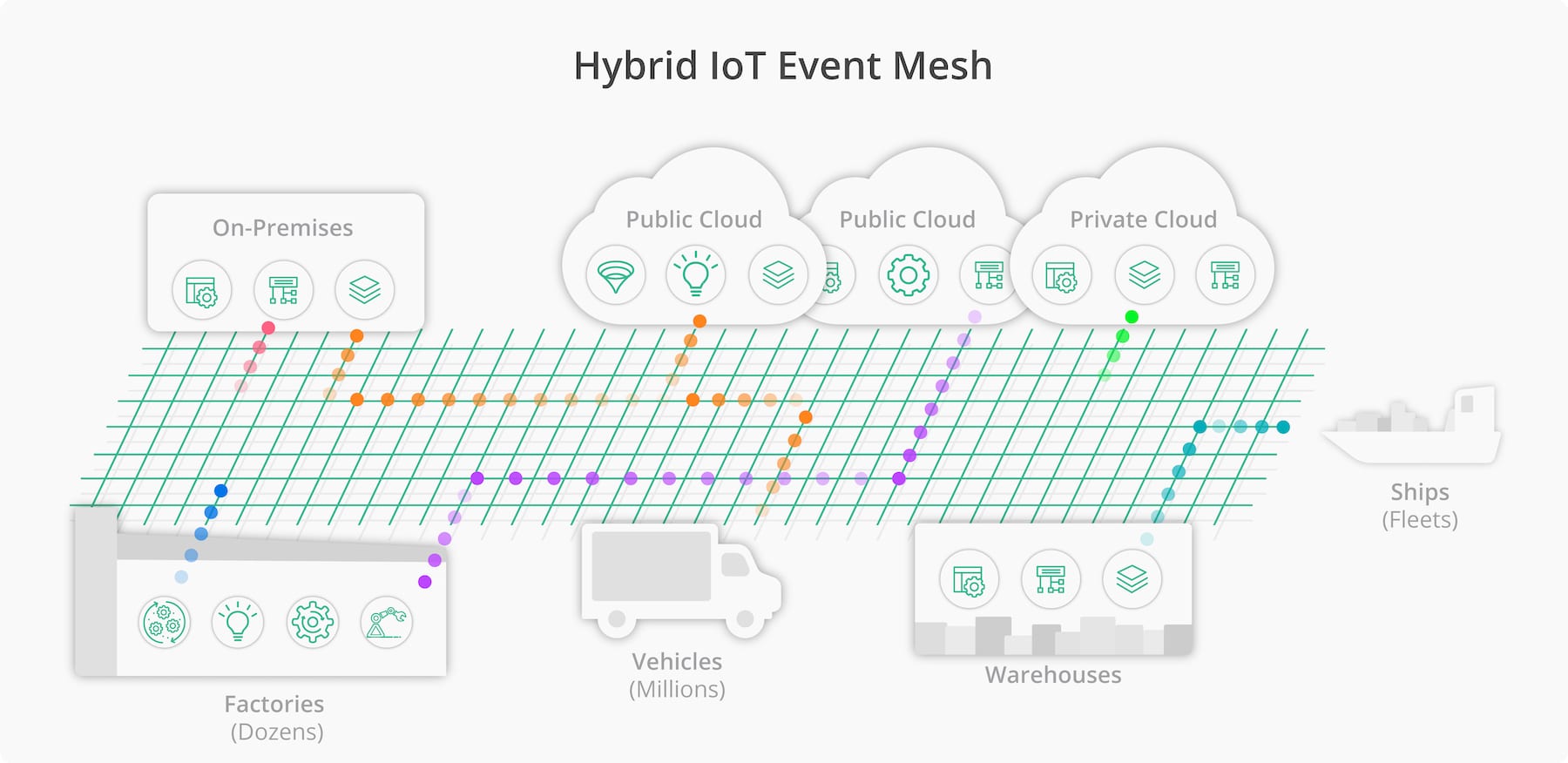

Summary: An event mesh is a foundational layer of technology that supports modern digital manufacturing use cases by enabling the fast, reliable and secure movement of events and other data, within and across plants, HQ (data centers), clouds, and transportation networks. For large manufacturers with diverse technologies and value chains distributed across the globe, an event mesh can be the common data dial tone ensuring that events are routed from where they are created to where they need to be.

Event Mesh 101

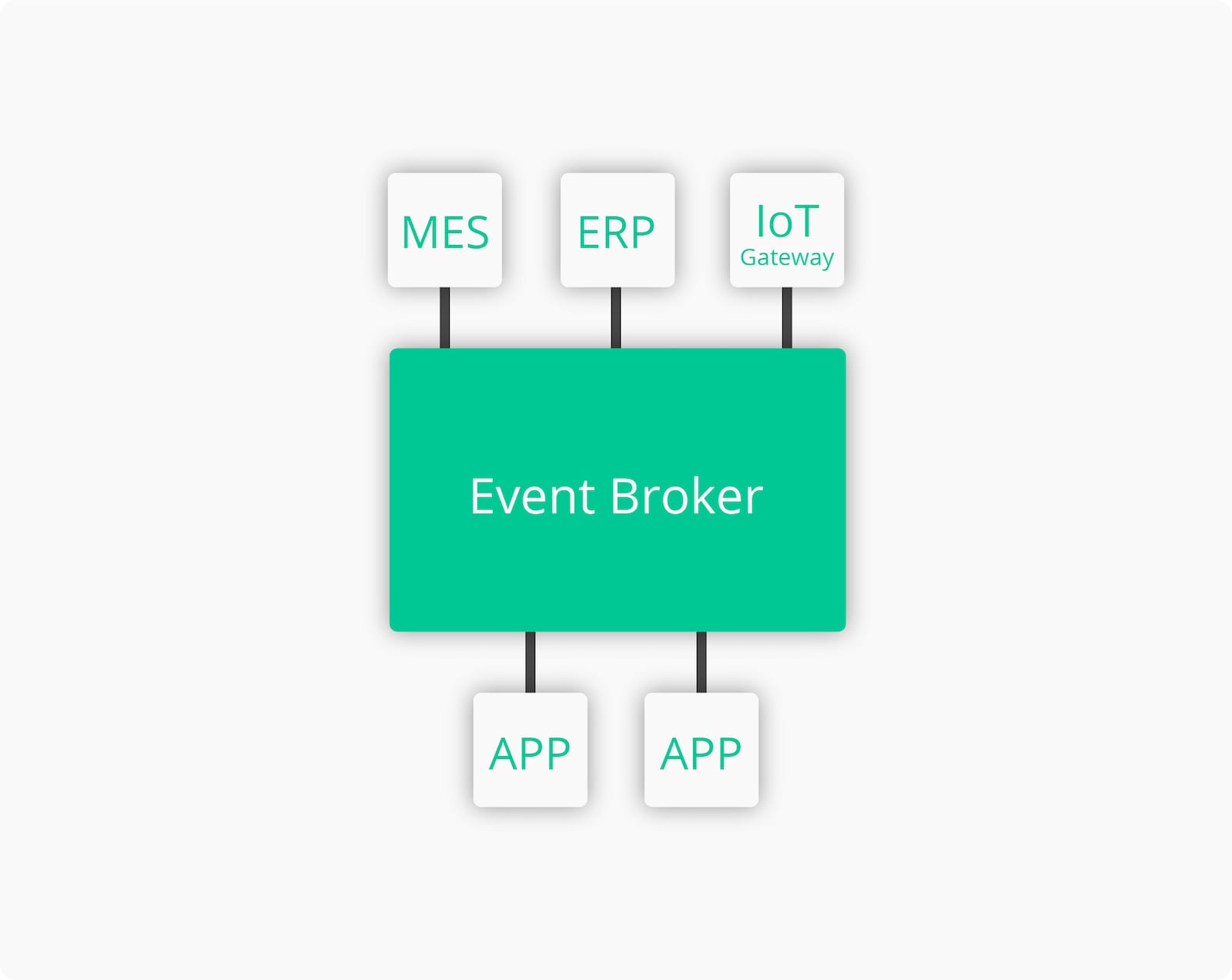

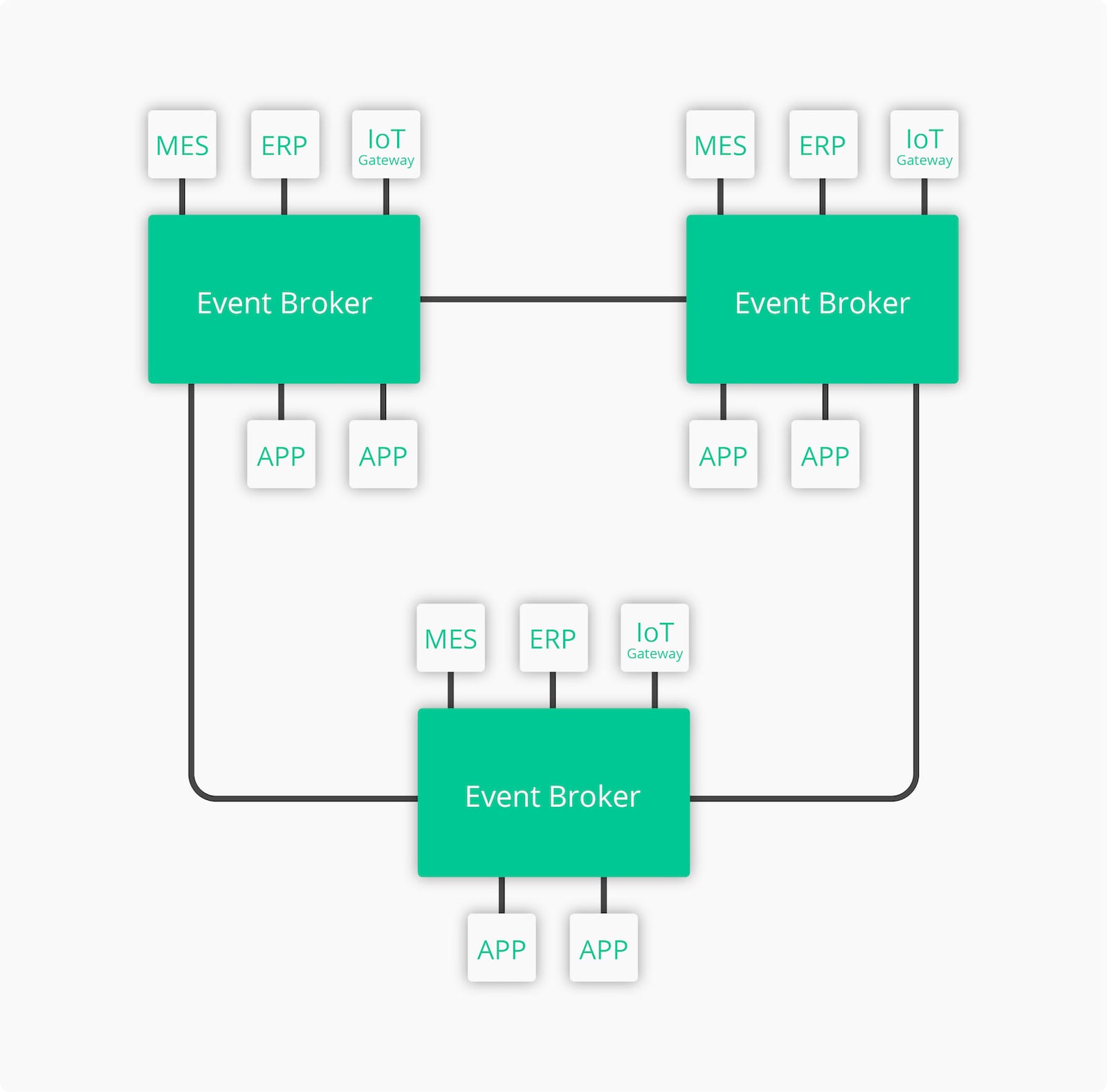

Before we explore the digital manufacturing transformation use case, it’s critical to understand what an event mesh is and how it works. An event mesh is composed of a network of interconnected event brokers. Event brokers are modern messaging middleware uniquely designed to enable event-driven apps and architectures, with support for the publish-subscribe messaging pattern, and more. This is what makes an event mesh the foundational layer enabling data movement across every environment and component of a distributed enterprise (hybrid cloud, multi-cloud, IoT, etc.).

Here’s how it works: an event broker will be deployed in an environment, and various applications (including microservices, devices, and edge gateways) will connect to it. Some will publish events (and other data) to it, and some will subscribe to various events. Then, as events get published and pushed to the broker, applications that have subscribed to those events will receive them, without delay. That’s the story for a single, local environment.
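
To make the publish-subscribe flow concrete, here is a minimal sketch of a single local broker. It is a toy in-memory model, not any vendor's API: the `Broker` class, its method names, and the topic strings are all assumptions made for illustration, and delivery is a plain synchronous function call.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

Handler = Callable[[str, dict], None]


class Broker:
    """Toy in-memory event broker (hypothetical): exact-match topics, synchronous delivery."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        # Register interest in a topic; the handler is called for each matching event.
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> int:
        # Push the event to every subscriber of the topic and return the delivery count.
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(topic, event)
        return len(handlers)


received = []
broker = Broker()
broker.subscribe("plant1/press4/temperature", lambda t, e: received.append((t, e)))

delivered = broker.publish("plant1/press4/temperature", {"celsius": 71.5})
ignored = broker.publish("plant1/press4/vibration", {"mm_s": 2.1})  # nobody subscribed
```

The publisher never names its consumers; it only names a topic. That decoupling is what lets applications be added or removed without touching the publisher.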

Now imagine this happening in multiple environments, including data centers, public clouds, and private clouds, and across different geographies and cloud regions. Because the event brokers running in the different environments are interoperable, they connect to one another and route events and other data across every environment. An application might live, for example, in a public cloud and connect to a broker in that cloud, yet subscribe to, and receive without delay, events published by an application that lives in a data center on the other side of the world. That’s an event mesh at work.
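
The cross-environment routing described above can be sketched in a few lines. This is a simplified model under stated assumptions: `MeshBroker`, the broker names, and the topic are hypothetical, brokers are directly linked to each other, and a broker checks a peer's interest by calling it directly, where a real mesh would propagate subscriptions over the network.

```python
from collections import defaultdict


class MeshBroker:
    """Toy broker that forwards events to linked peers with interested subscribers."""

    def __init__(self, name):
        self.name = name
        self.peers = []
        self.local = defaultdict(list)  # topic -> list of local handlers

    def link(self, other):
        # Create a bidirectional link between two brokers.
        self.peers.append(other)
        other.peers.append(self)

    def subscribe(self, topic, handler):
        self.local[topic].append(handler)

    def has_interest(self, topic):
        # Stand-in for subscription propagation between brokers (an assumption).
        return bool(self.local.get(topic))

    def publish(self, topic, event, _from_peer=False):
        # Deliver to local subscribers first.
        for handler in self.local.get(topic, []):
            handler(topic, event)
        # Forward once to interested peers; events received from a peer are not
        # re-forwarded, which prevents loops in this directly linked topology.
        if not _from_peer:
            for peer in self.peers:
                if peer.has_interest(topic):
                    peer.publish(topic, event, _from_peer=True)


data_center = MeshBroker("data-center")
public_cloud = MeshBroker("public-cloud")
data_center.link(public_cloud)

cloud_inbox = []
public_cloud.subscribe("plant/line2/status", lambda t, e: cloud_inbox.append(e))

# Published in the data center, received by the subscriber in the cloud.
data_center.publish("plant/line2/status", {"state": "running"})
data_center.publish("plant/line2/debug", {"msg": "noise"})  # no interest, not forwarded
```

The publishing application only ever talks to its local broker; where the subscribers live is the mesh's concern.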

With that as our grounding, let’s see how an event mesh supports modern digital manufacturing use cases.

An event mesh applied to digital manufacturing use cases and transformation projects

We can look at digital manufacturing transformation projects through four primary use cases:

- in-plant;

- between plants and central HQ/private cloud(s);

- between plants and central HQ/public cloud(s); and,

- tracking and tracing of materials across global supply chains.

Let’s look at how an event mesh can be applied in each context.

In-plant

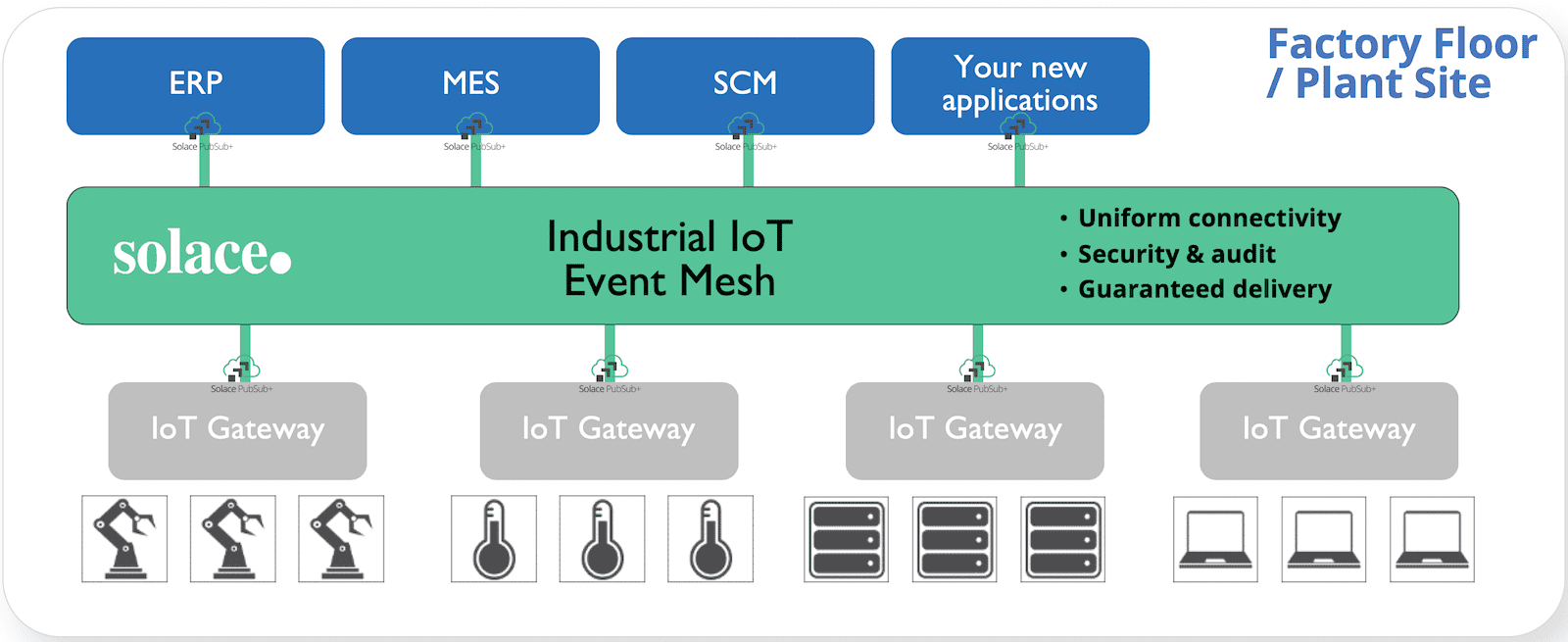

In-plant, modern manufacturers are working to better integrate operational technology (OT)—and to integrate OT and IT—to optimize the maintenance, reliability, performance, and command-and-control of machines and equipment. An example is extracting the rich, raw data in machine tools and running it through applications and analytics engines to ensure optimal machine utilization and maintenance.

But a major challenge is that a plant can have a variety of equipment and machines using many different sensors and IoT gateways, technologies from different eras (Modbus, SCADA, PLC, DDS, OPC UA) and vendors (Siemens, Honeywell, Rockwell), and a variety of in-plant applications (MES, SCM, historians like OSIsoft PI System), many of which may not communicate easily with one another.

An event mesh will support event-driven data movement between these disparate technologies in-plant. Event brokers can be deployed to sit between IoT gateways (and in some cases put onto IoT gateways) from one or multiple vendors (Siemens SIMATIC, Dell Edge Gateway, Bosch Rexroth, etc.), and between a variety of in-plant applications (ERP, SCM, MES, PPM), providing a unified event and data distribution layer across technologies.

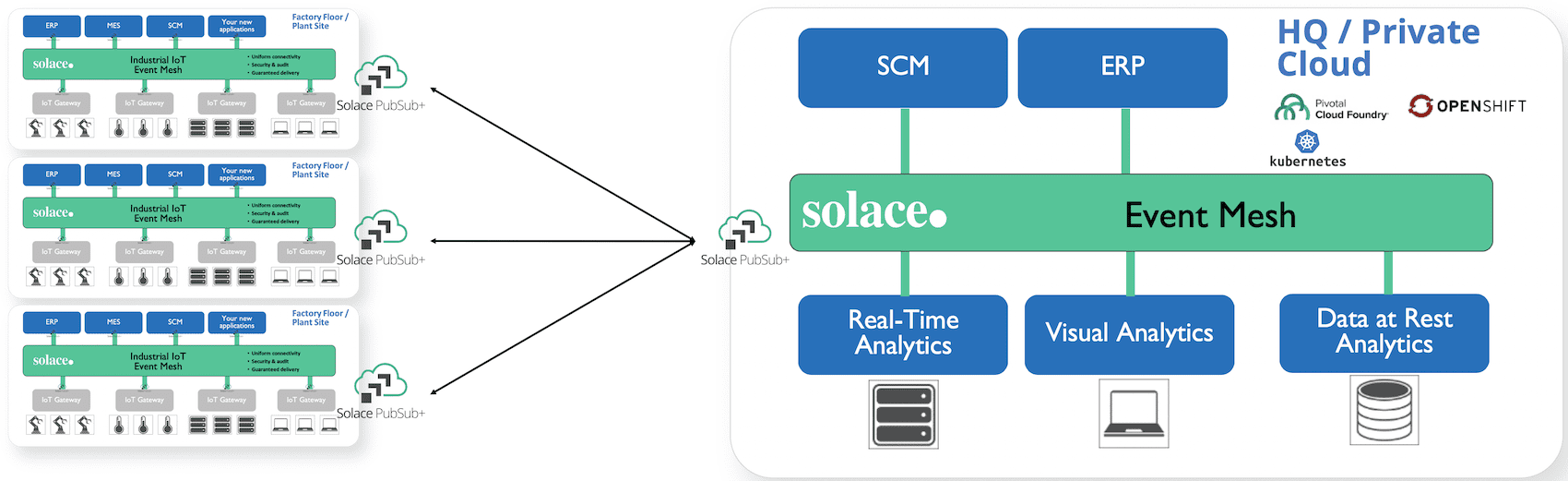

Plants to central HQ/private cloud(s)

Global manufacturers are also working to integrate different manufacturing sites (owned and contracted), HQ applications (such as ERP, CRM, SCM) and HQ private clouds for real-time analytics, to feed data lakes for data-at-rest analytics, and to monitor the quality and status of equipment and production processes—all for better business outcomes. And it’s not only data ingest that needs to be supported. Data, events and commands also need to flow from back-end systems to plants to optimize the health, performance and reliability of in-plant applications, sensors and devices.

Consider a few examples, such as having an HQ ERP system creating service tickets for machines in various plants, based on data that’s being streamed in about degrading OT components. New machine components could be ordered and shipped to the plants, and the machine components upgraded, before the machines fail.

Consider the implications of being able to correlate real-time production data from various plants; you could streamline and realize cost efficiencies in warehousing, storage, shipment, and more.

Consider the critical ability to detect and respond to new allergens in your supply chain, at speed.

All these opportunities demand real-time, bi-directional data flow, which can prove challenging. Data transport and routing can present significant obstacles due to the variety of data formats, APIs and protocols used by different applications and systems. Streaming data from globally distributed facilities can also be costly, especially without edge intelligence to reduce the amount of noise being sent to the cloud.

An event mesh is a solution to these problems. Event brokers can be deployed in any or all plants and in a central HQ (data center/private cloud), and connected to form an event mesh that spans them all. Events and other data can then be pushed from local applications and gateways to local brokers, which route them to subscribing applications no matter where they live on the mesh.

If the event brokers that compose your mesh are advanced, they can also do things like:

- Provide for standard protocol translation. For example, local brokers might receive event notifications and transmit them to regional or central HQ brokers over protocols such as MQTT or AMQP, where they are then sent to back-end systems/apps via JMS.

- Enable edge intelligence for filtering and processing events and other data at the edge, so that only the events and data that are actually being subscribed to are sent beyond the local environment.

- Provide for WAN optimization to reduce costs and increase performance.

- Provide security and data access controls across the mesh.
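
As one illustration of the edge-filtering capability in the list above, the sketch below forwards an event upstream only if it matches a subscription held beyond the plant. It assumes MQTT-style topic wildcards (`+` for one level, `#` for all remaining levels); the function names, topics, and payloads are hypothetical, and a real broker would do this matching internally.

```python
def topic_matches(pattern: str, topic: str) -> bool:
    """Return True if an MQTT-style topic filter matches a concrete topic."""
    p_levels = pattern.split("/")
    t_levels = topic.split("/")
    for i, p in enumerate(p_levels):
        if p == "#":
            return True  # multi-level wildcard: matches everything from here on
        if i >= len(t_levels):
            return False  # pattern is longer than the topic
        if p != "+" and p != t_levels[i]:
            return False  # literal level does not match
    return len(p_levels) == len(t_levels)


def filter_for_uplink(events, remote_subscriptions):
    """Keep only the events that some remote subscription actually asks for."""
    return [
        (topic, payload)
        for topic, payload in events
        if any(topic_matches(p, topic) for p in remote_subscriptions)
    ]


# Events produced inside the plant (illustrative values).
local_events = [
    ("plant1/press4/temperature", {"celsius": 71.5}),
    ("plant1/press4/alarm", {"code": "OVERHEAT"}),
    ("plant1/robot2/heartbeat", {"ok": True}),
]

# HQ has subscribed only to alarms from any machine in plant1.
uplink = filter_for_uplink(local_events, ["plant1/+/alarm"])
```

Here only the alarm leaves the plant; temperature readings and heartbeats stay local, which is the noise reduction the list refers to.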

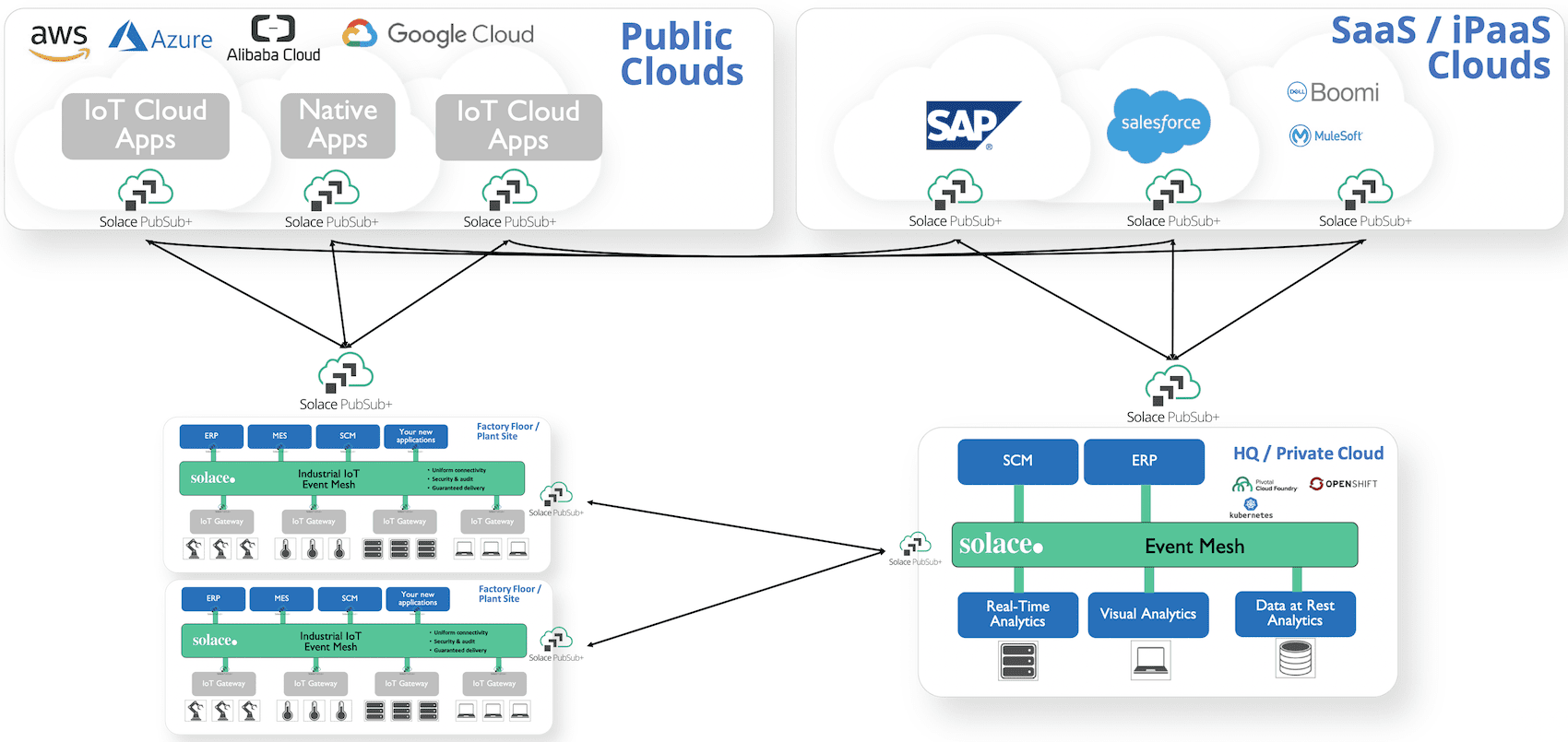

Plants to central HQ/public cloud(s)

Another set of transformation opportunities and challenges relates to the integration of HQ and in-plant applications with public clouds and the services they offer. To leverage modern tooling, maintenance, storage, operations, and pay-as-you-go pricing, many manufacturers are creating new cloud-native applications and microservices, as well as migrating existing on-premises applications to the cloud. Cloud data lakes and cloud services for AI and machine learning promise to support modern use cases, like shifting from reactive to predictive processing, and from reactive to preventative maintenance.

Working with one public cloud is usually pretty straightforward, and that’s where you’re likely to start. You’ll adopt a public cloud and/or an IoT platform like Siemens’ MindSphere and start developing new apps within that environment. Over time you may start migrating legacy apps to that public cloud and begin experimenting with cloud services.

All good.

But then you’ll realize that you need a feature or capability that’s not offered by the public cloud you’re in, or you’ll want a more advanced version of a service that’s offered elsewhere. You’ll want more flexibility, the ability to mix and match capabilities from different cloud environments, and the freedom to move data and apps wherever you want. In short, you’ll want to deploy a hybrid and multi-cloud architecture and enable data to flow easily and efficiently across it.

With an event mesh, working in and across multiple public clouds and cloud regions, and integrating cloud-native apps and services with legacy systems, is straightforward. Event brokers can be deployed natively in different public cloud environments (AWS, GCP, Azure) and on-premises, and connected to form a hybrid, multi-cloud event mesh. With an event mesh set up in this fashion, you can easily:

- Leverage multiple public cloud services, from different public clouds

- Add, remove and move apps throughout your system, without disrupting the system

- Migrate to the cloud in a stepwise fashion

- Integrate IoT

- Set up robust disaster recovery, leveraging your multi-cloud architecture

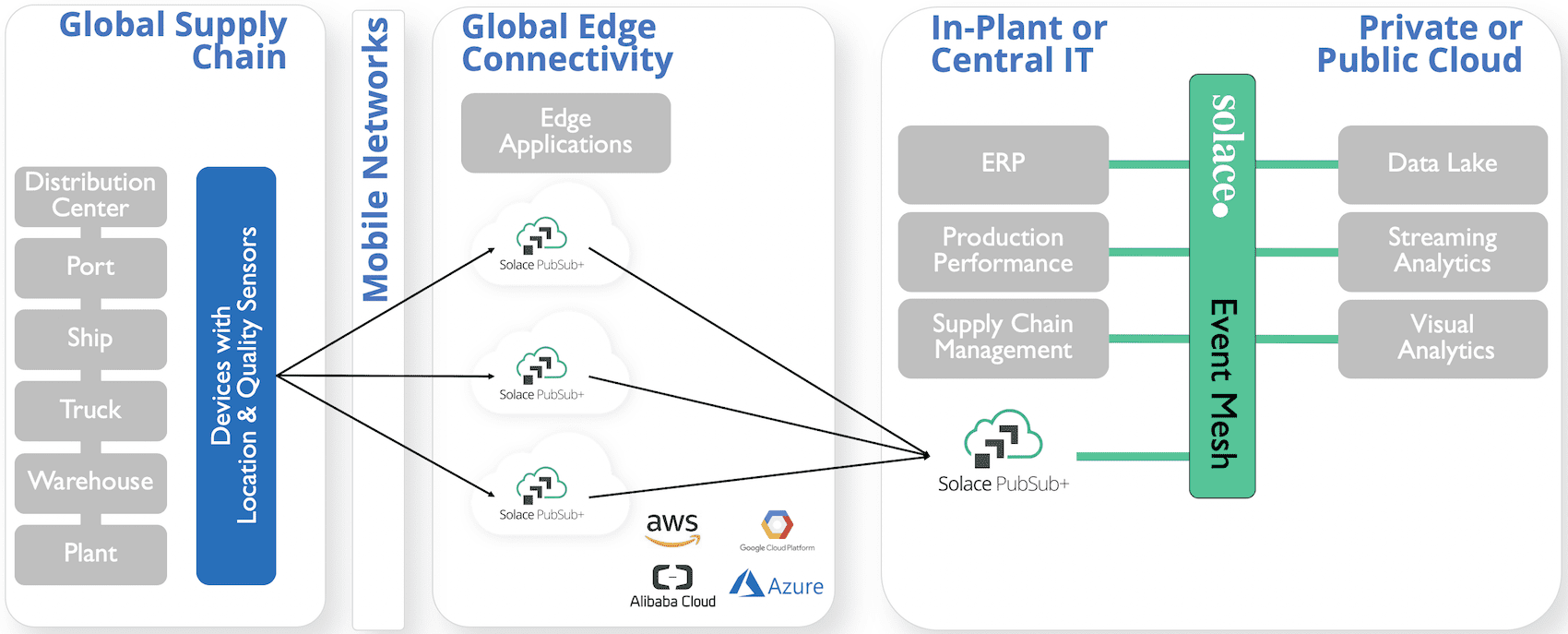

Track and trace materials across global supply chains

Another important objective of digital manufacturing transformation is to support the tracking and tracing of materials across global supply chains. We are seeing scenarios today where sensors are being deployed on every container or pallet on a ship (or truck or plane… or multiple), to follow materials and products in transit, monitor the environment and condition of the material in transit (measuring humidity, temperature, etc.), and to modify the speed, direction and timing of the transport itself.

Think about the ship that will slow down to save fuel when it receives a notification that its berth will be too busy to receive it at its original arrival time. Or the packaging manager who can efficiently allocate staff and equipment to receive a new shipment the moment it arrives at the facility. Or the CIO or CSCO who can lead a strategy conversation with real-time, historical and predictive views of the company’s global logistics. Millions of sensors are being deployed with these outcomes in mind.

But there are challenges.

One major challenge is ensuring data delivery despite intermittent connectivity. After all, sensors deployed in this fashion will travel through multiple and often erratic mobile networks, as well as Wi-Fi networks; sometimes they’ll be online and sometimes they’ll be offline. This risks the loss of events and other data before they are delivered.

With advanced event brokers and an event mesh deployed, this challenge can be overcome. Event brokers can be deployed on a truck or ship or plane, and connected to receive all of the event notifications from the sensors on the transport. When the broker is connected to a mobile network, these events will be published to the event mesh. When mobile connectivity is unavailable, the events will get buffered by the broker and sent whenever connectivity is restored.
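
The buffer-then-forward behavior described above can be sketched as follows. This is an illustrative model only: `StoreAndForwardBroker`, its `send` callback, and the event fields are assumptions, and the buffer is a bounded in-memory queue that drops the oldest event when full, whereas a production broker would typically persist buffered events to disk.

```python
from collections import deque


class StoreAndForwardBroker:
    """Toy on-vehicle broker: buffers events while offline, flushes when online."""

    def __init__(self, send, max_buffered=10_000):
        self._send = send  # callable that delivers one event to the mesh (assumed)
        self._buffer = deque(maxlen=max_buffered)  # oldest events dropped when full
        self.connected = False

    def publish(self, event):
        # Always enqueue first so ordering is preserved across outages.
        self._buffer.append(event)
        if self.connected:
            self.flush()

    def flush(self):
        # Send in arrival order; remove an event only after send() returns,
        # so a failed send leaves it in the buffer for the next attempt.
        sent = 0
        while self._buffer:
            self._send(self._buffer[0])
            self._buffer.popleft()
            sent += 1
        return sent

    def set_connected(self, connected):
        self.connected = connected
        if connected:
            self.flush()


mesh_inbox = []
truck_broker = StoreAndForwardBroker(send=mesh_inbox.append)

# Out of coverage: sensor readings are buffered on the truck.
truck_broker.publish({"container": "C-17", "temp_c": 4.2})
truck_broker.publish({"container": "C-17", "temp_c": 4.6})
buffered_while_offline = list(mesh_inbox)

# Coverage restored: the backlog is delivered in order.
truck_broker.set_connected(True)
truck_broker.publish({"container": "C-17", "temp_c": 4.4})
```

The sensors keep publishing to the local broker regardless of connectivity; only the broker needs to know whether the uplink is available.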

And with an event mesh enabling events to be routed efficiently between these transports, plants, warehouses, HQs and public clouds, digital manufacturers will be able to track and trace their materials throughout their global supply chain. They will also gain a better understanding of the choreography of that supply chain, so they can test and improve it to meet rising customer expectations (for example, lot-size-one production).

In summary, global manufacturers are innovating to meet rising consumer expectations, and an event mesh is an architectural layer that can enable global manufacturing innovation and transformation by helping manufacturers move events and other data:

- between applications, devices, assembly lines, buildings, fleets, containers and more;

- across hybrid cloud and multi-cloud architectures;

- in an event-driven fashion;

- at IoT scale; and,

- with industrial strength reliability, performance and security.


Chris Wolski
