With most companies in the early days of cloud migration, the idea of achieving cloud arbitrage – dynamically shifting workloads across clouds to leverage relative price and performance advantages – can seem pretty daunting. Let’s look at the typical road to cloud adoption, then I’ll explain the first thing you’ll need to do to effectively achieve cloud arbitrage.

Most enterprises are still in the first stage of cloud migration: moving to a hybrid cloud architecture by augmenting their on-premises datacenters with public cloud services. This can entail migrating some applications to the cloud in their entirety, distributing data collection activities to the cloud while keeping core processing in the datacenter, or pushing overflow workloads to the cloud during periods of peak activity, a practice called cloud bursting.

Somewhere along the way, most companies realize they don’t want to be locked in to one cloud, so they build and connect applications in a cloud-agnostic manner. In addition to eliminating the risk of lock-in, this means they can deploy applications into various public clouds to achieve a multi-cloud architecture, which offers superior fault tolerance in the event of cloud or network problems, and lets them pick the best cloud for each job.

From there, the logical leap to cloud arbitrage is pretty short. If you’re able to move workloads between clouds, wouldn’t it be great if you could take advantage of even small and short-lived differences in the clouds’ respective packaging, pricing and performance? Yes…yes it would!

Test Cloud Performance Now

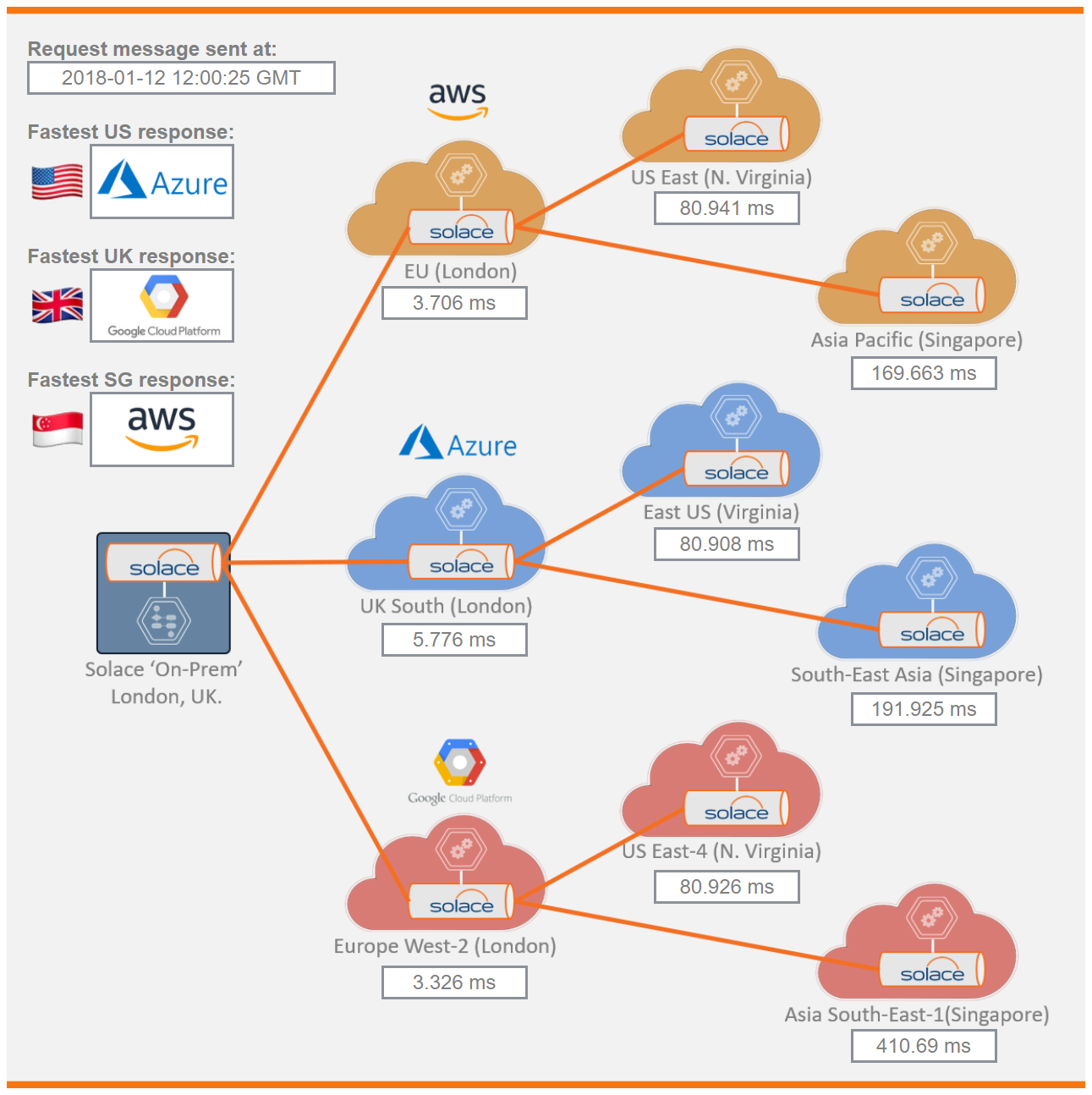

To effectively conduct cloud arbitrage, the first thing you need to do is continuously compare not just each cloud’s current pricing and claimed capabilities, but also its real-world performance. With that first-step requirement in mind, I thought it would be interesting to come up with a tool that measures the relative performance of the top three public clouds – AWS, Azure and Google – in real time.

The tool reports the ‘round trip time’ to the nearby UK node of each cloud, and to their far-off nodes in Asia and the US.

In the example shown here you can see that when I ran this particular test, for messaging between our datacenter and each cloud’s UK datacenter, Google Cloud was fastest. At the same time Azure was fastest for communications to the US, and AWS was fastest to Asia.
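As a rough illustration of the comparison described above, here is a minimal Python sketch of timing a round trip and picking the fastest cloud per region. It is not the code behind the demo: `measure_rtt_ms`, `fastest_per_region`, and the `probe` callable are hypothetical names, and the sample numbers are made up purely to mirror the ordering in the example run.

```python
import time
from statistics import median
from typing import Callable, Dict, Tuple


def measure_rtt_ms(probe: Callable[[], None], samples: int = 5) -> float:
    """Return the median time, in milliseconds, that probe() takes to complete.

    probe is a hypothetical callable that performs one full round trip,
    for example sending a message and blocking until the reply arrives.
    """
    timings = []
    for _ in range(samples):
        start = time.perf_counter()
        probe()
        timings.append((time.perf_counter() - start) * 1000.0)
    return median(timings)


def fastest_per_region(
    rtts: Dict[Tuple[str, str], float]
) -> Dict[str, Tuple[str, float]]:
    """Map each region to the (cloud, rtt_ms) pair with the lowest round trip time."""
    best: Dict[str, Tuple[str, float]] = {}
    for (cloud, region), rtt in rtts.items():
        if region not in best or rtt < best[region][1]:
            best[region] = (cloud, rtt)
    return best


# Made-up numbers for illustration only; they are not real measurements.
sample_rtts = {
    ("AWS", "UK"): 12.0, ("Azure", "UK"): 11.0, ("Google", "UK"): 9.0,
    ("AWS", "US"): 95.0, ("Azure", "US"): 88.0, ("Google", "US"): 91.0,
    ("AWS", "Asia"): 210.0, ("Azure", "Asia"): 230.0, ("Google", "Asia"): 225.0,
}

if __name__ == "__main__":
    for region, (cloud, rtt) in fastest_per_region(sample_rtts).items():
        print(f"{region}: {cloud} ({rtt:.1f} ms)")
```

Taking the median of several samples rather than a single reading is a design choice here, meant to dampen one-off network spikes.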

Note that shortly after that, the tool showed that Azure was fastest for Asia, after AWS slowed down considerably compared to the previous result. If you run the test multiple times you’ll see results change quite frequently. By logging that information over time you should be able to identify which cloud is best for your needs, and maybe even pick up on patterns that indicate which is best for specific workloads and interactions at different times of the day.
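To make the idea of logging results over time a little more concrete, the sketch below groups logged round trip times by region and hour of day and reports which cloud had the lowest median in each bucket. The record layout `(timestamp, cloud, region, rtt_ms)` and the function name `best_cloud_by_hour` are assumptions for illustration, and the log entries are invented.

```python
from collections import defaultdict
from datetime import datetime
from statistics import median
from typing import Dict, Iterable, List, Tuple

# Hypothetical log record: (timestamp, cloud, region, rtt_ms)
Record = Tuple[datetime, str, str, float]


def best_cloud_by_hour(log: Iterable[Record]) -> Dict[Tuple[str, int], str]:
    """Return the cloud with the lowest median RTT for each (region, hour of day)."""
    buckets: Dict[Tuple[str, int, str], List[float]] = defaultdict(list)
    for ts, cloud, region, rtt in log:
        buckets[(region, ts.hour, cloud)].append(rtt)

    best: Dict[Tuple[str, int], Tuple[str, float]] = {}
    for (region, hour, cloud), values in buckets.items():
        m = median(values)
        key = (region, hour)
        if key not in best or m < best[key][1]:
            best[key] = (cloud, m)
    return {key: cloud for key, (cloud, _) in best.items()}


# Invented log entries for illustration only; they are not real measurements.
sample_log: List[Record] = [
    (datetime(2024, 1, 1, 9, 0), "AWS", "Asia", 210.0),
    (datetime(2024, 1, 1, 9, 5), "Azure", "Asia", 230.0),
    (datetime(2024, 1, 1, 9, 10), "AWS", "Asia", 214.0),
    (datetime(2024, 1, 1, 14, 0), "AWS", "Asia", 320.0),
    (datetime(2024, 1, 1, 14, 5), "Azure", "Asia", 228.0),
    (datetime(2024, 1, 1, 14, 10), "AWS", "Asia", 300.0),
]

if __name__ == "__main__":
    for (region, hour), cloud in sorted(best_cloud_by_hour(sample_log).items()):
        print(f"{region} at {hour:02d}:00 -> {cloud}")
```

With enough logged history, the same grouping could be extended to day of week or to specific workload types.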

Ready to start down the road to cloud arbitrage?

I think this demo really highlights how keeping your options open with a multi-cloud strategy can help you achieve the best performance in all circumstances, including during cloud and connectivity failures. Once you take the first step of figuring out how to compare the clouds in real time, the road to cloud arbitrage is clear.

- To learn more about how Solace enables hybrid and multi-cloud, click here.

- To learn what’s going on behind the scenes of my demo, check out its page in Solace Labs.

- To evaluate Solace messaging, sign up for our ‘messaging as a service’ offering.


Jamil Ahmed is a distinguished engineer and director of solution engineering for UK & Ireland at Solace. Prior to joining Solace he held a number of engineering roles within investment banks such as Citi, Deutsche Bank, Nomura and Lehman Brothers. He is a middleware specialist with in-depth knowledge of many enterprise messaging products and architectures. His experience comes from work on a variety of deployed mission-critical use cases, in different capacities such as in-house service owner and vendor consultant, as well as different contexts such as operational support and strategic engineering.
