I’d like to go one layer down on a point that was introduced in the last post: why you can’t lump all non-software approaches into one hardware bucket. Software suffers in terms of performance in two fundamental ways: heavy CPU loading when tasks are complex, and kernel-to-application-space context switching when iteration counts are high (i.e., millions of anything per second). Let’s go through how hardware helps with each.

Issue 1: Heavy CPU Loading/CPU Offload

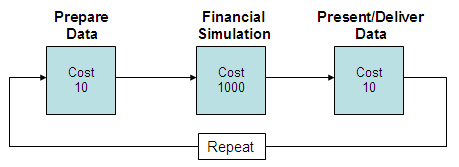

CPU-intensive operations are difficult for general-purpose software to execute on general-purpose CPUs. Examples might be Monte Carlo simulations, complex algorithms, or complex transformations of large data records. Think of it this way: let’s say that the CPU cost of running simulations in software is as follows:

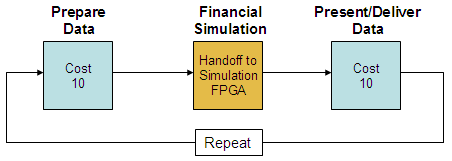

You can see that relative to the task of preparing and presenting data, the simulation is a CPU hog. If that task is handed off to an FPGA, two things happen:

- Special purpose hardware might complete the simulation 20-100 times faster than software (in terms of elapsed time). So you can allow more iterations to complete in the same time.

- Equally important, you have unburdened the general-purpose processor of 1000 CPU work units, or in this example, over 99% of its work per iteration. Obviously there is additional operating system CPU work in the formula, but the key is that the hardware offload very significantly lightens the load for the general-purpose CPU.
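The offload arithmetic can be sketched in a few lines. This is only an illustration: the 1000-unit simulation cost comes from the example above, while the prepare and present costs (3 units each) and the function name are assumptions chosen so the numbers are consistent with the "over 99%" figure.

```python
# Per-iteration CPU cost in abstract "work units".
# SIMULATE_UNITS is the figure used in the text; the other two are
# assumed values for illustration only.
PREPARE_UNITS = 3      # assumption
SIMULATE_UNITS = 1000  # from the example in the text
PRESENT_UNITS = 3      # assumption


def offloaded_fraction(prepare: float, simulate: float, present: float) -> float:
    """Fraction of per-iteration CPU work removed when `simulate` moves to hardware."""
    total = prepare + simulate + present
    return simulate / total


if __name__ == "__main__":
    fraction = offloaded_fraction(PREPARE_UNITS, SIMULATE_UNITS, PRESENT_UNITS)
    remaining = PREPARE_UNITS + PRESENT_UNITS
    print(f"CPU work offloaded per iteration: {fraction:.1%}")
    print(f"CPU work units left on the general-purpose CPU: {remaining}")
```

The point of the sketch is that the result is dominated by the simulation term: as long as the surrounding steps are cheap, almost all of the CPU work disappears from the general-purpose processor.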

So ignoring the challenges of how the FPGA simulation code gets written, this CPU offload use case is the most likely scenario for FPGA co-processing as suggested by Intel and AMD in their architectures. I suppose you could execute the prepare data and present data steps in hardware as well, but 99% of the performance savings come from focusing on the high CPU cost of the simulation.

Issue 2: Eliminate Context Switching

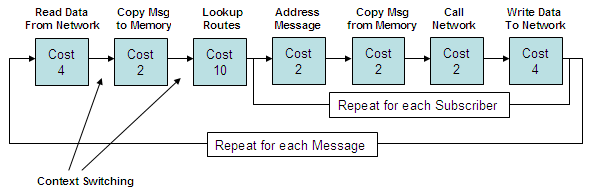

The second problem that FPGAs and similar technology can address comes about when you swap out ALL of the software for hardware. Take a look at the software pseudochart below for a simple view of how pub/sub messaging works:

Each one of the steps is very light on CPU cost, and it all works great if the number of messages is low. But when you try to execute lots of messages a second, a rate-limiting issue comes up. The cost of ‘context switching’ – the time it takes for the operating system to pass control from the network stack to an operating system function to the application space and back again – becomes very high. Each individual context switch is fast, but a single publish to multiple subscribers can cause hundreds or thousands of them, which leads to the mathematical principle:

fast * many iterations = slow
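To put rough numbers on that principle, here is a minimal sketch of the multiplication. Every figure in it (message rate, subscriber count, switches per delivery, microseconds per switch) is a hypothetical value picked for illustration, not a measurement of any real system.

```python
def switching_seconds_per_second(
    messages_per_sec: int,
    subscribers: int,
    switches_per_delivery: int,
    switch_cost_us: float,
) -> float:
    """CPU-seconds spent purely on context switches for each second of traffic."""
    switches = messages_per_sec * subscribers * switches_per_delivery
    return switches * switch_cost_us / 1_000_000


if __name__ == "__main__":
    # All inputs below are assumptions for illustration.
    low = switching_seconds_per_second(100, 10, 2, 2)
    high = switching_seconds_per_second(100_000, 10, 2, 2)
    print(f"low volume:  {low:.3f} CPU-seconds of switching per second")
    print(f"high volume: {high:.3f} CPU-seconds of switching per second")
```

Under these assumed inputs the per-switch cost never changes, yet at the higher rate the switching alone asks for more CPU time than a single core has available in a second.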

This is fundamental to software. No co-processing architecture can address it. If you were to try to apply CPU offloading from the prior example to this scenario, you might pick route lookups as the task you implement in hardware. But in the context of all the work performed, making that one step 100 times faster might only improve overall performance by 10% or less, because route lookups are a small share of the total work per message. All the other steps are the same, and context switching becomes the performance killer at high volume.
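This is the familiar Amdahl's-law calculation. The sketch below assumes, purely for illustration, that route lookups account for 10% of the total time per message; that share is not stated in the post and the function names are hypothetical.

```python
def overall_speedup(fraction_accelerated: float, step_speedup: float) -> float:
    """Amdahl's law: whole-task speedup when only one part gets faster."""
    return 1.0 / ((1.0 - fraction_accelerated) + fraction_accelerated / step_speedup)


def time_saved(fraction_accelerated: float, step_speedup: float) -> float:
    """Fraction of the original total time that the acceleration removes."""
    return fraction_accelerated * (1.0 - 1.0 / step_speedup)


if __name__ == "__main__":
    ROUTE_LOOKUP_SHARE = 0.10  # assumption: lookups are 10% of per-message time
    STEP_SPEEDUP = 100         # "100 times faster", as in the text
    print(f"overall speedup: {overall_speedup(ROUTE_LOOKUP_SHARE, STEP_SPEEDUP):.2f}x")
    print(f"total time saved: {time_saved(ROUTE_LOOKUP_SHARE, STEP_SPEEDUP):.1%}")
```

However large the speedup of that one step becomes, the time saved can never exceed the step's own share of the total, which is why accelerating a single light step does so little here.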

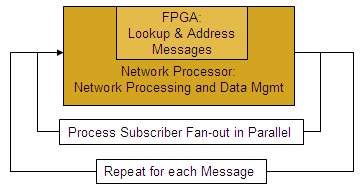

This is why an all-hardware messaging approach is so fundamentally different from software messaging.

With FPGAs doing the application processing and network processors performing networking, security, compression, etc., you have eliminated the operating system and coupled the application and network stack all in hardware. By doing so, you eliminate context switching. FPGAs and network processors can also more naturally parallelize operations that need to be iterative in software, providing further major performance gains. Of course, removing context switches and parallelizing also substantially lowers end-to-end latency.

These two concepts are most of the reason software often runs out of steam, or gets very erratic in terms of behavior and latency, at just a few hundred thousand messages/second, while hardware can scale all the way up to wire saturation, above 10 million messages per second per hardware blade. An all-hardware solution rewrites the rulebook for what is possible.

So in the context of the prior blog post, this hopefully clarifies why FPGA co-processing can help with CPU offloading, but it does little to resolve throughput issues relating to context switching.

Solace