To demonstrate the power of combining traditional API management concepts and technologies with the growing need for event-driven data distribution and interaction, we jointly deployed Apigee (Google) and Solace. We made sure to cover governance, access control, security, auditing, and metering.

We used a connected elevator scenario to demonstrate our solution. Our goal was to retain the user-facing experience of API management and add only minimal interactions to the process, so that API users and developers feel equally at home with RESTful and event-enabled APIs.

Disclaimer: The solution described here is not an officially supported Google or Solace product.

The Business Case for Federated Architecture

A solid foundation for enabling an IoT ecosystem can dramatically extend and increase the value of connected assets – like cars, elevators, and equipment. Building on mature technologies using proven techniques makes implementation faster, less expensive and less risky.

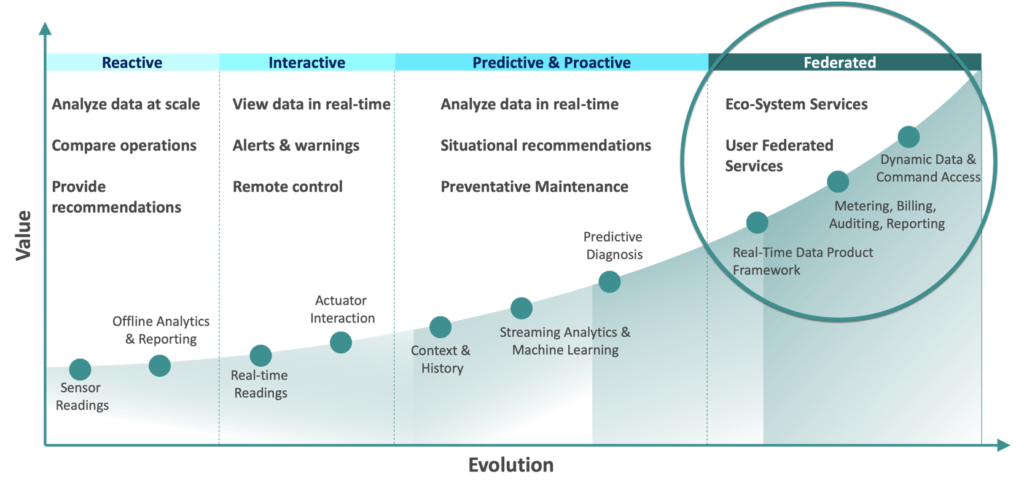

The following ‘value curve’ shows how the value of an IoT platform increases dramatically as it matures through four stages: reactive, interactive, predictive and proactive, and federated. The green dots represent the technological capabilities required at each stage, while the list represents the business-level goals that a company aims to achieve.

- Reactive: Companies typically start their IoT projects reactively – they analyze data, compare operational KPIs, and provide offline recommendations.

- Interactive: Data from connected assets is viewed in real-time dashboards, including alerts and warnings.

- Predictive & Proactive: Companies analyze data in real-time and derive actionable insight such as situational awareness and preventative maintenance recommendations.

- Federated: Federation of data and insights to a wider ecosystem of internal and external partners as well as end-users.

As you can see, the closer we move toward a federated model, the greater the value and business benefits we derive from our IoT data.

Consumption of Data: Managing External Access

We have already introduced a real-time, event-driven backbone by moving through the previous stages of maturity shown in the diagram, but opening up internal data streams and analytics outcomes for external consumption requires additional capabilities.

We aim to achieve this through traditional API management, which can securely manage access to data streams and events (often governed by contractual agreements), with the ability to audit and meter usage to enable commercial models.

If we want to enable third parties and users to interact with our platform or assets, security and access control mechanisms become even more challenging.

Let’s take a closer look at a use case that would benefit from event-enabling APIs.

Connected Elevators – Unlocking the Potential for Remote Monitoring and Analytics

Connecting building infrastructure and assets unlocks the potential for remote monitoring and analytics, paving the way for proactive and preventative maintenance. Connected elevators can send both operational and diagnostics data.

| Operational Data | Diagnostics Data |


This kind of information could be valuable to a number of different parties – large hotel chains, on-site staff, maintenance companies, elevator companies, insurance companies, or even advertising companies. It could also be useful to send commands to control elevators remotely, such as opening doors, stopping the elevator, or sending it to a certain floor.

So, in the case of connected elevators, there is a need for internal business units, partners, or customers/users to:

- Receive real time data from these assets (current speed, current floor)

- Send actions to the assets (open the door, go to a floor)

- Get notifications of certain events (breakdowns, floor arrivals)

- Access aggregated insight and data based on analysis and machine learning models (expected parts change, usage optimization, preventative maintenance)

However, there is only limited value in sharing the raw data streams. Therefore, we will introduce the concept of data products – the transformation of raw data streams to value-added, actionable insight streams and events to federate via APIs.

From Raw Data to Actionable Events: Using a Hybrid IoT Event Mesh and API Management

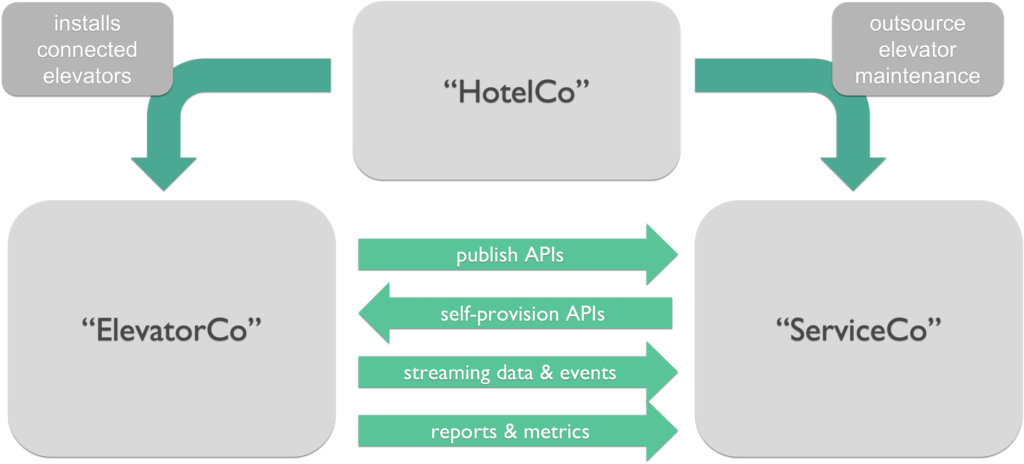

Combining Apigee and Solace can solve the needs discussed above in a scalable, automated, and secure way. We’ll now describe some of the highlights of the solution we have developed using the following scenario:

- A hotel group installs connected elevators in their properties and outsources the maintenance to one (or many) service companies.

- The elevator company provides the IoT platform, connecting millions of elevators globally, and creates digital ‘data products’ for API users to consume.

- The service company contracts with the elevator company to get access to their event-enabled data products.

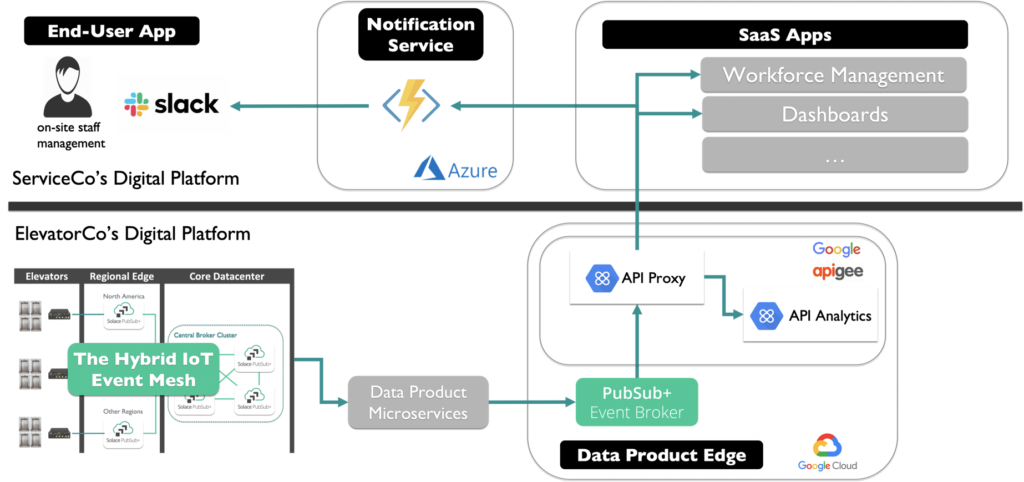

The Run-time View

The following picture shows the architecture at run-time:

The elevators are connected through an event mesh based on PubSub+ Event Brokers. This makes the raw telemetry data available at the core and enables the sending of control and command messages. Based on the raw data, ElevatorCo can now create data products for specific use cases and/or customers.

Apigee makes it possible to expose these data products as APIs so external consumers (ServiceCo) can subscribe to events from the provider (ElevatorCo) via its API Proxy. The analytics component gives the provider insights into the usage of the various APIs.

On the consumer side, ServiceCo provides the REST endpoints to which events and data are pushed, the MQTT clients that connect to the data product Edge, or both. In this project, we implemented an Azure function that is triggered every time an event arrives; it inspects the event and forwards it to a specific user or Slack channel. Other applications, such as a workforce management SaaS or other dashboards, could receive the same events.

Apigee handles the data flow outside of the provider’s system, collecting usage metrics and providing a developer portal that lets consumers see what data is available and register for the service.

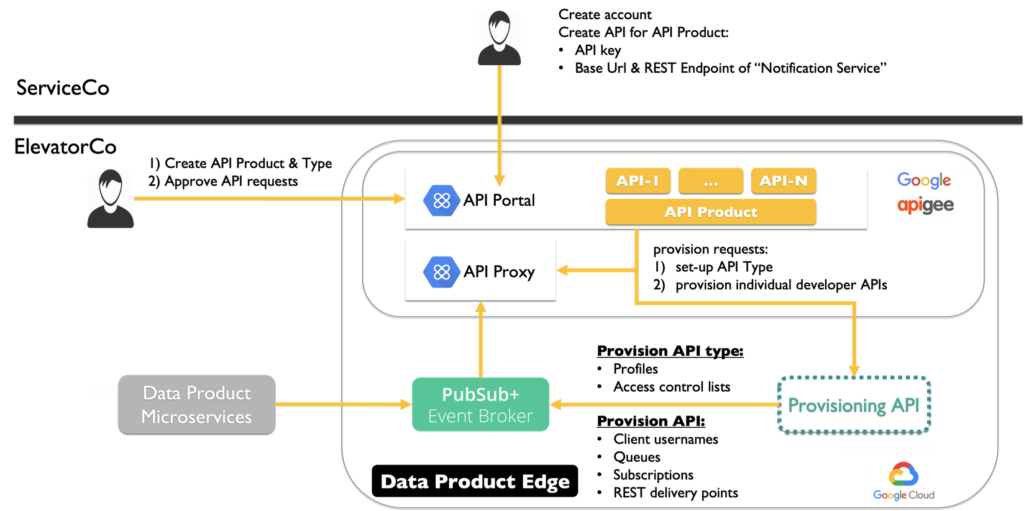

The API Provisioning View

The following picture shows the provisioning flow:

The provisioning is divided into two parts:

- Creating the data products

- Creating the API products in the API Portal and provisioning individual developer APIs

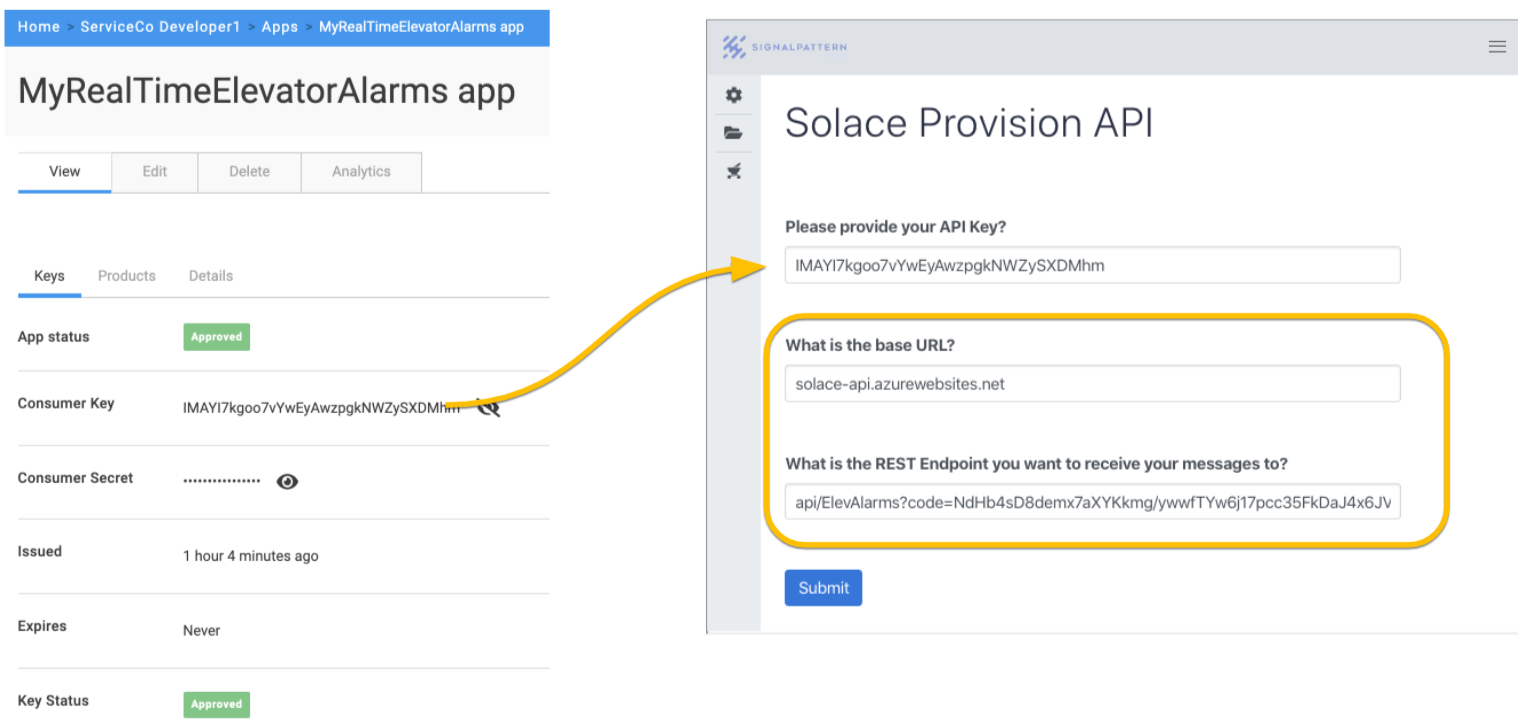

Now, let’s take a closer look at the flow when an individual developer requests an API. The developer signs up for an API, providing the REST endpoint of their ‘notification service’. This request is managed within the API Portal, which generates an API key and triggers the ‘provisioning API’. The provisioning API automatically configures the Solace broker(s) with the correct access profile/lists, the internal queues, subscriptions, and various other settings. These settings ensure the secure delivery of the selected data product streams via the broker and the proxy to the developer’s notification REST endpoint.

Creating the Data Products

As a first step, ElevatorCo developers need to create data products to share via the API.

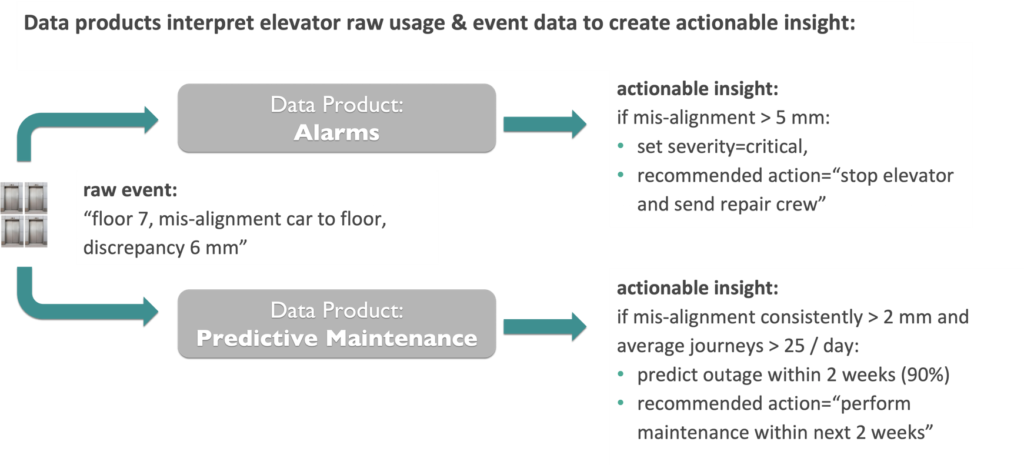

The main concept of a data product is based on the notion that the raw event and data streams from connected assets are typically not something the company wants to expose. Instead, data product microservices ingest the stream of raw telemetry and event data from the assets and turn it into what we call ‘actionable insight’.
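As a minimal sketch of this idea, the following Python function turns a raw telemetry reading into an alarm event only when something actionable happens. The field names (`elevator_id`, `door_cycle_ms`) and the threshold are hypothetical and are not taken from the solution described here.

```python
from typing import Optional

# Hypothetical threshold: a door cycle slower than this is treated as a fault.
DOOR_CYCLE_LIMIT_MS = 8000


def to_alarm(raw: dict) -> Optional[dict]:
    """Return an alarm event for a raw reading, or None if nothing is actionable."""
    if raw["door_cycle_ms"] <= DOOR_CYCLE_LIMIT_MS:
        return None  # normal reading: stays internal, nothing is published
    return {
        "type": "alarm",
        "elevator_id": raw["elevator_id"],
        "severity": "critical",
        "reason": f"door cycle took {raw['door_cycle_ms']} ms",
    }


# Two illustrative raw readings; only the second one exceeds the threshold.
readings = [
    {"elevator_id": "2389", "door_cycle_ms": 3100},
    {"elevator_id": "2469", "door_cycle_ms": 9500},
]
alarms = [a for a in map(to_alarm, readings) if a is not None]
print(alarms)
```

The point of the sketch is the filtering step: consumers see the derived alarm, never the raw reading it was computed from.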

The following picture shows two examples:

To facilitate the creation, distribution, and publishing of data products, we designed a hierarchical topic namespace that includes the semantics required to describe a data product and provides for flexible routing and distribution.

The raw telemetry data from the assets (elevators) contains the detailed categorization, including the resource type (which elevator type) and the resource ID (which elevator). The data product then adds specific business level information, for example:

- The organization that ‘owns’ the elevator

- The installation region

- The city / sub-region, and

- The actual site id, for example the hotel property
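The enrichment step described above could be sketched as follows. The `REGISTRY` lookup table, the `Site` type, and the record field names are assumptions made for illustration; a real platform would presumably read this information from an asset inventory.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Site:
    organization: str
    region: str
    city: str
    site_id: str


# Hypothetical asset registry: resource ID -> where the elevator is installed.
REGISTRY = {
    "9876": Site("marriott", "us", "nyc", "marquis"),
    "2389": Site("hilton", "fr", "paris", "opera"),
}


def enrich(raw: dict) -> dict:
    """Add business-level categorization to a raw telemetry record."""
    site = REGISTRY[raw["resource_id"]]
    return {
        **raw,  # keep the original resource type and resource ID
        "organization": site.organization,
        "region": site.region,
        "city": site.city,
        "site_id": site.site_id,
    }


enriched = enrich({"resource_type": "3100", "resource_id": "2389"})
print(enriched)
```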

We now use this information to create a topic string template that all data products will follow, for example:

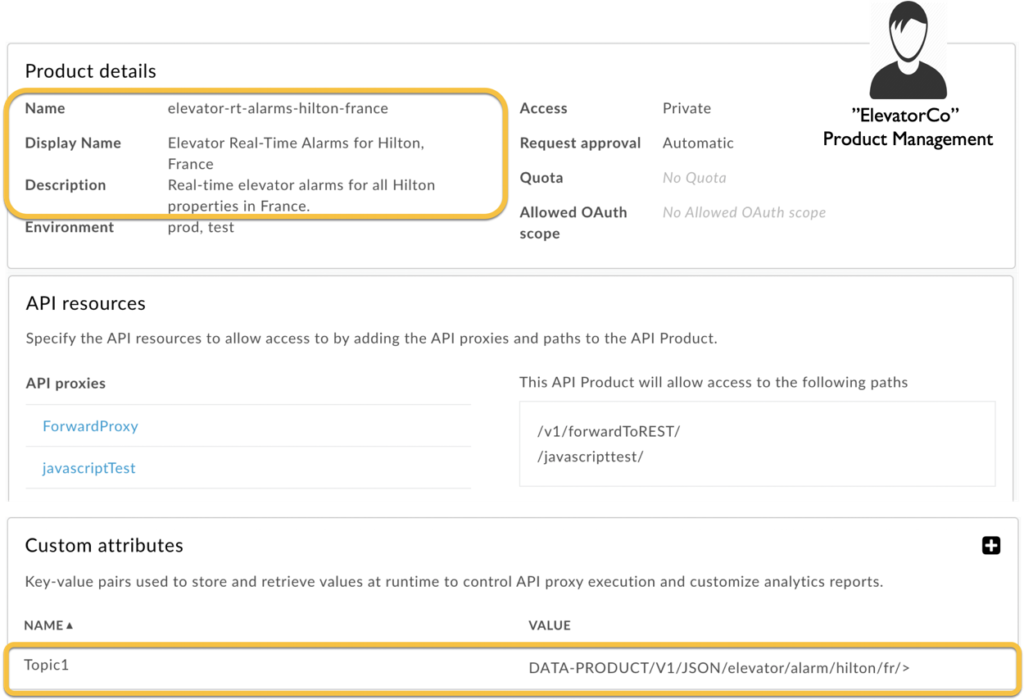

DATA-PRODUCT/{version}/{representation}/{asset-type}/{product-type}/{resource-categorization}/{resource-type}/{resource-id}

Following this template, and assuming that elevators are installed in the Marriott Marquis in NYC and all Hilton properties in France, the data product alarms would publish on:

DATA-PRODUCT/V1/JSON/elevator/alarm/marriott/us/nyc/marquis/3100/9876

DATA-PRODUCT/V1/JSON/elevator/alarm/hilton/fr/paris/opera/3100/2389

DATA-PRODUCT/V1/JSON/elevator/alarm/hilton/fr/paris/opera/3100/2469

DATA-PRODUCT/V1/JSON/elevator/alarm/hilton/fr/lyon/hotel/3103/2678

And so on …

Whereas the data product for predictive maintenance publishes on:

DATA-PRODUCT/V1/JSON/elevator/prediction/marriott/us/nyc/marquis/3100/9876

DATA-PRODUCT/V1/JSON/elevator/prediction/hilton/fr/paris/opera/3100/2389
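To make the template concrete, here is a small Python sketch that assembles a topic string from its parts. The function name `build_topic` and its parameter names are hypothetical; the level order follows the template, with the resource categorization expanded into organization, region, city, and site.

```python
def build_topic(product_type, organization, region, city, site_id,
                resource_type, resource_id,
                version="V1", representation="JSON", asset_type="elevator"):
    """Assemble a data product topic following the template above."""
    levels = ["DATA-PRODUCT", version, representation, asset_type, product_type,
              organization, region, city, site_id, resource_type, resource_id]
    for level in levels:
        # Reject empty levels, separators, and wildcard characters so that a
        # published topic is always concrete.
        if not level or any(ch in level for ch in "/*>"):
            raise ValueError(f"invalid topic level: {level!r}")
    return "/".join(levels)


alarm_topic = build_topic("alarm", "marriott", "us", "nyc", "marquis", "3100", "9876")
prediction_topic = build_topic("prediction", "hilton", "fr", "paris", "opera", "3100", "2389")
print(alarm_topic)
print(prediction_topic)
```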

Using this topic template or pattern, we can now define very specific data product streams for ServiceCo, for example:

- ServiceCo has a maintenance contract with HotelCo for elevators in the Hilton Opera in Paris, France. ElevatorCo grants ServiceCo access to the alarm and predictive maintenance data products:

DATA-PRODUCT/V1/JSON/elevator/alarm/hilton/fr/paris/opera/*/*

DATA-PRODUCT/V1/JSON/elevator/prediction/hilton/fr/paris/opera/*/*

- ServiceCo has a maintenance contract with HotelCo for elevators in all Hilton properties in France. ElevatorCo grants ServiceCo access to the alarm and predictive maintenance data products:

DATA-PRODUCT/V1/JSON/elevator/alarm/hilton/fr/>

DATA-PRODUCT/V1/JSON/elevator/prediction/hilton/fr/>
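The effect of these wildcard subscriptions can be illustrated with a simplified matcher. This is a sketch of the wildcard semantics as we understand them (`*` matches within a single level, a trailing `>` matches one or more remaining levels), not the broker's actual implementation; the function name `matches` is our own.

```python
def matches(subscription: str, topic: str) -> bool:
    """Simplified model of wildcard matching between a subscription and a topic."""
    sub_levels = subscription.split("/")
    topic_levels = topic.split("/")
    for i, sub in enumerate(sub_levels):
        if sub == ">" and i == len(sub_levels) - 1:
            # A trailing '>' must cover at least one remaining topic level.
            return len(topic_levels) > i
        if i >= len(topic_levels):
            return False  # subscription is longer than the topic
        if sub.endswith("*"):
            # '*' matches the rest of this one level (optionally after a prefix).
            if not topic_levels[i].startswith(sub[:-1]):
                return False
        elif sub != topic_levels[i]:
            return False
    return len(sub_levels) == len(topic_levels)


opera_only = "DATA-PRODUCT/V1/JSON/elevator/alarm/hilton/fr/paris/opera/*/*"
all_france = "DATA-PRODUCT/V1/JSON/elevator/alarm/hilton/fr/>"

paris = "DATA-PRODUCT/V1/JSON/elevator/alarm/hilton/fr/paris/opera/3100/2389"
lyon = "DATA-PRODUCT/V1/JSON/elevator/alarm/hilton/fr/lyon/hotel/3103/2678"
nyc = "DATA-PRODUCT/V1/JSON/elevator/alarm/marriott/us/nyc/marquis/3100/9876"

for topic in (paris, lyon, nyc):
    print(topic, matches(opera_only, topic), matches(all_france, topic))
```

With the first contract, only the Paris Opera elevator matches; with the second, both French sites match, and the Marriott elevator in New York matches neither.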

In the next section we will see how these topic strings (i.e. very specific data streams) are used when defining the specific API for a specific customer (ServiceCo).

Create and Publish API Products in the Developer Portal

As discussed above, the data products are defined by their topic strings and distributed to customers via wildcard subscriptions. The following screenshot shows how a product manager in ElevatorCo uses the portal to define and publish a data product.

API Provisioning by the Consumer/Developer

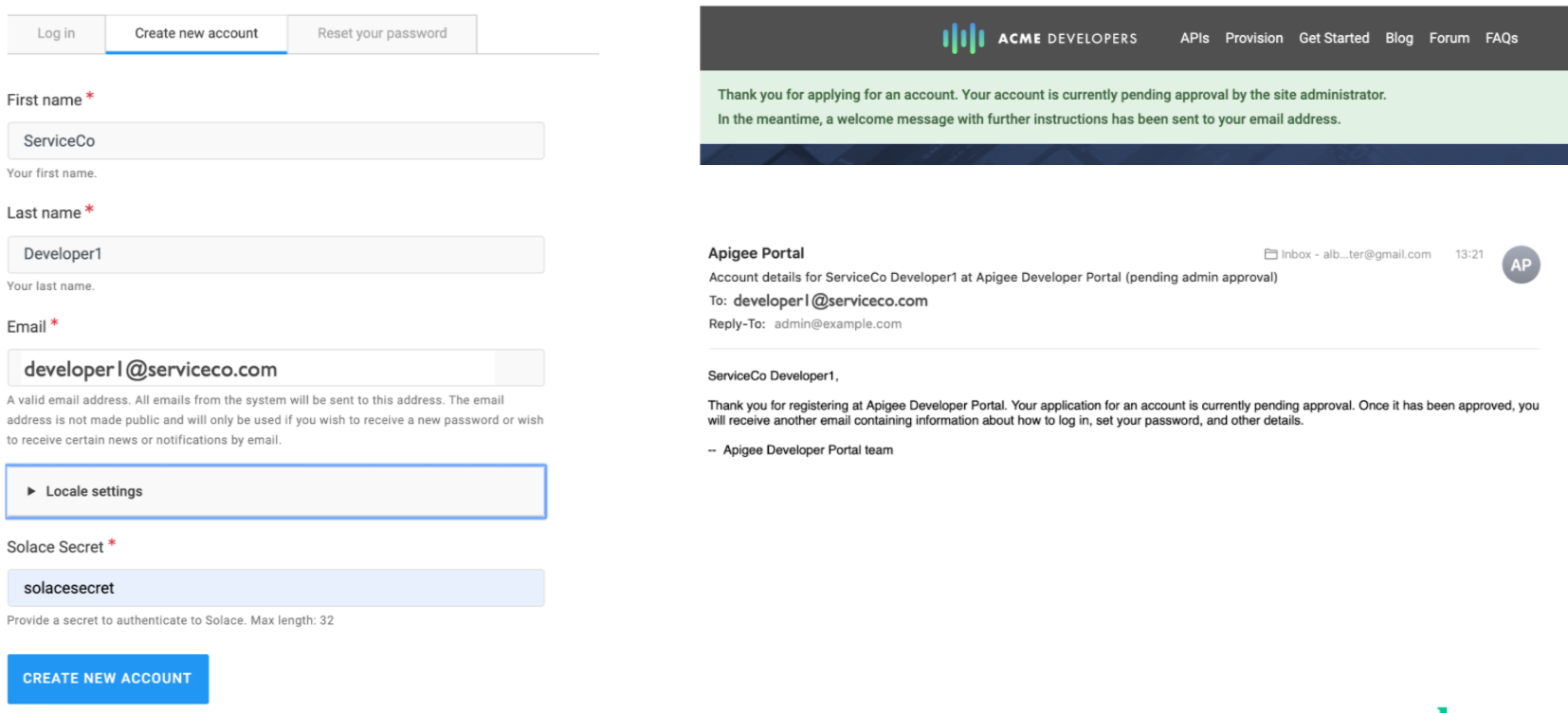

Let’s assume the developer has already created their ‘notification service’, i.e. the Azure function with its REST endpoint and the integration to Slack.

They then create an account on ElevatorCo’s Apigee developer portal and wait for approval. After being approved, they subscribe to their allowed API products and provide a base URL + REST endpoint to send the notifications to.

Behind the scenes, this subscription triggers the provisioning API, which sets up, among other things, the client username, queue, subscriptions, and REST delivery point in the Solace broker(s).
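To give a feel for what such a provisioning step has to produce, the sketch below derives a plain description of the broker objects from a developer's sign-up data. It deliberately does not call any broker management API; the function name, the naming scheme (`q-…`, `rdp-…`), the dictionary layout, and the example URL are all hypothetical.

```python
from typing import Dict, List
from urllib.parse import urlparse


def provisioning_plan(developer_id: str, topics: List[str], webhook_url: str) -> Dict:
    """Describe the broker objects needed to deliver the given topics to a webhook."""
    url = urlparse(webhook_url)
    if url.scheme != "https" or not url.hostname:
        raise ValueError("webhook must be an https URL")
    queue = f"q-{developer_id}"
    return {
        "client_username": developer_id,
        # The queue attracts events through the wildcard subscriptions.
        "queue": {"name": queue, "subscriptions": list(topics)},
        # The REST delivery point pushes queued events to the developer's endpoint.
        "rest_delivery_point": {
            "name": f"rdp-{developer_id}",
            "bound_queue": queue,
            "host": url.hostname,
            "port": url.port or 443,
            "path": url.path or "/",
        },
    }


plan = provisioning_plan(
    "serviceco",
    ["DATA-PRODUCT/V1/JSON/elevator/alarm/hilton/fr/>"],
    "https://serviceco.example.com/api/notify",
)
print(plan)
```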

Consuming the API Data Streams

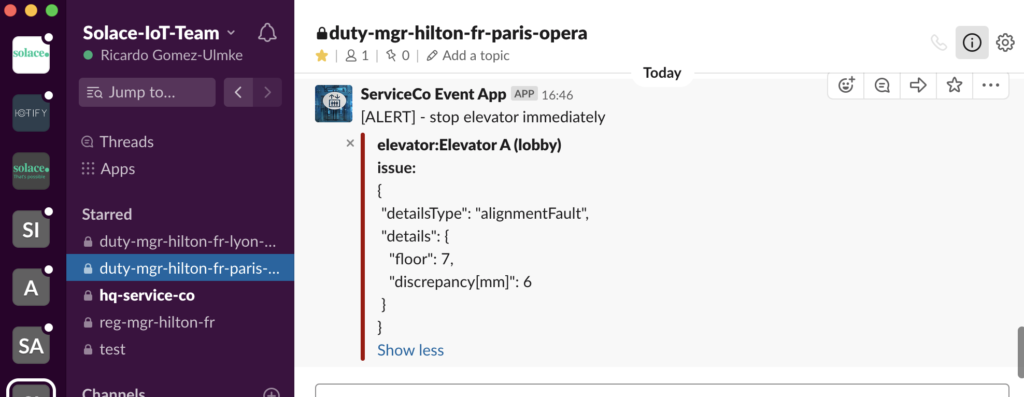

For any event sent by the data product, the PubSub+ Event Broker sends the data through the API Proxy to the notification service that the consumer defined in the provisioning step. This way the consumer can receive the data wherever they need it, in real time, through a REST interface (webhook). The following picture shows this integration with Slack, where a critical alarm is routed to the correct channel – in this case, the duty manager in the Hilton Opera, Paris, France.

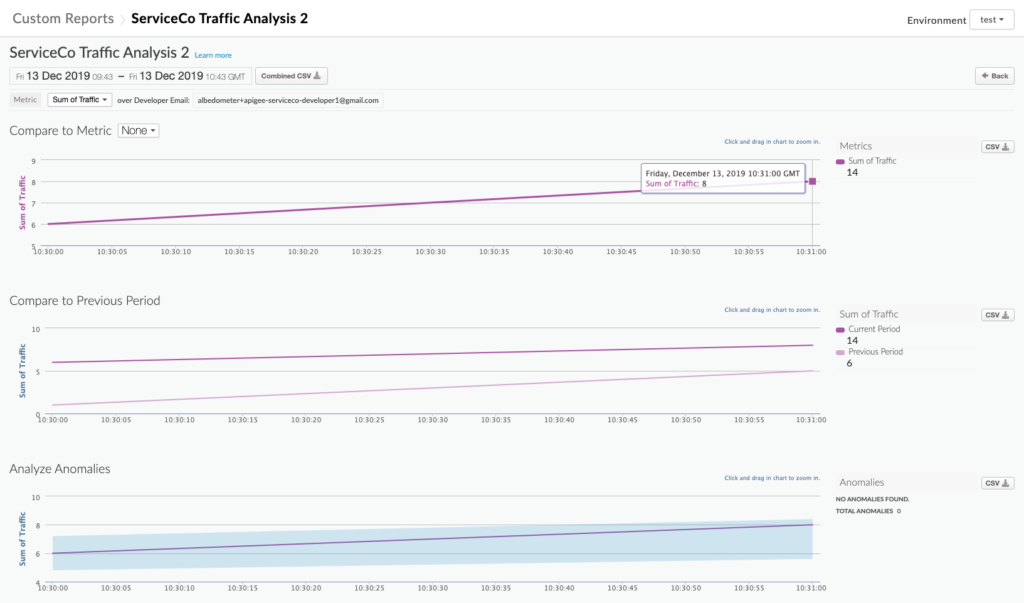
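The routing logic inside such a notification service might look like the following sketch. It assumes the topic is made available to the handler alongside the JSON body, and the channel names, the `CHANNELS` table, and the `reason` field are hypothetical. Posting to Slack itself is left out.

```python
import json

# Hypothetical mapping from (organization, city, site) to a Slack channel.
CHANNELS = {("hilton", "paris", "opera"): "#hilton-opera-duty-manager"}
DEFAULT_CHANNEL = "#elevator-alerts"


def route(topic: str, body: str) -> dict:
    """Pick a channel and build a message text for an incoming data product event."""
    levels = topic.split("/")
    # Topic levels: 4 = product type, 5 = organization, 7 = city,
    # 8 = site, 10 = resource ID.
    product_type, organization = levels[4], levels[5]
    city, site, resource_id = levels[7], levels[8], levels[10]
    event = json.loads(body)
    channel = CHANNELS.get((organization, city, site), DEFAULT_CHANNEL)
    text = (f"[{product_type}] elevator {resource_id} at "
            f"{organization}/{city}/{site}: {event.get('reason', 'no details')}")
    return {"channel": channel, "text": text}


message = route(
    "DATA-PRODUCT/V1/JSON/elevator/alarm/hilton/fr/paris/opera/3100/2389",
    '{"reason": "door blocked"}',
)
print(message)
```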

Analytics and Metrics

The provider (ElevatorCo) can see all the data that went through the proxy and can create reports. For example, the usage report will tell them what each consumer has subscribed to and on which API product, including the number of received alerts.

In addition, Apigee also allows the provider to create custom reports that would offer insights on the type of alerts, time, or any other dimension in the data product.
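Conceptually, such reports are aggregations over the events that passed through the proxy. The sketch below illustrates the idea on a hypothetical in-memory delivery log; it does not use Apigee's analytics API, and the record layout is an assumption.

```python
from collections import Counter

# Hypothetical delivery log entries: (consumer, API product, alert severity).
deliveries = [
    ("serviceco", "alarm", "critical"),
    ("serviceco", "alarm", "warning"),
    ("serviceco", "prediction", "info"),
    ("otherco", "alarm", "critical"),
]

# Usage report: number of delivered events per consumer and API product.
usage = Counter((consumer, product) for consumer, product, _ in deliveries)

# Custom report: the same events broken down by another dimension (severity).
by_severity = Counter(severity for _, _, severity in deliveries)

for (consumer, product), count in sorted(usage.items()):
    print(f"{consumer:10} {product:12} {count}")
print(dict(by_severity))
```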

Conclusion

We hope this article has shown and explained the concept of event-enabling APIs, and how combining a real-time, highly scalable event mesh built on PubSub+ Event Brokers with Apigee’s API management platform can power these concepts.

The approach we have taken here was guided by the notion that we wanted to create a seamless experience, based entirely on the well understood and accepted RESTful API experience, without introducing additional risk and cost. We have created a working solution based on a realistic use case. Of course, there are many other combinations and use cases out there. We are confident that the flexibility of both platforms can address these as well.

For a more detailed view of the solution please look at the recording that walks the audience through the exact steps:

If you are interested in learning more about this, please contact the Solace or Apigee sales team.


Ricardo Gomez-Ulmke

Florian Geiger