Solace has been serving the financial industry with its industry-leading event broker, PubSub+, for almost two decades, and is known for event-enabling enterprises by liberating their data through real-time streaming. Kx Systems has been a major player in the financial services space for years too, and its kdb+ time series database is known for providing users with efficient and flexible tools for ultra-high-speed processing of real-time, streaming and historical data. After establishing their footprint in the financial industry, both companies have expanded into other industries facing similar challenges such as IoT, retail, and aviation.

Why use kdb+ and PubSub+ together?

kdb+ has been optimized to ingest, analyze, and store massive amounts of structured data. Its columnar design and in-memory capabilities mean it offers greater speed and efficiency than typical relational databases, and its native support for time-series operations accelerates the querying, aggregation and analysis of structured data.

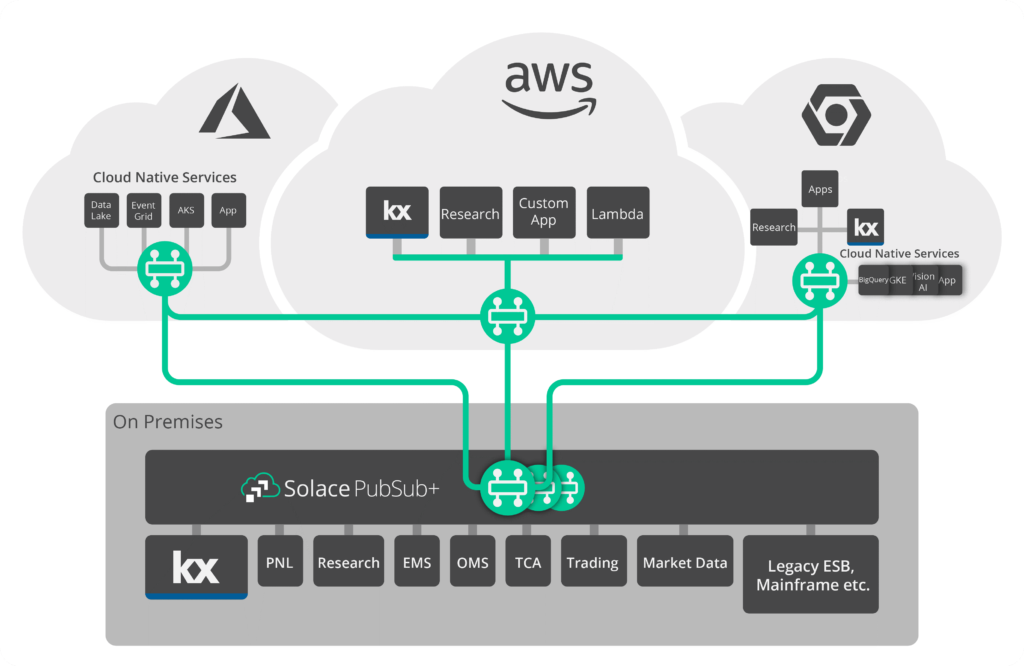

Solace PubSub+ message brokers efficiently move information between all kinds of applications, users and devices, anywhere in the world, over all kinds of networks. With PubSub+, you can take advantage of rich hierarchical topics, open APIs and protocols, event mesh, and Replay.

With so many mutual customers, Solace and Kx decided it would be helpful to bridge the gap between PubSub+ and kdb+. With that in mind, we teamed up with Kx’s head of fusion interfaces, Andrew Wilson, and lead developer Simon Shanks to develop a PubSub+ API for kdb+ that would benefit those using the two tools today and make it easier for new users to adopt either. The API is publicly available on Kx’s GitHub.

Here are just a few of the ways kdb+ developers can leverage PubSub+:

- Consume data from a central distribution bus – many financial companies capture market data from external vendors and distribute it to their applications internally via PubSub+. kdb+ developers can now natively consume that data and store it for later use.

- Distribute data from kdb+ to other applications – Instead of delivering data individually to each client/application, kdb+ developers can leverage the pub/sub messaging pattern to publish data once and have it consumed multiple times.

- Migrate to the cloud – Have you been thinking about moving your data to the cloud? With PubSub+’s powerful Event Mesh, you can easily give your cloud-native applications access to high-volume data stored in an on-prem kdb+ instance.

- Easier integration – PubSub+ supports open APIs and protocols that make it a perfect option for integrating applications. Your applications can now use a variety of APIs to publish data to and subscribe to data from kdb+.

- Replay – Tired of losing critical data such as order flows when your applications crash? With PubSub+’s Replay feature, kdb+ developers can make their q processes crash-proof.

- Data Notifications – It’s always a challenge to ensure that all consumers are aware of data issues, whether it is an exchange outage leading to data gaps or a delay in an ETL process. With PubSub+, kdb+ developers can automate the publication of notifications related to their databases and broadcast them to interested subscribers.

How do I install kdb+ and PubSub+?

You can learn how to install kdb+ here. As for PubSub+, you have multiple (free) ways to set up an instance:

- Install a Docker container running locally or in the cloud

- Set up a free 30-day trial instance on Solace Cloud (easiest way to get started)

Please post on Solace Community if you have any issues setting up an instance.

Downloading and Installing the PubSub+/kdb+ API

You can find the API on GitHub. It’s freely available and supported by Kx as part of their Fusion Interfaces. The README.md file contains all the information you need to install the API.

In the initial release, the API won’t support all of PubSub+’s features, but we’ll add functionality over time. For example, in the initial release, you will not be able to initiate replay from kdb+.

Currently, here is what you can do with the API:

- Connect to a PubSub+ instance

- Create and destroy endpoints

- Perform topic to queue mapping with wildcard support

- Publish to topics and queues

- Subscribe to topics and bind to queues

- Set up direct and guaranteed messaging

- Set up request/reply messaging pattern

Examples of what you can do with the PubSub+/kdb+ API

The API comes with some very handy examples that show you how to use it. You can find all the examples in the examples directory and the related documentation in examples/README.md.

Note that to run the scripts in the examples directory, you will need to provide your PubSub+ connection details via command line parameters. I have a local instance of PubSub+ running with the default VPN, so I only pass -host and -vpn. You can also use the -user and -pass parameters to provide your credentials.
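Since every example takes the same connection parameters, it can help to build the command lines in one place. The following is a minimal Python sketch, not part of the API: the helper name `build_example_command` is hypothetical, and it only assembles the `-host`, `-vpn`, `-user` and `-pass` flags mentioned above plus whatever script-specific options you pass in.

```python
import shlex


def build_example_command(script, host="localhost:55555", vpn="default",
                          user=None, password=None, **script_args):
    """Return the argument list for running one of the example scripts.

    Hypothetical helper: it only assembles the command line and does not
    talk to q or to the broker.
    """
    argv = ["q", script]
    # Script-specific options first (for example name="kdb_queue").
    for flag, value in script_args.items():
        argv += [f"-{flag}", str(value)]
    # Connection details shared by all of the examples.
    argv += ["-host", host, "-vpn", vpn]
    if user is not None:
        argv += ["-user", user]
    if password is not None:
        argv += ["-pass", password]
    return argv


# Print a command line that can be pasted into a shell.
cmd = build_example_command("sol_endpoint_create.q", name="kdb_queue")
print(shlex.join(cmd))
```

If q is installed and the examples directory is your working directory, the returned list could be handed to `subprocess.run`; that step is left out here so the sketch runs anywhere.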

Check PubSub+ Capabilities

You can check the capabilities of your PubSub+ instance. For example, to find out whether my user is allowed to manage endpoints, I can check SESSION_CAPABILITY_ENDPOINT_MANAGEMENT by running this simple script:

q sol_capabilities.q -opt SESSION_CAPABILITY_ENDPOINT_MANAGEMENT -host localhost:55555 -vpn default

Here is what the output would look like:

    ### Registering session event callback
    ### Registering flow event callback
    ### Initializing session
    [29226] Solace session event 0: Session up
    ### Getting capability : SESSION_CAPABILITY_ENDPOINT_MANAGEMENT
    1b
    ### Destroying session

As you can see, SESSION_CAPABILITY_ENDPOINT_MANAGEMENT is set to 1b, so we are allowed to manage our endpoints.

Create a queue

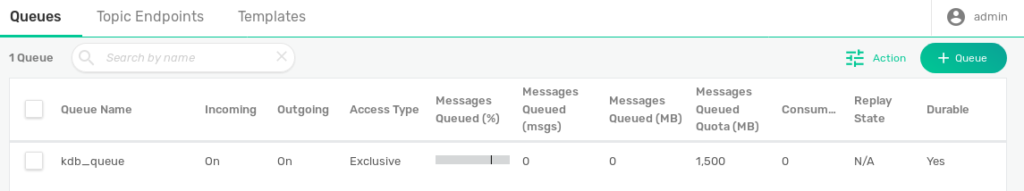

Once you confirm that you can manage endpoints, you can create a queue: q sol_endpoint_create.q -name "kdb_queue" -host localhost:55555 -vpn default

…and see in the Solace GUI that your queue kdb_queue was created:

Map a topic to our queue

You can map topics to your queue from Solace’s UI but you can also do it from your kdb+ instance through the API.

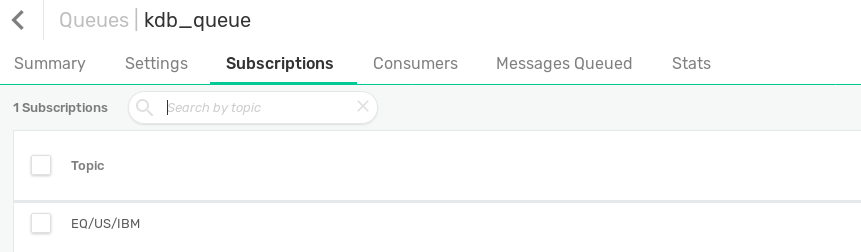

q sol_topic_to_queue_mapping.q -queue "kdb_queue" -topic "EQ/US/IBM" -host localhost:55555 -vpn default

You can see from Solace UI, a topic subscription (EQ/US/IBM) has been added to our queue:
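The mapping above uses a literal topic, but the API also supports wildcards in topic-to-queue mappings. As a rough illustration of how such subscriptions select topics, here is a small Python sketch based on my simplified reading of Solace's wildcard syntax: `*` at the end of a level matches the rest of that level, and `>` as the last level matches one or more remaining levels. The function name `topic_matches` is hypothetical, and the broker's real matching has more rules than this sketch covers (empty levels, for instance, are not considered).

```python
def topic_matches(subscription: str, topic: str) -> bool:
    """Return True if `topic` is selected by `subscription`.

    Simplified model of Solace-style wildcards, for illustration only:
      - 'PREFIX*' (or '*' alone) matches within a single level
      - '>' as the final level matches one or more remaining levels
    """
    sub_levels = subscription.split("/")
    topic_levels = topic.split("/")

    for i, pattern in enumerate(sub_levels):
        is_last = i == len(sub_levels) - 1
        if pattern == ">" and is_last:
            # '>' needs at least one topic level left to consume.
            return len(topic_levels) > i
        if i >= len(topic_levels):
            return False  # subscription is longer than the topic
        level = topic_levels[i]
        if pattern.endswith("*"):
            # Prefix match inside this level only.
            if not level.startswith(pattern[:-1]):
                return False
        elif pattern != level:
            return False

    # Without a trailing '>', the number of levels must match exactly.
    return len(sub_levels) == len(topic_levels)


for sub in ("EQ/US/IBM", "EQ/US/*", "EQ/US/I*", "EQ/>", "EQ/UK/>"):
    print(sub, topic_matches(sub, "EQ/US/IBM"))
```

Under these assumptions, a queue mapped to EQ/US/* would collect messages for every US equity symbol, while EQ/> would collect everything published under EQ.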

Deleting a queue

You can also delete the queue we just created by running this example:

q sol_endpoint_destroy.q -name "kdb_queue" -host localhost:55555 -vpn default

I am going to keep the queue for the rest of the examples.

Publish a guaranteed message to a topic

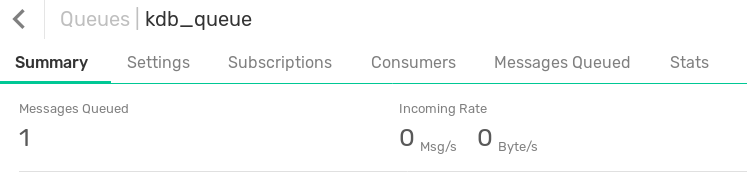

In this example, you will publish a guaranteed message (as opposed to a direct message) with the payload hello world to a topic, EQ/US/IBM and because our previously created queue, kdb_queue has this topic mapped to it, we should see your message enqueued.

q sol_pub_persist.q -dest "EQ/US/IBM" -dtype "topic" -data "hello world" -host localhost:55555 -vpn default

You can then see 1 message queued in your queue.

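Note that you can also publish directly to a queue, but that is a point-to-point messaging pattern: only that one queue receives the message. Publishing to a topic facilitates the pub/sub messaging pattern, because every queue or subscriber with a matching subscription gets its own copy.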
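To make the difference between the two patterns concrete, here is a toy in-memory model in Python. It is only a sketch of the routing idea, not of how PubSub+ is implemented: the class `ToyBroker` and the second queue, audit_queue, are hypothetical, and topics are matched by exact string only.

```python
from collections import defaultdict, deque


class ToyBroker:
    """Tiny in-memory stand-in for a broker, for illustration only."""

    def __init__(self):
        self.queues = {}                       # queue name -> queued payloads
        self.subscriptions = defaultdict(set)  # topic -> queues mapped to it

    def create_queue(self, name):
        self.queues.setdefault(name, deque())

    def map_topic(self, queue, topic):
        self.subscriptions[topic].add(queue)

    def publish_to_topic(self, topic, payload):
        """Pub/sub: every queue mapped to the topic gets a copy."""
        matched = sorted(self.subscriptions.get(topic, ()))
        for name in matched:
            self.queues[name].append(payload)
        return matched

    def publish_to_queue(self, queue, payload):
        """Point-to-point: only the named queue gets the message."""
        self.queues[queue].append(payload)
        return [queue]


broker = ToyBroker()
for name in ("kdb_queue", "audit_queue"):  # audit_queue is made up
    broker.create_queue(name)
    broker.map_topic(name, "EQ/US/IBM")

# One publish to the topic lands in both queues.
print(broker.publish_to_topic("EQ/US/IBM", "hello world"))
# A publish to a queue lands in that queue alone.
print(broker.publish_to_queue("kdb_queue", "only for kdb_queue"))
print({name: list(q) for name, q in broker.queues.items()})
```

The publisher in the topic case does not need to know how many consumers exist, which is what lets you add a new consumer later by mapping another queue to the same topic.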

Consuming messages from a queue

Now that your message has been enqueued, you can consume directly from the queue:

q sol_sub_persist.q -dest "kdb_queue" -host localhost:55555 -vpn default

Here is the output:

    ### Registering session event callback
    ### Registering flow event callback
    ### Initializing session
    [29968] Solace session event 0: Session up
    ### Registering queue message callback
    [29968] Solace flowEventCallback() called - Flow up (destination type: 1 name: kdb_queue)
    ### Session event
    eventType   | 0i
    responseCode| 0i
    eventInfo   | "host 'localhost:55555', hostname 'localhost:55555' IP 127.0.0.1:55555 (host 1 of 1) (host connection attempt 1 of 1) (total connection attempt 1 of 1)"
    ### Flow event
    eventType   | 0i
    responseCode| 200i
    eventInfo   | "OK"
    destType    | 1i
    destName    | "kdb_queue"
    ### Message received
    payload      | "hello world"
    dest         | `kdb_queue
    destType     | 1i
    destName     | "kdb_queue"
    replyType    | -1i
    replyName    | ""
    correlationId| ""
    msgId        | 1

As you can see, the message was received with the payload “hello world”.
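If you ever want to post-process this console output from another tool, the `key | value` lines are easy to pick apart. The sketch below is a hypothetical Python helper (`parse_q_dict` is my own name, not part of the API) that assumes each field is printed on its own line with a `|` separator, as in the "Message received" section of the output above; values are kept as the raw printed strings.

```python
def parse_q_dict(lines):
    """Turn printed 'key | value' lines into a Python dict.

    Lines without a '|' (such as '### Message received') are skipped.
    Values are left as printed, so strings keep their quotes.
    """
    result = {}
    for line in lines:
        # Split on the first '|' only, in case a value contains one.
        key, sep, value = line.partition("|")
        if not sep:
            continue
        result[key.strip()] = value.strip()
    return result


sample = [
    "### Message received",
    'payload      | "hello world"',
    'destName     | "kdb_queue"',
    "msgId        | 1",
]
message = parse_q_dict(sample)
print(message["payload"], message["msgId"])
```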

Conclusion

I highly recommend checking out the other examples in the examples directory, which show other PubSub+ features you can use through this API. In the next few weeks, we will be publishing a series of blog posts demonstrating how you can enhance your existing kdb+ stack with PubSub+. Here are some of the use cases these blog posts will cover:

- Subscribing and publishing events via PubSub+ from a q process

- Replicating kdb+ instances in real-time with PubSub+

- Architecting decoupled q processes with PubSub+

- Controlling data streams to your clients on a per symbol basis via ACL profiles

Let us know what you think about the API and if you have any suggestions for future improvements. Hope you like it!

Himanshu Gupta
