Susinda Perera is an experienced IT professional and a certified Solace solutions consultant.

Intercepting REST request and response data helps an organization to gain insights into the communication patterns and behaviors between microservices or applications, including the frequency and types of requests, the performance and responsiveness of services, and any potential issues or errors. This information can be used to improve the reliability and performance of the overall system and to make informed decisions for future operations.

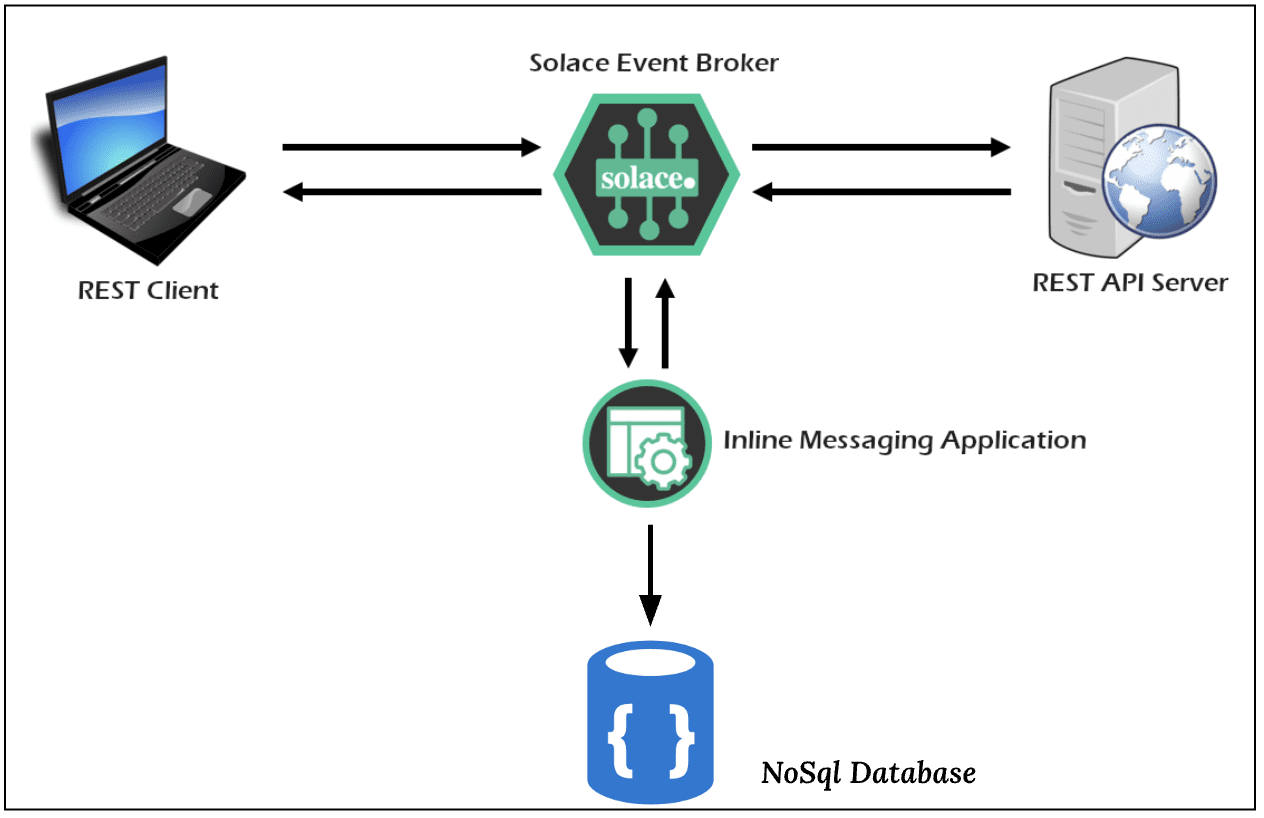

In this article I’ll explain how you can implement an intercepting and logging solution for REST messaging using event-driven architecture and the publish/subscribe message exchange pattern. The approach can be extended to smoothly migrate from REST to event-driven architecture.

The Interception Process

The intercepting process should add minimal overhead to the communication and be performed in a non-intrusive manner. Additionally, the communication should continue to function even if the interception is not performed or fails.
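The requirement above, that the main communication path keeps working even if the interception fails, can be illustrated with a minimal in-memory sketch. `FanOutSketch` and its methods are hypothetical names used only for illustration; this is not a Solace API, and a real broker performs the fan-out itself and asynchronously.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

/** Hypothetical in-memory stand-in for a broker; not a Solace API. */
final class FanOutSketch {
    private final Consumer<String> backend;                      // the real consumer
    private final List<Consumer<String>> taps = new ArrayList<>(); // interceptors

    FanOutSketch(Consumer<String> backend) {
        this.backend = backend;
    }

    void addTap(Consumer<String> tap) {
        taps.add(tap);
    }

    void publish(String message) {
        // The main path is always served first and does not depend on the taps.
        backend.accept(message);
        for (Consumer<String> tap : taps) {
            try {
                tap.accept(message);
            } catch (RuntimeException e) {
                // A failing interceptor is reported and otherwise ignored.
                System.err.println("tap failed, ignoring: " + e.getMessage());
            }
        }
    }

    public static void main(String[] args) {
        List<String> delivered = new ArrayList<>();
        FanOutSketch broker = new FanOutSketch(delivered::add);
        broker.addTap(m -> { throw new IllegalStateException("logger is down"); });
        broker.publish("POST /todos/add");
        System.out.println(delivered); // the backend still received the message
    }
}
```

The point of the sketch is only the ordering and the isolation: the backend is never blocked by a broken logger.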

Building a solution for intercepting REST requests and responses from scratch can be complex and time-consuming, and it carries risks such as performance degradation and security vulnerabilities. I therefore decided to use a well-established, proven solution that provides reliability, security, and performance.

Why Solace PubSub+ Platform

Solace PubSub+ Platform is an EDA/messaging platform with advanced features like flexible routing, multi-protocol support, scalability, strong security, high speed, and low latency. The main reason I selected it, however, is how easy it makes it to transition from traditional resource-based architecture to event-driven architecture.

Implementing event-driven architecture can be a complex process, involving multiple steps and significant investments in technology, personnel, and training, and it cannot be done overnight. Solace offers a REST Gateway that overcomes many of these challenges by acting as a bridge between REST clients and Solace, allowing REST clients to publish messages to and receive messages from Solace event brokers using standard HTTP methods (e.g. POST, GET, etc.). The Solace REST Gateway provides a simple and efficient way to integrate RESTful web services with Solace’s messaging infrastructure, making it easier for organizations to achieve their EDA migration goals smoothly and effectively.

Prerequisite

I recommend you review the official Solace documentation regarding REST messaging concepts and the REST Gateway before proceeding, as familiarizing yourself with these concepts will enhance your understanding and facilitate a smoother experience.

If you prefer hands-on learning, you can follow this Codelab by Aaron Lee, one of Solace’s developer advocates. It focuses on how to configure Solace as a proxy using a REST delivery point (RDP) and publish-subscribe pattern for easy access to multiple copies of data. This article does not repeat these steps and instead focuses on demonstrating how to build a logging application.

Functional and Design Requirements

The logging process for intercepting REST request and response data should:

- Have minimal impact on the communication performance

- Store logs in a way that is robust and secure

- Make it easy to access logs for querying and reporting

- Be scalable so it can handle increasing amounts of log data

In this solution I have chosen Azure Cosmos DB as the datastore since it is a secure and highly scalable document database. For querying purposes, at least the following should be logged:

- Time of Request

- Direction of Request (Request or Response)

- API context and/or query parameters

- Payload

- Info about the requester (IP, client, etc.)
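As a rough sketch, the fields listed above could be modeled as follows. The names `LogEntry`, `Direction`, and `forTarget` are hypothetical and chosen for illustration; this is not the MessageLog class from the repository.

```java
import java.time.Instant;

/** Hypothetical direction flag for a logged message. */
enum Direction { REQUEST, RESPONSE }

/** Hypothetical log record covering the fields listed above (requires Java 16+). */
record LogEntry(Instant time,
                Direction direction,
                String apiContext,
                String queryParameters,
                String payload,
                String requesterInfo) {

    /** Splits a request target such as "/todos/add?limit=1" into context and query. */
    static LogEntry forTarget(Instant time, Direction direction, String target,
                              String payload, String requesterInfo) {
        int q = target.indexOf('?');
        String context = q < 0 ? target : target.substring(0, q);
        String query = q < 0 ? "" : target.substring(q + 1);
        return new LogEntry(time, direction, context, query, payload, requesterInfo);
    }
}
```

Keeping the API context and the query string as separate fields makes it easier to filter logs by endpoint later.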

Implementation

Solace provides many APIs for connecting to and interacting with its event brokers, along with sample code for many different scenarios. For this implementation I decided to use Spring Boot with auto-configuration because Azure Cosmos DB also provides a Spring Boot starter, which makes it easy to implement both in the same framework.

(If you’re not familiar with Spring Boot, it is a framework for building Java applications that makes it easy to create stand-alone, easily deployable Spring applications. Spring Boot auto-configuration is a feature that automatically configures your application based on the dependencies you have added to your classpath. It makes it easier to get started and eliminates the need for manual configuration, thereby reducing the time required to develop and launch an application.)

The Code

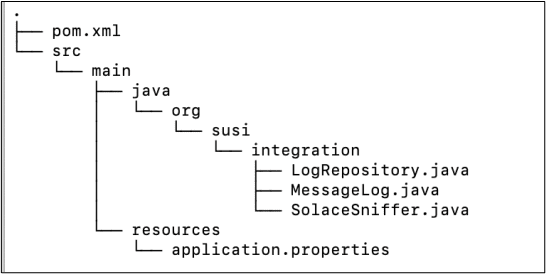

You can find the code in my GitHub repository. Thanks to the Spring Boot, Solace, and Azure libraries, everything is implemented in less than 200 lines of code in just 3 class files. The code isn’t complex so I’m not going into detailed explanations, but feel free to comment or raise an issue in the repo if anything isn’t clear.

MessageLog.java is the model (entity) class, which defines the data model that will be saved in the database.

The LogRepository.java is a Java interface that defines a reactive repository for a MessageLog entity. It extends the ReactiveCosmosRepository interface and specifies the type of the entity (MessageLog) and its ID type as String.

The entry point of the application is SolaceSniffer.java, where the SpringApplication.run method bootstraps the application with SolaceSniffer.class as its configuration class.

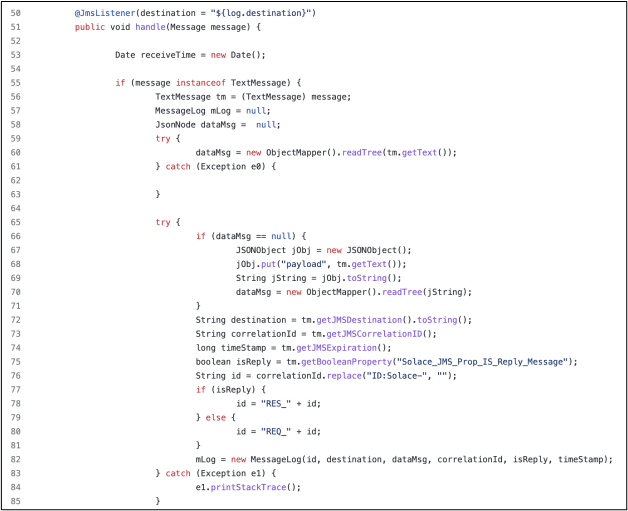

- The handle method, annotated with @JmsListener, is a JMS message listener that listens for messages on the log.destination specified in the application’s configuration.

- The listener handles the messages by checking if they are TextMessage objects, and if they are, they are processed and converted into a MessageLog object. This object is then persisted in the Cosmos database using the logRepository.save method.

- Note that if the payload is JSON it will be saved as is, but if it is XML or text then the content of the message will be saved in an element called “payload”.
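The payload rule in the last bullet can be sketched in plain Java as below. This is an illustration under stated assumptions, not the code from the repository: `PayloadWrapper` is a hypothetical name, and the JSON check is a crude heuristic (looks like an object if it starts with `{` and ends with `}`), where a real implementation would more likely parse the text with a JSON library.

```java
/** Hypothetical helper: keeps JSON bodies as is and wraps anything else in a "payload" element. */
final class PayloadWrapper {

    static String toDocument(String body) {
        String trimmed = body.trim();
        // Crude heuristic, an assumption for this sketch: treat {...} as a JSON object.
        if (trimmed.startsWith("{") && trimmed.endsWith("}")) {
            return trimmed;
        }
        // XML or plain text: store the raw content under a single "payload" element.
        return "{\"payload\":\"" + escape(body) + "\"}";
    }

    /** Escapes the characters that are not allowed unescaped inside a JSON string. */
    static String escape(String s) {
        StringBuilder sb = new StringBuilder();
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"':  sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n");  break;
                case '\r': sb.append("\\r");  break;
                case '\t': sb.append("\\t");  break;
                default:
                    if (c < 0x20) {
                        sb.append(String.format("\\u%04x", (int) c));
                    } else {
                        sb.append(c);
                    }
            }
        }
        return sb.toString();
    }
}
```

Wrapping non-JSON content this way means every stored document is valid JSON, which keeps the logs queryable regardless of the original content type.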

Configurations and Execution

To run this application you need Java and Maven. First build the project using Maven, and then run it with the java -jar .jar command.

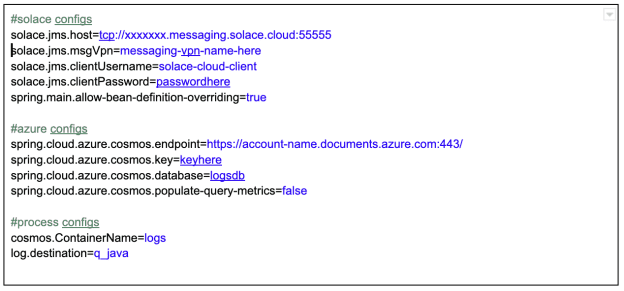

Before running this project you have to configure the Solace and Azure properties as below.

Project Config (application.properties) file
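As a hedged illustration of what such a file might contain, the fragment below uses the `solace.jms.*` property names from the Solace JMS Spring Boot starter and the `spring.cloud.azure.cosmos.*` names from the Spring Cloud Azure 4.x Cosmos starter. Both are assumptions about which starter versions are in use (older Azure starters use `azure.cosmos.*` instead), all values are placeholders, and pointing `log.destination` at the q_java queue is also an assumption. Check the repository for the actual file.

```properties
# Solace broker connection (placeholder values)
solace.jms.host=tcp://localhost:55555
solace.jms.msgVpn=default
solace.jms.clientUsername=default
solace.jms.clientPassword=default

# Destination read by the @JmsListener (assumed here to be the q_java queue)
log.destination=q_java

# Azure Cosmos DB (property names depend on the starter version; placeholders)
spring.cloud.azure.cosmos.endpoint=https://<your-account>.documents.azure.com:443/
spring.cloud.azure.cosmos.key=<your-key>
spring.cloud.azure.cosmos.database=<your-database>
```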

Solace Configs

The following Solace configurations are needed to run this project:

- A REST delivery point (RDP) and a queue

- Topic subscriptions for the queue to listen to GET and POST requests and responses

- RestConsumer for your backend REST API

- Queue binding to associate the above queue with the RestConsumer

Note that I have not discussed these in detail because the Codelab covers them with step-by-step instructions. I strongly recommend following it to configure the items above.
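To give a feel for how topic subscriptions like the ones in the list above select messages, here is a small matcher sketch. It assumes Solace-style wildcard semantics as I understand them (`*` as a wildcard at the end of a single level, `>` as the final level matching one or more remaining levels) and assumes gateway-mode topics of the form method plus path, such as `POST/todos/add`. The class name and the subscription strings are hypothetical and are not taken from the Codelab.

```java
/** Hypothetical matcher for Solace-style topic subscriptions; for illustration only. */
final class TopicMatcherSketch {

    static boolean matches(String subscription, String topic) {
        String[] sub = subscription.split("/");
        String[] top = topic.split("/");
        for (int i = 0; i < sub.length; i++) {
            // ">" as the last level matches one or more remaining topic levels.
            if (sub[i].equals(">") && i == sub.length - 1) {
                return top.length > i;
            }
            if (i >= top.length) {
                return false; // subscription has more levels than the topic
            }
            if (sub[i].endsWith("*")) {
                // "abc*" matches any level starting with "abc"; "*" matches any level.
                String prefix = sub[i].substring(0, sub[i].length() - 1);
                if (!top[i].startsWith(prefix)) {
                    return false;
                }
            } else if (!sub[i].equals(top[i])) {
                return false;
            }
        }
        return sub.length == top.length;
    }

    public static void main(String[] args) {
        System.out.println(matches("POST/>", "POST/todos/add")); // true
        System.out.println(matches("POST/>", "GET/todos/1"));    // false
    }
}
```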

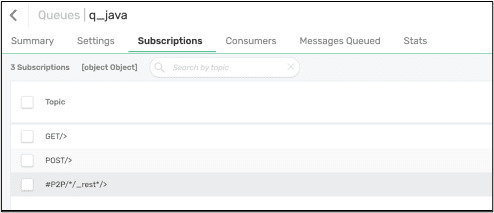

Apart from the above configs, you need another queue for the Java application, subscribed to the following topics so it can listen to the REST messaging requests and responses. In this case I named it “q_java”; once created, it should look like this:

Tests and Results

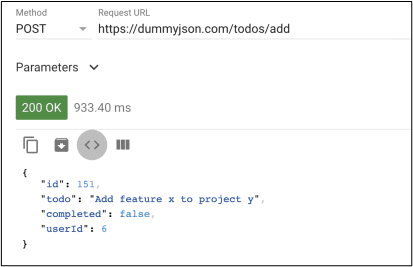

Once everything is set up, you can run some tests to verify your setup. Here I have used https://dummyjson.com as my backend, which exposes a POST endpoint at /todos/add for adding a todo.

First, check how long it takes to return the output for direct invocation.

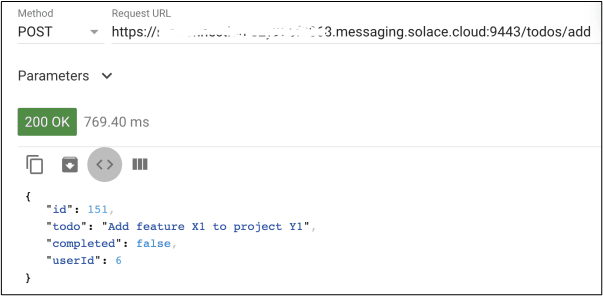

Then invoke the same API via Solace. The broker does not add much delay, though a single call like this is not a rigorous performance test.

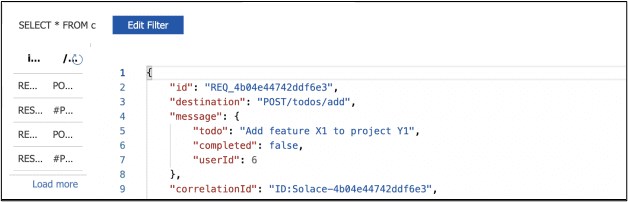

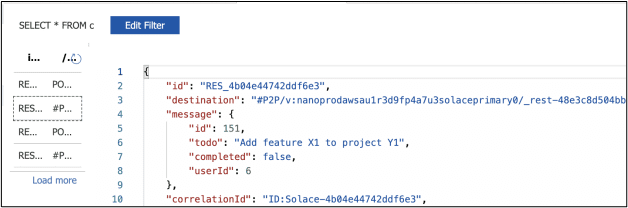

Now it’s time to see what’s stored in Cosmos DB. You can use the Data Explorer pane of Azure Cosmos DB to view the data and perform queries. The following screenshots show how the request and response are logged as 2 objects. Note the “REQ_” and “RES_” prefixes and their destinations.

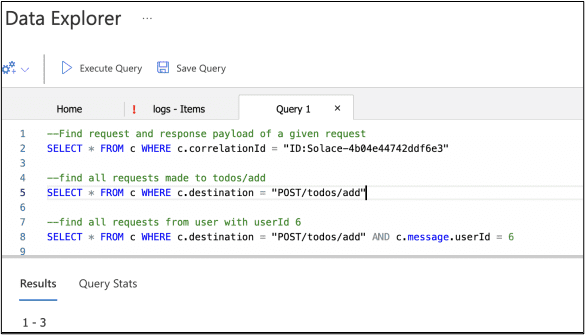

Cosmos DB allows you to store and access data in the form of documents, graphs, key-value pairs, and columns. The document data model lets you store JSON data with a flexible schema, so any well-formed JSON can be stored. With this feature you can store the REST requests and responses as JSON and then query them by their data properties.

I hope you enjoyed the article.
