Introduction

If your company is like most, the applications and AI agents that power your business run in diverse environments, including clouds, on-premises datacenters, field offices, manufacturing floors, and retail stores – just to name a few.

If these systems can only interact via tightly-coupled synchronous request/reply interactions, individually customized batch-oriented ETL processes, or bespoke integrations, you’re looking at slow responses, stale data and difficulty keeping up with constantly changing business needs and real-time demands.

For your enterprise to be real-time, information must be streamed between the applications, agents and devices that generate and process it so insights can be gleaned and decisions can be made – fast. To become more responsive and to take advantage of new technologies like AI, cloud, IoT and microservices, your architecture needs to support real-time, event-driven interactions.

Every business process is basically a series of events. An “event” can broadly be described as a change notification. These changes can have a variety of forms, but all have the common structure of an action that has occurred on an object. Event-driven architecture (EDA) is a way of building enterprise IT systems that lets applications and AI agents produce and consume these events.

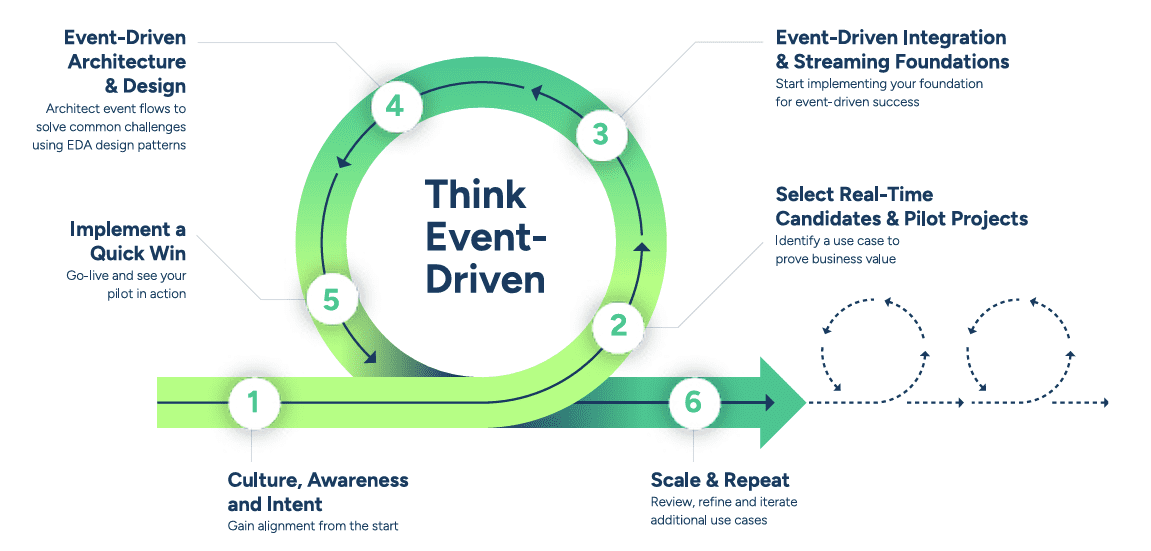

Implementing EDA is a journey, and like all journeys it begins with a single step. To get started down this path, you need to have a good understanding of your data, but more importantly, you need to adopt an event-first mindset.

That’s why the approach introduced in this paper is called “Think Event-Driven.” With this proven process, you will be on your way to getting key stakeholder engagement, seeing initial success, and transforming your entire organization.

How to Become Event-Driven

EDA is not new—capital markets trading platforms have been built this way for decades—but in recent years it has become a necessity across industries and use cases, and the advent of artificial intelligence (AI) makes it mission critical.

That’s because legacy approaches like message-oriented middleware (MOM); service-oriented architecture (SOA); extract, transform and load (ETL); and batch-based processing don’t effectively meet the needs of AI agents.

Before you can transform your enterprise to become more responsive, agile, and real-time through EDA, you must come to think of everything that happens in your business as an event, and think of those events as first-class citizens in your IT infrastructure.

With EDA, applications, microservices and AI agents communicate via events. Events are routed among these applications in a publish/subscribe manner according to subscriptions that indicate their interest in all manner of topics.

In other words, rather than a batch process or an enterprise service bus (ESB) orchestrating a flow, business flows are dynamically choreographed based on each business logic component’s interest and capability. This makes it easier to add new applications, as they can tap into the event stream without affecting any other system, do their thing, and add value.
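To make the publish/subscribe idea concrete, here is a minimal in-memory sketch. The `InMemoryBroker` class, the `order/created` topic, and the payload fields are hypothetical names chosen for illustration; a real event broker adds networking, persistence, and wildcard subscriptions.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Handler = Callable[[str, dict], None]


class InMemoryBroker:
    """Toy stand-in for an event broker: exact-match topics, synchronous delivery."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, payload: dict) -> int:
        """Deliver the event to every handler subscribed to the topic; return the count."""
        handlers = list(self._subscribers.get(topic, []))
        for handler in handlers:
            handler(topic, payload)
        return len(handlers)


broker = InMemoryBroker()
shipped, audited = [], []

# The publisher only knows the topic name, never the consumers.
broker.subscribe("order/created", lambda t, p: shipped.append(p["order_id"]))
first = broker.publish("order/created", {"order_id": "A-1"})

# A new consumer taps into the stream later; the publishing code is unchanged.
broker.subscribe("order/created", lambda t, p: audited.append(p["order_id"]))
second = broker.publish("order/created", {"order_id": "A-2"})
```

The second subscriber was added without touching the publisher or the first subscriber, which is the property that makes new applications cheap to introduce.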

So how do you do that? What should your strategy be?

High Level Strategic Approach

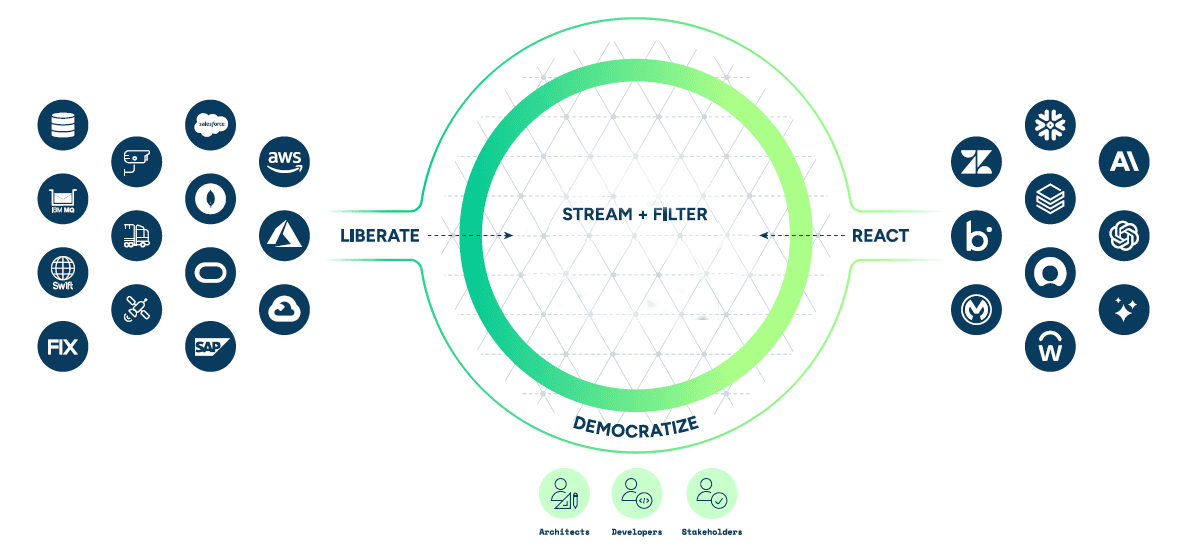

At a high level, if your organization wants to become event-driven, you need to do four things:

- Liberate: Break down data silos by enabling core systems, applications, and devices—like mainframes, ERPs, and IoT platforms—to publish data as events. This shifts data movement from batch to real-time, ensuring information is no longer trapped in isolated systems and is always immediately made available for action.

- Stream & Filter: Once liberated, data must flow across your organization. Use event brokers and intelligent routing to ensure each application, service, or team gets the data it needs, when it needs it.

- React: Empower applications, analytics engines, and intelligent agents to respond to changes the moment they happen—triggering workflows, making decisions, or surfacing alerts—so your business can stay ahead of what’s happening right now.

- Democratize: Make real-time data accessible not just to developers and architects, but to business users, analysts, and AI models through intuitive tools like event portals and catalogs. By democratizing access, teams can discover, subscribe to, and use event streams without having to depend on centralized IT or integration teams.

Practical Implementation Process

The next question is: how do you put these steps into practice?

The following six steps use the above strategy and have been proven to make the journey to EDA faster, smoother, and less risky in many real-world implementations.

These actions form the foundation of Think Event-Driven—a proven approach used by leading enterprises to build, scale, and grow event-driven systems with confidence.

The rest of this piece will break down each of these steps, explaining the importance, offering keys to success, and providing some examples you can apply to your own situation.

Step 1: Gain Alignment

EDA generally starts small and grows, but it’s important to get key stakeholders to embrace event-first thinking early on. This requires some thought leadership to get buy-in.

The ESB team needs to start thinking about choreography rather than orchestration. The API teams need to start thinking about event-driven APIs rather than just request/reply.

Most people in IT were trained in school to think procedurally. Whether you started out with Fortran or C, or Java or Node, most IT training and experience has been around function calls or RPC calls or Web Services to APIs – all synchronous. IT needs a little culture change.

Microservices don’t need to be calling each other, creating a distributed monolith. As an architect, as long as you know which applications, microservices and AI agents consume and produce which events, you can choreograph using a distributed network of event brokers known as an event mesh.

It’s important to ponder a little, read up, educate yourself, and educate stakeholders about the benefits of EDA: agility, responsiveness, and better customer experiences. Build support, strategize, and ensure that the next project – the next transformation, the next microservice, the next API – will be done the event-driven way.

You will need to think about which use cases can be candidates, look at them through the event-driven lens, and articulate a go-to approach to realize the benefits.

Step 2: Identify Candidates for Real-Time

Not all systems need change or can be changed to real-time, but most will benefit from an event-driven approach.

You’ve probably already thought about a bunch of processes and projects, APIs and AI agents, that would benefit from being real-time.

But what are the best candidates in your enterprise? The troublesome order management system? The drive to improve customer service with AI agents? The next generation payments platform that you are building? Would it make better sense to push master data (such as price updates or PLM recipe changes) to downstream applications, instead of polling for it?

There will be many candidates with different priorities and challenges, so look for projects which will do these three things:

- Alleviate pain points by addressing issues like brittle integrations, slow performance, or the inability to support new features—problems that frustrate teams and stall progress.

- Deliver meaningful business impact by improving customer experience, enabling competitive differentiation, or boosting profitability in ways that leadership can see and measure.

- Create momentum with a quick win—a project that can be delivered fast, shows tangible value, and builds excitement and confidence across your organization.

By starting with one high value project, you can begin to find the quirks unique to your organization that will inevitably be present at a larger scale. Keep in mind that not every data source is a candidate for EDA. Find a system that has robust data generation capabilities and can be easily modified to submit messages. These messages will be harnessed as your events later in the journey.

Make a shortlist of real-time candidates for your event-driven journey. It’s also important to bring in the stakeholders at this early stage because events are often business-driven, so not all your stakeholders will be technical. Consider which teams will be impacted by the transformation, and which specific people will need to be involved and buy in to make the project a success from all sides.

Step 3: Build Your Foundation

Once the first project is identified, it’s time to think about architecture and tooling.

EDA depends on decomposing monolithic applications and business processes into microservices and AI agents, and putting in place a runtime fabric that lets them talk to each other in a publish/subscribe one-to-many fashion.

It’s important to start the design-time right, and have the tooling to ensure that events can be described and cataloged, and have their relationships visualized.

You’ll want an architecture that’s modular enough to meet all of your use cases, and flexible enough to receive data from everywhere that you have data deployed (on-premises, private cloud, public cloud, etc.).

This is where the event-driven integration platform, with its event mesh runtime, comes in. Having an event-driven integration platform in place (even the most basic pieces of it) lets your first project use it from the start, so that applications and agents communicate through events, rather than via REST, as they come online.

The following runtime and design-time pieces are essential.

- Micro-Integrations

- Event Broker

- Event Mesh

- Event Portal

- Topic Taxonomy

Micro-Integrations

A key enabler of your event-driven foundation is the use of micro-integrations: lightweight, purpose-built components that do more than just connect systems. Unlike traditional connectors, micro-integrations perform inline logic and data transformation, allowing legacy systems, cloud APIs, and modern services to participate in the event mesh.

These micro-integrations can be implemented across low-code platforms, pro-code services, or serverless functions, making them versatile enough to suit any architecture. Whether enriching events before publishing or adapting legacy formats into modern payloads, micro-integrations help unify your enterprise into a truly event-native system.
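As a rough illustration of "adapting legacy formats into modern payloads", the sketch below converts a pipe-delimited record into a JSON event. The record layout (`orderId|date|amountInCents|region`), the field names, and the `order/created/{region}` topic are all assumptions invented for this example, not a format from any particular system.

```python
import json
from datetime import datetime
from typing import Tuple


def legacy_order_to_event(record: str) -> Tuple[str, str]:
    """Turn a hypothetical pipe-delimited legacy record into (topic, JSON payload)."""
    order_id, yyyymmdd, amount_cents, region = record.strip().split("|")
    payload = {
        "orderId": order_id.lstrip("0") or "0",      # drop zero padding
        "orderDate": datetime.strptime(yyyymmdd, "%Y%m%d").date().isoformat(),
        "amount": int(amount_cents) / 100,           # cents -> currency units
        "region": region.lower(),
    }
    topic = f"order/created/{payload['region']}"
    return topic, json.dumps(payload, sort_keys=True)


topic, body = legacy_order_to_event("000123|20240105|1999|SEA\n")
```

The same function could run in a serverless function or a small service; what matters is that the transformation happens inline, before the event reaches the mesh.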

Event Broker

An event broker is the fundamental runtime component for routing events in a publish/subscribe manner with low latency and guaranteed delivery. Applications, AI agents and microservices are decoupled from each other and communicate via the event broker. Events are published to the event broker on topics that follow a topic hierarchy (or taxonomy), and are subscribed to by one or more applications, microservices, analytics engines or data lakes.

An ideal event broker uses open protocols and APIs to avoid vendor lock-in. With open standards, you have the flexibility of choosing the appropriate event broker provider over time. Think about the independence TCP/IP gave to customers choosing networking gear – open standards made the internet happen. By leveraging the open source community, it’s easier to create on-the-fly changes, and you’re not stuck having to consult closed documentation or sit in a support queue.

Event Mesh

An event mesh is a network of event brokers that dynamically routes events between applications no matter where they are deployed – on-premises, in any cloud, or even at IoT edge locations. Just like the internet is made possible by routers converging routing tables, the event mesh converges topic subscriptions with various optimizations for distributed event streaming.

While you might start with a single event broker in a single location, modern applications are often distributed. Whether it’s on-premises, in multiple clouds, or in factories, branch offices, and retail stores – applications, agents and devices are distributed.

An event originating in a retail store may have to go to the local store’s systems, the centralized ERP, the cloud-based data lake, and to an external partner. As such, event distribution should be transparent to producers and consumers – they should be connecting to their local event broker, just like you or I would connect to our home WiFi router to access all websites, no matter where they are hosted.
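The idea that producers and consumers only talk to their local broker can be sketched with a toy mesh. This is a simplified model under stated assumptions: the `MeshBroker` class is hypothetical, topics are matched exactly, every broker is linked directly to every other, and events are forwarded at most one hop. Real event meshes propagate subscriptions with their own routing protocols.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Set

Handler = Callable[[str, dict], None]


class MeshBroker:
    """Toy broker node: exact-match topics, full-mesh peers, single-hop forwarding."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.peers: List["MeshBroker"] = []
        self.local: Dict[str, List[Handler]] = defaultdict(list)
        # topic -> names of peers that reported at least one local subscriber
        self.remote_interest: Dict[str, Set[str]] = defaultdict(set)

    def link(self, other: "MeshBroker") -> None:
        self.peers.append(other)
        other.peers.append(self)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self.local[topic].append(handler)
        for peer in self.peers:              # advertise interest across the mesh
            peer.remote_interest[topic].add(self.name)

    def publish(self, topic: str, payload: dict) -> None:
        self._deliver_local(topic, payload)
        for peer in self.peers:              # forward only where someone subscribed
            if peer.name in self.remote_interest.get(topic, set()):
                peer._deliver_local(topic, payload)

    def _deliver_local(self, topic: str, payload: dict) -> None:
        for handler in self.local.get(topic, []):
            handler(topic, payload)


store, cloud, partner = MeshBroker("store"), MeshBroker("cloud"), MeshBroker("partner")
store.link(cloud)
store.link(partner)
cloud.link(partner)

received = []
cloud.subscribe("sale/completed", lambda t, p: received.append(("data-lake", p["id"])))
store.subscribe("sale/completed", lambda t, p: received.append(("store-pos", p["id"])))

store.publish("sale/completed", {"id": 42})
forwarded_to = sorted(store.remote_interest["sale/completed"])
```

In this toy, brokers must be linked before subscriptions are added, because interest is advertised at subscribe time. The partner broker receives nothing because nobody there subscribed.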

There are multiple ways an event mesh supports your application architecture:

- Connects and choreographs microservices using publish/subscribe, topic filtering, and guaranteed delivery over a distributed network

- Pushes events from on-premises to cloud services and applications

- Enables digital transformations for Internet of Things (IoT)

- Enables Data as a Service (DaaS) across lines of business for insights, analytics, ML, and more

- Gives you a much more reactive and responsive way to aggregate events

Of course, how you deploy your event mesh will help determine the kind of event broker you’re looking for.

Event Portal

An event portal – just like an API portal – is your design and runtime view into your event mesh. An event portal gives architects an easy GUI-based tool to define and design events in a governed manner, and offers them for use by publishers and subscribers. Once you have defined events, you can design microservices or applications in the event portal and choose which events they will consume and produce by browsing and searching the event catalog.

As your events get defined, they are listed in an event catalog for discovery. This documentation alone is beneficial, but an event portal can also help you down the line to automate and push application configuration to the event broker, or manage application and event promotion across different environments.

This will help to visualize and describe the events that you’re able to process. When building out the system for more event sources and different ways to consume them, the catalog is a great reference to know what’s already been built so you can avoid duplication of effort.

Topic Taxonomy

Topic routing is the lifeblood of EDA. Topics are event metadata: tags of the form a/b/c, much like an HTTP URL or a file path, that describe the event. The event broker understands topics and can route events based on who subscribed to them, including wildcard subscriptions.

As you start your EDA journey, it’s important to set up a topic naming convention early on and to govern it. A solid taxonomy is probably the most important design investment you will make, so it’s best not to take shortcuts here. Having a good taxonomy will significantly help with event routing, and the conventions will be obvious to application developers.

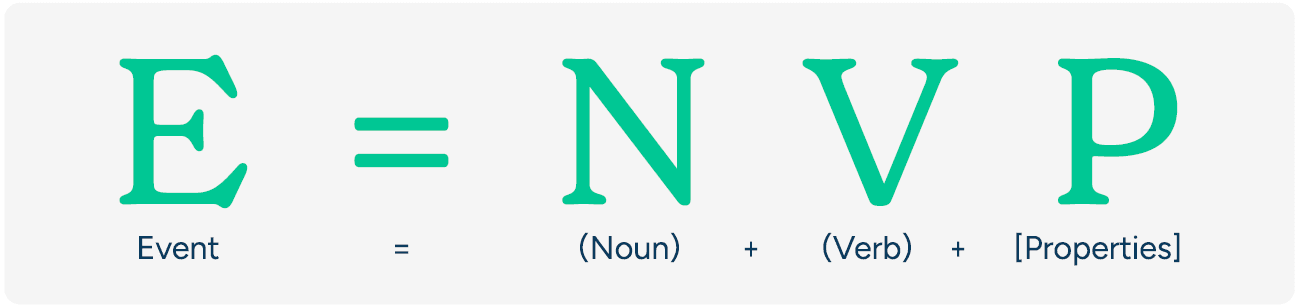

Just like a well-formed sentence in English, a well-modeled event has a subject (noun), an action (verb), and supporting details (context). That’s the idea behind the formula E = NVP, which stands for “Event = Noun + Verb + Properties”.

- Noun identifies the subject — it defines what the event is about. This is usually the core business entity, such as an order, payment, customer, flight, or shipment. The noun anchors the event in the domain language of your business and directly informs topic naming. Choosing clear, consistent nouns makes it easier for teams to filter, route, and reason about events across systems.

- Verb captures the action—what happened to the entity. Common verbs include created, updated, canceled, or shipped. Verbs define the business event and often correlate to transitions in a process or state machine. Selecting precise verbs helps align event flows with real-world processes and supports choreography across systems.

- Properties are the payload—the structured data that adds full context to the event. These include identifiers, timestamps, descriptions, and any business-relevant fields that consumers need to act on the event. Properties should be lean but informative: enough to enable downstream action without overwhelming consumers or tightly coupling them to internal schemas.

Following this simple formula will give your events a clear structure that makes them easier to understand, route, and reuse. Not only does this make them more intuitive, it also makes it easier to enforce a consistent topic taxonomy. For example:

When naming your events and structuring payloads, this model helps align developers and business stakeholders alike. It also supports smarter topic naming and filtering across your event mesh.
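One way to picture E = NVP in code is a small data class whose topic prefix is derived from the noun and verb. The `Event` class, its field names, and the two-level `noun/verb` prefix are illustrative assumptions; your own taxonomy will likely have more levels.

```python
from dataclasses import dataclass, field
from typing import Any, Dict


@dataclass(frozen=True)
class Event:
    noun: str                      # business entity, e.g. "order"
    verb: str                      # what happened to it, e.g. "created"
    properties: Dict[str, Any] = field(default_factory=dict)   # payload

    @property
    def topic(self) -> str:
        """Derive a two-level topic prefix from the noun and verb."""
        return f"{self.noun.lower()}/{self.verb.lower()}"


event = Event("Order", "Created", {"orderId": "A-1", "total": 19.99})
```

Keeping the noun and verb as separate fields means the topic is always generated the same way, rather than typed by hand in each application.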

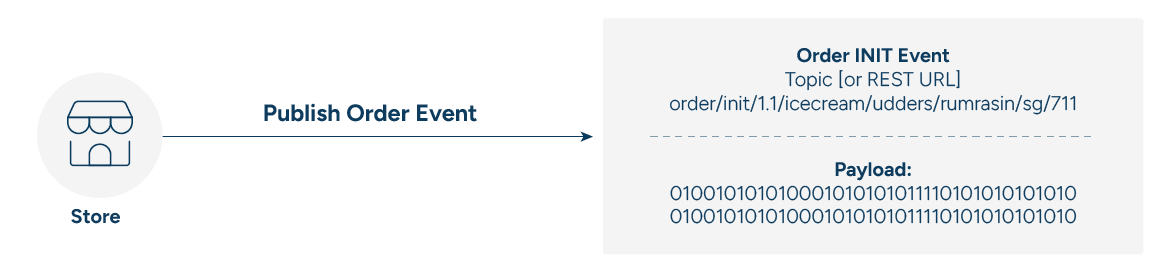

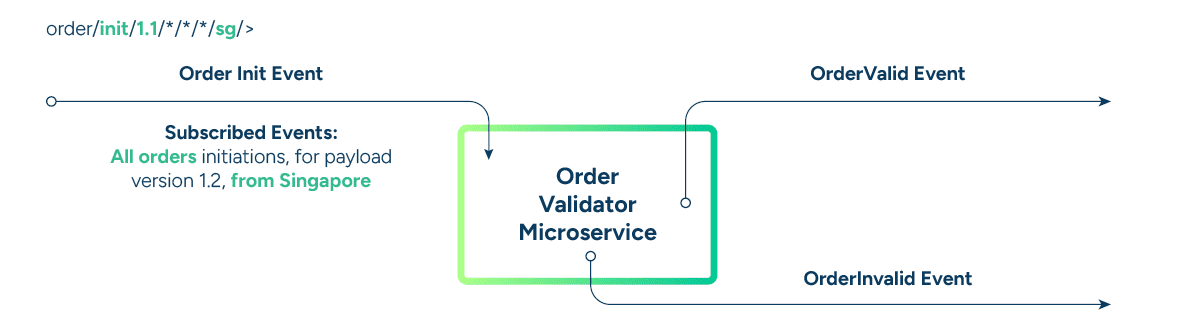

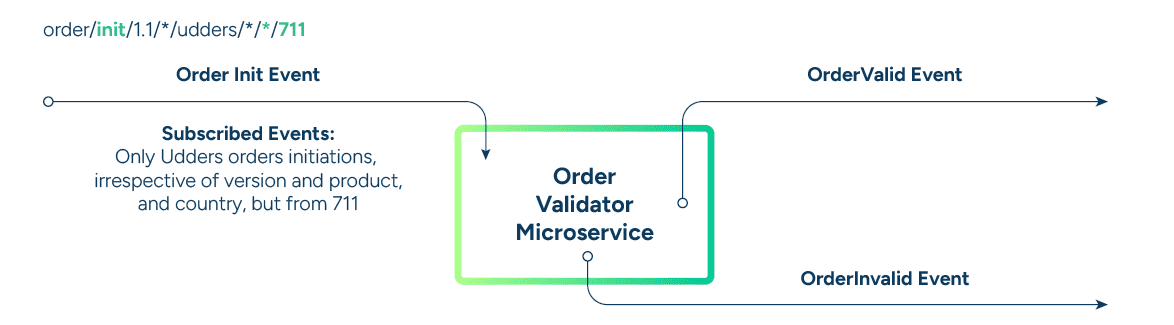

Example: Topic Taxonomy

Let’s say a CPG company receives an order for a specific product—like a new SKU of energy bars—via a regional e-commerce partner in Southeast Asia. The company has structured its event topics to reflect key metadata like event type, product category, brand, region, and channel.

Using these topic structures:

- Order validation services subscribe to order/init/1.1/> to catch all new orders across categories and regions.

- Regional processors can subscribe to order/valid/*/snacks/*/sea/* to handle validated orders for snack products in Southeast Asia.

- Analytics engines or downstream systems might subscribe to order/> to capture a full view of order activity across the business.

By applying topic taxonomy best practices, the company ensures efficient routing, localized processing, and global observability—all without tight coupling between systems.
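The subscriptions above can be checked with a small matcher. This is a simplified sketch: the `*` and `>` wildcards come from the example, but the semantics used here (`*` matches exactly one level, a trailing `>` matches one or more remaining levels), the seven-level layout, and sample values such as `acme` and `ecommerce` are assumptions. Consult your broker's documentation for its actual wildcard rules.

```python
from typing import List


def topic_matches(subscription: str, topic: str) -> bool:
    """Simplified matcher: '*' = exactly one level, trailing '>' = one or more levels."""
    sub_levels: List[str] = subscription.split("/")
    topic_levels: List[str] = topic.split("/")
    for i, level in enumerate(sub_levels):
        if level == ">" and i == len(sub_levels) - 1:
            return len(topic_levels) > i          # at least one level remains
        if i >= len(topic_levels):
            return False                          # subscription is longer than topic
        if level != "*" and level != topic_levels[i]:
            return False
    return len(sub_levels) == len(topic_levels)


# Hypothetical layout: order/{stage}/{version}/{category}/{brand}/{region}/{channel}
new_order = "order/init/1.1/snacks/acme/sea/ecommerce"
valid_order = "order/valid/1.1/snacks/acme/sea/ecommerce"

subscriptions = {
    "validation": "order/init/1.1/>",
    "regional": "order/valid/*/snacks/*/sea/*",
    "analytics": "order/>",
}
matches_new = sorted(n for n, s in subscriptions.items() if topic_matches(s, new_order))
matches_valid = sorted(n for n, s in subscriptions.items() if topic_matches(s, valid_order))
```

Under these assumptions the new order reaches the validation and analytics subscribers, while the validated order reaches the regional and analytics subscribers.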

Because events from the publisher (microservice, application, legacy-to-event adapter) have topics as metadata, consumers can use them to subscribe to events.

Topic subscriptions would be:

- Consume and validate all orders of version 1.1 and publish the order valid message: order/init/1.1/>

- Consume and process all valid orders for snack products originating in Singapore: order/valid/*/snacks/*/*/sg/*

- All orders no matter what stage, category, location: order/>

Step 4: Design the Flow for your Pilot Project

Essentially, an event flow is a business process that’s translated into a technical process. The event flow is the way an event is generated, sent through your broker, and eventually consumed.

Getting started with a pilot project is the best way to learn through experimentation before you scale.

With the pilot, as you identify events and the event flow, the event catalog will start taking shape. Some event portals can even give you an AI assist. Whether you maintain the catalog in a simple spreadsheet or in an event portal, the initial event catalog also serves as a starting point of reusable events – which applications in the future will be able to consume off the event mesh.

You want to choose a flow that makes the most sense for modernizing your current state or reducing pain. An in-flight project or an upcoming transformation makes an ideal candidate, whether it’s for innovation or technical debt reduction via performance, robustness, or cloud adoption. The goal is for it to become a reference for future implementations. Pick a flow that can be your quick win.

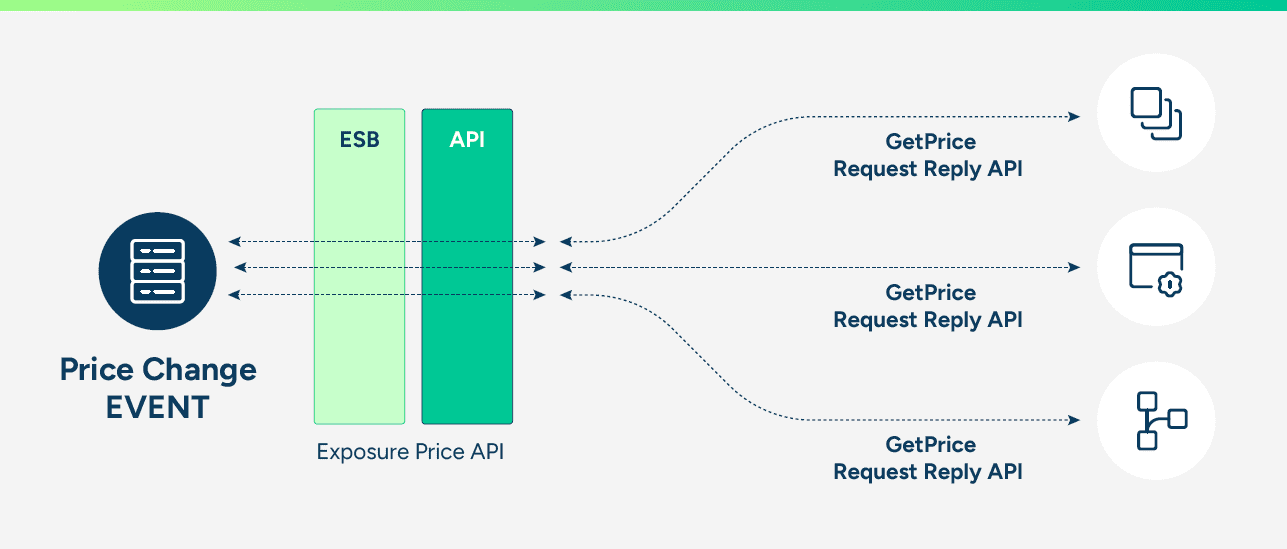

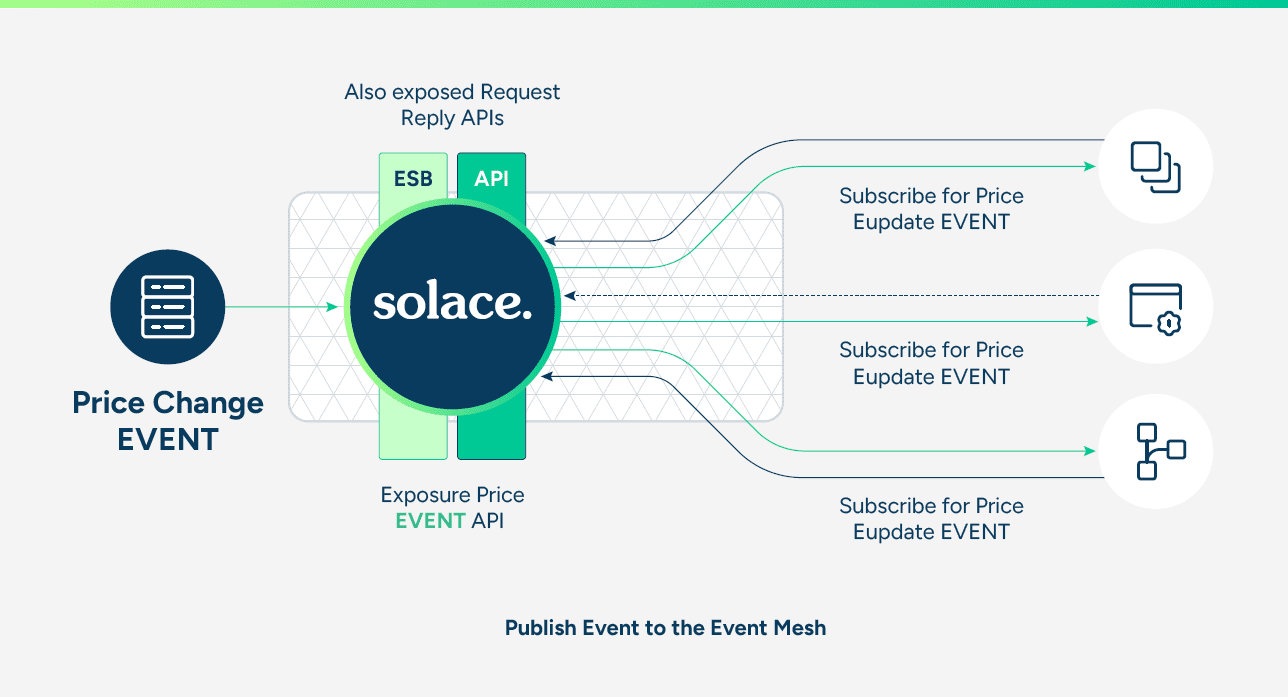

Consider this product price change flow as an example:

The price change itself is considered the event – ‘price’ being the noun, and ‘change’ being the verb.

In an existing architecture that is not event-enabled, the price change event is:

- Not pushed – a request/reply API is required, or maybe even a batch process

- Not real-time – downstream applications don’t know about the price change until they ask

- Not scalable – at least not in a way that’s remotely cost effective

- Bursty – downstream applications continuously call the API, which can cause bursts of load

By event-enabling the price change and implementing EDA with an event mesh, downstream apps subscribe to the price change events and receive event notifications as they happen in real-time. The event mesh filters and only pushes the events that the downstream applications have subscribed to (in accordance with the topic taxonomy).

Events are queued and throttled to reduce load, making it easier to scale. They are also delivered in a lossless, guaranteed manner.
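A minimal sketch of queued, consumer-paced delivery for the price change flow might look like the following. The `QueuedBroker` class, the `price/changed` topic, and the SKU and price values are hypothetical; a real broker would persist the queues to provide guaranteed delivery.

```python
from collections import deque
from typing import Deque, Dict, List


class QueuedBroker:
    """Toy broker that holds events in a per-subscriber queue until they are pulled."""

    def __init__(self) -> None:
        self._queues: Dict[str, Dict[str, Deque[dict]]] = {}

    def subscribe(self, topic: str, subscriber: str) -> None:
        self._queues.setdefault(topic, {})[subscriber] = deque()

    def publish(self, topic: str, event: dict) -> None:
        for queue in self._queues.get(topic, {}).values():
            queue.append(event)          # nothing is dropped if a consumer is slow

    def drain(self, topic: str, subscriber: str, max_events: int) -> List[dict]:
        """Hand over at most max_events, so the consumer controls its own rate."""
        queue = self._queues[topic][subscriber]
        return [queue.popleft() for _ in range(min(max_events, len(queue)))]


broker = QueuedBroker()
broker.subscribe("price/changed", "web-store")
for new_price in (9.99, 10.49, 10.99):
    broker.publish("price/changed", {"sku": "BAR-1", "price": new_price})

first_batch = broker.drain("price/changed", "web-store", max_events=2)
second_batch = broker.drain("price/changed", "web-store", max_events=2)
```

The publisher emits three changes in a burst, but the consumer takes them two at a time and still sees every change in order.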

Step 5: Develop and Deploy Event-Driven Elements

Once pilot flows have been identified and an initial event catalog is taking shape, the next step is to start an event-driven design by decomposing the business flow into event-driven microservices and agents, and identifying the events in the process.

Decomposing an event flow reduces the total effort required to ingest event sources, as each microservice or agent will handle a single aspect of the total event flow. New business logic can be built using microservices, while existing applications – SAP, mainframe, custom apps – can be event-enabled with adapters.

Orchestration vs. Choreography

There are two ways to manage the agents and microservices in your event flows: orchestration and choreography. With orchestration, they work in a call-and-response (request/reply) fashion, and they’re tightly coupled, i.e., highly dependent on each other, tightly wired into each other. With event routing choreography, agents and microservices are reactive (responding to events as they happen) and loosely coupled — which means that if one application fails, business services not dependent on it can keep on working while the issue is resolved.

In this step, you’ll need to identify which steps have to occur synchronously and which ones can be asynchronous. Synchronous steps are the ones that need to happen when the application or API invoking the flow is waiting for a response, or blocking.

Asynchronous events can happen after the fact and often in parallel – such as logging, audit, or writing to a data lake. In other words, applications that are fine with being “eventually consistent” can be dealt with asynchronously. The events float around, and microservices choreography determines how they get processed. Because of these key distinctions, you should keep the synchronous parts of the flow separate from the asynchronous parts.

This modeling of event-driven processes can be done manually at first or with an event portal, where you can visualize and choreograph the microservices.

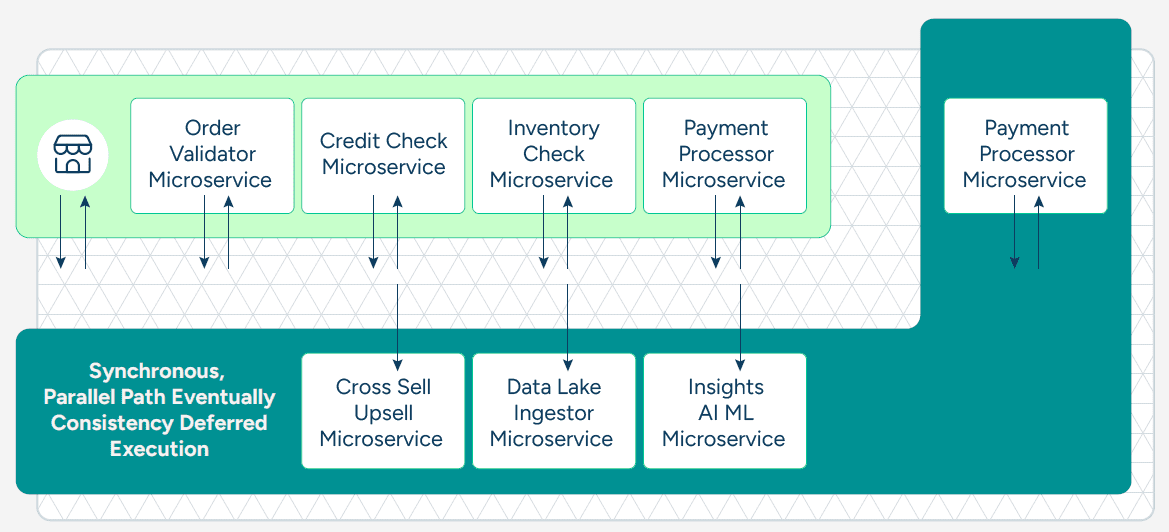

Example: Eventual Consistency

One of the problems with a RESTful synchronous API-based system is that each step of the business flow – each microservice – is inline. When a system submits an order, should it wait for all the order processing steps to be completed, or should only a few mandatory steps be inline?

If you think about it, all the “insights” processes do not need to happen before the point of sale systems get an order confirmation – they are just going to slow down the overall response time. In reality, only the order ingestion and validation need to be inline – everything else can be done asynchronously.

The event mesh provides guaranteed delivery, so the non-inline microservices can get the data a little after the fact, but in a throttled, guaranteed manner. The event stream flows into the systems in a parallel manner, thereby further improving performance and latency.
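The split between inline and asynchronous steps can be sketched with a queue and a background worker. The function names, the validation rule (quantity must be positive), and the `order/valid` event type are assumptions for illustration; in production the queue would be a broker-managed, persistent one.

```python
import queue
import threading
from typing import List

events: queue.Queue = queue.Queue()
insights_log: List[str] = []


def submit_order(order: dict) -> dict:
    """Inline path: ingest and validate, then confirm without waiting for insights."""
    if order.get("quantity", 0) <= 0:
        return {"orderId": order.get("orderId"), "status": "rejected"}
    events.put({"type": "order/valid", "order": order})   # hand off for later processing
    return {"orderId": order["orderId"], "status": "confirmed"}


def insights_worker() -> None:
    """Asynchronous path: runs after the confirmation has been returned."""
    while True:
        event = events.get()
        if event is None:            # sentinel used to stop the worker
            events.task_done()
            break
        insights_log.append(event["order"]["orderId"])
        events.task_done()


worker = threading.Thread(target=insights_worker, daemon=True)
worker.start()

confirmation = submit_order({"orderId": "A-1", "quantity": 2})
rejection = submit_order({"orderId": "A-2", "quantity": 0})

events.join()        # only so this demo can inspect the result deterministically
events.put(None)
worker.join()
```

The point of sale gets its confirmation as soon as validation finishes; the insights processing catches up on its own schedule.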

Parasitic Listeners

As events are flowing and are cataloged, they can also be consumed by analytics, audits, compliance, and data lakes. Authorized applications will receive a wildcard-based, filtered stream of events in a distributed manner – with no change to the existing microservice, and no ESB or ETL changes.

Event Sources and Sinks – Dealing with Legacy Applications

There are a few kinds of event sources and sinks that you should be aware of. Some sources and sinks are already event-driven, and there’s little processing you’ll need to do to consume their events. Others are what we’ll call “API-ready,” meaning they have an API that can generate messages that can be ingested by your event broker, usually through another microservice or application.

Although there’s some development effort required, you can get these sources to work with your event broker fairly easily. Then there are legacy applications that aren’t primed in any way to send or receive events, making it difficult to incorporate them into event-driven applications and processes.

Here’s a breakdown of the three kinds of event sources and sinks:

Event-Driven Applications

Event-driven applications can publish and subscribe to events, and are topic and taxonomy aware. They are real-time, responsive, and leverage the event mesh for event routing, eventual consistency, and deferred execution.

API-Ready Applications

While API-ready applications may not be able to publish events to the relevant topic, they can publish events that can be consumed via an API. In this case, an adapter microservice can be used to subscribe to the “raw” API (an event mesh can convert REST APIs to topics), inspect the payload, and publish the event to appropriate topics derived from the contents of the payload. This approach works better than content-based routing, which requires payloads to be governed very strictly, down to their semantics, and that is not always practical.
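An adapter of this kind might look roughly like the sketch below, which inspects a raw JSON body and derives a topic from its contents. The field names (`validated`, `version`, `category`, `region`) and the five-level topic layout are hypothetical and would follow your own taxonomy.

```python
import json
from typing import Tuple


def route_raw_event(raw_body: str) -> Tuple[str, dict]:
    """Inspect a raw API payload and derive the topic it should be republished on."""
    payload = json.loads(raw_body)
    stage = "valid" if payload.get("validated") else "init"
    topic = "/".join([
        "order",
        stage,
        str(payload.get("version", "unknown")),
        str(payload.get("category", "unknown")).lower(),
        str(payload.get("region", "unknown")).lower(),
    ])
    return topic, payload


topic, payload = route_raw_event(
    '{"orderId": "A-1", "version": "1.1", "category": "Snacks", "region": "SEA"}'
)
```

Only the adapter needs to understand the raw payload; every downstream consumer can filter on the derived topic.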

Legacy Applications

You’ll probably have quite a few legacy systems that are not even API-enabled. As most business processes consume or contribute data to these legacy systems, you’ll need to determine how to integrate them with your event mesh. Are you going to poll the system? Or invoke them via adapters when there is a request for data or updates? Or are you going to off-ramp events and cache them externally? In any case, you’ll need to figure out how to event-enable legacy systems that don’t even have an API to talk to.
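If you choose to poll, one common pattern is to compare successive snapshots and publish only the differences as events. The sketch below assumes the legacy system can be read as a simple SKU-to-price mapping; the `diff_snapshots` function, the `price/...` topics, and the sample data are hypothetical.

```python
from typing import Dict, List, Tuple

Snapshot = Dict[str, float]          # e.g. SKU -> price read from the legacy system


def diff_snapshots(previous: Snapshot, current: Snapshot) -> List[Tuple[str, dict]]:
    """Compare two polls of a legacy system and emit (topic, payload) change events."""
    events: List[Tuple[str, dict]] = []
    for key in sorted(current.keys() | previous.keys()):
        if key not in previous:
            events.append(("price/created", {"sku": key, "price": current[key]}))
        elif key not in current:
            events.append(("price/deleted", {"sku": key}))
        elif previous[key] != current[key]:
            events.append(("price/changed",
                           {"sku": key, "old": previous[key], "new": current[key]}))
    return events


poll_1 = {"BAR-1": 9.99, "BAR-2": 4.50}
poll_2 = {"BAR-1": 10.49, "BAR-3": 2.25}
changes = diff_snapshots(poll_1, poll_2)
```

The polling stays confined to one adapter, while everything downstream receives pushed events.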

The key to accommodating legacy systems is identifying them early and getting to work on them as quickly as possible.

For more details and examples, read How to Tap into the 3 Kinds of Event Sources and Sinks.

Go Cloud-Native Where You Can

The accessibility and scalability of the cloud mirror those of an event mesh, which makes the two perfect candidates to deploy together. That said, you can’t expect to have all of your events generated or processed in the cloud. To ignore on-premises systems would be a glaring oversight. With an event mesh implemented, it doesn’t matter where your data is, so you can more easily take a hybrid approach.

For example, your data lake might be in Azure while your AI and ML capabilities might be in GCP. You might have manufacturing and logistics in China or Korea and a market in India or the USA. With 5G about to unleash the next wave of global connectivity, your event-driven backbone needs to work hand-in-hand with two patterns, 180 degrees apart: hybrid/multi-cloud and an intelligent edge.

By using standardized protocols, smart topic taxonomy, and an event mesh, you are free to run business logic wherever you want.

Example: Order Management

An order management process may see these microservices in an event flow following a point of sale:

- Order validator

- Credit check

- Inventory check

- Payment processor

- Order processor

An order for rum raisin ice cream is initiated when a point of sale system makes an API call to submit it:

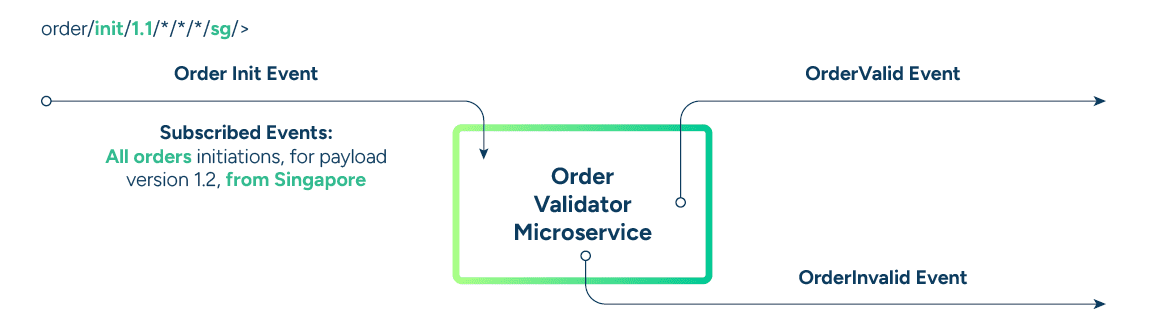

The Order Validator microservice consumes the new order event, and produces the valid order, or invalid order event. The valid order event is then consumed by the Credit Check microservice, which similarly produces the next events.

Subscribed Events: all order initiations, irrespective of version and product

Published Events: order validation status with other metadata
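A sketch of the Order Validator's consume-and-produce behavior could look like this. The required fields, the positive-quantity rule, and the topic levels after `order/init` are assumptions for illustration; the real rules would come from your business logic.

```python
from typing import Tuple

REQUIRED_FIELDS = ("orderId", "sku", "quantity")     # hypothetical validation rule


def validate_order(topic: str, order: dict) -> Tuple[str, dict]:
    """Consume an order/init/... event and return the event to publish next."""
    levels = topic.split("/")
    if levels[:2] != ["order", "init"]:
        raise ValueError(f"not an order initiation topic: {topic}")
    missing = [name for name in REQUIRED_FIELDS if name not in order]
    status = "invalid" if missing or order.get("quantity", 0) <= 0 else "valid"
    out_topic = "/".join(["order", status] + levels[2:])   # keep the remaining levels
    return out_topic, {**order, "status": status, "missingFields": missing}


good_topic, good_event = validate_order(
    "order/init/1.1/icecream/sg", {"orderId": "A-1", "sku": "RUM-RAISIN", "quantity": 3}
)
bad_topic, bad_event = validate_order(
    "order/init/1.1/icecream/sg", {"orderId": "A-2", "sku": "RUM-RAISIN"}
)
```

The validator never calls the Credit Check service directly. It only publishes the outcome, and whichever services subscribe to `order/valid/...` pick it up.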

Example: Event Routing

Issue: Orders from the US need different validation rules than orders from Singapore, and the payload has changed from JSON to protobufs.

Solution: A new (reused) microservice is created to serve the business logic of validating US orders with the new payload, and topic subscriptions are rewired. The new microservice starts listening to the relevant topic, consuming version 1.2 order initiation events from the US. Nothing else changes!

Issue: Want to monitor all orders coming from the 711 chain.

Solution: Implement a microservice with the relevant Insights business logic, look up the event in the event catalog, and start subscribing to events using wildcards.

Step 6: Scale and Repeat

Once the design is done and the first application gets delivered as an event-native application, the event catalog also starts to get populated.

Getting a quick win with the event catalog as a main deliverable is just as important as the other business logic, and it drives innovation and reuse.

Hopefully, with the ability to do more things in real-time, you can demonstrate agility and responsiveness of applications, which in turn leads to a better customer experience. With stakeholder engagement, you can help transform the whole organization!

Now that you have your template for becoming event-driven and hopefully your first quick win in place, you can rinse and repeat. Go ahead and choose your next event flow!

From here, you can walk through the steps again for the next few projects. As you go, the event catalog in your event portal will be populated with more and more events. Existing events will start to get reused by new consumers and producers. A richer catalog will open up more opportunities for real-time processing and insights.

Culture change is a constant but gets easier with showcasing success. The integration/middleware team might own the event catalog and event mesh, while the application and LOB teams use it and contribute to it. LOB-specific event catalogs and localized event brokers are also desirable patterns, depending on how federated or centralized the organization’s technology teams and processes are.

As you scale, more applications produce and consume events, often starting with reusing existing events. That is how the event-driven journey snowballs as it scales!

Conclusion

Constantly changing, real-time business needs demand one thing: digital transformation. The world is not slowing down, so your best bet is to identify ways you can – cost-effectively and efficiently – upgrade your enterprise architecture to keep up with the times, but it’s not an easy task.

Most people in IT were trained in school to think procedurally. Whether you started with Fortran or C or Java or Node, most IT training and experience has been around function calls, RPC calls, Web Services, APIs, or ESB orchestrating flows – all synchronous. Naturally, dealing with the world of asynchronous messaging requires a little culture change, a little thought process switch.

Microservices don’t need to be calling each other, creating a distributed monolith. Events can float around on an event mesh to be consumed and acted upon by your applications, AI agents and microservices.

Architects and developers need a platform and a set of tools to help them work together to achieve the real-time, event-driven goals for their organizations.

With these six steps, you can make the leap with the correct strategy and tools in hand that will support your real-time, event-driven journey. Solace Platform helps enterprises design, deploy, and manage event-driven systems, and can be deployed in every cloud and platform as a service.

Sumeet Puri is Solace's chief technology solutions officer. His expertise includes architecting large-scale enterprise systems in various domains, including trading platforms, core banking, telecommunications, supply chain, and machine-to-machine track and trace, and, more recently, in spaces related to big data, mobility, IoT, and analytics.

Sumeet's experience encompasses evangelizing, strategizing, architecting, program managing, designing and developing complex IT systems from portals to middleware to real-time CEP, BPM and analytics, with paradigms such as low latency, event driven architecture, SOA, etc. using various technology stacks. The breadth and depth of Sumeet's experience makes him sought after as a speaker, and he authored the popular Architect's Guide to Event-Driven Architecture.

Prior to his tenure with Solace, Sumeet held systems engineering roles for TIBCO and BEA, and he holds a bachelor of engineering with honors in electronics and communications from Punjab Engineering College.