If you are following the key steps to becoming an event-driven enterprise and are ready to dive into the world of events, there are a few things you should know first. For example, how do you deal with legacy systems that don’t even have an API to talk to? Before you can start consuming events, you need to do a little bit of work to get your sources working with your event broker.

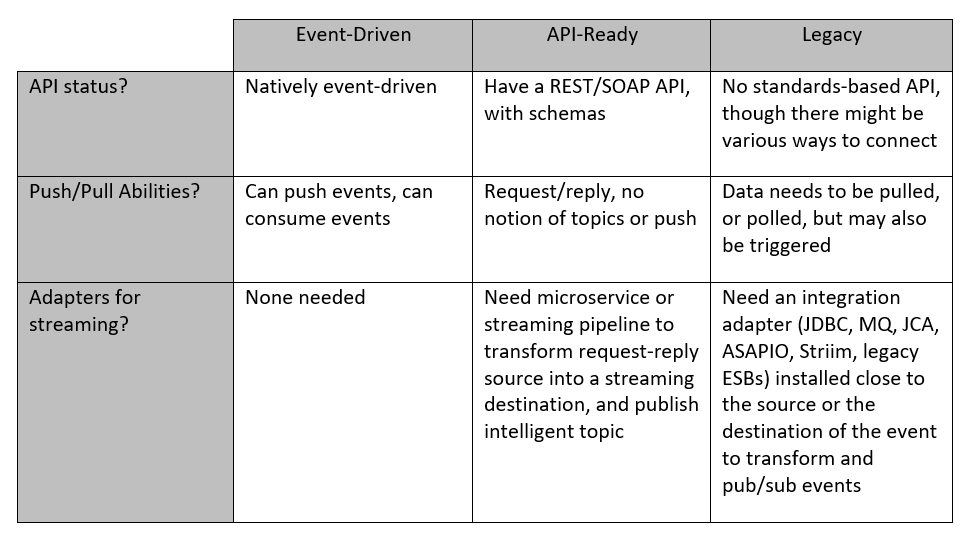

There are three kinds of event sources and sinks that you should be aware of. Some are already event-driven, and you’ll need to do little processing to consume their events. Others we’ll call “API-ready”: they have an API that can generate messages for your event broker to ingest, usually through another microservice or application. Although some development effort is required, you can get these sources working with your event broker fairly easily. Finally, there are legacy applications, which aren’t primed in any way to send or receive events, making it difficult to incorporate them into event-driven applications and processes.

Before breaking down the three kinds of event sources and sinks, let’s look at the foundation they all rely on.

The Basic Foundation – Event Brokers and an Event Mesh

Event brokers are the runtime components that decouple microservices, APIs, and streaming pipelines by acting as the “intelligent glue” between them. The event broker routes events based on topic metadata in a publish/subscribe manner, including filtering events by topics and wildcards. Other important capabilities include guaranteed delivery, burst handling, and throttling of event flow.
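The routing decision at the heart of this is whether a published topic matches a subscriber’s filter. Here is a minimal Python sketch of that check, assuming simplified wildcard semantics: `*` matches exactly one whole level, and a trailing `>` matches one or more remaining levels. `topic_matches` is a hypothetical helper, not a broker API, and real brokers differ in the details (MQTT, for example, uses `+` and `#` as its wildcards).

```python
def topic_matches(subscription: str, topic: str) -> bool:
    """Return True if `topic` matches the `subscription` filter.

    Simplified wildcard rules assumed for this sketch:
      '*' as a whole level matches exactly one level;
      '>' as the last level matches one or more remaining levels.
    """
    sub_levels = subscription.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(sub_levels):
        if level == ">" and i == len(sub_levels) - 1:
            # '>' needs at least one more level in the topic
            return len(topic_levels) > i
        if i >= len(topic_levels):
            return False  # topic is shorter than the filter
        if level != "*" and level != topic_levels[i]:
            return False  # literal level does not match
    # All filter levels matched; the topic must not have extra levels
    return len(sub_levels) == len(topic_levels)


topic = "order/init/1.1/icecream/udders/rumraisin/sg/711"
print(topic_matches("order/>", topic))                  # True
print(topic_matches("order/*/1.1/*/*/*/*/711", topic))  # True
print(topic_matches("order/init/1.2/>", topic))         # False
```

Because the check only compares topic levels, subscribers never need to open the payload to decide whether an event is relevant to them.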

Publishers and subscribers talk to the event broker using standards-based APIs and protocols such as REST, JMS, AMQP, and MQTT, across multiple programming languages. Multiple event brokers can be networked together to create an event mesh that spans on-premises, cloud, and edge deployments for distributed event streaming. With this basic foundation in place, let’s look at how to event-enable each of the three categories of applications.

Event-Driven Applications

Event-driven applications can publish and subscribe to events, and are topic- and taxonomy-aware. They are real-time and responsive, and they use the event mesh for event routing, eventual consistency, and deferred execution.

It’s important to put an event topic taxonomy in place early, so that event-driven microservices publish and subscribe to events in conformance with it. When events are published with a fine-grained topic, the taxonomy metadata makes filtering and event routing (and even throttling and load balancing) super easy. The event mesh provides these topic routing capabilities, rather than relying on static REST URLs, queues, or static Kafka topics, which push the responsibility of filtering onto the client and often cause sequencing and security concerns.

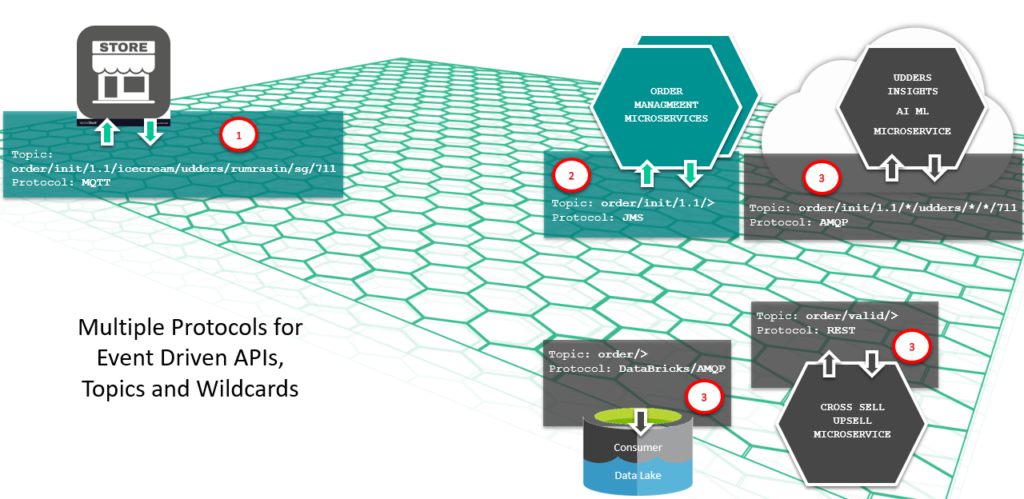

Note the use of wildcards (*, >) in the subscriptions. The diagram shows a simplified order management use case with synchronous microservices (in green: inline, the end client waits for them) and asynchronous microservices (in black: after the fact, deferred execution).

For example, in this flow, new orders of my favorite ice cream (Udders) are submitted by the event-driven point of sale system in the retail store. Note that the orders are published on MQTT topics that carry metadata.

The taxonomy is {event}/{action}/{version}/{category}/{brand}/{product}/{country}/{source}, and the example here is order/init/1.1/icecream/udders/rumraisin/sg/711.
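Capturing the taxonomy in code helps publishers build topics consistently instead of concatenating strings by hand. The Python sketch below is one way to do that under stated assumptions: the `OrderTopic` class is hypothetical, and it reads the fifth level of the example as the brand (udders) and the sixth as the product or flavor (rumraisin).

```python
from dataclasses import astuple, dataclass, fields


@dataclass(frozen=True)
class OrderTopic:
    """One field per taxonomy level, in topic order (hypothetical class)."""
    event: str
    action: str
    version: str
    category: str
    brand: str     # assumed: 'udders' in the example topic
    product: str   # assumed: 'rumraisin' in the example topic
    country: str
    source: str

    def __post_init__(self) -> None:
        # Reject levels that would break the '/'-separated structure
        for f in fields(self):
            value = getattr(self, f.name)
            if not value or "/" in value:
                raise ValueError(f"invalid {f.name} level: {value!r}")

    def to_topic(self) -> str:
        return "/".join(astuple(self))

    @classmethod
    def parse(cls, topic: str) -> "OrderTopic":
        levels = topic.split("/")
        expected = len(fields(cls))
        if len(levels) != expected:
            raise ValueError(f"expected {expected} levels, got {len(levels)}")
        return cls(*levels)


t = OrderTopic("order", "init", "1.1", "icecream", "udders", "rumraisin", "sg", "711")
print(t.to_topic())                          # order/init/1.1/icecream/udders/rumraisin/sg/711
print(OrderTopic.parse(t.to_topic()).brand)  # udders
```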

You can create any taxonomy you like – it’s just a convention. The order management microservice uses a wildcard (>) to subscribe to all version 1.1 orders, and it’s load balanced. The AI/ML, data lake, and cross-sell microservices are “tapping the event wireline” and also receive a filtered stream of order events. For example, the “Udders insights” microservice only cares about orders from 7-11 stores (the * in its topic subscription), while the data lake wants all orders (order/>).
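The subscriptions described above can be simulated in a few lines to show fan-out and load balancing side by side. This is a hypothetical in-memory `Router`, not how an event mesh is implemented: it compiles each filter into a regular expression (assuming `*` matches one level and a trailing `>` one or more), delivers every event to each matching subscription, and rotates round-robin among instances that share a subscription. The exact filters in the diagram may differ; the ones used here are assumptions.

```python
import re
from collections import defaultdict


def filter_to_regex(subscription: str) -> "re.Pattern[str]":
    """Compile a topic filter: '*' = one level, trailing '>' = one or more levels."""
    levels = subscription.split("/")
    parts = []
    for i, level in enumerate(levels):
        if level == "*":
            parts.append("[^/]+")
        elif level == ">" and i == len(levels) - 1:
            parts.append(".+")
        else:
            parts.append(re.escape(level))
    return re.compile("/".join(parts))


class Router:
    """Fan each event out to every matching subscription; when several
    instances share one subscription, deliver to one of them round-robin."""

    def __init__(self):
        self._subs = []                # (compiled filter, instance names)
        self._next = defaultdict(int)  # subscription index -> next instance

    def subscribe(self, subscription, *instances):
        self._subs.append((filter_to_regex(subscription), list(instances)))

    def publish(self, topic):
        delivered = []
        for idx, (pattern, instances) in enumerate(self._subs):
            if pattern.fullmatch(topic):
                n = self._next[idx]
                delivered.append(instances[n % len(instances)])
                self._next[idx] = n + 1
        return delivered


router = Router()
# Hypothetical subscriptions modeled on the diagram
router.subscribe("order/init/1.1/>", "order-mgmt-1", "order-mgmt-2")  # load balanced
router.subscribe("order/init/1.1/icecream/udders/*/*/711", "udders-insights")
router.subscribe("order/>", "data-lake")

topic = "order/init/1.1/icecream/udders/rumraisin/sg/711"
print(router.publish(topic))  # ['order-mgmt-1', 'udders-insights', 'data-lake']
print(router.publish(topic))  # ['order-mgmt-2', 'udders-insights', 'data-lake']
```

Adding the data lake or the insights service needs no change to the publisher or to the order management service; each simply registers its own filter.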

You can understand how topic subscriptions work in more detail here. Having a good taxonomy hugely simplifies and loosely couples microservices (good old pub/sub), rather than coupling them with static URLs or topics (the REST or Kafka approach).

API-Ready Applications

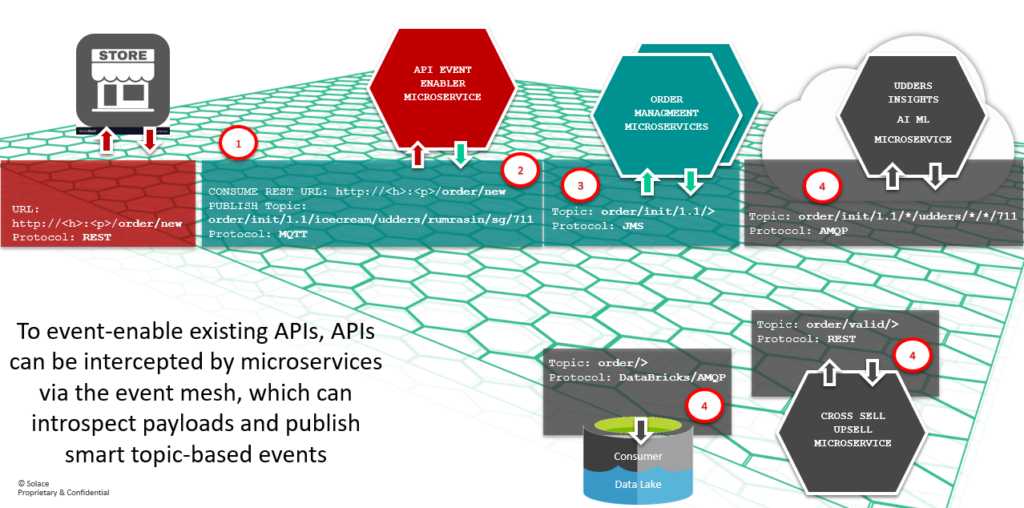

The real power of event-driven architecture comes from event routing by topics and wildcards. An API-ready application may not be able to publish events to the relevant topic, but it can send messages through an API. In this case, an adapter microservice can subscribe to the “raw” API (an event mesh can convert REST APIs to topics), inspect the payload, and republish the message to appropriate topics derived from the payload contents. This approach works better than content-based routing, which requires payloads to be governed strictly, down to their semantics, and that is not always practical.

As you can see in the diagram above, the microservice in red intercepts the “raw” order event published by the retail store. Note the difference between the REST URL in the red rectangle (http://<h>:<p>/order/new) and the MQTT topic published by the event-driven point of sale system in the previous section (order/init/1.1/icecream/udders/rumraisin/sg/711). To leverage event routing, we need to translate this raw URL into good metadata. So we introduce an interceptor microservice (in red) that subscribes to the raw topic, reads the contents, and republishes the event on another topic with all the metadata. The subsequent steps are unchanged and stay loosely coupled. This shows how new channels, like an API-based eCommerce channel, can be introduced with agility.
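The interceptor’s job is a mapping from payload fields to topic levels. A minimal Python sketch, assuming the raw eCommerce order is JSON with hypothetical `category`, `brand`, `product`, `country`, and `store` fields, and defaulting the version level to 1.1; the actual republish call depends on your broker’s client API and is left out.

```python
import json


def _level(value) -> str:
    """Normalize a payload value into a single lowercase topic level."""
    level = str(value).strip().lower()
    if not level or "/" in level:
        raise ValueError(f"invalid topic level: {value!r}")
    return level


def intercept(raw_body: str):
    """Map a raw order (as posted to /order/new) to (topic, payload).

    Payload field names and the default version are hypothetical.
    """
    order = json.loads(raw_body)
    levels = ["order", "init", order.get("version", "1.1")] + [
        order[key] for key in ("category", "brand", "product", "country", "store")
    ]
    topic = "/".join(_level(v) for v in levels)
    return topic, order


raw = ('{"category": "IceCream", "brand": "Udders", "product": "RumRaisin", '
       '"country": "SG", "store": "711", "qty": 2}')
topic, payload = intercept(raw)
print(topic)  # order/init/1.1/icecream/udders/rumraisin/sg/711
# A real interceptor would now republish `payload` on `topic` via its broker client.
```

Doing this translation once, at the edge, is what lets every downstream subscriber keep filtering on topics instead of inspecting payloads.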

Legacy Applications

You’ll probably have quite a few legacy systems that are not even API-enabled. Because most business processes consume data from or contribute data to these legacy systems, you’ll need to determine how to integrate them with your event mesh. Are you going to poll the system? Invoke it via adapters when there is a request for data or updates? Or offramp events and cache them externally? In any case, you’ll need to figure out how to event-enable legacy systems that don’t even have an API to talk to.
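If you choose polling, one simple approach is to compare successive snapshots of a table and emit an event per difference. The Python sketch below assumes each snapshot is a dict from primary key to row; the `customer` entity and the `{entity}/{change}/{key}` topic layout are hypothetical placeholders, not part of the taxonomy above.

```python
def diff_snapshots(previous: dict, current: dict, entity: str = "customer") -> list:
    """Compare two polled snapshots (primary key -> row) and return
    (topic, row) change events. The '{entity}/{change}/{key}' topic
    layout is a hypothetical example."""
    events = []
    for key, row in current.items():
        if key not in previous:
            events.append((f"{entity}/created/{key}", row))
        elif previous[key] != row:
            events.append((f"{entity}/updated/{key}", row))
    for key, row in previous.items():
        if key not in current:
            events.append((f"{entity}/deleted/{key}", row))
    return events


before = {1: {"name": "Ann", "tier": "gold"}, 2: {"name": "Bo", "tier": "silver"}}
after = {1: {"name": "Ann", "tier": "platinum"}, 3: {"name": "Cy", "tier": "gold"}}
for topic, row in diff_snapshots(before, after):
    print(topic, row)
# customer/updated/1 {'name': 'Ann', 'tier': 'platinum'}
# customer/created/3 {'name': 'Cy', 'tier': 'gold'}
# customer/deleted/2 {'name': 'Bo', 'tier': 'silver'}
```

Polling only sees the net change between two polls, and diffing full snapshots gets expensive as tables grow; log-based change data capture avoids both, which is why it is usually preferred when the database supports it.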

The key to accommodating legacy systems is identifying them early and getting to work on them as quickly as possible.

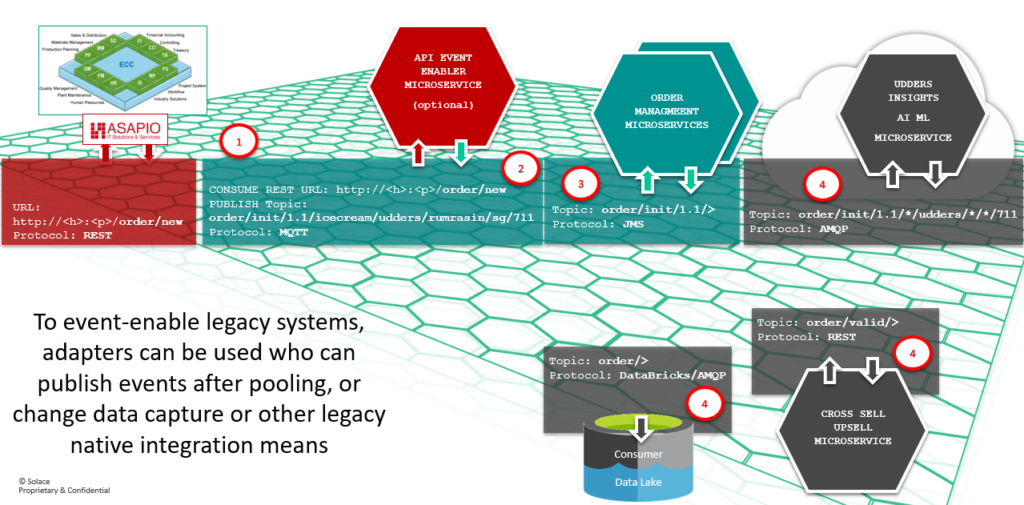

Legacy applications (like databases, file systems, mainframe-resident applications, and SAP ECC systems) usually can’t expose functionality via modern APIs or invoke APIs, but they do have drivers and adapters. One way to event-enable such systems is with legacy adapters that understand how to communicate with the legacy system. For example, a database adapter (JDBC, change data capture) or a file adapter (batch to stream) can read the system-of-record content and then publish data to appropriate topics based on that content. Similarly, to event-enable SAP ERP Central Component (ECC), you can use the ASAPIO Cloud Integrator.

As you can see in the diagram above, ASAPIO sits as an ABAP application on SAP ECC, detects changes, and publishes them to the event mesh over REST or MQTT. If the adapter does not publish smart topics, we can combine it with the API pattern from the previous section: the interceptor microservice (in red) reads the payload and publishes another event to a smarter topic that follows the taxonomy. The rest of the microservices choreography is not impacted.
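A file adapter that turns a batch export into a stream can be sketched in Python as follows. It assumes a CSV file whose hypothetical column names map onto the order taxonomy, uses a row-number checkpoint so a restart does not republish rows, and takes `publish` as a plain callback standing in for a real broker client. The sample rows are made up.

```python
import csv
import io


def stream_rows(batch_file, start_after=0):
    """Lazily yield (row_number, row) from a CSV batch file, skipping rows
    that an earlier run already published (a simple resume checkpoint)."""
    for row_number, row in enumerate(csv.DictReader(batch_file), start=1):
        if row_number > start_after:
            yield row_number, row


def file_adapter(batch_file, publish, start_after=0):
    """Publish each new row on a topic derived from its content and
    return the new checkpoint. Column names are hypothetical."""
    checkpoint = start_after
    for row_number, row in stream_rows(batch_file, start_after):
        topic = "/".join(["order", "init", "1.1"] + [
            row[col] for col in ("category", "brand", "product", "country", "store")
        ])
        publish(topic, row)
        checkpoint = row_number  # a real adapter would persist this durably
    return checkpoint


batch = (
    "category,brand,product,country,store,qty\n"
    "icecream,udders,rumraisin,sg,711,2\n"
    "icecream,udders,rumraisin,sg,store42,1\n"
)
published = []
checkpoint = file_adapter(io.StringIO(batch), lambda topic, row: published.append(topic))
print(checkpoint, published[0])  # 2 order/init/1.1/icecream/udders/rumraisin/sg/711
# Re-running from the checkpoint publishes nothing new
print(file_adapter(io.StringIO(batch), lambda t, r: published.append(t), checkpoint))  # 2
print(len(published))  # 2
```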

It’s important to remember that APIs and events are two strategic pillars that should co-exist.

As you standardize your event-driven applications and interactions, consider using AsyncAPI, whose goal is to enable architects and developers to fully specify an application’s event-driven interface.
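To give a flavor of what AsyncAPI captures, here is a minimal AsyncAPI 2.6.0 document for the point of sale order channel, built as a Python dict and printed as JSON. The title, operation and message names, payload fields, and the parameter names for the topic levels (category, brand, product, country, source) are assumptions for illustration; check the AsyncAPI specification before relying on any detail.

```python
import json


def order_channel_spec() -> dict:
    """Build a minimal AsyncAPI 2.6.0 document for the order-init channel."""
    params = ["category", "brand", "product", "country", "source"]
    channel = "order/init/1.1/" + "/".join("{" + p + "}" for p in params)
    return {
        "asyncapi": "2.6.0",
        "info": {"title": "Point of sale orders", "version": "1.1.0"},
        "channels": {
            channel: {
                # Each {level} in the channel name is declared as a parameter
                "parameters": {p: {"schema": {"type": "string"}} for p in params},
                # AsyncAPI 2.x: 'subscribe' describes messages this
                # application sends, i.e. what others can subscribe to
                "subscribe": {
                    "operationId": "publishOrderInit",
                    "message": {
                        "name": "OrderInit",
                        "payload": {
                            "type": "object",
                            "properties": {
                                "orderId": {"type": "string"},
                                "qty": {"type": "integer"},
                            },
                        },
                    },
                },
            }
        },
    }


print(json.dumps(order_channel_spec(), indent=2))
```

AsyncAPI 3.0 restructures operations around explicit send and receive actions, so a 3.x document would look different.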

Go Cloud Native Where You Can, But Stay Open to a Hybrid Approach

You can’t expect to have all of your events generated or processed in the cloud. To ignore on-premises systems would be a glaring oversight. With an event mesh implemented, it doesn’t matter where your data is, so you can more easily take a hybrid approach.

By using standardized protocols, taxonomy and an event mesh, you are free to run business logic wherever you want.

Learn more about implementing event-driven architecture in my next blog “Six + 1 Steps to Implement Event-Driven Architecture.”


Sumeet Puri