Summary: The capability to event-enable and stream SAP data across the distributed enterprise is becoming increasingly important, but most enterprises are not well equipped to do so. A solution is to use integration technology that can be embedded in SAP systems to emit events to an event broker; a network of event brokers can then connect across environments to create an event mesh. The event mesh enables events to flow between SAP and non-SAP applications, on premises and in the cloud, dynamically and in real time.

Why the imperative to event-enable SAP data?

“Event-driven architecture (EDA) is a design paradigm in which a software component executes in response to receiving one or more event notifications. EDA is more loosely coupled than the client/server paradigm because the component that sends the notification doesn’t know the identity of the receiving components at the time of compiling.” —Gartner

Let’s start with why event-driven design patterns and event-driven architecture (EDA) are becoming increasingly important to enterprises everywhere:

Customers (both external and internal to the enterprise) expect and demand to be able to interact with the business in real time.

The promise of an event-driven architecture is to make businesses more real-time in their operations and customer interactions. This is done by enabling every software component in the system to publish and subscribe to event notifications in real time (where an ‘event’ is a change of state: data is created, modified, or deleted).
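The publish/subscribe model described above can be illustrated with a minimal, in-process Python sketch. It is only an analogy for what an event broker does (there is no network, persistence, or delivery guarantee here), and every name in it, such as `ChangeEvent`, `ChangeKind`, and `InMemoryBus`, is hypothetical. What it shows is the decoupling: the publisher hands a change-of-state event to the bus and never learns which components, if any, receive it.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Dict, List


class ChangeKind(Enum):
    """The three kinds of state change the text calls an 'event'."""
    CREATED = "created"
    MODIFIED = "modified"
    DELETED = "deleted"


@dataclass
class ChangeEvent:
    """Hypothetical event shape: what changed, its key, and how it changed."""
    object_type: str
    key: str
    kind: ChangeKind
    data: Dict[str, object] = field(default_factory=dict)


Handler = Callable[[ChangeEvent], None]


class InMemoryBus:
    """Toy publish/subscribe bus; subscribers register by object type."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = {}

    def subscribe(self, object_type: str, handler: Handler) -> None:
        self._subscribers.setdefault(object_type, []).append(handler)

    def publish(self, event: ChangeEvent) -> int:
        """Deliver the event to every subscriber of its type; return the count.

        The publisher does not know, and does not need to know, who they are.
        """
        handlers = self._subscribers.get(event.object_type, [])
        for handler in handlers:
            handler(event)
        return len(handlers)


# Usage: two downstream systems react to the same master data change.
bus = InMemoryBus()
received: List[str] = []
bus.subscribe("material", lambda e: received.append(f"production: {e.key} {e.kind.value}"))
bus.subscribe("material", lambda e: received.append(f"finance: {e.key} {e.kind.value}"))

delivered = bus.publish(
    ChangeEvent("material", "MAT-1001", ChangeKind.MODIFIED, {"net_weight_g": 500})
)
print(delivered, received)
```

Adding a third consumer means one more `subscribe` call; the publishing side does not change, which is the loose coupling the Gartner definition describes.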

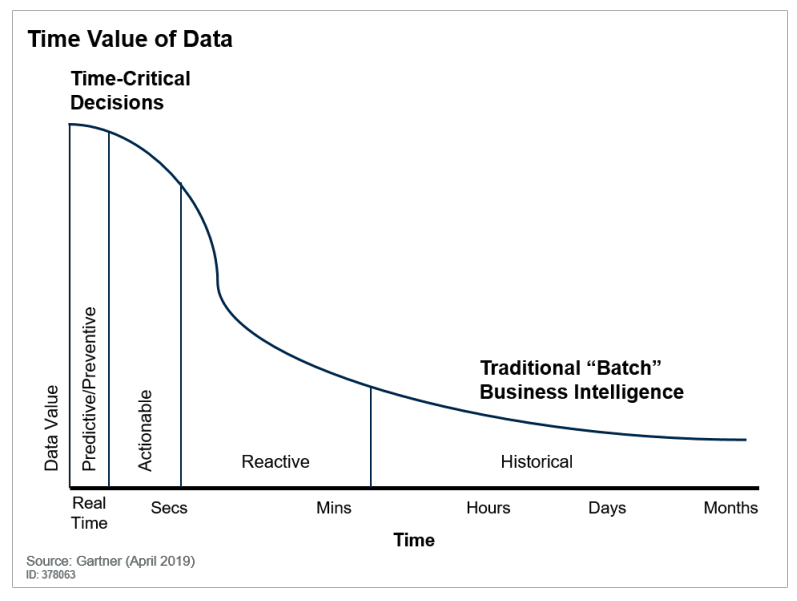

In Gartner’s terms, “EDA delivers some critical aspects of digital business: real-time intelligence and responsiveness, extensibility of applications, global scale, and ‘lossless’ business activity analysis.” The value of data diminishes over time, and businesses that want to make the most of it will put it in motion the instant it is created. You can read more about the business case for event-driven architecture here.

EDA use cases for SAP customers

Specifically, for enterprises with SAP estates, a number of use cases or projects that may already be on your radar would benefit from an event-driven design paradigm. Here are a few:

- Smart master data distribution: event-enable SAP master data distribution so that downstream applications are notified about relevant master data changes in real-time. Example: a decision is made to increase the quantity of raisins in a standard cereal box. Event notifications are instantly triggered and streamed to downstream applications, touching systems and processes across production, logistics, finance, marketing, sales, etc.

- Hybrid cloud event streaming: streaming events from legacy systems on premises (such as SAP ECC) to cloud data lakes, cloud services, and SaaS (e.g., you want to stream manufacturing quality notifications from an ECC quality management system to a cloud analytics engine to prioritize and speed up quality issue investigations).

- Enabling Data-as-a-Service (DaaS) across lines of business: making events generated across different data centers, factories, warehouses, and cloud applications available on demand to any application in your distributed enterprise (e.g., you want to analyze early sales results in the UK in real time to forecast the effectiveness of a marketing campaign before it is rolled out in North America).

Each of these use cases (and there are many more) is best served by an event-driven architecture. But adopting an EDA, particularly one that includes legacy SAP estates on premises, can be challenging.

Challenges implementing EDA for SAP data

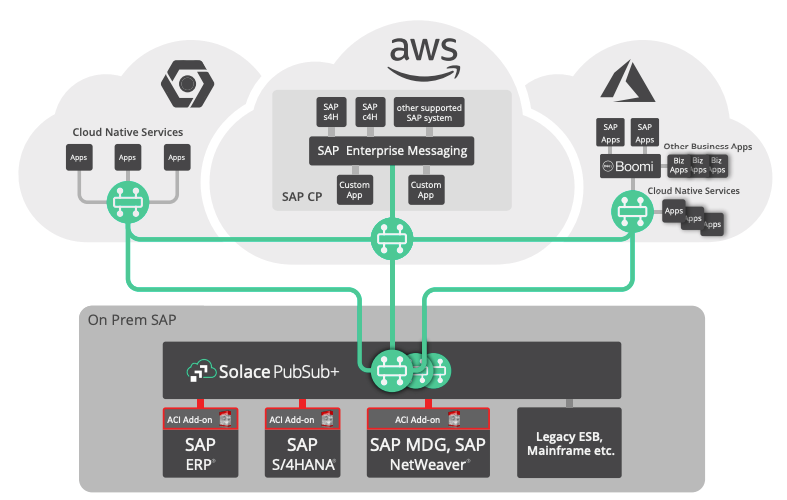

In the cloud, event-driven data movement between SAP applications can be enabled with SAP Enterprise Messaging, SAP’s core event streaming fabric. Most SAP S/4HANA modules can publish events to SAP Enterprise Messaging in the cloud.

On premises, however, extracting and event-enabling SAP data from ERP (e.g., ECC modules for Materials Management and Sales & Distribution) can be much more difficult. You might think to use SAP middleware like PI or PO to do this, but these technologies were not designed to support EDA:

- PI/PO is mostly used for polling (request-reply); it would be costly and resource intensive to set up PI/PO as a listener for SAP events (you would also likely need to keep the connection to the SAP module/object open);

- PI/PO is more of an adapter than a message broker and as such there is no notion of topics/topic routing, which is critical to efficiently stream events (i.e., get only the right event to the right application at the right time).
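To illustrate why topic routing matters, here is a simplified Python sketch of hierarchical topic matching. It is loosely modeled on the `*` (one level) and `>` (one or more trailing levels) wildcards used in Solace topic subscriptions, but it is not the broker's actual matching algorithm and ignores details of the real syntax; the `sap/ecc/...` topic names and the application names are hypothetical.

```python
from typing import Dict, List


def topic_matches(subscription: str, topic: str) -> bool:
    """Return True if `topic` matches `subscription` under simplified rules:

    * levels are separated by '/'
    * a level consisting only of '*' matches exactly one topic level
    * '>' as the final level matches one or more remaining topic levels
    """
    sub_levels = subscription.split("/")
    topic_levels = topic.split("/")
    for i, sub in enumerate(sub_levels):
        if sub == ">" and i == len(sub_levels) - 1:
            # Trailing '>' needs at least one topic level left to consume.
            return len(topic_levels) > i
        if i >= len(topic_levels):
            return False  # subscription is deeper than the topic
        if sub != "*" and sub != topic_levels[i]:
            return False  # literal level mismatch
    # All subscription levels matched; the topic must not be any deeper.
    return len(sub_levels) == len(topic_levels)


def route(topic: str, subscriptions: Dict[str, List[str]]) -> List[str]:
    """Return (sorted) the applications with at least one matching subscription."""
    return sorted(
        app for app, subs in subscriptions.items()
        if any(topic_matches(s, topic) for s in subs)
    )


subscriptions = {
    "analytics": ["sap/ecc/material/>"],        # everything about materials
    "warehouse": ["sap/*/material/changed/*"],  # material changes from any system
    "billing": ["sap/ecc/salesorder/>"],        # only sales orders
}
print(route("sap/ecc/material/changed/MAT-1001", subscriptions))
```

With a hierarchy like this, each application subscribes only at the level of detail it needs, and the broker, not the publisher, decides who receives each event.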

Alternatively, you might try to leverage existing ESB/messaging technology like TIBCO BW/EMS or IBM WebSphere/MQ, but in these cases you are limited to accessing data that SAP APIs expose, and in the manner (request-reply, batch processing) that the APIs allow.

In both cases, even if you somehow managed to event-enable SAP systems in a data center, there’s the added challenge of efficiently streaming those events to the many applications that might be interested in receiving them. The SAP and non-SAP applications may run in other data centers, plants, or in clouds, all of which can be globally distributed.

Then there are the questions that might not be pressing for you today but are likely right around the corner: How do you manage and optimize the flow of events in your system? How do you enable developers to design and discover events? How do you efficiently manage the life cycle of events so you can reuse them, monitor their use, and deprecate them when they are no longer needed?

So, in trying to adopt an EDA that incorporates SAP data, you’re really facing challenges on multiple fronts:

- Event-enabling SAP data on premises (i.e., generating event notifications on new or changed data);

- Efficiently streaming event notifications across your distributed enterprise; and,

- Effectively managing and optimizing your EDA.

Below, I’ll suggest a solution to all three challenges, but I’ll address the second challenge first, because that really gets to the heart of enabling EDA for the distributed enterprise.

Streaming SAP events across your distributed enterprise, with an event mesh

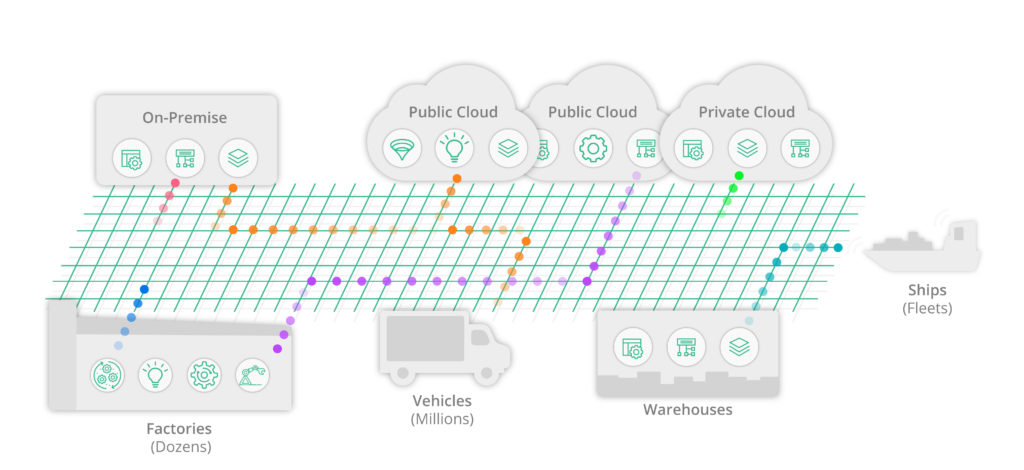

An event mesh is a foundational layer of technology for event streaming across the distributed enterprise. It is an architectural layer that allows events from one application to be dynamically routed to, and received by, any other application, no matter where the applications are deployed (on premises, private cloud, or public cloud).

You create an event mesh by deploying and connecting event brokers (modern messaging middleware) across all your environments – wherever you have applications that need access to events created throughout the enterprise.
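The following Python sketch illustrates the idea of an event mesh: brokers in different environments are linked, and an event published on one broker reaches subscribers attached to any other. It is a toy under stated assumptions: the `Broker` class and broker names are hypothetical, subscriptions are plain topic prefixes, and each event is flooded once to every reachable broker (a visited set prevents loops). Production brokers typically exchange subscription information so that events cross a link only when something downstream is interested.

```python
from typing import Callable, Dict, List, Set

Handler = Callable[[str, dict], None]


class Broker:
    """Toy event broker that can be linked to peer brokers to form a mesh."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.peers: List["Broker"] = []
        # Local subscriptions: topic prefix -> handlers of locally connected apps.
        self.local_subs: Dict[str, List[Handler]] = {}

    def link(self, other: "Broker") -> None:
        """Connect two brokers in both directions."""
        self.peers.append(other)
        other.peers.append(self)

    def subscribe(self, topic_prefix: str, handler: Handler) -> None:
        self.local_subs.setdefault(topic_prefix, []).append(handler)

    def publish(self, topic: str, payload: dict) -> int:
        """Publish into the mesh; return the total number of deliveries."""
        return self._route(topic, payload, visited=set())

    def _route(self, topic: str, payload: dict, visited: Set[str]) -> int:
        visited.add(self.name)
        delivered = 0
        # Deliver to applications attached to this broker.
        for prefix, handlers in self.local_subs.items():
            if topic.startswith(prefix):
                for handler in handlers:
                    handler(topic, payload)
                    delivered += 1
        # Forward once to each peer not yet visited (prevents routing loops).
        for peer in self.peers:
            if peer.name not in visited:
                delivered += peer._route(topic, payload, visited)
        return delivered


# Usage: an on-premises broker, a cloud broker and a factory broker in a triangle.
on_prem, cloud, factory = Broker("on-prem"), Broker("cloud"), Broker("factory")
on_prem.link(cloud)
cloud.link(factory)
factory.link(on_prem)

log: List[str] = []
cloud.subscribe("sap/ecc/", lambda t, p: log.append("cloud-analytics"))
factory.subscribe("sap/ecc/material/", lambda t, p: log.append("factory-mes"))

total = on_prem.publish("sap/ecc/material/changed/MAT-1001", {"plant": "1000"})
print(total, log)
```

The publishing application only talks to its local broker; where the subscribers live is handled entirely by the mesh.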

Solace is a leading proponent of the event mesh concept, which Gartner has highlighted as a “digital business platform priority”.[i] Solace PubSub+ Event Broker is available as run-anywhere software, purpose-built hardware, and a managed cloud service.

Event mesh in action: an event mesh is an architectural layer that enables events to be transmitted in real time between all your distributed technologies.

All of this works like a dream as long as you have a way to connect your application to a local event broker where it can register for events it wants to publish or receive. But as noted above, there are numerous challenges to enabling this for SAP applications on premises. You will need some form of integration technology to get on-prem SAP system events onto the mesh.

Getting SAP events onto the mesh

ASAPIO Cloud Integrator (ACI) is an “add-on” for SAP systems that enables data integration between on-premises and cloud-based applications. Unlike SAP PI/PO or legacy ESBs/messaging, ACI is not middleware; it runs embedded on SAP systems and retrieves data natively. With ACI you can set up native event triggers on an SAP object and push the events to an event broker or event mesh in real time.

ACI uses SAP “change pointers” to extract events from SAP systems as they occur. Anything that SAP change pointers support can be emitted as events by ECC, including master data events, PLM updates, delivery notifications, and work orders. Here’s a visual representation of ACI and Solace PubSub+ event brokers at work:

You can learn more about ACI and how it works with PubSub+ Event Broker here.
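The change-pointer approach can be sketched in Python as follows. ACI itself runs inside the SAP system, so this is not its implementation; it only illustrates the general pattern of turning low-level change records into business events. The `ChangePointer` fields, the `I`/`U`/`D` change codes, and the topic layout are all assumptions made for the example. Several field-level changes to the same object are collapsed into one event, so downstream consumers receive one notification per changed business object rather than one per field.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Assumed change-indicator codes for this sketch (insert / update / delete).
ACTIONS = {"I": "created", "U": "changed", "D": "deleted"}


@dataclass
class ChangePointer:
    """Simplified, hypothetical stand-in for one change-pointer record."""
    system: str       # source system, e.g. "ECC1"
    object_type: str  # business object, e.g. "material"
    object_key: str   # e.g. a material number
    change_id: str    # one of the ACTIONS keys
    field_name: str   # the field that changed


def pointers_to_events(pointers: List[ChangePointer]) -> List[Tuple[str, Dict]]:
    """Collapse pointers per (system, object, key, action) into (topic, payload) events."""
    grouped: Dict[Tuple[str, str, str, str], List[str]] = {}
    for p in pointers:
        action = ACTIONS.get(p.change_id)
        if action is None:
            continue  # skip codes this sketch does not understand
        group_key = (p.system, p.object_type, p.object_key, action)
        grouped.setdefault(group_key, []).append(p.field_name)

    events: List[Tuple[str, Dict]] = []
    for (system, obj, key, action), fields in grouped.items():
        topic = f"sap/{system.lower()}/{obj}/{action}/{key}"
        events.append((topic, {"key": key, "changed_fields": sorted(set(fields))}))
    return events


pointers = [
    ChangePointer("ECC1", "material", "MAT-1001", "U", "GROSS_WEIGHT"),
    ChangePointer("ECC1", "material", "MAT-1001", "U", "NET_WEIGHT"),
    ChangePointer("ECC1", "material", "MAT-2002", "I", "KEY"),
]
for topic, payload in pointers_to_events(pointers):
    print(topic, payload)
```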

Managing your event-driven architecture: event portal and event mesh management

It likely won’t be until you’ve started to deploy EDA for select use cases that you’ll run into some basic questions and roadblocks that slow broader adoption. Questions like:

- How can you enable other application teams across the enterprise to discover and leverage the events your applications are generating?

- How can you make it easier to deploy, audit and manage your event-streaming infrastructure (event brokers/event mesh)?

- How can you make it easier for developers to design event-driven applications and microservices?

The fact of the matter is that adopting an EDA that extends beyond one or a few select use cases can be challenging today. The kind of tooling and infrastructure that supports the status quo (REST/API-based architecture) is still lacking for EDA.

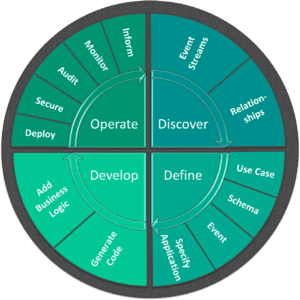

In his post, Event-Driven Architecture Demands Event Lifecycle Management, Senior Architect and EDA guru Jonathan Schabowsky recommends four categories of tools/capabilities that would enable faster and more efficient adoption of EDA: tools for (1) event discovery, (2) event definition, (3) event development, and (4) operations.

In a nutshell, Jonathan says that enterprises need tools and infrastructure similar to those associated with API management, but designed specifically for the event-driven, asynchronous world. Things like event brokers (which act like an API gateway), an event portal (similar to a service portal, but for events), and code generators make the creation of event-driven applications faster and easier.

Solace PubSub+ Platform includes components to address many of these needs, including an event portal and event mesh management solutions. PubSub+ Event Portal gives developers and architects tools to design, describe, and discover events within their systems. It shows the relationships between applications and events, making event-driven applications and microservices easier to design, deploy, and evolve. Download this datasheet to learn more or check out this demo video.
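As a rough illustration of what event lifecycle management involves, here is a toy event catalog in Python covering design (registering a definition), discovery, tracking producer/consumer relationships, and deprecation. It is a hypothetical sketch, not the PubSub+ Event Portal or its API; the `EventCatalog` and `EventDefinition` names and fields are invented for the example.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class EventDefinition:
    """Hypothetical description of one event type in the catalog."""
    name: str
    topic: str
    version: str
    owner: str
    description: str
    deprecated: bool = False
    producers: Set[str] = field(default_factory=set)
    consumers: Set[str] = field(default_factory=set)


class EventCatalog:
    """Toy registry: design, discovery, reuse tracking and deprecation."""

    def __init__(self) -> None:
        self._events: Dict[str, EventDefinition] = {}

    def register(self, event: EventDefinition) -> None:
        if event.name in self._events:
            raise ValueError(f"event {event.name!r} already registered")
        self._events[event.name] = event

    def add_producer(self, event_name: str, app: str) -> None:
        self._events[event_name].producers.add(app)

    def add_consumer(self, event_name: str, app: str) -> None:
        self._events[event_name].consumers.add(app)

    def discover(self, keyword: str) -> List[str]:
        """Names of non-deprecated events whose name or description mentions keyword."""
        kw = keyword.lower()
        return sorted(
            e.name for e in self._events.values()
            if not e.deprecated and (kw in e.name.lower() or kw in e.description.lower())
        )

    def deprecate(self, event_name: str) -> Set[str]:
        """Mark an event deprecated and return the consumers that must migrate."""
        event = self._events[event_name]
        event.deprecated = True
        return set(event.consumers)


catalog = EventCatalog()
catalog.register(EventDefinition(
    "MaterialChanged", "sap/ecc1/material/changed/*", "1.0", "MDM team",
    "Material master record updated in ECC"))
catalog.register(EventDefinition(
    "SalesOrderCreated", "sap/ecc1/salesorder/created/*", "1.0", "SD team",
    "New sales order created in ECC"))
catalog.add_producer("MaterialChanged", "ECC1 (via ACI)")
catalog.add_consumer("MaterialChanged", "cloud-analytics")

print(catalog.discover("ecc"))                # both events mention ECC
print(catalog.deprecate("MaterialChanged"))   # consumers that must migrate
print(catalog.discover("ecc"))                # deprecated event no longer offered
```

Returning the consumer set from `deprecate` reflects the point made above: knowing which applications use an event is what makes it safe to retire.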

Conclusion: Steps to Event-Enable Your SAP Data

Event-driven design patterns and architectures are becoming increasingly important to enterprises that want to enable real-time B2B and B2C interactions. But adopting EDA is not easy today, particularly for enterprises looking to incorporate legacy SAP estates. Event mesh, event brokers, and embeddable integration technology are tools that can help accelerate EDA adoption for any enterprise, including those powered by SAP.

You may be wondering where to start.

Denis King, Solace’s Chief Executive Officer, recommends six steps:


1. Identify SAP systems and processes that would most benefit from event enablement. Start with a single, valuable use case.
   - Which business processes driven by SAP data have bottlenecks due to batch extraction?
   - Which LOBs/users does this affect (internal and/or external)?
   - What would be the impact of accelerating/improving these business processes?
   - How are SAP modules and SAP business objects involved?
2. Enable events to be emitted from the relevant SAP objects.
   - Install ASAPIO Cloud Integrator.
   - Use ASAPIO Cloud Integrator and SAP change pointers to trigger events based on changes in SAP business objects.
3. Push/publish events to PubSub+ Event Broker.
   - Sign up for a Solace PubSub+ Cloud account or download PubSub+ Event Broker: Software (free and paid versions are both available).
   - Use ASAPIO to define which SAP events are emitted to the event broker, and on which topic.
4. Connect event brokers across environments to create an event mesh.
   - Interested applications connected to the mesh can subscribe to events.
5. Connect interested applications to a local event broker.
   - Start streaming events.
6. Rinse and repeat. Extend your event mesh to other use cases and systems.
   - Add additional ECC systems with ease.
   - Connect IoT devices to the mesh.

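One of the steps above is to define which SAP events are emitted to the event broker, and on which topic. One common approach is a hierarchical naming convention that runs from general to specific, so that consumers can subscribe with wildcards at whatever level they need. The Python sketch below assumes a hypothetical `sap/<system>/<object>/<action>/<key>` layout; it is not an ASAPIO or Solace convention, just one possible scheme. It also replaces characters such as `/` and `*` inside a level so that data values cannot accidentally create extra levels or wildcards.

```python
import re
from typing import Optional

# Anything other than letters, digits, '_', '.', '-' is replaced inside a level,
# so values cannot add extra levels ('/') or act as wildcards ('*', '>').
_UNSAFE = re.compile(r"[^A-Za-z0-9_.-]")


def level(value: str) -> str:
    """Turn an arbitrary value into one safe topic level."""
    cleaned = _UNSAFE.sub("_", value.strip())
    if not cleaned:
        raise ValueError("a topic level must not be empty")
    return cleaned


def build_topic(system: str, obj: str, action: str, key: Optional[str] = None) -> str:
    """Build a topic using the hypothetical layout sap/<system>/<object>/<action>/<key>."""
    parts = ["sap", level(system).lower(), level(obj).lower(), level(action).lower()]
    if key is not None:
        parts.append(level(key))  # keep the key's original case
    return "/".join(parts)


print(build_topic("ECC1", "Material", "changed", "MAT-1001"))
print(build_topic("ECC1", "Sales Order", "created", "4711"))
print(build_topic("ECC1", "material", "changed", "A/B*"))
```

With this layout, a consumer interested in every material change from any system could subscribe with Solace-style wildcards to something like `sap/*/material/changed/>`, while another subscribes narrowly to a single system or object.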

In my next blog, I’ll provide more of the technical details on how to deploy PubSub+ and ASAPIO Cloud Integrator together to improve business processes.

[i] Source: Gartner, “The Key Trends in PaaS and Platform Architecture”, 28 February 2019, Yefim Natis, Fabrizio Biscotti, Massimo Pezzini, Paul Vincent.


Chris Wolski
