The Criticality of Real-Time Data in Today’s Business

In today’s business environment, simply integrating applications is no longer enough to make better decisions.

To stay ahead of their competitors, enterprises must create better customer experiences and quickly make smart decisions in response to shifting market conditions. To achieve those goals, they must move beyond simply getting information from point A to point B and into the realm of intelligently distributing exactly the information that various systems need, across environments and around the world, when they need it.

Consider these scenarios:

- A customer takes advantage of a sale and inventory in the Topeka distribution center suddenly drops from 1,000 to 0. How do you inform the manufacturing plant in China, the regional salesperson in Duluth, executive stakeholders in New York, and the enterprise data lake near Chicago? If another division in your enterprise can benefit from that information, how long will it take you to get it to them?

- A teenager sneaking out in Sao Paulo turns the ignition key on his parents’ Honda Civic. How can you immediately let the parents know so they can stop the car from starting?

- A senior citizen in Brooklyn fills a prescription at her local pharmacy that might conflict with a drug she is already taking. The pharmacy uses a service that instantly checks for interactions, but how do they ensure that they will not lose such crucial information if something happens to their system?

- After a spate of fraudulent transactions in Singapore, a credit card processing company needs to add some artificial intelligence to its fraud detection system. Will the added step in the approval process interrupt existing functionality and increase processing time? How long will it take to get it up and running?

The key in each of these scenarios is not only extracting every drop of information in a rapid, secure, reliable, agile fashion, but also being able to effectively get it where it needs to be without overwhelming your network or any individual systems with unnecessary information. Enterprises that make this leap hold a key advantage in the marketplace.

In the scenarios described earlier, the move from reactive to actionable to predictive data provides a better, safer, more enjoyable customer experience – except for the teenager in Sao Paulo, perhaps. But if accelerating to real-time and then continually innovating once you’re there were easy, more enterprises would do it. Here are the three things you must do to make your enterprise real-time:

- Handle massive amounts of new data generated by technologies like AI, IoT, and mobile computing without overwhelming backend systems;

- Instantly distribute information around the globe, and across cloud and on-premises environments;

- Quickly react to changing market conditions with innovative solutions that meet customer demands, comply with regulations, and keep you ahead of your competition.

As I will explain below, the integration platform as a service (iPaaS) approach on its own is not always able to do all of these things.

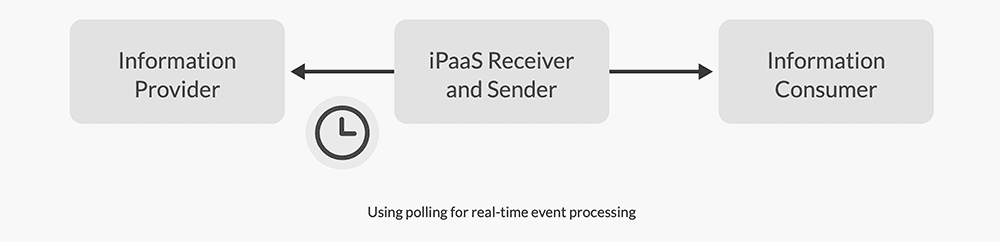

“Traditional iPaaS implementations rely on polling to detect changes, leaving significant changes unnoticed for seconds, minutes, or even hours. As consumers demand more from enterprises, those seconds negatively affect a user’s experience.”

Jesse Menning, Architect in the Office of the CTO at Solace

The Benefits of an iPaaS Solution

Many enterprises turn to iPaaS to accelerate their information distribution. The appeal of an iPaaS solution is obvious: a simple, standard, agile way to integrate information across silos – particularly software as a service (SaaS) applications. For example, it’s only natural to want Salesforce to know about orders coming out of SAP and SAP to know about accounts generated by Salesforce.

An iPaaS solution addresses modern integration challenges with a graphical, easy-to-use interface. With teams of developers spread across the globe, those challenges demand a lightweight, standardized, shared, simple and powerful toolset. They include situations where an enterprise must:

- Transform data from one application into the formats used by others;

- Enrich a message with information from a database, a file saved on a disk drive, or a lookup table;

- Route a message based on information in its payload;

- Connect to an application using a complex or unique protocol;

- Split a single message into many, or combine several into one; and,

- Manage multiple synchronous API definitions and expose them to both internal and external consumers.

The Challenges iPaaS Faces in the Area of Real-Time Data

iPaaS makes it easier and faster to integrate distributed applications. So why would real-time data be any different? These three requirements of real-time data are tricky, especially when using an iPaaS by itself:

- Handling bursts of activity without causing customer-facing delays or losing messages.

- Connecting globally dispersed assets, i.e., applications, devices and users.

- Quickly adapting to market conditions, threats and opportunities.

In the following sections, I dive a little deeper into each of these requirements and explain why they are difficult challenges to overcome with an iPaaS alone.

Traffic Surges Overwhelm Systems

Our teenager in Sao Paulo starting the car is certainly important to his parents, but people across the globe turn the ignition key in millions of cars every minute and expect their car to start. And the demands on infrastructure vary over the course of a day. While the number of cars starting at 3 am may be perfectly manageable, the start of rush hour can bring a tsunami of data all at once. This same principle affects how well all kinds of businesses can deal with bursts of activity: think of retailers who face bursty sales driven by time of day, seasonality, promotions and “going viral,” or utility companies that must satisfy wildly fluctuating demand for power or water as weather conditions and natural disasters affect their customers.

The need to process incoming events in real-time, even amid sudden bursts of data, can be challenging for an iPaaS. A synchronous API request or a static polling interval typically triggers an iPaaS process. In the case of a process invoked by a synchronous API request, dealing with traffic spikes within the structures of an iPaaS typically means increasing the number of processing threads available to handle the incoming flow of information. Because those threads must be provisioned for the peak, this often results in overallocated memory and processing power that sits idle for much of the day.
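Little's law gives a rough way to see the overallocation: the number of requests in flight equals the arrival rate multiplied by the time each request takes. The sketch below applies it to a thread-per-request model. The traffic rates and the 200 ms processing time are illustrative assumptions, not measurements of any product.

```python
def threads_needed(events_per_second: int, processing_ms: int) -> int:
    """Estimate concurrent requests with Little's law (L = arrival rate x time).

    In a thread-per-request model each in-flight request holds a thread,
    so this approximates the pool size needed to avoid turning work away.
    """
    # Integer ceiling division: a partially used thread still has to exist.
    return (events_per_second * processing_ms + 999) // 1000


# Hypothetical traffic profile for connected-car ignition events.
QUIET_RATE = 50          # events per second at 3 a.m. (assumed)
RUSH_HOUR_RATE = 5_000   # events per second at the start of rush hour (assumed)
PROCESSING_MS = 200      # how long one request holds a thread (assumed)

quiet = threads_needed(QUIET_RATE, PROCESSING_MS)
peak = threads_needed(RUSH_HOUR_RATE, PROCESSING_MS)
print(f"3 a.m.: {quiet} threads; rush hour: {peak} threads")
print(f"Share of a peak-sized pool idle at 3 a.m.: {1 - quiet / peak:.0%}")
```

Under these assumed numbers, a pool sized for rush hour (1,000 threads) leaves about 99% of its threads idle at 3 a.m., when 10 would do.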

Even if the iPaaS itself can handle the load, downstream systems may not be able to handle the uneven and uncertain stream of IoT data. As a result, the iPaaS may need to buffer traffic for downstream systems. In a traditional iPaaS architecture, that typically means holding threads open for an extended period, which is not a recommended practice for any integration solution.

Limited Reach Prevents Global Connectivity

The interconnected, global nature of today’s business landscape means that what happens in Topeka has a ripple effect that impacts systems around the world. If the information is stuck in Topeka or doesn’t get out quickly, the business might lose the chance to respond to a short-lived problem or opportunity. In this case, that could mean:

- Just-in-time factories in China devote production time to the wrong products;

- Sales personnel confuse customers by selling parts that are not available;

- Pricing applications lag the latest facts on the ground; and,

- Executives belatedly change marketing strategies in the region.

Traditional iPaaS implementations rely on polling to detect changes, leaving significant changes unnoticed for seconds, minutes, or even hours. As consumers demand more from enterprises, those seconds negatively affect a user’s experience.
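Polling delay is straightforward to quantify. A change is noticed only at the next poll, so the worst-case detection delay approaches the full polling interval. Below is a minimal sketch that assumes a fixed schedule starting at time zero and uses hypothetical change times.

```python
import math


def detection_delay(change_time: int, poll_interval: int) -> int:
    """Seconds between a change and the first poll that can see it.

    Assumes polls run at t = 0, poll_interval, 2 * poll_interval, ...
    and that a change made exactly at a poll time is picked up by that poll.
    """
    next_poll = math.ceil(change_time / poll_interval) * poll_interval
    return next_poll - change_time


POLL_INTERVAL = 300                 # five-minute polling schedule (assumed)
CHANGE_TIMES = [1, 150, 299, 300]   # seconds at which inventory changed (hypothetical)

delays = [detection_delay(t, POLL_INTERVAL) for t in CHANGE_TIMES]
print(delays)  # [299, 150, 1, 0]
```

A change made just after a poll waits almost the whole interval. If changes are spread evenly, the average wait is about half the interval. When an event broker pushes each change as it happens, this polling term disappears and only transport and processing time remain.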

iPaaS solutions that rely on REST API implementations struggle to move events and information to multiple datacenters (on-premises and in different clouds) and between suppliers and contractors. Either the iPaaS provider does not allow VPN connections due to security concerns or, if the iPaaS allows it, the networking creates headaches and brittle architectures.

Furthermore, iPaaS solutions usually force transactions to proceed in a step-by-step, point-to-point fashion. Instead of every application getting the information it needs simultaneously, the factory in China gets the information first, then the sales personnel, then additional applications down the line. The iPaaS itself also needs to keep track of the next step in the process, leading to a static, brittle architecture.
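Sequential delivery compounds delays. In a point-to-point chain, each consumer waits for every consumer ahead of it. With fan-out, each consumer waits only for its own delivery. The sketch below compares the two approaches using hypothetical per-consumer delivery times. It assumes that fan-out copies are sent in parallel and it ignores broker overhead.

```python
from itertools import accumulate

# Hypothetical time (ms) to deliver the Topeka inventory event to each consumer.
DELIVERY_MS = {
    "factory_china": 400,
    "sales_duluth": 150,
    "executives_new_york": 120,
    "data_lake_chicago": 80,
}


def sequential_arrival(delivery_ms: dict[str, int]) -> dict[str, int]:
    """Point-to-point chain: each consumer receives the event after all earlier ones."""
    return dict(zip(delivery_ms, accumulate(delivery_ms.values())))


def fan_out_arrival(delivery_ms: dict[str, int]) -> dict[str, int]:
    """Fan-out: every consumer gets its own copy at the same time."""
    return dict(delivery_ms)


seq = sequential_arrival(DELIVERY_MS)
fan = fan_out_arrival(DELIVERY_MS)
for name in DELIVERY_MS:
    print(f"{name:20} sequential: {seq[name]:4} ms  fan-out: {fan[name]:4} ms")
```

With these assumed figures, the data lake at the end of the chain waits 750 ms instead of 80 ms.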

Tight Coupling Hampers Innovation

Today’s microservice architectures can be composed of dozens of individual components. For instance, the credit card processing company in Singapore may call upon a complex array of functionality before the cash register makes the satisfying “bing” of approval. Adding artificial intelligence might help protect against fraud losses, but it also adds complexity to the testing process, decreases performance, and makes deployments more complex by increasing dependencies.

To aid agility, iPaaS products typically feature integrated source control and deployment capabilities.

Having these components as out-of-the-box solutions reduces the infrastructure required to start building integrations on an iPaaS. No more setting up a Git repository and configuring Jenkins. And most iPaaS products include the ability to call multiple sender processes from a single master process, which orchestrates business processes like a credit card approval in a more modular fashion.

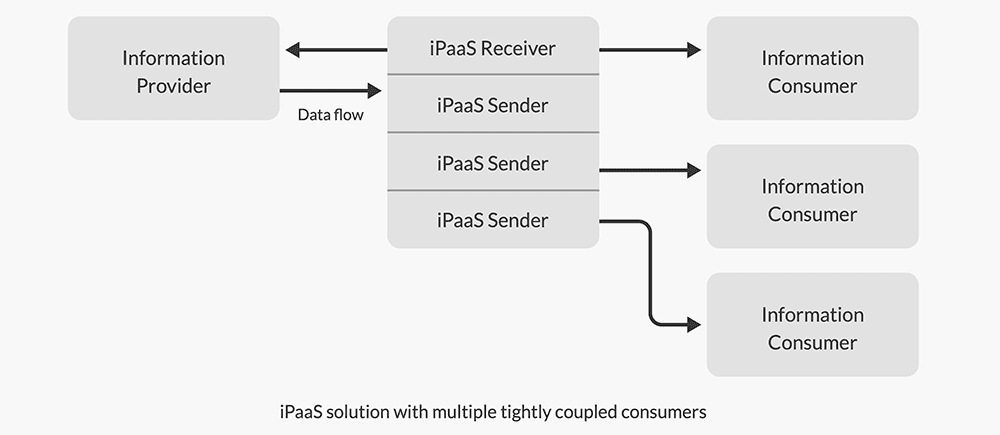

However, even with a code promotion infrastructure in place and the use of subprocesses, traditional iPaaS orchestrations create a single deployment artifact. That block of code consists of multiple components receiving information, applying processing logic, and sending it downstream to consumers. The dependencies created by this architecture (sometimes called “tightly coupled”) prevent innovative features from reaching the market in several ways:

- Adding or updating an information consumer means that someone needs to retest and redeploy the master flow;

- Adding a new information consumer, especially if it is slow or unreliable, adversely affects the entire process; and,

- The master flow code becomes more complex as it orchestrates subflows (and tries to anticipate multiple error scenarios). If multiple teams handle different information consumers, the division of responsibilities can become blurred. That is especially true if citizen developers create their own integrations, as the hybrid integration platform (HIP) model encourages.
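The sketch below is a simplified model of the tightly coupled pattern these bullets describe: a hypothetical master flow that calls each consumer in turn. All consumer names and the simulated failure are invented for illustration. The consumer list lives inside the flow, so adding a consumer means editing and redeploying the flow, and a single failing consumer stops delivery to every consumer after it.

```python
from typing import Callable

Consumer = Callable[[dict], None]


# Hypothetical consumers of an inventory event; real ones would call APIs.
def update_factory_schedule(event: dict) -> None:
    pass


def notify_sales(event: dict) -> None:
    pass


def update_pricing(event: dict) -> None:
    # A newly added, unreliable consumer (simulated failure).
    raise TimeoutError("pricing service did not respond")


def load_data_lake(event: dict) -> None:
    pass


def master_flow(event: dict, consumers: list[Consumer], delivered: list[str]) -> None:
    """Call each consumer in order, recording the ones that were reached."""
    for consumer in consumers:
        consumer(event)  # any exception here aborts delivery to later consumers
        delivered.append(consumer.__name__)


# The consumer list is baked into the deployed flow: changing it means a redeploy.
FLOW = [update_factory_schedule, notify_sales, update_pricing, load_data_lake]

delivered: list[str] = []
try:
    master_flow({"sku": "A-100", "on_hand": 0}, FLOW, delivered)
except TimeoutError as exc:
    print("flow failed:", exc)
print("reached:", delivered)  # the data lake never received the event
```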

How should we address those challenges? We need a partnership that keeps the many strengths of an iPaaS solution while adding the ability to route information instantly around the globe, handle surging traffic, preserve information, and adopt innovative technologies.

The Event-Driven Advantage

An event is a change of state that occurs somewhere in an enterprise. Events are business moments that form the lifeblood of any enterprise. In the examples presented above, the events would be the orders in Topeka, the Civic starting in Sao Paulo, the senior picking up a prescription in Brooklyn, and the credit card swipe in Singapore.

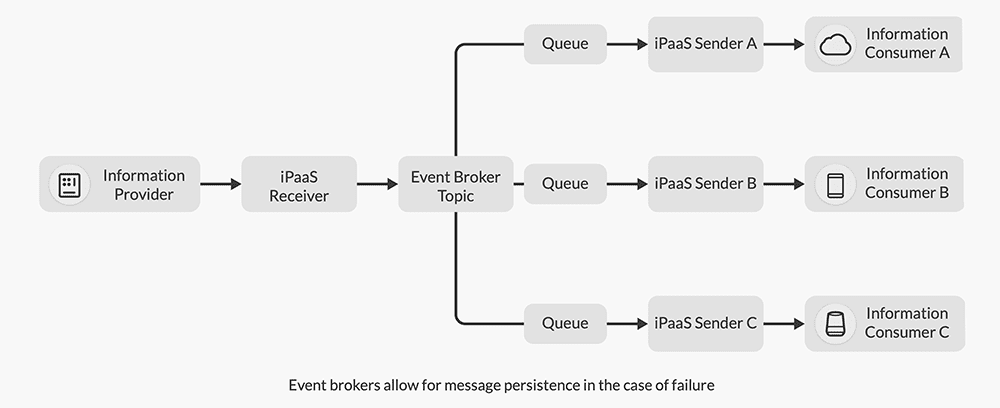

Event-driven architecture (EDA) is a software design pattern based on the production, publication, distribution, and detection of such events. EDA enables event-driven integration, whereby each event is disseminated as an asynchronous message. Other systems can detect important business moments right away if they are able to, and still receive them eventually if they cannot process them in real-time.

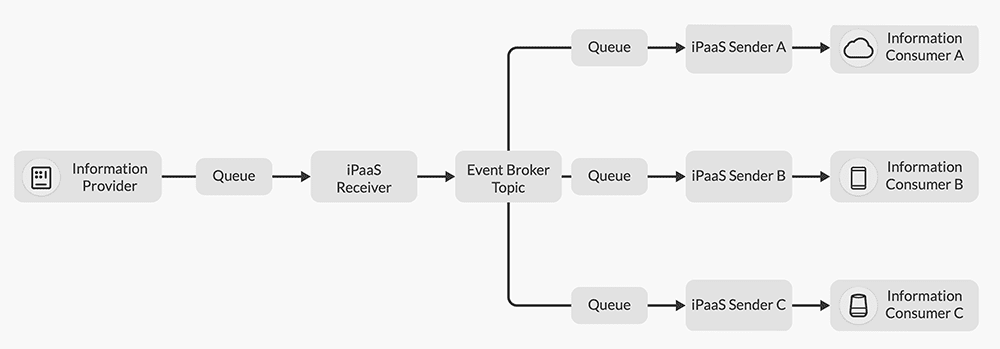

A key element of event-driven integration is an “event broker” that determines what applications are interested in which events, and delivers them accordingly. Think of an event broker as a traffic cop who keeps everyone heading in the right direction.

An event broker registers the interests of disparate systems and handles the details of how to deliver the data. As a result, enterprises can add applications that produce events without needing to know which applications will consume them, and let applications start consuming events without the publisher knowing. This concept is called “decoupling”: the opposite of the tight coupling discussed earlier.
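The following toy, in-memory broker illustrates decoupling. Publishers name a topic and subscribers register interest in topic patterns, so neither side references the other. The `/`-separated topics and the `*`/`>` wildcard rules are a simplified assumption loosely modeled on hierarchical topic schemes such as Solace's. This is not any product's API.

```python
from typing import Callable

Handler = Callable[[str, dict], None]


def topic_matches(subscription: str, topic: str) -> bool:
    """Match a '/'-separated topic against a subscription pattern.

    Simplified rules (assumed): '*' matches exactly one level, and '>' as
    the last level matches one or more remaining levels.
    """
    sub_levels, topic_levels = subscription.split("/"), topic.split("/")
    for i, level in enumerate(sub_levels):
        if level == ">" and i == len(sub_levels) - 1:
            return len(topic_levels) > i
        if i >= len(topic_levels) or (level != "*" and level != topic_levels[i]):
            return False
    return len(sub_levels) == len(topic_levels)


class EventBroker:
    """Toy broker: publishers and subscribers only ever see topics."""

    def __init__(self) -> None:
        self._subscriptions: list[tuple[str, Handler]] = []

    def subscribe(self, subscription: str, handler: Handler) -> None:
        self._subscriptions.append((subscription, handler))

    def publish(self, topic: str, payload: dict) -> int:
        """Deliver to every matching subscriber; return how many received it."""
        matched = [h for s, h in self._subscriptions if topic_matches(s, topic)]
        for handler in matched:
            handler(topic, payload)
        return len(matched)


broker = EventBroker()
received: list[str] = []
broker.subscribe("inventory/*/stockout", lambda t, p: received.append(f"factory:{t}"))
broker.subscribe("inventory/>", lambda t, p: received.append(f"data_lake:{t}"))
broker.subscribe("vehicle/*/ignition", lambda t, p: received.append(f"parents:{t}"))

# The publisher names a topic; it never references a consumer.
count = broker.publish("inventory/topeka/stockout", {"sku": "A-100", "on_hand": 0})
print(count, received)
```

The ignition subscriber does not receive the inventory event. Subscribers can be added or removed without changing the publishing code.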

While a single event broker is powerful, linking many event brokers to create what’s called an event mesh magnifies its power. With an event mesh in place, applications around the world can send and receive information in a real-time, decoupled fashion, with queues located anywhere a broker exists.

As a result, event-driven integration streamlines and accelerates information movement both within and across companies.

Solving the Real-Time Challenge with Event-Driven Integration

Traffic Surges: Buffering & Queuing

An event broker is an ideal way for IoT devices to access your iPaaS’s capabilities. Millions of connected cars (including a Civic in Sao Paulo) and other real-time information sources present a massive opportunity to increase your business’s ability to react to new insights about your customers. However, the amount of information can be a double-edged sword: it can produce a massive spike in traffic during periods of high demand.

An event broker helps you overcome these challenges by providing an entry point for events that many IoT information sources can interact with. And as a specialized messaging application, an enterprise-grade event broker can typically handle massive numbers of simultaneous connections.

Once an event enters the enterprise, an event broker can buffer it and prevent the traffic from overwhelming both the iPaaS and other downstream systems. Instead of going directly to the iPaaS, the events sit on queues. If the iPaaS is ready for action, it can process the event at once. If the iPaaS is low on resources because of the surge in traffic, the event waits its turn until the iPaaS can handle it.
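The following minimal sketch illustrates buffering. A queue absorbs a burst while the consumer processes events at a fixed rate. The arrival profile and the per-tick capacity are hypothetical, and "tick" is an arbitrary time step.

```python
def simulate(arrivals: list[int], capacity: int) -> list[int]:
    """Queue depth after each tick when at most `capacity` events are processed per tick."""
    depth, history = 0, []
    for arriving in arrivals:
        depth += arriving              # the broker enqueues whatever arrives
        depth -= min(depth, capacity)  # the consumer takes only what it can handle
        history.append(depth)
    return history


# Quiet, then a rush-hour spike, then quiet again (hypothetical events per tick).
ARRIVALS = [100, 100, 1_200, 900, 100, 100, 100, 100]
print(simulate(ARRIVALS, capacity=400))
```

The queue grows to 1,300 events during the spike and then drains. The consumer never exceeds its 400-event capacity, and no event is dropped along the way.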

An event broker is just as important on the outbound side. One of the key characteristics of IoT devices is that they may not always be available, which means that an event needs to be held until the device comes online.
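The outbound case is essentially store-and-forward. The sketch below uses a hypothetical `DeviceOutbox` class that holds events for each offline device and flushes them, in order, when that device connects. The device ID and event name are invented for illustration.

```python
from collections import defaultdict, deque


class DeviceOutbox:
    """Hold events per device until it connects (hypothetical sketch)."""

    def __init__(self) -> None:
        self._pending: dict[str, deque] = defaultdict(deque)
        self._online: set[str] = set()
        self.delivered: list[tuple[str, str]] = []

    def send(self, device_id: str, event: str) -> None:
        if device_id in self._online:
            self.delivered.append((device_id, event))
        else:
            self._pending[device_id].append(event)  # keep it until the device returns

    def connect(self, device_id: str) -> None:
        self._online.add(device_id)
        queue = self._pending.pop(device_id, deque())
        while queue:  # flush held events in the order they were sent
            self.delivered.append((device_id, queue.popleft()))

    def disconnect(self, device_id: str) -> None:
        self._online.discard(device_id)


outbox = DeviceOutbox()
outbox.send("civic-sao-paulo", "block_ignition")  # car is offline; the event is held
print(outbox.delivered)  # []
outbox.connect("civic-sao-paulo")
print(outbox.delivered)  # [('civic-sao-paulo', 'block_ignition')]
```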

Get Actionable Information Everywhere Immediately

An event mesh allows data to flow around the globe in an instant. As soon as an application generates an event, the event broker immediately processes it, determines the location of interested clients, and sends a copy of the event to whatever event broker they are connected to.

The communication happens without applications or the iPaaS needing to know the details of how it will get there. And because each interested application gets a copy of the message, they can each process it at the same time, making the entire process faster.
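The following toy event mesh illustrates this routing. Each broker knows its local subscribers, and a publish sends one copy only to linked brokers that have interested clients. The sketch assumes direct links and exact topic matching, and it reads peers' subscription tables directly. A real mesh propagates subscriptions between brokers and handles multi-hop routing, which is omitted here. All region, client, and topic names are hypothetical.

```python
class MeshBroker:
    """One broker in a toy event mesh (hypothetical sketch)."""

    def __init__(self, region: str) -> None:
        self.region = region
        self.local_subs: dict[str, list[str]] = {}  # topic -> local client names
        self.peers: list["MeshBroker"] = []
        self.deliveries: list[tuple[str, str]] = []  # (client, topic)

    def subscribe(self, client: str, topic: str) -> None:
        self.local_subs.setdefault(topic, []).append(client)

    def link(self, other: "MeshBroker") -> None:
        self.peers.append(other)
        other.peers.append(self)

    def deliver_local(self, topic: str) -> None:
        for client in self.local_subs.get(topic, []):
            self.deliveries.append((client, topic))

    def publish(self, topic: str) -> list[str]:
        """Deliver locally, then send one copy to each peer with interested clients."""
        self.deliver_local(topic)
        forwarded = []
        for peer in self.peers:
            if topic in peer.local_subs:  # stand-in for propagated subscription info
                peer.deliver_local(topic)
                forwarded.append(peer.region)
        return forwarded


topeka, china = MeshBroker("topeka"), MeshBroker("china")
new_york, sao_paulo = MeshBroker("new-york"), MeshBroker("sao-paulo")
for peer in (china, new_york, sao_paulo):
    topeka.link(peer)

china.subscribe("factory-planner", "inventory/topeka/stockout")
new_york.subscribe("exec-dashboard", "inventory/topeka/stockout")
sao_paulo.subscribe("parents-app", "vehicle/civic/ignition")  # no interest in stockouts

forwarded = topeka.publish("inventory/topeka/stockout")
print(forwarded)  # ['china', 'new-york']
```

No copy crosses the link to Sao Paulo, which keeps unneeded traffic off that part of the network.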

Delegating the movement of data to the event broker offers several advantages:

- Democratizes data across lines of business (LOBs), external partners, and customers;

- Weaves together legacy systems on-premises and more modern applications in the cloud; and,

- Lets DevOps teams use the language, protocol, and application location of their choice.

Accelerate Innovation and Ability to Adapt to Change

The one guarantee in business is that the challenges of tomorrow will look different from the ones you are facing today. Just when you think credit card fraud is under control, someone figures out a way to beat the system. And then it is time to innovate.

So far I have focused on decoupling a single sender and receiver from one another. That decoupling is worthwhile on its own, but the value really kicks in when you begin distributing transactions among multiple, diverse information consumers using a publish-subscribe (pub-sub) mechanism.

An event broker allows you to move into the realm of pub-sub by acting as a middleman between senders and receivers, so that changing a sender does not mean redeploying and retesting the entire process. What’s more, you can seamlessly integrate a new consumer into an existing, deployed solution. With an event broker, you can design a new sender using the toolset available to you through your iPaaS, incorporating the legacy connectivity and graphical mapping that an iPaaS provides. Then add a new subscription, and events begin flowing to the new process and onward to the information consumer. The new sender can interact with existing systems without requiring you to retest the entire system, which greatly accelerates innovative deployments.
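The following minimal sketch shows the pub-sub pattern described above. A toy broker gives each subscriber its own queue. The existing publisher is written once and is never edited when a new consumer, such as an AI fraud check, subscribes later. The class, function, and subscriber names are hypothetical, and the card number is a placeholder.

```python
from collections import deque


class Broker:
    """Toy pub-sub broker giving each subscriber its own queue (hypothetical sketch)."""

    def __init__(self) -> None:
        self.queues: dict[str, deque] = {}

    def add_subscriber(self, name: str) -> deque:
        self.queues[name] = deque()
        return self.queues[name]

    def publish(self, event: dict) -> None:
        for queue in self.queues.values():
            queue.append(event)  # each subscriber consumes its copy at its own pace


def publish_swipe(broker: Broker, card: str, amount: float) -> None:
    """The existing publisher: it knows about the broker, not about consumers."""
    broker.publish({"card": card, "amount": amount})


broker = Broker()
approvals = broker.add_subscriber("approval_service")
publish_swipe(broker, "4111-xxxx", 25.0)

# Later, a new AI fraud check subscribes. The publisher and the approval
# service are untouched, so only the new consumer needs testing.
fraud_ai = broker.add_subscriber("fraud_ai")
publish_swipe(broker, "4111-xxxx", 9_999.0)

print(len(approvals), len(fraud_ai))  # 2 1
```

Because each subscriber drains its own queue, a slow fraud model only lets its own backlog grow rather than holding up the approval service.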

Conclusion

Event-driven integration allows your enterprise to better serve your customer by accelerating the flow of information towards real-time and helps to expand the bounds of your enterprise to cutting-edge technologies like IoT sensors, mobile applications, hybrid multi-cloud adoption, microservices, and machine learning.

In addition, event-driven integration with iPaaS reduces the complexity of bringing innovative ideas to market by simplifying challenges that occur during integration.

If you’re convinced that event-driven integration with an iPaaS is right for you, or if you are intrigued enough to learn about what lies ahead should you choose to go that route, check out The Architect’s Guide to Implementing Event-Driven Integration with iPaaS where I highlight the technical steps to converting your enterprise to event-driven integration.

As an architect in Solace’s Office of the CTO, Jesse helps organizations of all kinds design integration systems that take advantage of event-driven architecture and microservices to deliver amazing performance, robustness, and scalability. Prior to his tenure with Solace, Jesse was an independent consultant who helped companies design application infrastructure and middleware systems around IBM products like MQ, WebSphere, DataPower Gateway, App Connect Enterprise and Transformation Extender.

Jesse holds a BA from Hope College and a master’s from the University of Michigan, and has achieved certification with both Boomi and MuleSoft technologies. When he’s not designing the fastest, most robust, most scalable enterprise computing systems in the world, Jesse enjoys playing hockey, skiing and swimming.