Solace supplies a high-performance guaranteed messaging solution that sustains very high message rates. In common with other vendors, Solace provides a mechanism that improves performance between the appliance and the API by limiting the effect of long round-trip times (RTT) and similar delays. The mechanism is similar in function to the pre-fetch mechanism provided by other messaging vendors, in that it allows the appliance to deliver batches of messages to the API.

In terms of implementation, Solace uses a sliding window mechanism for guaranteed message delivery in both directions between the appliance and the API. Windowed acknowledgement schemes are well understood and are used by widely deployed protocols such as TCP. With this approach the sender does not need to pause after each message while it waits for an acknowledgement from the receiver, so message throughput increases.
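The effect of the window on throughput can be sketched with a toy timing model. This is an illustration only, not Solace code: the function name `transfer_time`, its parameters, and the assumption that every message takes a fixed time to send and is acknowledged one round trip later are all hypothetical simplifications.

```python
def transfer_time(num_messages: int, window: int, send_time: int, rtt: int) -> int:
    """Return the time at which the last acknowledgement arrives.

    Toy model (an assumption, not the Solace protocol): the sender may have
    at most `window` unacknowledged messages outstanding, each message takes
    `send_time` to transmit, and its acknowledgement arrives `rtt` after the
    transmission completes. All times share one arbitrary unit.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    if num_messages <= 0:
        return 0

    ack_at = []      # ack_at[i] is when the ack for message i arrives
    next_free = 0    # earliest time the sender can start another transmission
    for i in range(num_messages):
        start = next_free
        if i >= window:
            # The window is full: wait for the ack that frees a slot.
            start = max(start, ack_at[i - window])
        done = start + send_time
        ack_at.append(done + rtt)
        next_free = done
    return ack_at[-1]
```

Under these assumptions, sending ten messages with a send time of 1 and an RTT of 10 takes 110 time units with a window of 1, but only 20 with a window of 10, because the sender no longer idles for a full round trip after each message.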

While the delivery of batches of messages improves performance, in terms of message throughput, it is not desirable for some applications. The remainder of this post looks at the application type, its message delivery pattern, and the tuning of Solace ‘pre-fetch’ to provide correct operation.

Application Processing Engines Fed from a Load-balanced Queue

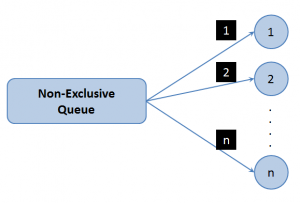

It is a very common design pattern to scale processing power by supplying a number of processing units or engines and then striping jobs across them via a load-balancing mechanism. Enterprise messaging solutions use non-exclusive queues to provide exactly this functionality, as illustrated in Figure 1.

In the figure we see a number (n) of consumers connected to a single non-exclusive queue. Messages are delivered round-robin with message 1 to client 1, message 2 to client 2, and so on.

This type of behaviour is exactly what is required in many grid environments. It is also what many application servers expect, since by default they start up many Message-Driven Beans (MDBs) that bind to the same queue.

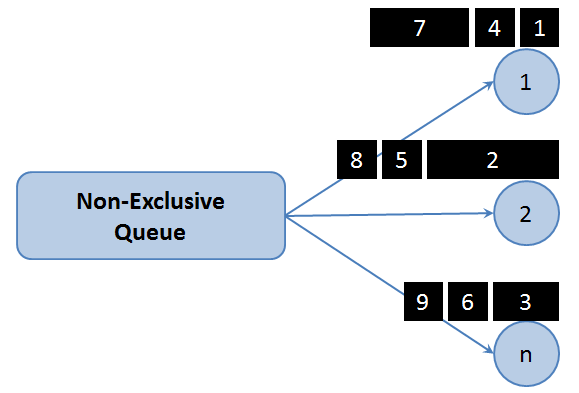

If the time taken to complete a unit of processing is relatively constant, this model probably works well enough with the default settings. However, if the processing time varies from message to message, coupling pre-fetch with this delivery pattern can be problematic. The problems stem from the fact that several messages are delivered to each client even though the client may not have finished processing the previous message. Messages with a short processing time can therefore become stuck behind messages with a long processing time, which can seriously increase overall calculation time if there are any dependencies on the order of results. This problem is illustrated in Figure 2.

In this figure the message lengths indicate the processing times required. It can clearly be seen that message 2 is a long hold time message. Pre-fetch has put message 5 behind message 2, which effectively holds up the processing of message 5. In the figure messages 6, 7 and 9 complete before message 5.
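The situation in Figure 2 can be reproduced with a few lines of Python. The sketch below is not Solace code. It assumes three clients and made-up processing times in which message 2 is the only long job, and it assumes that pre-fetch hands every message to a client round-robin as soon as it arrives. The function name `prefetch_completion_times` is hypothetical.

```python
def prefetch_completion_times(durations, num_clients):
    """Completion time of each message when all messages are pre-assigned
    round-robin and each client works through its own backlog in order."""
    busy_until = [0] * num_clients   # time at which each client becomes free
    finished = []
    for index, duration in enumerate(durations):
        client = index % num_clients          # message 1 -> client 1, and so on
        busy_until[client] += duration        # queued behind earlier messages
        finished.append(busy_until[client])
    return finished


# Hypothetical workload: nine messages, message 2 takes six time units.
DURATIONS = [1, 6, 1, 1, 1, 1, 1, 1, 1]

if __name__ == "__main__":
    times = prefetch_completion_times(DURATIONS, 3)
    for number, done in enumerate(times, start=1):
        print(f"message {number} completes at t={done}")
```

With these numbers message 5 completes at t=7, after messages 6, 7 and 9 (t=2, 3 and 3), purely because it was queued behind message 2 on the same client.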

In this context it would be better to have no pre-fetch (or a pre-fetch = 1). This would ensure that clients were only given the next message in the queue once they had processed and acknowledged the current message. Luckily it is possible to tune the Solace pre-fetch in this way.

Tuning Solace Pre-fetch

Solace pre-fetch can be tuned on a per-queue basis. It is not an appliance-wide setting, so pre-fetch and non-pre-fetch clients can work on the same appliance and even within the same VPN.

Solace provides two attributes that can be used to tune the behaviour of the connection to effectively disable or control “pre-fetch”. Tuning pre-fetch in this manner means that the appliance can be made to deliver exactly one message to the SDK. This message must be acknowledged before the appliance will deliver the next message. The required attribute settings are as follows:

| # | Setting | Effect and where it is set |
|---|---|---|
| 1 | FLOW_WINDOW_SIZE = 1 | This causes the SDK to inform the router of the delivery (to the SDK) of every message. Set as part of the FLOW properties in the SDK (C, .NET, Java). |
| 2 | MAX_DELIVERED_UNACKNOWLEDGED_MSGS_PER_FLOW = n | This causes the router to stop delivering messages to the client SDK once there are n unacknowledged message(s) delivered to the SDK. In this example n = 1. Set as a Queue attribute on the appliance. Set as a FLOW property in the C SDK. |

In this context, if a client connects to a queue and has both FLOW_WINDOW_SIZE and MAX_DELIVERED_UNACKNOWLEDGED_MSGS_PER_FLOW set to 1, the router will deliver a single message to the SDK and will not deliver the next until that message has been acknowledged by the application. While the flow of messages is effectively blocked to this client, any messages on the queue will be sent, round-robin, to any other non-blocked clients on the same non-exclusive queue. Messages are therefore not sent to a client SDK until the client is free to process them, which removes the possibility of queueing short hold messages behind long hold messages while there is free processing capacity elsewhere.
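The behaviour described above can be explored with a small event-driven model. It is a sketch under stated assumptions, not Solace code and not the Solace API: `simulate_queue` and its parameters are hypothetical names, `max_unacked` stands in for MAX_DELIVERED_UNACKNOWLEDGED_MSGS_PER_FLOW, the workload is invented, and network latency and FLOW_WINDOW_SIZE are not modelled.

```python
import heapq
from collections import deque


def simulate_queue(durations, num_clients, max_unacked):
    """Return the completion time of each message on a toy non-exclusive queue.

    The router hands messages round-robin to clients holding fewer than
    `max_unacked` delivered-but-unacknowledged messages. Each client processes
    its delivered messages in order and acknowledges each one on completion.
    """
    if num_clients < 1 or max_unacked < 1:
        raise ValueError("num_clients and max_unacked must be at least 1")

    pending = deque(enumerate(durations))              # still on the queue
    delivered = [deque() for _ in range(num_clients)]  # sent, not yet started
    unacked = [0] * num_clients                        # sent, not yet acked
    busy = [False] * num_clients
    finished = [None] * len(durations)
    events = []                                        # (finish time, client, message)
    next_client = 0
    now = 0

    while True:
        # Router: deliver while some client still has room for another message.
        while pending:
            order = [(next_client + k) % num_clients for k in range(num_clients)]
            target = next((c for c in order if unacked[c] < max_unacked), None)
            if target is None:
                break                                  # every flow is blocked
            delivered[target].append(pending.popleft())
            unacked[target] += 1
            next_client = (target + 1) % num_clients

        # Clients: every idle client starts its next delivered message.
        for client in range(num_clients):
            if not busy[client] and delivered[client]:
                index, duration = delivered[client].popleft()
                busy[client] = True
                heapq.heappush(events, (now + duration, client, index))

        if not events:
            return finished

        # Advance to the next completion; the client acknowledges the message.
        now, client, index = heapq.heappop(events)
        finished[index] = now
        busy[client] = False
        unacked[client] -= 1


# Hypothetical workload: nine messages, message 2 takes six time units.
WORKLOAD = [1, 6, 1, 1, 1, 1, 1, 1, 1]
```

Calling `simulate_queue(WORKLOAD, 3, 9)` reproduces the pre-fetch problem: message 5 completes at t=7 and the run ends at t=8. With `simulate_queue(WORKLOAD, 3, 1)` the client working on message 2 receives nothing else until it acknowledges, so message 5 goes to a free client and completes at t=2, and the whole run ends at t=6.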


Mathew Hobbis
