In the first part of this event-driven architecture myth busting series, I explored the first five common claims people tend to make about EDA and either confirmed or busted them. Now, I’m going to review the next set of claims that we encounter all the time. Let’s get right to it!

1. EDA could result in a pinball machine effect

The pinball machine effect is when one event triggers a chain of further events with varying side effects. This phenomenon is common in big systems where the number of peer-to-peer links between microservices keeps growing. Starting a digital transformation journey using EDA without fully understanding the issues that could arise from event chaining will result in some serious technical debt.

One way to avoid the pinball machine effect is to inspect the events in the system at runtime, or to have an extensive documentation strategy that breaks down all the events, topic subscriptions and publications, along with the payload of every event. Both approaches come with their own set of challenges. A training strategy also needs to be in place for every new person joining the team. This is another reason why having an event portal acting as a one-stop shop for managing event-driven architecture is crucial. An event portal lets you scan a live system and visualize the events, topics, and applications.
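To make the idea of inspecting event chains concrete, here is a minimal sketch of tracing which topics can follow from a starting event. Everything in it is an assumption for illustration: the `CATALOG` structure, the application and topic names, and the simplification that an application may publish any of its topics in response to any topic it consumes. It is not how any particular event portal works.

```python
from collections import defaultdict

# Hypothetical catalog: each application lists the topics it consumes and publishes.
CATALOG = {
    "order-service": {"subscribes": [], "publishes": ["order/created"]},
    "payment-service": {"subscribes": ["order/created"], "publishes": ["payment/approved"]},
    "shipping-service": {"subscribes": ["payment/approved"], "publishes": ["shipment/scheduled"]},
    "email-service": {"subscribes": ["order/created", "shipment/scheduled"], "publishes": []},
}


def downstream_chains(catalog, start_topic):
    """Return every chain of topics that can follow from start_topic."""
    # Map each consumed topic to the topics its consumers may publish next.
    next_topics = defaultdict(set)
    for app in catalog.values():
        for consumed in app["subscribes"]:
            next_topics[consumed].update(app["publishes"])

    chains = []

    def walk(topic, path):
        # Skip topics already on the path so cycles do not recurse forever.
        followers = [t for t in sorted(next_topics[topic]) if t not in path]
        if not followers:
            chains.append(path)
            return
        for follower in followers:
            walk(follower, path + [follower])

    walk(start_topic, [start_topic])
    return chains


for chain in downstream_chains(CATALOG, "order/created"):
    print(" -> ".join(chain))
```

Long or branching chains in the output are the places worth documenting and reviewing first.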

Therefore, the claim stating that event-driven architecture could result in a pinball machine effect if not handled correctly is… CONFIRMED!

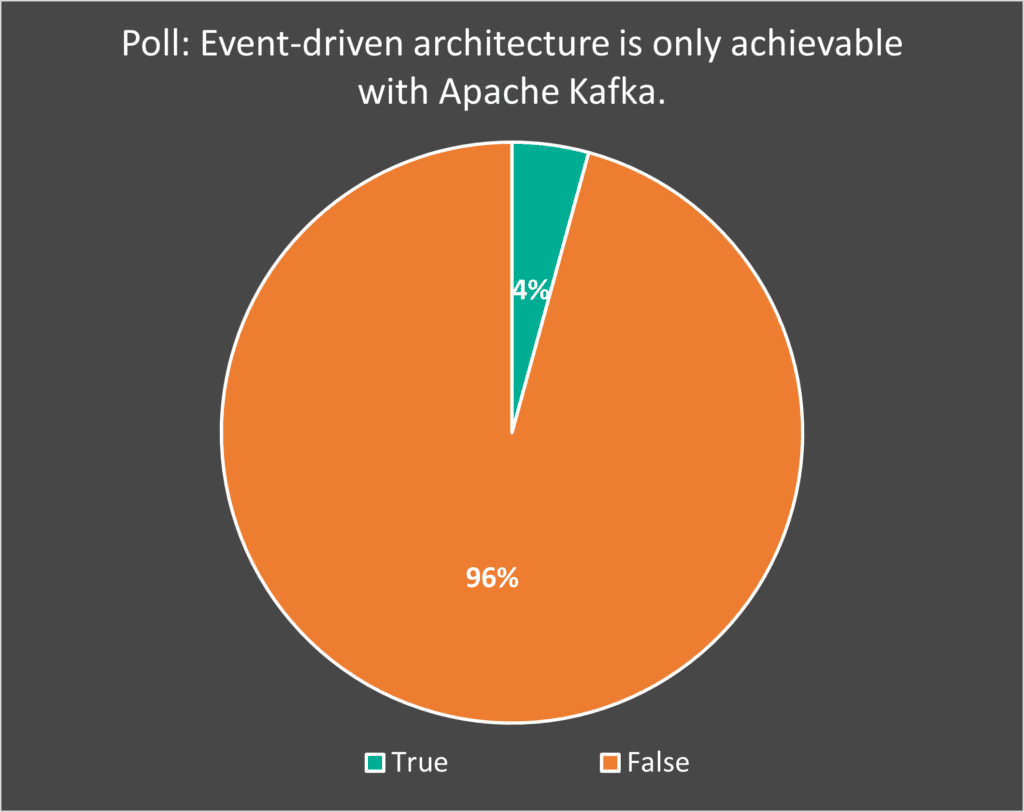

2. Event streaming solutions are only implemented using Apache Kafka

Poll Results (LinkedIn, Twitter):

At its core, Apache Kafka is a distributed commit log that was designed for aggregating log data and streaming it to analytics engines and big data repositories. Operational use cases have very different characteristics and require tools that are useful beyond streaming analytics and log aggregation. Modern event brokers support complex topic filtering for streamed data, flexible message routing, high availability, disaster recovery, and security. To better understand how and when to use Kafka versus another broker technology, I would recommend checking out the blog series: Why You Need to Look Beyond Kafka for Operational Use Cases.

Event-driven architecture is an architectural paradigm and Apache Kafka is a tool, so the myth that a paradigm like event-driven architecture is tied exclusively to a tool like Apache Kafka is… BUSTED!

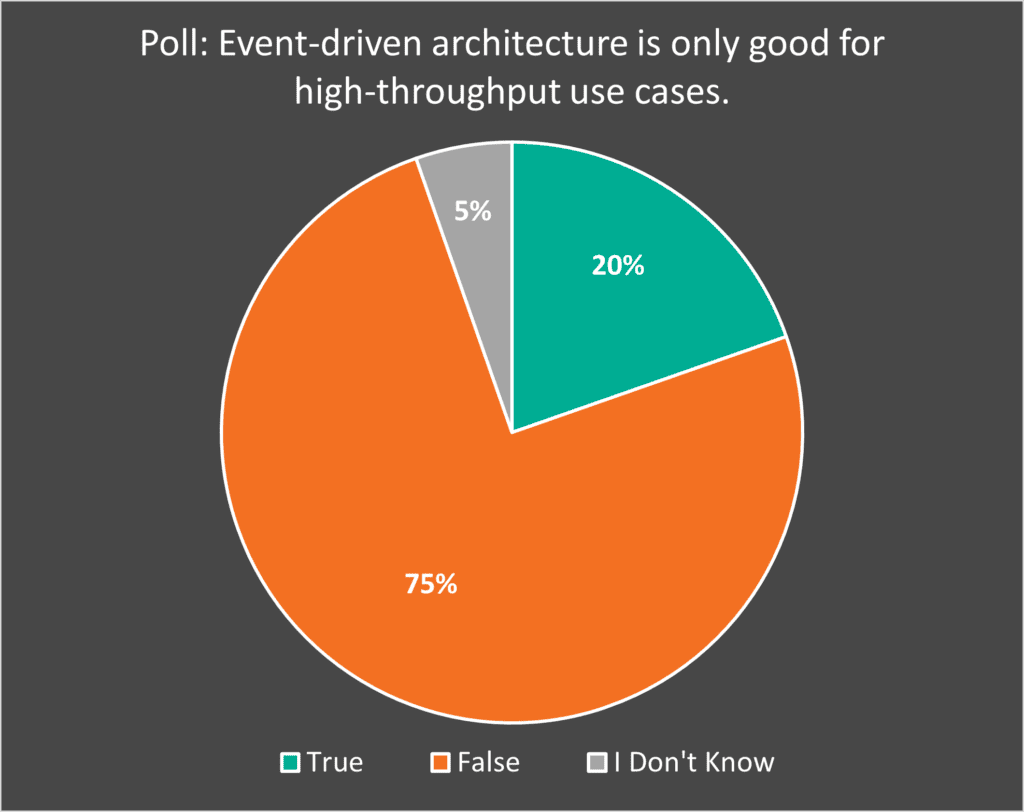

3. EDA is for high throughput use cases only

Poll Results (LinkedIn, Twitter):

Event-driven architecture can be used to decouple microservices, implement real-time processing, distribute responsibility, and efficiently alert management across business units about situations that demand their attention. There is no strict dependency on high throughput traffic into the system. Event-driven architecture could also be used to implement command query responsibility segregation (CQRS) where, for example, command and control messages are sent to edge devices in manufacturing plants located in different geographical locations.

Business objectives like reducing cost, improving customer experience, and increasing corporate agility aren’t always tied to high throughput, but they are directly impacted by real-time communication and the accessibility of real-time data.

Hence, the myth that event-driven architecture is only applicable for high throughput use cases is… BUSTED!

4. Designing dynamic hierarchical topic structure is a challenge with EDA

Predicting how your organization’s architecture and business needs will evolve over time is challenging. Your topic hierarchy will change over time, resulting in a trickle-down effect that impacts all applications directly coupled with a topic. Topic versioning and change tracking require you to coordinate how these topics are documented and communicated to the different stakeholders involved in consuming them, whether the documentation lives in Excel sheets, internal documents, or organizational wiki pages such as Confluence.

Fortunately, you can find extensive best practices online for how to design a dynamic topic hierarchy structure that will meet your needs. Beyond designing a topic hierarchy, you will also need to build, manage, and govern the topic structure and ensure the necessary alerting is in place for the affected applications.
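As a rough illustration of why the hierarchy matters, the sketch below matches published topics against wildcard subscriptions. The level layout (`domain/noun/version/verb/region`), the topic names, and the wildcard symbols (`*` for exactly one level, `>` for one or more trailing levels) are assumptions chosen for the example; wildcard syntax and semantics differ between brokers.

```python
def topic_matches(subscription: str, topic: str) -> bool:
    """Check a slash-delimited topic against a subscription with wildcards.

    Assumed wildcard rules for this sketch only:
      '*' matches exactly one level,
      '>' matches one or more trailing levels and must be the last level.
    """
    sub_levels = subscription.split("/")
    topic_levels = topic.split("/")

    for i, level in enumerate(sub_levels):
        if level == ">":
            # Valid only as the final level, with at least one topic level left.
            return i == len(sub_levels) - 1 and len(topic_levels) > i
        if i >= len(topic_levels):
            return False
        if level != "*" and level != topic_levels[i]:
            return False

    # Without a trailing '>', both sides must have the same number of levels.
    return len(sub_levels) == len(topic_levels)


# A consumer pinned to version v1 of the order events.
print(topic_matches("acme/orders/v1/>", "acme/orders/v1/created/emea"))
# The same consumer does not see events published under v2.
print(topic_matches("acme/orders/v1/>", "acme/orders/v2/created/emea"))
```

Putting the version in its own level means a publisher can introduce `v2` without breaking subscribers that are still pinned to `v1`, which is one way to soften the trickle-down effect described above.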

In summary, the myth that designing a dynamic topic hierarchy is challenging with event-driven architecture is… CONFIRMED!

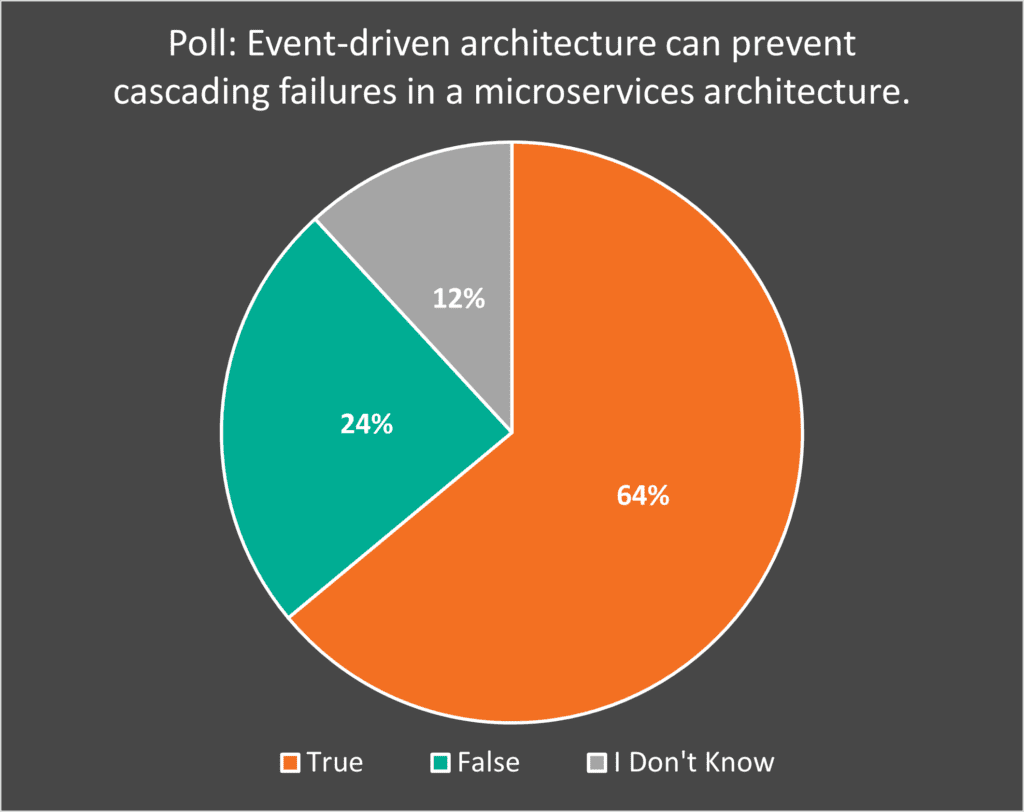

5. EDA can prevent cascading failures in a microservices architecture

Poll Results (LinkedIn, Twitter, Reddit):

REST-centric microservices architectures can suffer from cascading failures because synchronous request-response interactions implicitly couple the caller to the callee. With event-driven architecture, an event broker enqueues messages, so one consumer’s inability to receive or process an event does not cascade back to the producer. The queued messages let the subscriber resume processing after it recovers, so the system eventually becomes consistent. The broker insulates producers from consumers and, when messages are persisted, guards against message loss during temporary service failures, redeploys, or downtime in any of the services.
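The buffering behaviour described above can be sketched with a toy in-memory queue. The `InMemoryQueue` and `FlakyConsumer` classes are hypothetical stand-ins, not a real broker API, and a real broker would persist messages rather than hold them in process memory.

```python
from collections import deque


class InMemoryQueue:
    """Toy stand-in for a broker queue; messages wait until a consumer succeeds."""

    def __init__(self):
        self._messages = deque()

    def publish(self, message):
        # The producer only talks to the queue, never to the consumer.
        self._messages.append(message)

    def deliver(self, handler):
        """Hand queued messages to handler in order; stop at the first failure."""
        delivered = 0
        while self._messages:
            message = self._messages[0]
            try:
                handler(message)
            except Exception:
                break  # Leave the message queued for a later attempt.
            self._messages.popleft()
            delivered += 1
        return delivered

    def depth(self):
        return len(self._messages)


class FlakyConsumer:
    """Hypothetical consumer that fails while it is marked unavailable."""

    def __init__(self):
        self.available = False
        self.processed = []

    def handle(self, message):
        if not self.available:
            raise ConnectionError("consumer is down")
        self.processed.append(message)


queue = InMemoryQueue()
consumer = FlakyConsumer()

# The producer keeps publishing even though the consumer is down.
for i in range(3):
    queue.publish(f"order-{i}")

queue.deliver(consumer.handle)   # Nothing is processed, nothing is lost.
consumer.available = True        # The consumer recovers...
queue.deliver(consumer.handle)   # ...and catches up on the backlog.
print(consumer.processed, queue.depth())
```

In a synchronous REST call, the same outage would surface as an error or timeout in the producer; here the failure stays on the consumer side of the queue.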

So the claim that event-driven architecture can prevent cascading failures in a microservices architecture is… CONFIRMED!

BONUS! Exposing APIs on our legacy app or monolith makes it #eventdriven

Thanks to Thorsten Heller (@ThoHeller) for this contribution on Twitter! Exposing an API, in essence, is simply the process of offering access to your business logic through an interface. A data movement strategy has nothing to do with this exposure. So think about exposing an API like a restaurant releasing a new menu for their dishes: customers will be able to query the menu for the available meals they can order, but they will not be informed about new items just by looking at the menu (unless they continuously check for new menus or new additions).

So what would an “event-driven” strategy look like for the restaurant? How can they broadcast menu changes to all their customers in real time, you might ask? Well, one way is to announce the changes on a radio station, so any customer tuning in to the station will receive the update in real time. Another way is for customers to subscribe to an SMS service and receive a text message every time a new menu is available. I’m hungry now!
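The restaurant analogy can be sketched in a few lines. The `Restaurant` class and its method names are invented for illustration: `get_menu` plays the role of the exposed API that customers have to poll, while `subscribe` plays the role of the SMS service that pushes changes to them.

```python
class Restaurant:
    """Hypothetical restaurant offering both a queryable menu and push updates."""

    def __init__(self, menu):
        self._menu = list(menu)
        self._subscribers = []

    def get_menu(self):
        # API-style access: the customer has to ask to learn anything.
        return list(self._menu)

    def subscribe(self, callback):
        # Event-driven access: the customer registers once and is told later.
        self._subscribers.append(callback)

    def add_dish(self, dish):
        self._menu.append(dish)
        for notify in self._subscribers:
            notify(dish)


restaurant = Restaurant(["hummus", "tabbouleh"])

# A polling customer takes a snapshot and sees nothing new until asking again.
snapshot = restaurant.get_menu()

# A subscribed customer receives each new dish as it is added.
sms_inbox = []
restaurant.subscribe(sms_inbox.append)

restaurant.add_dish("falafel wrap")
print(snapshot)
print(sms_inbox)
```

The API is still useful for answering "what is on the menu right now?", but only the subscription tells customers that something changed without them asking.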

So the myth that the process of exposing APIs in a legacy application or a monolith makes it event driven is… BUSTED!

I hope you enjoyed this myth busting series so far. Stay tuned for more in Part 3!


Tamimi Ahmad
