Logging is an important aspect of any distributed system, but the state of logging varies widely between applications: some have no proper logging at all, some only have file-based logging, others have very basic logging, and some have a proper structure with rolling and rotation set up. Many organizations have embarked on the journey of storing those logs in a database of some sort, so they can keep and query the mountains of data flooding in from the many systems in their enterprises. While this approach makes the contents of the logs searchable, it also presents two challenges. The first is writing such large volumes of logs into the database, and the second is the time it takes to query that volume of data once it is there.

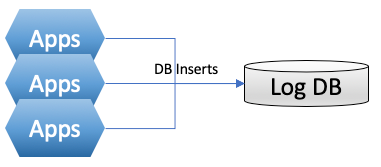

Figure 1: Common Pattern of Logging to a Relational Database

I’ll walk through some common architecture patterns for logging infrastructure, and show how Solace enables an event-driven logging alternative with a few key advantages over those patterns. Then I’ll point you to the steps for configuring Logstash as the log storage component that consumes a stream of log events via Solace PubSub+ Event Broker.

First Challenge: Ingestion

If you’re using a relational database, the first problem you’ll commonly encounter is that writes are not a fast operation. This isn’t a big deal for small-scale operations, but for many enterprises it proves to be a significant operational issue.

In the worst-case scenario, business processes are stuck waiting several seconds for a logging operation to complete, even though the actual customer transaction finished in less than a second. This also bleeds into the infrastructure layer, where you have to scale your service infrastructure two-fold or more to support the thousands of transactions that are hogging capacity, mostly just to ‘wait’ for blocking database writes.

Some people have learned not to make the database write part of the main business process flow, or not to use a relational database at all. There is more than one way to do this, especially given the wide range of technology options we have today.

One pattern I would like to elaborate on is the use of a queueing mechanism as an intermediary pipeline. In this pattern, applications send their logs as messages to a queue on a message broker and immediately return to their transaction flow. This supposedly works better because sending to a queue should be much faster than a database insert, and the messages waiting to be written to the database are safe and sound in the queue. Sounds clever, right?

It does reduce the time applications spend blocked while ‘sending’ log entries, and it provides buffering if the next step of writing to the actual database becomes slow. But there are big challenges and missing benefits when you rely only on a queue instead of a publish-subscribe pattern. I’ll get back to this in a minute.
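To make the hand-off concrete, here is a minimal sketch of the pattern using only Python's standard library. It is an in-process stand-in, not a real message broker: `queue.Queue` plays the broker queue, and `SlowStoreHandler` is a hypothetical handler that simulates a slow database insert with a short sleep. The logger name `orders` and the delay are made up for illustration.

```python
import logging
import logging.handlers
import queue
import time


class SlowStoreHandler(logging.Handler):
    """Hypothetical stand-in for a slow database insert."""

    def __init__(self, delay_s=0.02):
        super().__init__()
        self.delay_s = delay_s
        self.stored = []

    def emit(self, record):
        time.sleep(self.delay_s)  # simulate the slow write
        self.stored.append(record.getMessage())


log_queue = queue.Queue()  # unbounded, in-process stand-in for a broker queue
slow_store = SlowStoreHandler()

# The listener drains the queue on a background thread and does the slow writes.
listener = logging.handlers.QueueListener(log_queue, slow_store)
listener.start()

# The application only enqueues records, so it never waits for the slow store.
app_log = logging.getLogger("orders")
app_log.setLevel(logging.INFO)
app_log.propagate = False
app_log.addHandler(logging.handlers.QueueHandler(log_queue))

start = time.perf_counter()
for i in range(10):
    app_log.info("order %d accepted", i)
enqueue_seconds = time.perf_counter() - start  # time the application was blocked

listener.stop()  # processes everything still queued, then joins the thread
drain_seconds = time.perf_counter() - start    # time until all writes finished

print(f"application blocked for {enqueue_seconds:.4f}s, "
      f"store caught up after {drain_seconds:.4f}s")
```

The application's loop should finish well before the simulated store catches up, which is the benefit this pattern promises. Note that the queue here is unbounded, so the sketch hides what happens when the writer falls behind for good.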

Second Challenge: The Query

Once you have all your log entries recorded in a database, it’s all good, right? Not always. Depending on what kind of database you are using, both writing to it and querying it can take far longer than you’d like. You might find that queries take ages to complete, or that they only return data that is already an hour old.

The Case of the Intermediary Queue

Let’s revisit the intermediary queue approach. What could possibly go wrong? Let’s take a closer look.

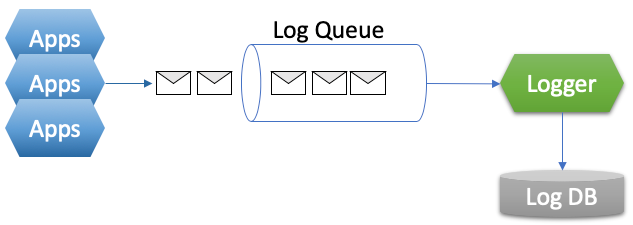

Figure 2: Log Queue

Imagine what happens if the database is slow or simply stops working. The queue builds up a massive backlog of unprocessed messages, and eventually the broker stops ‘acknowledging’ incoming log messages, leaving the applications once again stuck waiting for logging operations to complete. It’s much the same impact as the direct database write, just at a later time. Imagine if other critical business processes relying on the same message broker are also impacted!

Consider a not-so-distant future in which some other systems need the same set of logs. Then you’re looking at either querying the log database (fully aware of the delayed data and slow response time) or slapping another piece of send-to-queue code into the apps to produce a duplicate stream. Doesn’t sound like much fun, does it?
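The failure mode is easy to reproduce in miniature. The sketch below assumes a queue with a hard capacity limit and no consumer at all, which is roughly what a stalled database writer looks like from the publisher's side. The limit of 3, the 50 ms timeout, and the `publish` helper are illustrative values and names, not settings of any real broker.

```python
import queue

# Bounded queue standing in for a broker queue with a finite spool.
broker_queue = queue.Queue(maxsize=3)


def publish(entry, timeout_s=0.05):
    """Return True if the entry was accepted, False if the publisher gave up."""
    try:
        # Blocks while the queue is full, up to timeout_s seconds.
        broker_queue.put(entry, timeout=timeout_s)
        return True
    except queue.Full:
        return False


# Nothing is consuming, so the queue fills up and later publishes stall.
results = [publish(f"log entry {i}") for i in range(5)]
print(results)  # [True, True, True, False, False]
```

Every `False` here is a publisher that sat blocked for the full timeout before giving up. Without a timeout, the application would simply hang on the logging call, just as it did with the direct database write.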

Event-Driven Logging Architecture

What’s an event-driven logging architecture? Well, each log entry is an event that other enterprise applications can subscribe to, receive, and react to. Source applications just need to worry about emitting each log as an event and that’s it! Those events can be routed to many applications via a range of APIs and protocols, now or later, without touching the producer of those events.

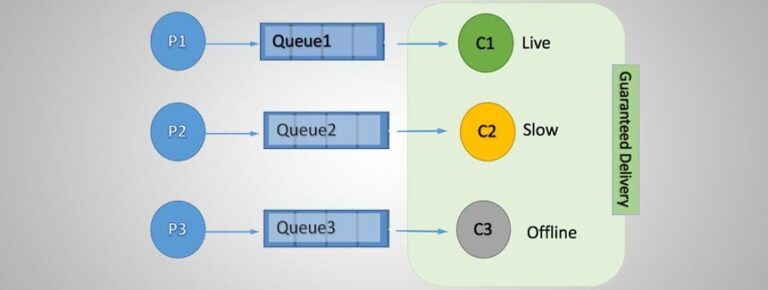

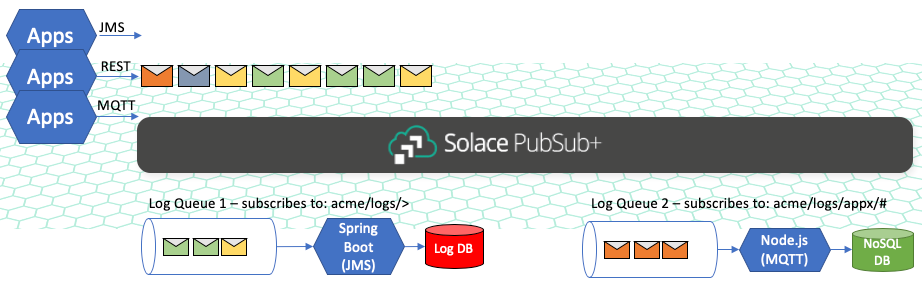

Figure 3: Event-Driven Logging

This diagram illustrates a few key points. An event can be sent simultaneously to many recipients with the publish-subscribe pattern. In this diagram, we’re publishing to topics and consuming from queues. The queue is acting like a subscriber and persists the events for a specific application to consume in a guaranteed fashion. Learn more about topic to queue mapping, one of Solace’s core concepts.
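The idea of publishing to topics while consuming from queues can be sketched with a toy in-memory broker. This is an illustration of the concept only, not the Solace API: `ToyBroker`, the queue names, and the topic strings are all hypothetical, and subscriptions are matched by exact topic string.

```python
from collections import defaultdict, deque


class ToyBroker:
    """Toy model of topic-to-queue mapping; not a real broker client."""

    def __init__(self):
        self.queues = {}                        # queue name -> stored events
        self.subscriptions = defaultdict(list)  # topic -> subscribed queue names

    def add_queue(self, name, topics):
        """Create a queue and subscribe it to the given topics."""
        self.queues[name] = deque()
        for topic in topics:
            self.subscriptions[topic].append(name)

    def publish(self, topic, payload):
        """Copy the event into every queue subscribed to the topic."""
        targets = self.subscriptions.get(topic, [])
        for name in targets:
            self.queues[name].append((topic, payload))
        return len(targets)


broker = ToyBroker()
broker.add_queue("q.logstash", ["logs/orders/error", "logs/orders/info"])
broker.add_queue("q.alerts", ["logs/orders/error"])

# The producer publishes once per event and knows nothing about the consumers.
delivered_error = broker.publish("logs/orders/error", "payment timeout")
delivered_info = broker.publish("logs/orders/info", "order accepted")

print(delivered_error, delivered_info)  # 2 1
print(len(broker.queues["q.logstash"]), len(broker.queues["q.alerts"]))  # 2 1
```

Adding a third consumer later means adding another queue with its own subscriptions. The publishing code does not change, which is the property the queue-only pattern lacks.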

Each application can subscribe to whatever topics indicate the unique set of information they need. Note that this filtering is done by the broker, not after the events have been transferred all the way to the applications. In fact, the benefit of topic routing with wildcards is so great that we produced a video about topic wildcards!
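A simplified matcher shows how broker-side filtering on hierarchical topics can work. It is loosely modeled on Solace-style wildcards, where `*` stands for one topic level and a trailing `>` stands for one or more remaining levels. Treat those semantics as an assumption of this sketch and check the product documentation for the exact rules; in particular, partial-level patterns such as `ord*` are not handled here. The function name and topic strings are hypothetical.

```python
def topic_matches(subscription, topic):
    """Return True if a '/'-delimited topic matches a wildcard subscription."""
    sub_levels = subscription.split("/")
    topic_levels = topic.split("/")

    for i, sub in enumerate(sub_levels):
        # A trailing '>' matches one or more remaining topic levels.
        if sub == ">" and i == len(sub_levels) - 1:
            return len(topic_levels) > i
        # The topic ran out of levels before the subscription did.
        if i >= len(topic_levels):
            return False
        # '*' matches any single level; anything else must match exactly.
        if sub != "*" and sub != topic_levels[i]:
            return False

    # Without a trailing '>', both must have the same number of levels.
    return len(sub_levels) == len(topic_levels)


print(topic_matches("logs/*/error", "logs/orders/error"))  # True
print(topic_matches("logs/*/error", "logs/orders/info"))   # False
print(topic_matches("logs/>", "logs/orders/error"))        # True
print(topic_matches("logs/*", "logs/orders/error"))        # False
```

With a topic hierarchy like this, an alerting application could subscribe to errors from every service with one pattern, while the log store takes everything under `logs/`.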

Modern Search Stack and Big Data

Say you’ve modernized your technology stack, including your log database. You now have a bunch of shiny new big data technologies lying around, and a quick new search engine to query your logs. Let me guess, Elasticsearch or Splunk? Apache Spark? Kafka as the ingestion layer for Hadoop-based platforms? Don’t worry, we’ve got your back!

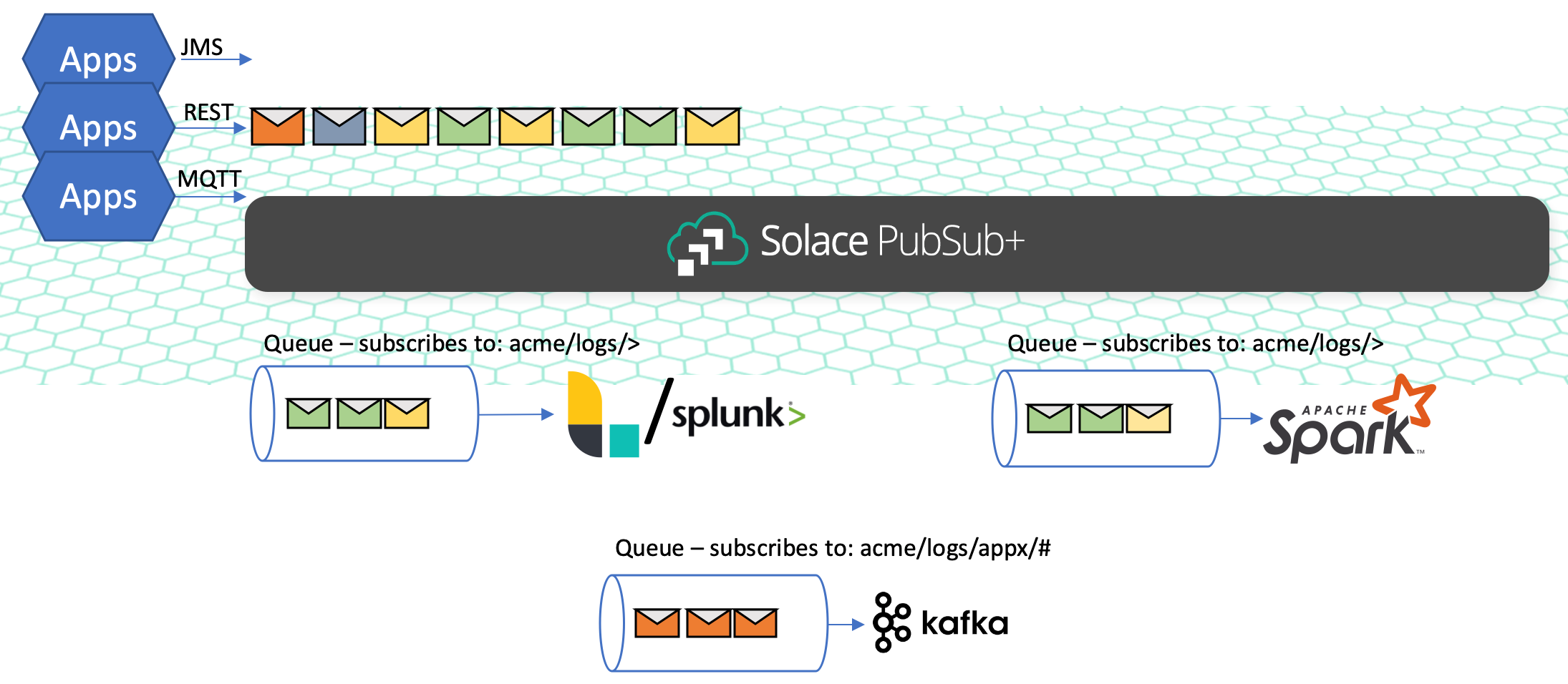

Figure 4: Event-Driven Logging – Modernized!

It’s the same event-driven logging architecture, but with fresh new applications subscribing to the log events!

I wrote a separate blog on how to integrate Solace PubSub+ Event Broker with Logstash as part of an ELK stack. Check it out!

Also take a look here for more stuff you can integrate with Solace.

What’s Next

With the power of event-driven architecture now in your hands, why not try out stream processing, or maybe a machine learning application that also subscribes to your log event stream, either directly via a topic subscription or in a guaranteed fashion via a queue, just like our example with Logstash? The cloud is the limit!

Ari Hermawan