In our industry, the meanings of terms often shift over time. Case in point: the term “real time” has been around for decades, but its meaning has changed several times.

Initially, the term real time was used in high-performance computing circles to describe problems that required correlation between events within a latency budget. For example, a step in a high-tech manufacturing process might need to be altered within 3 milliseconds of a given condition being identified. Those real-time computing systems were optimized for timing predictability.

Later, the term real time came to mean up-to-the-minute or up-to-the-second information, roughly the opposite of batch-oriented processing. At the time, batch was still the dominant architecture for sharing information across systems and geographies due to the high cost of adequate WAN bandwidth and computing power. Making any system real time required serious cost justification; otherwise, batch prevailed.

Today, what drives real-time architectures has changed once again. The cost of networks and computing has dropped dramatically, to the point that the vast majority of applications are designed to propagate updates in real time. The increasing prevalence of cloud, mobile, Internet of Things and big data is causing a rapid escalation in the volume of operations being performed and messages being sent in many dimensions simultaneously. This has shifted the challenge from justifying cost to managing scale. We call it the data deluge, but whatever you call it, it’s driving the need for both better accuracy and broader reach.

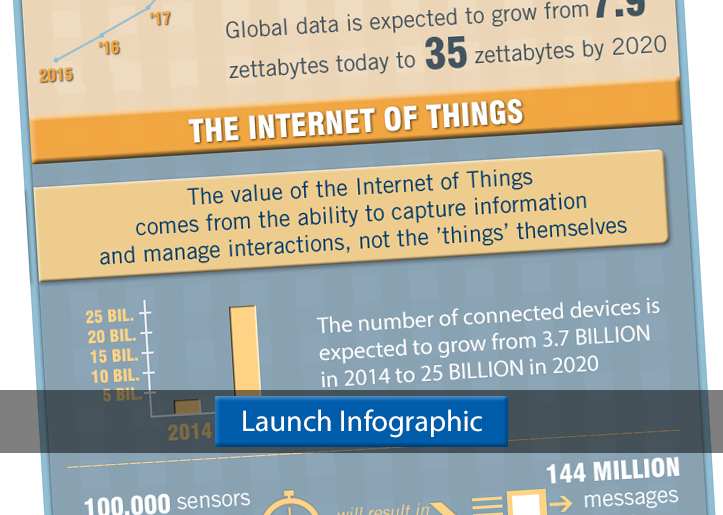

The rate of change boggles the mind, both looking back and looking ahead to the next five years. To visually highlight the real-time information challenges faced by companies of all sizes, we’ve created this infographic. Feel free to share this page, or the image directly.

Update: We’ve created a new infographic that introduces the ways Solace can help!
