Having previously worked on several large-scale video and audio streaming implementations, I was nothing short of bewildered when a colleague first suggested adding an extra messaging layer for video streaming. That was, to be precise, three years ago. But is it such an outrageous idea? Given the way live streams are distributed today, and in particular the combination of user-generated social live video and the proliferation of smartphones, we are riding a parabolic rise in live video streaming traffic. It's time to step back and view live streaming delivery from a different perspective.

One only needs to look at Facebook's recent aggressive expansion into live video streaming with the launch of Facebook Live to appreciate the phenomenal pace at which the live streaming landscape is evolving. The expectation of the post-2015 era is that a live video streaming system must be able to stream simultaneously to hundreds of thousands of viewers, if not millions, and must also have global reach. This is a mammoth task, but it is an area where, as I will highlight throughout this blog, Solace can uniquely add value.

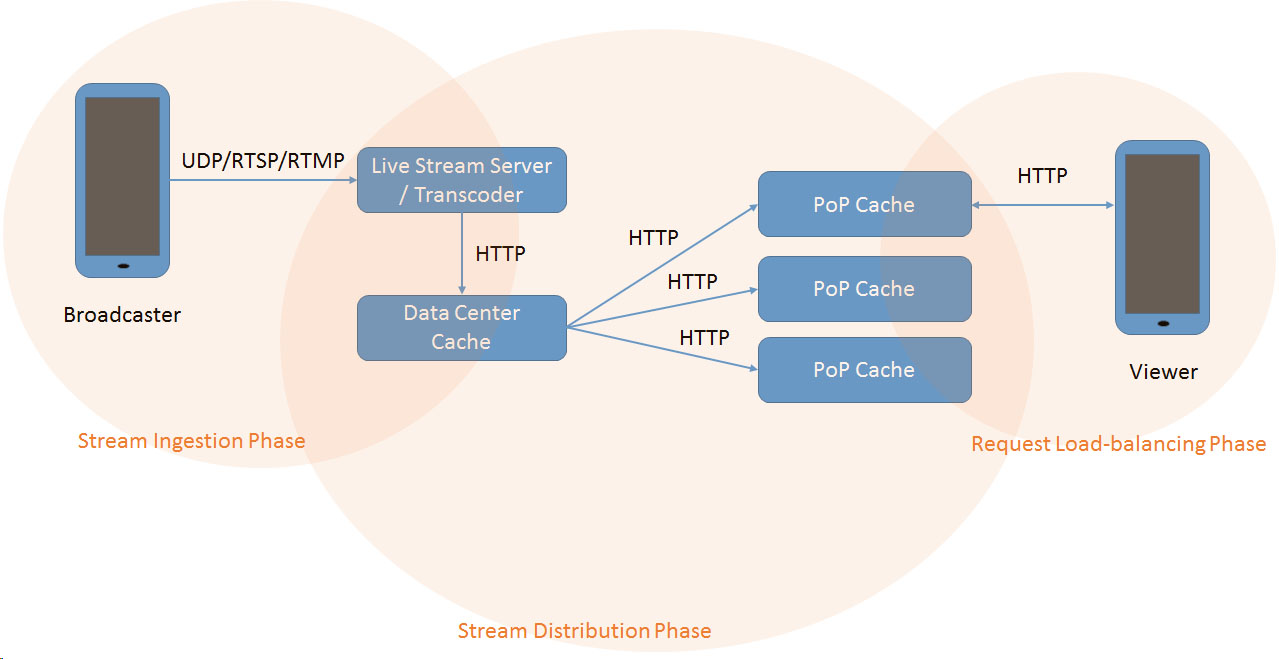

Let’s first look at how live video streaming is delivered from broadcasters to viewers in general:

- Broadcasters start live streams on their devices (phone/laptop/dedicated digital video recording device).

- The broadcasting device sends a UDP/RTSP/RTMP stream to a live stream server.

- The live stream server transcodes the stream to multiple bit rates if required (e.g. for delivery over MPEG-DASH).

- The transcoded segments are continuously produced.

- The produced segments are pushed to and stored in the data center cache.

- To achieve scalability, segments are then sent from the data center cache to caches located in points of presence (PoPs).

- Viewers connect to the PoP caches to receive the live video streams.

During the Stream Ingestion Phase, apart from accepting the live stream from the broadcaster, the other key function of the live stream server is to transcode the raw live video stream. As viewing devices have different display capabilities, transcoding of the raw video stream is a necessary evil. Usually, the stream server simultaneously transcodes the raw content into multiple video formats (H.264, MPEG-2, etc.) at varying bit rates (100kbps, 500kbps, etc.) and sizes (320×640, HD, HD+, etc.). The processing required is extremely intensive, and the number of combinations of video sizes and associated qualities can be quite large.
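To make the size of that combination space concrete, here is a minimal sketch that enumerates every rendition for the example values mentioned above. The lists are illustrative assumptions taken from the examples in the text, not a real operator's rendition ladder.

```python
from itertools import product

# Illustrative values only; a real rendition ladder depends on the operator.
codecs = ["h264", "mpeg2"]
bitrates_kbps = [100, 500]
sizes = ["320x640", "HD", "HD+"]

# Every (codec, bitrate, size) combination is a separate transcoding job.
renditions = list(product(codecs, bitrates_kbps, sizes))

for codec, kbps, size in renditions:
    print(f"{codec}/{size}/{kbps}kbps")

print(len(renditions), "renditions per raw stream")
```

Even this tiny ladder yields 12 renditions per raw stream, and each extra codec, bit rate or size multiplies the total.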

In the Stream Distribution Phase, the stream operator also needs to weigh which stream qualities and formats to push to which geographical areas against the cost of doing so. Yet, at the same time, it must maintain the best possible viewing experience. As the number of live video stream broadcasters increases, the burden of distributing and storing cached live video streams grows steeply. This is a non-trivial predictive optimization problem. And, to complicate matters, there is also the problem of removing cached live streams that are stale and no longer relevant.

Finally, in the Request Load-balancing Phase, how do we guard the PoP caches and the data center caches against the thundering herd problem? How do we facilitate global load balancing if any PoP cache is overloaded?

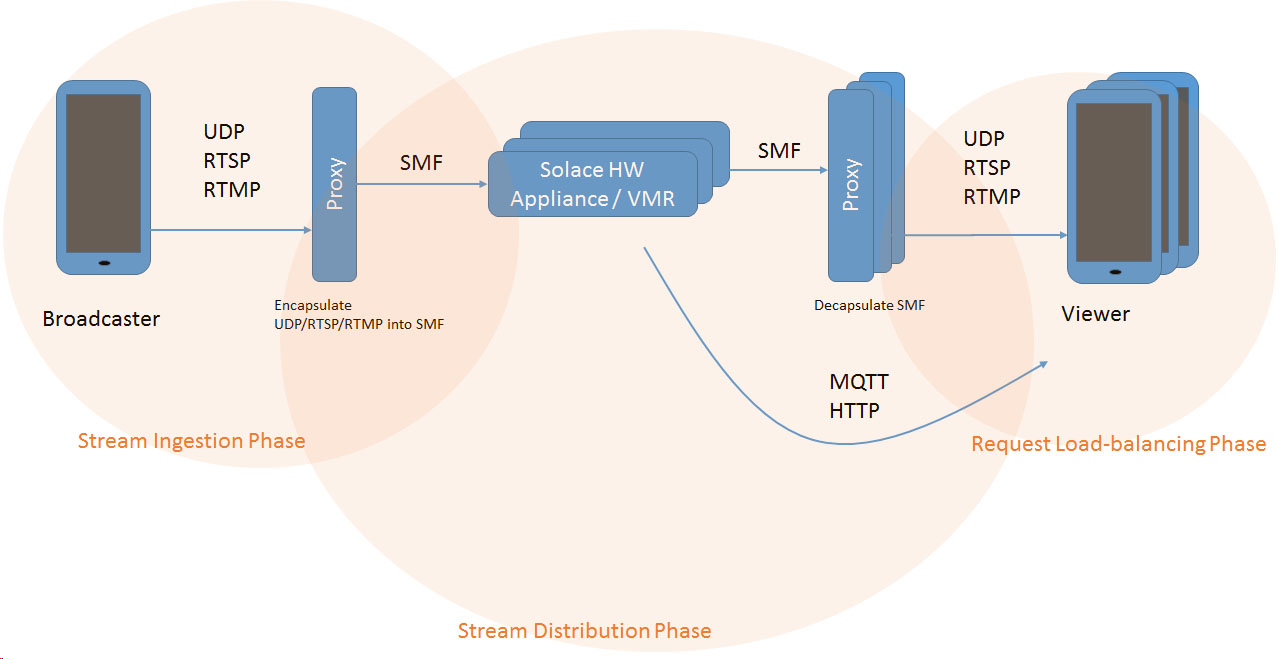

Let’s look at how Solace would fit into the above live streaming architectural context and begin with one example of how live video streams can be delivered from broadcasters to viewers:

- Broadcasters start live streams on their devices (phone/laptop/dedicated digital video recording device).

- The broadcasting device sends a UDP/RTSP/RTMP stream to a thin Solace-specific proxy.

- The proxy resides within both the Broadcaster’s and the Viewer’s infrastructure.

- In the Stream Ingestion Phase, the proxy encapsulates the stream payload into SMF (Solace Message Format) and forwards it to Solace.

- In the Stream Distribution Phase, Solace ensures that the stream is delivered (fanned out) to the viewing device's proxy.

- In the Request Load-balancing Phase, the Viewers register viewing requests with their care-of receiving proxies. These proxies then decapsulate SMF into the original streaming transport format (UDP/RTSP/RTMP) and forward the stream to their care-of viewers. If the viewer is able to receive the stream over another protocol such as MQTT or HTTP, then Solace can deliver directly to the Viewer, bypassing the receiving proxy.
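The encapsulate/decapsulate role of the proxies can be sketched as below. This is an assumption-laden illustration: the framing (a length-prefixed topic followed by the payload) and the function names `encapsulate` and `decapsulate` are hypothetical and are not the real SMF wire format, which is not described here.

```python
import struct
from typing import Tuple

def encapsulate(topic: str, payload: bytes) -> bytes:
    """Wrap a raw stream chunk with its topic (hypothetical framing, not SMF)."""
    topic_bytes = topic.encode("utf-8")
    # Header: 2-byte topic length, 4-byte payload length, network byte order.
    header = struct.pack("!HI", len(topic_bytes), len(payload))
    return header + topic_bytes + payload

def decapsulate(frame: bytes) -> Tuple[str, bytes]:
    """Recover the topic and the original chunk from a frame."""
    topic_len, payload_len = struct.unpack("!HI", frame[:6])
    topic = frame[6:6 + topic_len].decode("utf-8")
    payload = frame[6 + topic_len:6 + topic_len + payload_len]
    return topic, payload

# The sending proxy wraps a chunk of the broadcaster's stream...
chunk = b"\x00\x01rtmp-bytes"
frame = encapsulate("channel/id_1", chunk)

# ...and the receiving proxy unwraps it before forwarding to the viewer.
topic, original = decapsulate(frame)
print(topic, original == chunk)
```

The point of the sketch is only that the original transport bytes pass through untouched; the proxy adds and removes an envelope that carries the topic.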

Since Solace moves data based on topics, a Broadcaster can ingest its live video stream to a topic, e.g. channel/id_1, and any stream viewer that subscribes to topic channel/id_1 will receive the live stream.

Likewise, a transcoder can monitor whether there are any channel/id_1/> subscriptions. If so, it can subscribe to channel/id_1, take the stream, transcode it to a specific format and re-ingest it into the Solace Messaging Router under a topic such as channel/id_1/h264/320×160/50kbps. Any stream viewer that subscribes to topic channel/id_1/h264/320×160/50kbps will receive the live video stream on channel 1 with h264 encoding at a resolution of 320×160 and a bit rate of 50kbps.

Now, suppose other stream viewers subscribe to channel/id_1/h264/HD/600kbps. The transcoder that is monitoring channel/id_1/> subscriptions can detect this in real time and then transcode to the specification that fits the subscription requests from actual stream viewers.
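The demand-driven transcoding described above can be sketched as follows. This is a toy model and not the Solace API: `matches` is a simplified matcher (a trailing `>` stands for one or more levels, `*` for exactly one whole level), and `requested_renditions` is a hypothetical helper that assumes the topic layout channel/id/codec/size/bitrate used in the examples.

```python
def matches(subscription: str, topic: str) -> bool:
    """Simplified matching: '*' is one whole level, trailing '>' is one or more levels."""
    sub_levels = subscription.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(sub_levels):
        if level == ">" and i == len(sub_levels) - 1:
            # '>' needs at least one further level in the topic.
            return len(topic_levels) > i
        if i >= len(topic_levels):
            return False
        if level != "*" and level != topic_levels[i]:
            return False
    return len(sub_levels) == len(topic_levels)

def requested_renditions(channel: str, viewer_subscriptions):
    """Return the (codec, size, bitrate) tuples viewers are actually asking for."""
    pattern = f"{channel}/>"
    wanted = set()
    for sub in viewer_subscriptions:
        if matches(pattern, sub):
            parts = sub[len(channel) + 1:].split("/")
            if len(parts) == 3:  # assumed layout: codec/size/bitrate
                wanted.add(tuple(parts))
    return wanted

subs = [
    "channel/id_1",                       # raw stream, no transcoding needed
    "channel/id_1/h264/320×160/50kbps",
    "channel/id_1/h264/HD/600kbps",
    "channel/id_2/h264/HD/600kbps",       # a different channel
]
print(sorted(requested_renditions("channel/id_1", subs)))
```

Only the two renditions that viewers of channel/id_1 have asked for come back, so the transcoder does no work for formats nobody is watching.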

Let's move the discussion on to the distribution phase. One unique feature of Solace is the ability to mesh multiple Solace Messaging Routers together, through Solace bridging or Solace multi-node routing, out of the box. Subscriptions between regions are then synchronized automatically, so the mesh behaves as one "super-sized" Solace messaging system. As such, a live video stream ingested in one geographical region will be automatically distributed to any other region on demand, as long as there is a subscription to the same topic in that region. Likewise, if a region has no topic subscriptions corresponding to a live ingested video feed, that stream is not routed to that region. Live streams are therefore distributed on demand, and the distribution burden across the wide area network can be substantially lowered thanks to Solace's automatic, dynamic management of topic subscriptions.
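A minimal sketch of this on-demand behaviour, under stated assumptions: the `Region` class and `publish` function are hypothetical stand-ins for meshed routers, and topics are compared by exact match only. It models the routing decision, not how Solace synchronizes subscriptions internally.

```python
class Region:
    def __init__(self, name):
        self.name = name
        self.subscriptions = set()   # topics local viewers subscribed to
        self.delivered = []          # (topic, chunk) pairs delivered locally

def publish(origin, regions, topic, chunk):
    """Deliver locally, then forward only to regions with a matching subscription."""
    forwarded_to = []
    for region in regions:
        if topic in region.subscriptions:
            region.delivered.append((topic, chunk))
            if region is not origin:
                forwarded_to.append(region.name)
    return forwarded_to

london, tokyo, sydney = Region("london"), Region("tokyo"), Region("sydney")
tokyo.subscriptions.add("channel/id_1")

# Ingested in London; only Tokyo has a viewer, so Sydney receives nothing.
print(publish(london, [london, tokyo, sydney], "channel/id_1", b"segment-0"))
```

The stream crosses the wide area network once, to the one region that asked for it, which is the saving the paragraph above describes.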

How about load balancing of live stream viewing requests? One useful application of the Solace Messaging Router is to leverage its message processing capability and use it as a request "shock absorber". For example, a queue can be set up to subscribe to all incoming live video stream viewing requests. Viewers request streams by sending each request to a topic (as discussed earlier) that the queue subscribes to. Multiple request fillers can then process requests off this queue and respond by routing each viewer to the most suitable Solace Messaging Router to connect to, taking into consideration how the stream load is spread across geographical regions. Since all requests are funneled centrally to the queue, load balancing can be calculated effectively. And, as viewing demand rises, the way to increase streaming capacity is to dynamically deploy more Solace VMRs (now referred to as PubSub+) on demand, for instance on Amazon AWS, or to deploy PubSub+ Cache, Solace's proprietary caching technology.
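The "shock absorber" pattern can be sketched with an in-memory queue standing in for the Solace queue. Everything named here is an assumption for illustration: the `routers` inventory, the load figures, and the `pick_router` and `fill_requests` helpers. The policy shown (least-loaded router in the viewer's region, falling back to least-loaded overall) is one plausible choice, not the only one.

```python
from collections import deque

# Hypothetical router inventory: region -> {router name: current viewer count}.
routers = {
    "eu": {"eu-1": 120, "eu-2": 80},
    "ap": {"ap-1": 300},
}

def pick_router(region):
    """Least-loaded router in the viewer's region, else least-loaded overall."""
    candidates = routers.get(region) or {
        name: load for pool in routers.values() for name, load in pool.items()
    }
    return min(candidates, key=candidates.get)

def fill_requests(queue):
    """Drain queued viewing requests and assign each viewer a router."""
    assignments = []
    while queue:
        viewer, region, topic = queue.popleft()
        router = pick_router(region)
        # Record the new load so the next decision sees it.
        for pool in routers.values():
            if router in pool:
                pool[router] += 1
        assignments.append((viewer, router, topic))
    return assignments

requests = deque([
    ("viewer-a", "eu", "channel/id_1"),
    ("viewer-b", "ap", "channel/id_1"),
    ("viewer-c", "us", "channel/id_1"),   # no router in this region
])
assignments = fill_requests(requests)
for viewer, router, topic in assignments:
    print(f"{viewer} -> {router} for {topic}")
```

Because every request passes through one queue, the request fillers see the whole demand picture and a burst of viewers is absorbed by the queue rather than hitting the routers directly.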

Robert Hsieh
