Like any event broker, Solace Event Broker can be used for a wide variety of use cases, and for most of them, simply deploying it according to the deployment guide and best practices will give you the performance you are looking for. For example, if you are a retail supermarket looking to publish hundreds of messages a second through the broker, you will not have any issues. But if your use case pushes the limits of a single software broker, you need to get creative and fine-tune the broker's performance.

In this blog post, I will show you ways to squeeze more performance out of your broker and impress everyone at your company.

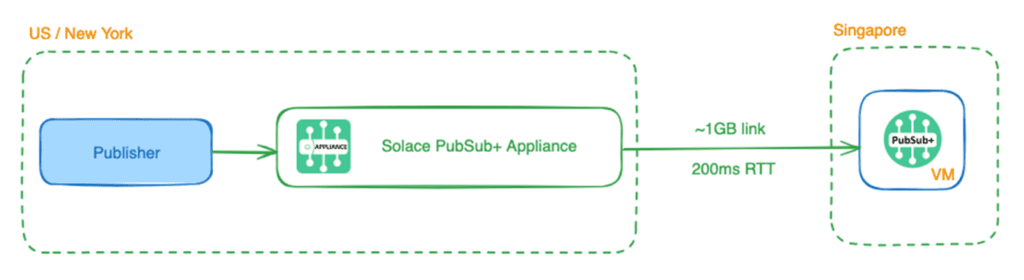

The example scenario I will focus on is a software broker deployed in Asia that receives data from the U.S. In the specific use case I worked on, the data came from a Solace appliance in New York over a VPN bridge to the software broker, but the same ideas apply to any publisher sending data over a VPN bridge.

When the software broker was deployed and the bridge to the appliance was established, the default throughput was only ~2 MB/second, which is very low. The round-trip time (RTT) between the NY and Singapore datacenters is approximately 200 milliseconds.

Pick The Right Tier and Host

Solace Event Broker comes in a variety of sizing tiers, and each tier allows you to leverage additional cores and get better performance. These tiers and associated cores are:

- 1K Connections
  - Standard Edition – 2 cores (limited to 10,000 msgs/s)
  - Enterprise Edition – 2 cores (no throughput limit)
- 10K Connections – 4 cores
- 100K Connections – 8 cores
- 200K Connections – 12 cores

Note: Solace Cloud deployments have additional tiers that are not available for the self-managed software broker.

You can find the minimum requirements for the container/underlying host for each tier here.

Needless to say, the first step is to make sure you have picked the right broker tier for your use case and have deployed it on a host with appropriate resources (CPU cores, memory, storage etc.).

For this example, I decided to use the 10K-tier Enterprise Edition broker to support our desired throughput.

Pick The Appropriate Storage

Solace Event Broker supports both in-memory (direct) and persistent (guaranteed) messaging. If your use case requires guaranteed messaging, you need to ensure you are using high-performance storage.

Picking a solid-state drive (SSD) over a traditional hard disk drive (HDD) will give you much better performance, albeit at a higher cost. Similarly, if you are deploying the software broker yourself in the cloud, you will have a variety of storage options to pick from. Make sure you pick a storage type with high IOPS to get the performance you want.

At the broker level, you can measure disk latency (how long the broker takes to access the disk) with the show system health command to help identify any bottlenecks caused by the disk:

```
solace(configure/system/health)# show system health

Statistics since: Dec 28 2023 17:26:41 UTC

                    Units      Min      Max      Avg     Curr    Thresh   Events
                    -----  -------  -------  -------  -------  --------  -------
Disk Latency        us        1645    16067     6541     1911  10000000        0
Compute Latency     us         974    23463     1272     1139    500000        0
Network Latency     us           0        0        0        0   2000000        0
Mate-Link Latency   us           0        0        0        0   2000000        0
```
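If you capture this output regularly, a small script can flag when average disk latency drifts upward. The sketch below is hypothetical: it assumes the output has been saved to a text file with one row per metric (Min, Max, Avg, Curr, Thresh and Events columns in that order), and the 5,000 µs budget is an arbitrary illustration, not a Solace recommendation.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read saved "show system health" output and report the
# Avg column of the Disk Latency row.
# Assumed row layout: Disk Latency <units> Min Max Avg Curr Thresh Events
disk_latency_avg_us() {
  awk '/Disk Latency/ { print $6 }' "$1"
}

# HYPOTHETICAL budget in microseconds, for illustration only
DISK_LATENCY_BUDGET_US=${DISK_LATENCY_BUDGET_US:-5000}

# Usage: check_disk_latency saved_health.txt
check_disk_latency() {
  local avg
  avg=$(disk_latency_avg_us "$1")
  if [ -z "$avg" ]; then
    echo "no Disk Latency row found" >&2
    return 2
  fi
  if [ "$avg" -gt "$DISK_LATENCY_BUDGET_US" ]; then
    echo "WARN: avg disk latency ${avg}us exceeds budget ${DISK_LATENCY_BUDGET_US}us"
    return 1
  fi
  echo "OK: avg disk latency ${avg}us"
}
```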

Tweak the Publishing Window Size

Several years ago, a colleague of mine wrote a very handy post on this topic, and I definitely recommend checking it out.

“When an application publishes messages using persistent delivery mode, the publish window will determine the maximum number of messages the application can send before the Solace API must receive an acknowledgment from the Solace broker. The size of the window defines the maximum number of messages outstanding on the wire.”

You don’t generally need to change this property unless your applications are publishing to the broker over a WAN link (i.e. broker and applications are deployed in different regions).

The default value is 50 in the Solace APIs, with one exception: the JMS API, due to its specification, can only send one message at a time before receiving an acknowledgement. This means that when an application publishes a message, the message has to travel over the network to the broker, and the broker needs to process it and (if applicable) send it to the backup broker before sending an acknowledgement back to the application. Only once the acknowledgement is received can the application publish the next message.

Note that there is a trade-off here: increasing the publish window size can increase throughput, but it also increases the number of in-flight messages that could be lost (for example, due to a network outage) and need to be re-published.
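To see why the window matters so much over a WAN, you can bound throughput with simple sliding-window arithmetic: if at most W messages can be unacknowledged and each acknowledgement takes roughly one RTT to return, the publisher cannot exceed about W / RTT messages per second. The bash sketch below applies that bound; it ignores broker processing time and link bandwidth, and the 1 KB message size is a hypothetical value.

```shell
#!/usr/bin/env bash
# Rough upper bound on guaranteed-publish throughput when the publish window
# is the limit: at most WINDOW messages may be unacknowledged, and an ack
# takes about one RTT to arrive, so the rate is about WINDOW / RTT.
# Assumption: broker processing time and link bandwidth are ignored.
max_msgs_per_sec() {   # $1 = window size (messages), $2 = RTT in ms
  echo $(( $1 * 1000 / $2 ))
}

max_bytes_per_sec() {  # $1 = window, $2 = RTT in ms, $3 = message size in bytes
  echo $(( $1 * $3 * 1000 / $2 ))
}

# 200 ms RTT as in the NY <-> Singapore example; 1 KB messages are hypothetical
for window in 1 50; do
  echo "window=$window: ~$(max_msgs_per_sec "$window" 200) msgs/s," \
       "~$(max_bytes_per_sec "$window" 200 1024) bytes/s"
done
```

With the 200 ms RTT from our example, a window of 1 (the JMS case) caps the publisher at about 5 msgs/s, while the default window of 50 allows about 250 msgs/s.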

Enable Compression

This is an obvious one, but it is easily overlooked. Solace Event Broker supports compression: instead of sending the full payload, you can send a compressed payload and hence achieve higher throughput.

When configuring compression, you can select one of two modes: optimize-for-size and optimize-for-speed. Here is more information about each mode from the documentation:

“When a TCP listen port is configured to use compression, you can configure whether you want the event broker to compress the data in egress messages so that it is optimized for size or for transmission speed. In general, the optimize-for-size mode yields a higher compression ratio with lower throughput, while optimize-for-speed mode yields a higher throughput with lower compression ratio.”

Pick the optimize-for-speed mode for higher throughput!

I should add that compression is mostly useful for use cases where the disk is not the limiting factor. Compression has the biggest impact when the network is the bottleneck, as in direct messaging use cases such as market data distribution. With guaranteed messaging (persistence), the disk is often the bottleneck, so enabling compression might not have a significant impact.
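Before enabling compression, it can help to check how compressible your payloads actually are. The sketch below uses gzip at a fast level (-1) and a thorough level (-9) as a stand-in; this is an assumption for illustration, and the ratios it reports indicate general compressibility, not what the broker's optimize-for-speed or optimize-for-size modes will achieve. The sample payload is hypothetical; point report_compressibility at a file of your own messages instead.

```shell
#!/usr/bin/env bash
# Rough compressibility check for a sample of your own payloads.
# gzip is a stand-in (assumption): this says how compressible the data is in
# general, not the exact ratio the Solace broker will achieve.
compressed_size() {          # $1 = gzip level (1 = fastest, 9 = smallest), $2 = file
  echo $(( $(gzip "-$1" -c "$2" | wc -c) ))
}

report_compressibility() {   # $1 = file containing sample payloads
  local orig size level
  orig=$(( $(wc -c < "$1") ))
  for level in 1 9; do
    size=$(compressed_size "$level" "$1")
    # ratio x100 keeps bash integer math (350 means 3.5:1)
    echo "gzip -$level: $orig -> $size bytes (ratio x100 = $(( orig * 100 / size )))"
  done
}

# Hypothetical sample: repetitive market-data-style text
sample=$(mktemp)
for i in $(seq 1 2000); do
  printf 'SYM=ABC,BID=%d.25,ASK=%d.50,SEQ=%d\n' $((100 + i % 7)) $((101 + i % 7)) "$i"
done > "$sample"
report_compressibility "$sample"
```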

Tweak the Host TCP Settings

This is a very important one, and one that few people think of. Solace brokers run on top of the underlying host's TCP stack. The connections that publishers and subscribers establish with the broker are TCP connections, so naturally, tuning the TCP stack is crucial.

For Solace Event Broker appliances, Solace has already tuned everything as much as possible, so the only thing left to tune is the TCP Maximum Window Size, which can be configured at the broker level. For the software broker, you need to tune the TCP stack of the underlying host/VM as well as the TCP Maximum Window Size at the broker level.

As mentioned earlier, you are often looking to improve performance when the publisher or subscriber is in a different region than the broker. In such cases, your application connects over the WAN, so WAN tuning is required, which means modifying the TCP Maximum Window Size. The rule of thumb is to set the TCP Max Window Size to twice the Bandwidth Delay Product (BDP). BDP can be calculated as:

BDP (Bytes) = (max-bandwidth (bps) / 8) * RTT (µs) / 1,000,000

where RTT is round-trip time.

For our use case, we have a max bandwidth of ~900 KB/s (already in bytes per second, so no division by 8 is needed) with an RTT of 0.200 seconds (200 ms).

BDP = 900 KB/s × 0.200 s = 900 × 1024 × 0.200 = 184,320 bytes

TCP Max Window Size = 2 × BDP = 368,640 bytes = 368,640 / 1024 KB = 360 KB
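The arithmetic above is easy to script so you can redo it for other links. Below is a minimal bash sketch of the BDP formula and the 2 × BDP rule of thumb; the function names are hypothetical. Bandwidth is passed in bits per second to match the formula, so ~900 KB/s becomes 900 × 1024 × 8 = 7,372,800 bps.

```shell
#!/usr/bin/env bash
# BDP (bytes) = (max-bandwidth (bps) / 8) * RTT (us) / 1,000,000
# Multiply before dividing to keep integer precision in bash arithmetic.
bdp_bytes() {            # $1 = max bandwidth in bits/s, $2 = RTT in microseconds
  echo $(( $1 * $2 / 8 / 1000000 ))
}

# Rule of thumb from the text: TCP Max Window Size = 2 * BDP, reported in KB
tcp_max_window_kb() {    # same arguments as bdp_bytes
  echo $(( 2 * $(bdp_bytes "$1" "$2") / 1024 ))
}

bw_bps=$(( 900 * 1024 * 8 ))   # ~900 KB/s expressed in bits per second
rtt_us=200000                  # 200 ms RTT
echo "BDP: $(bdp_bytes "$bw_bps" "$rtt_us") bytes"
echo "TCP Max Window Size: $(tcp_max_window_kb "$bw_bps" "$rtt_us") KB"
```

For the NY to Singapore link, this prints a BDP of 184320 bytes and a TCP Max Window Size of 360 KB, matching the hand calculation.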

Once calculated, the TCP window size can be configured by following the instructions documented here. This change is made at the broker level.

You can go a step further and change the host-level TCP stack configuration. Here is a good source for understanding how TCP tuning works and what parameters such as rmem and wmem do.

After some further tuning, we updated the TCP stack of the underlying VM that hosts the software broker in Asia with the following commands, and throughput instantly improved by ~5 times!

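```
# Run as root; values written to /proc do not persist across reboots.
# Max socket receive/send buffer sizes: 68157440 bytes (65 MiB)
echo 68157440 > /proc/sys/net/core/rmem_max
echo 68157440 > /proc/sys/net/core/wmem_max
# TCP receive/send buffers in bytes: min, default (16 MiB), max (65 MiB)
echo "4096 16777216 68157440" > /proc/sys/net/ipv4/tcp_rmem
echo "4096 16777216 68157440" > /proc/sys/net/ipv4/tcp_wmem
# Restart networking (RHEL/CentOS-style service command)
service network restart
```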
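Values written to /proc this way take effect immediately but are lost on reboot. One common way to persist them is a drop-in file under /etc/sysctl.d/, which sysctl --system reloads. The sketch below renders the same four settings; the file name in the apply step is hypothetical.

```shell
#!/usr/bin/env bash
# Render the same TCP settings as the echo commands, in sysctl.conf syntax.
render_sysctl_conf() {
  cat <<'EOF'
# WAN tuning for the software broker host (same values as the /proc writes)
net.core.rmem_max = 68157440
net.core.wmem_max = 68157440
net.ipv4.tcp_rmem = 4096 16777216 68157440
net.ipv4.tcp_wmem = 4096 16777216 68157440
EOF
}

# Preview the file contents
render_sysctl_conf

# To apply as root (hypothetical file name), then reload all sysctl files:
#   render_sysctl_conf > /etc/sysctl.d/90-wan-tuning.conf && sysctl --system
```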

See this doc for more information on Tuning Link Performance for WANs.

Optimize Egress Queue Sizes

As you make the TCP-level changes mentioned above, you will achieve higher throughput, which means your consumers have to keep up with higher message rates. If they cannot, the broker will start discarding direct messages and queuing up guaranteed messages.

To eliminate or reduce the discards, you should understand how egress queues work in Solace.

“The message first passes through one of the five per-client priority data queues. A scheduler then selects it and places it into a single, per-client Transmission Control Protocol (TCP) transmit queue.”

(Source: Solace Docs)

The queue sizes can be modified to fit your use case. To reduce direct messaging discards, you can increase the D-1 queue size, which increases the number of messages that can be queued up for delivery to a consumer. More information on how to make the change is available here.

Additionally, you should tune the TCP stack of the hosts where consumer applications run, so they can handle the higher ingress throughput.
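To get a starting point for the D-1 queue size, you can estimate how far a consumer falls behind during a burst: if the publisher sends P msgs/s, the consumer drains C msgs/s, and the burst lasts T seconds, roughly (P - C) × T messages pile up. This is a simplified model that ignores the TCP transmit queue and socket buffers, and the rates below are hypothetical. Check the Solace documentation for the units the D-1 queue setting uses before converting the estimate.

```shell
#!/usr/bin/env bash
# Simplified burst model (assumption): messages that pile up when the
# publisher outpaces the consumer for a fixed period.
backlog_msgs() {   # $1 = publish rate (msgs/s), $2 = consume rate (msgs/s), $3 = burst length (s)
  local deficit=$(( $1 - $2 ))
  if [ "$deficit" -lt 0 ]; then
    deficit=0      # consumer keeps up: nothing accumulates
  fi
  echo $(( deficit * $3 ))
}

backlog_bytes() {  # same arguments plus $4 = average message size in bytes
  echo $(( $(backlog_msgs "$1" "$2" "$3") * $4 ))
}

# Hypothetical burst: 5,000 msgs/s for 10 s into a consumer handling 3,000 msgs/s
echo "~$(backlog_msgs 5000 3000 10) messages (~$(backlog_bytes 5000 3000 10 512) bytes at 512 B each)"
```

For the hypothetical burst above, the estimate is about 20,000 messages, or about 10 MB at 512 bytes each.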

Conclusion

As a solutions architect, I follow these steps whenever I am asked to help a customer squeeze more performance out of their event brokers. There is an art to implementing each step, since you have to tune several parameters to achieve optimal results, so feel free to tweak them for your use case as you go. I hope you found this post helpful.

As one of Solace's solutions architects, Himanshu is an expert in many areas of event-driven architecture, and specializes in the design of systems that capture, store and analyze market data in the capital markets and financial services sectors. This expertise and specialization is based on years of experience working at both buy- and sell-side firms as a tick data developer, where he worked with the popular time series databases kdb+ and OneTick to store and analyze real-time and historical financial market data across asset classes.

In addition to writing blog posts for Solace, Himanshu publishes two blogs of his own: enlist[q], focused on time series data analysis, and abitdeployed.com, which covers general technology and the latest trends. He has also published code samples on GitHub and kdb+ tutorials on YouTube!

Himanshu holds a bachelor of science degree in electrical engineering from City College at City University of New York. When he's not designing real-time market data systems, he enjoys watching movies, writing, investing and tinkering with the latest technologies.
