
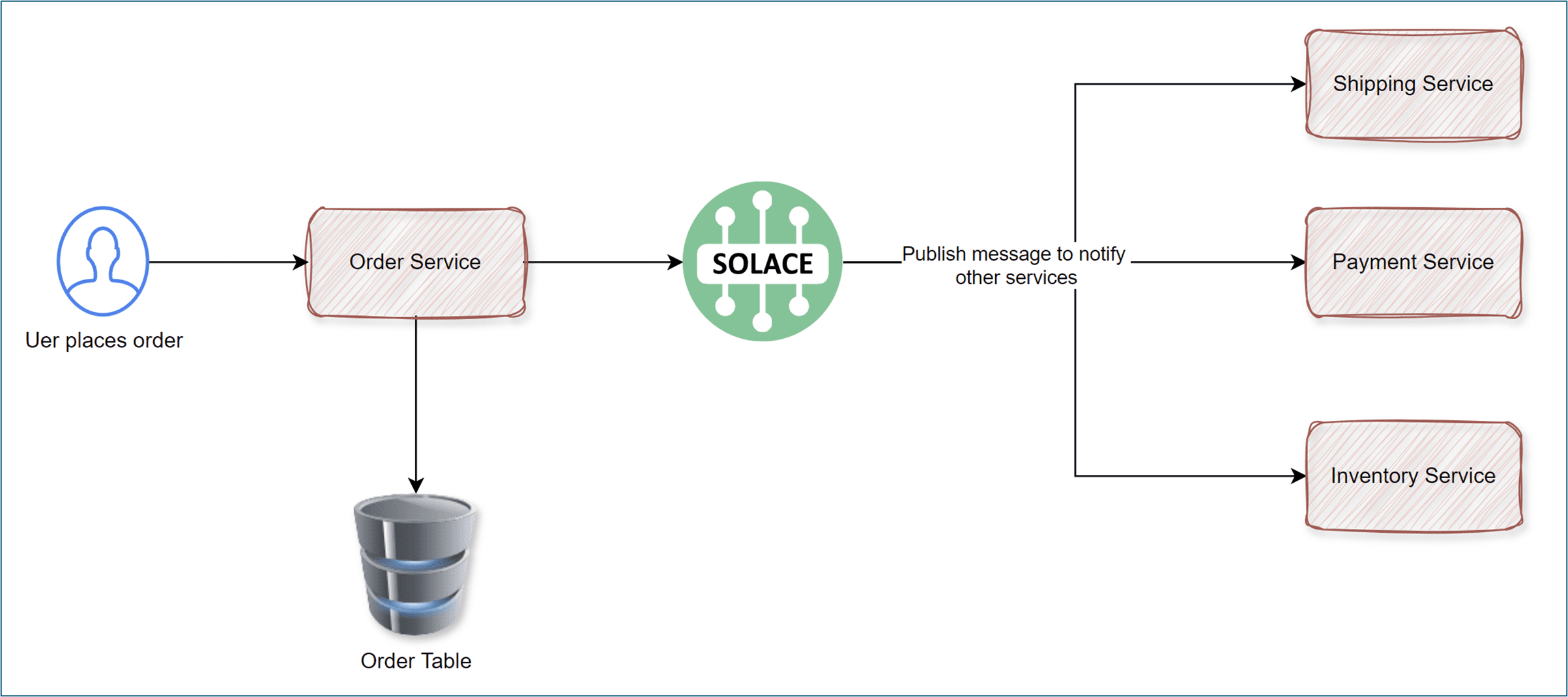

If you’ve designed large, scalable systems with event-driven design before, you’ve undoubtedly encountered the dual write problem. I have faced it first-hand in production environments, and it’s a genuine challenge. I have also heard war stories from many colleagues over the years about maintaining data consistency across systems.

In a microservices architecture, your services will need to share data. They can do so through synchronous REST HTTP calls, but to ensure resiliency, the preferred way is through event-driven architecture (EDA). Let’s take an online order service as an example. The architecture for the order service will look something like this:

The process in the order service would be as follows:

- The application must insert the new order in the database.

- It must then send an event to the Solace broker, which in turn relays it to the respective services.

All is rosy if both steps execute without a glitch, but a few things can go wrong in this process.


Introducing the Dual Write Problem

What if the application fails after the record is updated in the database? The database gets updated, but the notification was never sent out. How do you atomically update the database and send messages to a message broker and maintain consistency across systems?

Modifying the database and forwarding events to other services must be done reliably; otherwise, you risk either updating the database without notifying other services, or notifying other services without updating the database.
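To make the failure concrete, here is a minimal sketch of a naive dual write. It assumes an in-memory SQLite database and a hypothetical `FlakyBroker` class standing in for a real message broker; the `orders` table and the `orders/created` topic are also made up for illustration.

```python
import sqlite3


class BrokerUnavailable(Exception):
    """Raised by the stand-in broker when a publish fails."""


class FlakyBroker:
    """Hypothetical in-memory broker that can be told to fail on publish."""

    def __init__(self, fail: bool = False) -> None:
        self.fail = fail
        self.published: list = []

    def publish(self, topic: str, event: dict) -> None:
        if self.fail:
            raise BrokerUnavailable("broker not reachable")
        self.published.append({"topic": topic, **event})


def place_order(db: sqlite3.Connection, broker: FlakyBroker, order_id: int) -> None:
    # Write 1: commit the order to the database.
    with db:
        db.execute(
            "INSERT INTO orders (id, status) VALUES (?, ?)", (order_id, "NEW")
        )
    # Write 2: notify other services. Nothing ties this to write 1.
    broker.publish("orders/created", {"order_id": order_id})


def demo() -> tuple:
    """Return (rows in the database, events on the broker) after a failed publish."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT)")
    broker = FlakyBroker(fail=True)
    try:
        place_order(db, broker, 1)
    except BrokerUnavailable:
        pass  # the order row is already committed at this point
    rows = db.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
    return rows, len(broker.published)
```

Calling `demo()` leaves one committed order and zero published events, which is exactly the inconsistency described above. Swapping the order of the two writes only flips the failure mode: the event goes out, but the order may never be stored.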

Two Ways to Solve the Dual Write Problem

Transactions

Wrapping everything in a distributed transaction unfortunately just moves the problem elsewhere in the system. We avoid distributed transactions when building a system of microservices: they require locks, don’t scale well, and need all involved systems to be up and running at the same time.

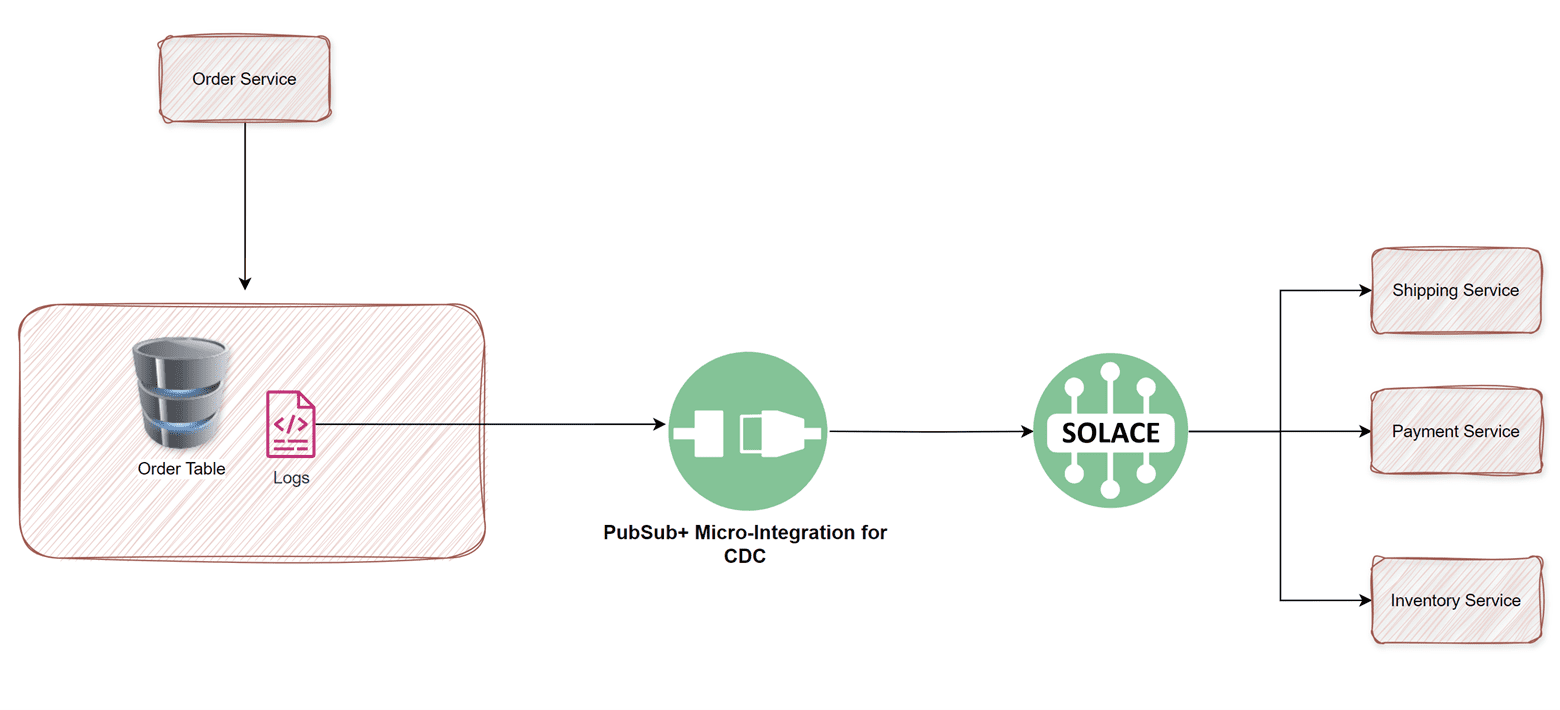

Change Data Capture

There are two elements of the change data capture approach:

- Binary logs/transaction logs: Databases record every change in a log, and it’s possible to react to the entries in that log. For example, MySQL has the binary log (binlog) and MongoDB has the oplog.

- A middleman to send the messages to the message broker: This service reads the binary logs/transaction logs and publishes the events to a message broker.

When an application saves data to the database, the CDC tool captures the change and ensures that the event is emitted reliably to the event broker. This is done independently of the application logic. Here’s how it works:

- Process the Order: The service processes the order and writes it to the database.

- Database Commit: After the transaction commits, the CDC tool captures the change.

- Emit Event: The CDC tool publishes the event to the event broker.
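The steps above can be sketched with a toy model. This is only an illustration of the idea, not how any particular CDC tool is implemented: the `Database` and `LogRelay` classes are hypothetical, the "transaction log" is a plain Python list, and `publish` is whatever callable you hand in to represent the broker.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Database:
    """Toy database: an orders table plus an append-only commit log."""
    orders: dict = field(default_factory=dict)
    log: list = field(default_factory=list)

    def insert_order(self, order_id: int, status: str) -> None:
        # In a real database the row and its log entry are written atomically.
        self.orders[order_id] = status
        self.log.append({"op": "insert", "order_id": order_id, "status": status})


@dataclass
class LogRelay:
    """Stand-in for the CDC tool: reads the log and publishes each entry."""
    db: Database
    publish: Callable[[dict], None]
    offset: int = 0  # index of the next log entry to publish

    def poll(self) -> int:
        """Publish all unread log entries; return how many were sent."""
        sent = 0
        while self.offset < len(self.db.log):
            self.publish(self.db.log[self.offset])  # may raise; offset stays put
            self.offset += 1  # advance only after a successful publish
            sent += 1
        return sent
```

The application only ever writes to the database. If `publish` raises because the broker is down, the offset does not move, so the next `poll()` retries the same entry. The trade-off is at-least-once delivery: a crash after publishing but before the offset is advanced would resend the event, so consumers should tolerate duplicates.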

How Solace Helps Solve the Dual Write Problem

Solace recently released a micro-integration product based on the Debezium open-source CDC platform, called Solace Micro-Integration for Debezium CDC. This micro-integration event-enables your operational databases by publishing their changes (inserts, updates, deletes, etc.) to a Solace Event Broker and your event mesh.
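To give a feel for what such a change looks like on the wire, here is a rough sketch that turns a Debezium-style change event into a topic and a message body. It assumes the event payload carries the `before`, `after`, `source` and `op` fields of Debezium's change event envelope, and that `source` includes `db` and `table` as it does for relational connectors such as MySQL. The `to_topic_and_body` function and the `cdc/...` topic hierarchy are invented for illustration and are not the micro-integration's actual mapping.

```python
# Debezium operation codes mapped to readable verbs (the verbs are our own choice).
OP_NAMES = {"c": "created", "u": "updated", "d": "deleted", "r": "snapshot"}


def to_topic_and_body(change: dict) -> tuple:
    """Map one change event payload to a (topic, body) pair."""
    source = change["source"]
    verb = OP_NAMES[change["op"]]
    # Deletes carry the old row in "before"; other operations use "after".
    row = change["before"] if change["op"] == "d" else change["after"]
    # Hypothetical topic hierarchy: cdc/<database>/<table>/<verb>
    topic = f"cdc/{source['db']}/{source['table']}/{verb}"
    return topic, row
```

Putting the database, table and operation in the topic lets subscribers filter with wildcards, for example receiving only deletes on one table, without parsing every message.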

You can also use the approach to:

- Incrementally Refresh Data Warehouse: CDC can be used to make incremental updates to a data warehouse.

- Enable Real-time Stream Processing: The technology can capture data changes in real-time, making it useful for real-time analytics applications.

- Train AI/ML Models: CDC captures the changes made to a source database so that AI/ML models can be updated with the new data.

- Reduce Processing Overhead: CDC only sends the data that has changed, which reduces processing overhead and network traffic.

- Synchronize Systems: CDC keeps systems in sync and facilitates zero-downtime database migrations.

- Reduce Load on the Main OLTP Storage: CDC can reduce the load on the primary transactional database or service, since consumers receive changes as events instead of querying it directly.
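Several of these uses, such as incremental warehouse refreshes and system synchronization, come down to applying a stream of change events to a downstream copy. A minimal sketch, assuming a simplified, hypothetical event shape with `op`, `key` and `row` fields and a plain dictionary as the replica:

```python
def apply_change(replica: dict, change: dict) -> None:
    """Apply one change event to a replica keyed by primary key."""
    key = change["key"]
    if change["op"] == "delete":
        replica.pop(key, None)  # deleting a missing key is a no-op
    else:
        # Inserts and updates are both handled as an upsert of the full row.
        replica[key] = change["row"]


def apply_all(replica: dict, changes: list) -> dict:
    """Apply change events in order and return the replica."""
    for change in changes:
        apply_change(replica, change)
    return replica
```

Because inserts and updates are upserts and deletes ignore missing keys, replaying the same ordered batch after a redelivery leaves the replica in the same state. Only the changed rows are touched, which is where the reduced processing overhead comes from.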

Conclusion

The most effective way to avoid the dual write problem is to:

- Split the communication into multiple steps and only write to one external system during each step.

- Asynchronously capture the database commits and emit the changes as events.

How are you mitigating the dual write problem? If you’d like to not only listen to yourself (pun intended) but also draw on our experience in building event-driven systems at scale, feel free to reach out.


As a solution architect for Solace, Abhishek is keen on how technology and software impact businesses and societies. His background is in the integration space and EDA, with diverse knowledge of both on-premises and cloud infrastructures. He has helped enterprises in the airline, telecommunications, and transportation and logistics industries. In his spare time, Abhishek enjoys spending time with his kid, having a go with his cricket bat, and reading.
