Earlier this week, Solace, in partnership with Arista and NetEffect, released the results of a joint effort to drive latency out of high-volume, high-speed market data configurations. The results drew a lot of attention in the financial services sector, not just because they were excellent but also because of the way we reported the benchmark: we provided the statistical data customers need to interpret the results in a meaningful way.
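The post does not list exactly which statistics were published. What follows is only a minimal sketch, assuming a common choice for latency reports: minimum, mean, median, high percentiles, maximum and standard deviation, computed from per-message end-to-end latencies. The helper name `summarize_latencies` and the sample data are hypothetical. The sketch shows why a single average is not enough: a small slow tail barely moves the mean but dominates the 99.9th percentile.

```python
import math
import statistics


def summarize_latencies(samples_us):
    """Summarize per-message end-to-end latencies given in microseconds.

    Hypothetical helper: the statistics chosen here are common in latency
    reports, not the exact set published for this benchmark.
    """
    if not samples_us:
        raise ValueError("need at least one latency sample")
    ordered = sorted(samples_us)
    n = len(ordered)

    def percentile(p):
        # Nearest-rank method: the smallest sample such that at least
        # p percent of all samples are less than or equal to it.
        rank = max(1, math.ceil(p * n / 100))
        return ordered[rank - 1]

    return {
        "count": n,
        "min": ordered[0],
        "mean": statistics.fmean(ordered),
        "median": percentile(50),
        "p99": percentile(99),
        "p99.9": percentile(99.9),
        "max": ordered[-1],
        "stdev": statistics.pstdev(ordered),
    }


# Illustrative, made-up data: 990 fast messages and a 1% slow tail.
samples = [20.0] * 990 + [500.0] * 10
for name, value in summarize_latencies(samples).items():
    print(f"{name:>6}: {value:,.1f}")
```

With this made-up data the mean is 24.8 µs and the 99th percentile is still 20 µs, while the 99.9th percentile and the maximum are 500 µs. Reporting the full distribution, rather than one headline number, is what lets a reader judge whether the tail fits their application.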

Far too many benchmarks cherry-pick and publish the good results and obscure the unflattering ones. Worse still are benchmarks designed solely to generate impressive performance numbers that bear no resemblance to anything a customer could actually deploy. Those benchmarks are a disservice to the industry. They waste the prospect’s time and put the vendor on the defensive, either when the prospect digs for the rest of the story or when the vendor fails to reproduce the same results in a proof of concept.

To this end, we’re excited about the good work the STAC Research Council is doing to verify and, where possible, standardize various financial benchmarks. We worked with STAC to test our guaranteed messaging offering in the spring. The results were excellent, and the STAC team was very professional and easy to work with. We are also actively working with STAC to help define STAC-M2, which will standardize how market data delivery benchmarks are measured. When this benchmark is released, we fully expect to support it and report our throughput and latency in accordance with its test suites. Because STAC-M2 is not yet finalized, we chose to run a highly transparent set of tests, designed by Solace, Arista and NetEffect in the spirit of the standard, that simulate real-life low-latency deployments. The tests were configured as follows:

For each test, all messages were 100 bytes in size and individually routed by topic in the Solace Content Router to many subscribers. All traffic flowed along this path:

Feed application->publisher API->NetEffect TCP offload hardware->Arista Ethernet Switch->Solace Content Router->Arista Ethernet Switch->NetEffect TCP offload hardware->subscriber API->consuming application.

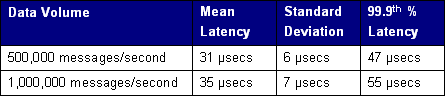
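The post does not describe how timestamps were taken. As a minimal sketch of one common approach, assuming the publisher and subscriber read synchronized clocks (either the same host, or hosts kept in sync by something like PTP), the publisher can stamp its send time into each 100-byte message and the subscriber can subtract it on receipt, so the measurement covers everything between the two APIs. The header layout and the function names `build_message` and `receive_latency_ns` are hypothetical.

```python
import struct
import time

MESSAGE_SIZE = 100  # bytes, matching the message size used in the tests
# Hypothetical header layout: network byte order, 64-bit sequence number,
# then 64-bit send time in nanoseconds since the epoch.
HEADER = struct.Struct("!QQ")


def build_message(seq):
    """Publisher side: stamp the send time into a fixed-size payload."""
    header = HEADER.pack(seq, time.time_ns())
    # Pad with zero bytes so every message is exactly MESSAGE_SIZE bytes.
    return header + bytes(MESSAGE_SIZE - HEADER.size)


def receive_latency_ns(message):
    """Subscriber side: return (sequence number, send-to-receive latency)."""
    seq, sent_ns = HEADER.unpack_from(message)
    return seq, time.time_ns() - sent_ns


# Loopback demonstration in one process; a real test would publish through
# the messaging API and compute the latency in the subscribing application.
msg = build_message(seq=1)
seq, latency = receive_latency_ns(msg)
print(len(msg), seq, latency)
```

The sequence number also lets the subscriber detect gaps or reordering, and the per-message latencies can then be aggregated into percentiles. Across two hosts, any clock offset between them shows up directly as measurement error, which is why clock synchronization is the key assumption here.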

Measurements were from API to API — what is commonly called an ‘end-to-end’ test. The results were as follows:

It’s important to point out that we were not aiming to test the maximum capabilities of any of these three products. For example, with just two blades installed, a single Solace router can process 10 million messages per second (5 million in/5 million out).

Instead, we chose these two test configurations because they represent two popular real-world scenarios: 500K messages per second is about 20% above the current peak load for all equities, futures and FX data in all US markets, and 1 million messages per second is about 20% above the current peak load for the OPRA feed. Technically, we could have run the benchmark with five OPRA feeds running through one Solace Content Router and Arista Ethernet switch, but that doesn’t represent real-world customer configurations.
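The load levels above come down to simple headroom arithmetic, which the short sketch below reproduces. The helper name `implied_peak` is hypothetical, and because the text says "about 20%", the implied peaks are approximations. It also assumes, as the 5 million in / 5 million out figure suggests, that inbound capacity is what limits how many OPRA-sized feeds a single two-blade router can take.

```python
HEADROOM = 0.20             # test rates are "about 20%" above current peak
ROUTER_INBOUND = 5_000_000  # msg/s in for a two-blade router (5M in / 5M out)

TEST_RATES = {
    "US equities, futures and FX": 500_000,
    "OPRA": 1_000_000,
}


def implied_peak(test_rate, headroom=HEADROOM):
    """Current peak load implied if test_rate is `headroom` above it."""
    return test_rate / (1 + headroom)


for feed, rate in TEST_RATES.items():
    print(f"{feed}: tested at {rate:,} msg/s, "
          f"implied peak ~{implied_peak(rate):,.0f} msg/s")

# How many OPRA-sized feeds fit within the router's inbound capacity.
opra_feeds = ROUTER_INBOUND // TEST_RATES["OPRA"]
print(f"OPRA-sized feeds per router: {opra_feeds}")
```

This prints implied current peaks of roughly 416,667 and 833,333 messages per second, and five OPRA-sized feeds per router, which is the five-feed configuration the tests deliberately did not use.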

Yes, ideally we’d have had performance measured and validated by a third party, but we didn’t want to keep this hot and very timely configuration out of the hands of customers who could benefit from it right now. If we had waited for the standardized benchmarks and then commissioned testing, it is not clear when we could have published figures that would undoubtedly be very close to these. That’s why Solace, Arista and NetEffect chose to keep each other honest: we made sure the configuration could easily be reproduced in a customer lab or data center with the same equipment, and we published enough data for customers to understand what we did.

We would like to see all vendors of low-latency products lift the veil and improve the transparency of their performance benchmarks. We are all in business to make our customers’ lives easier, not harder!

Solace