If you’re like most IT technologists or leaders, you’re on a mission to make your systems more responsive, more real-time and more easily scalable. You’ve probably recognized that event-driven architecture is the best way to make that happen, but since you’re here I’m guessing you have questions: how to get started; how to overcome challenges you’ve run into; or how to put in place a surefire strategy for event-driven success.

Over the last 20 years, Solace has helped many of the world’s best businesses embrace event-driven architecture as part of their digital transformation initiatives – yes, since before either of those was a hot term. I’m proud to say I’ve been part of many of those successful transformations, and I’d like to introduce you to a high-level three-step strategy we’ve applied many times.

Setting the Stage: The Stagnation of Legacy Infrastructure

If your company is like most, the applications that power your business – many of which have been doing their thing for years or decades – run in diverse environments including on-premises datacenters, field offices, maybe even manufacturing floors and retail stores. The lack of compatibility and connectivity between these environments (commonly shorthanded as “siloed”) forces your applications to communicate through tightly coupled synchronous request/reply calls, individually customized batch-oriented ETL processes, or even bespoke integrations.

There are two ways this kind of integration causes stagnation that hampers innovation and responsiveness throughout your business:

- Rigid systems and complex interactions: The tight coupling of applications and bespoke integrations kill agility by making it hard to add new applications and runtime environments to your infrastructure, hard to modify processes to meet shifting business needs, and hard to dynamically scale systems up and down in response to demand.

- Stale data and slow responses: The request/reply and batch-oriented exchange of data means that when something happens, information about that event sits waiting for a cron job, or for somebody to ask for it, before your business can react to it or factor it into business decisions. Trying to reduce that time lag or distribute the information to more systems typically results in unacceptable scaling challenges on your systems of record.

You know you need to upgrade your architecture to support real-time event-driven interactions in order to become agile and responsive and to take advantage of technologies like the cloud, IoT, microservices and whatever comes next, but replacing applications en masse isn’t an option due to cost, risk and time. So how do you do that?

3 Steps to Success: Becoming an Event-Driven Enterprise

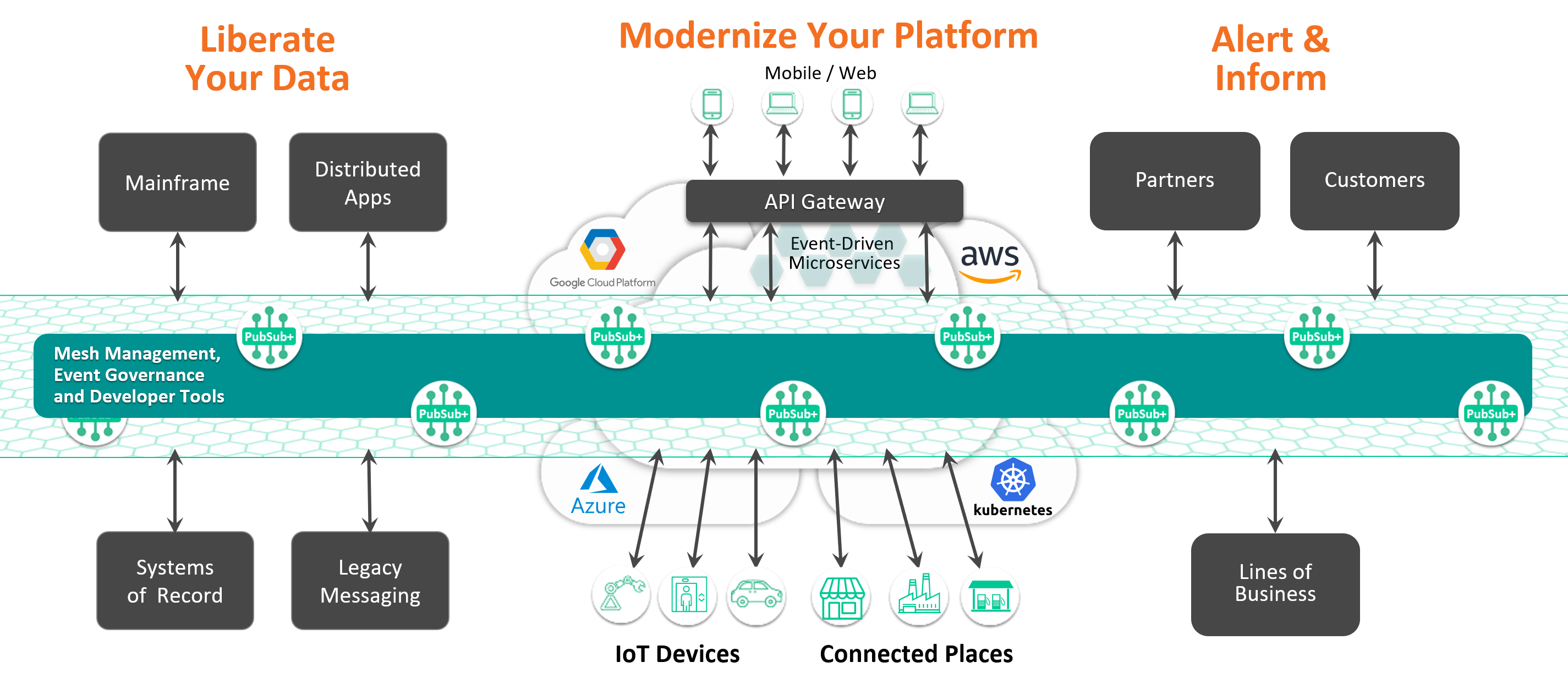

At a high level, if your organization wants to become an event-driven enterprise, you need to:

- Event-enable your existing systems so they can produce events, listen for and act on them

- Modernize your platform to support streaming across environments

- Alert & inform internal and external stakeholders including employees, customers and partners

Step 1: Event-Enable Your Existing Systems

The first step is to break down silos and liberate data by letting your applications publish events as they happen, and listen for and act on them.

To have the most business impact, you need to start with the right systems. Think about the “crown jewels” of your application stack – those at the core of your business that contain the information other systems across your ecosystem want access to, and that you’ve probably front-ended with increasingly overworked APIs. Your goal should be to liberate data by immediately exposing changes in these systems to any applications that want to know about them and are authorized to receive that information.

- In capital markets, firms realized long ago that distributing market data in real-time would dramatically increase order flow – and thus revenue – so their crown jewels are market data distribution systems.

- For credit card companies and payment processors, the crown jewels are those that manage transactions and customer information.

- For manufacturers, it’s their ERP system, often SAP.

- In the airline business, it’s passenger, baggage & boarding systems.

- In the retail sector, the crown jewel systems are those that manage inventory and point of sale.

You enable those systems first because they contain the data many other applications need or that your people can innovate with. How exactly do you do that though? Different kinds of systems require different approaches:

- For your SAP systems of record, our partner ASAPIO provides technology that can extract events in real-time and stream them onto an event mesh.

- You can give your existing middleware products the ability to send information to your event mesh using messaging bridges.

- You can connect your mainframe applications to your event mesh using bridges into MQ; by polling the applications for changes once and publishing those changes to the event mesh; or by using JDBC connectors or CDC software to capture changes from your application databases. In some cases, our clients have modified their mainframe applications to publish directly to the event mesh because the information was so critical to expose broadly to the rest of their systems.

- Some existing applications and ESBs can connect directly to your event mesh using Solace JCA, JMS or REST.

- You can liberate data that’s currently only accessible via synchronous RESTful request/reply interactions by publishing it to your event mesh using PubSub+ in microgateway proxy mode.

The goal across the board is to get these events streaming in real-time between those legacy systems and your modern microservices, analytics and SaaS applications.

The first advantage of doing so is that it gets information streaming to existing consumers in real-time, which can enable more responsive customer service, faster resolution of problems and smarter decisions. The real power, however, is that you can now give as many systems or lines of business as you want access to the information, without ever worrying whether or how doing so will affect the core systems or others accessing them. This can make a huge difference to your business because it fosters innovation by making this information far easier to access.
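The mainframe option of polling for changes once and publishing them to the event mesh can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a Solace connector: `fetch_changes_since(cursor)` is a hypothetical stand-in for a query against the system of record (assumed to expose a monotonically increasing change number), `publish(topic, payload)` is a hypothetical stand-in for an event mesh client, and the `legacy/<entity>/updated/<key>` topic scheme is invented for the example.

```python
import json
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class ChangeRecord:
    seq: int    # monotonically increasing change number (assumed to exist)
    entity: str
    key: str
    data: dict


def poll_and_publish(
    fetch_changes_since: Callable[[int], Iterable[ChangeRecord]],
    publish: Callable[[str, bytes], None],
    cursor: int,
) -> int:
    """Read new changes from the system of record once and publish each as an event.

    Returns the updated cursor so the next poll only sees newer changes.
    """
    for change in sorted(fetch_changes_since(cursor), key=lambda c: c.seq):
        # Hypothetical topic scheme: legacy/<entity>/updated/<key>
        topic = f"legacy/{change.entity}/updated/{change.key}"
        payload = json.dumps(
            {"seq": change.seq, "key": change.key, "data": change.data}
        ).encode("utf-8")
        publish(topic, payload)
        cursor = change.seq  # advance the cursor only after publish() returns
    return cursor


# Usage with in-memory stand-ins for the legacy table and the mesh client.
table = [
    ChangeRecord(1, "account", "A1", {"balance": 100}),
    ChangeRecord(2, "account", "A2", {"balance": 50}),
]
sent = []


def fetch(since):
    return [c for c in table if c.seq > since]


cursor = poll_and_publish(fetch, lambda topic, payload: sent.append((topic, payload)), 0)
```

Because the bridge reads each change once and the mesh fans it out, adding consumers adds no load on the system of record. If `publish` raises partway through a batch, the caller still holds the previous cursor, so those changes are re-sent on the next poll (at-least-once delivery).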

Step 2: Modernize Your Platform

For a while, some thought hybrid cloud and multi-cloud architectures were a stepping stone to moving everything to the public cloud; most now believe hybrid and multi-cloud architectures are actually an end state.

As Santhosh Rao, Senior Director Analyst, said in March 2019 at the Gartner IT Symposium/Xpo in Dubai:

“Hybrid architectures will become the footprints that enable organizations to extend beyond their data centers and into cloud services across multiple platforms.”

That means you need a way to stream events across multiple simultaneously active runtime environments, and even be able to shift workloads between them depending on which offers the best performance, cost or availability at a given time.

With that in mind, the second step to becoming an event-driven enterprise is modernizing your application environment so you can take advantage of more dynamic cloud infrastructure (public, private or often both) to build more agile microservices. You want to integrate with SaaS applications that you don’t need to manage yourself, and take advantage of innovative platforms in public clouds to support machine learning, analytics and other functions. You probably have a strategy for that beyond your event-driven initiatives, but face a few key challenges:

- How do you extend the flow of events from the systems you tapped in step 1 to your new cloud-based applications? And how do you connect the rest of your ecosystem, such as your factories, restaurants, elevators, vehicles, gas stations, and stores into this event-driven world?

- How do application developers discover these events from your systems of record, your IoT devices or your field locations to build new applications? How do you govern and track which applications have access to what information? And how do you evolve your event definitions over time and communicate changes or assess the impact of change?

The first set of questions, about connectivity and distribution, is addressed by an event mesh – a system of interconnected event brokers, usually running in different environments, which distributes events produced in one part of your enterprise, such as your non-cloud systems of record, to applications running anywhere else. An event mesh routes real-time events in a lossless, secure, robust and performant manner, and does it dynamically without configuration – like a corporate Internet of events. An event mesh can also link IoT devices and extend to locations such as stores, restaurants and manufacturing sites.
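To make the idea of interconnected brokers concrete, here is a toy in-memory model. It is a sketch under simplifying assumptions (exact-match topics, a fully meshed set of brokers, no persistence or failure handling) and not how PubSub+ is implemented; the `Broker` class and its methods are hypothetical. Each broker advertises its local subscriptions to its peers, so an event published at one site reaches subscribers attached to any other broker without per-route configuration.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[str, str], None]


class Broker:
    """Hypothetical toy broker: delivers locally and forwards to interested peers."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.local: dict[str, list[Handler]] = defaultdict(list)
        self.peers: list["Broker"] = []
        # topic -> peer brokers that have at least one local subscriber for it
        self.remote_interest: dict[str, set["Broker"]] = defaultdict(set)

    def link(self, other: "Broker") -> None:
        self.peers.append(other)
        other.peers.append(self)
        # Exchange existing subscriptions so neither side needs static routes.
        for topic in self.local:
            other.remote_interest[topic].add(self)
        for topic in other.local:
            self.remote_interest[topic].add(other)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self.local[topic].append(handler)
        # Advertise the new interest to every directly linked peer.
        for peer in self.peers:
            peer.remote_interest[topic].add(self)

    def publish(self, topic: str, payload: str) -> None:
        self._deliver_local(topic, payload)
        # Full-mesh assumption: one hop reaches every interested broker.
        for peer in self.remote_interest.get(topic, set()):
            peer._deliver_local(topic, payload)

    def _deliver_local(self, topic: str, payload: str) -> None:
        for handler in self.local.get(topic, []):
            handler(topic, payload)


# An on-prem broker, a cloud broker and a store broker, fully meshed.
onprem, cloud, store = Broker("onprem"), Broker("cloud"), Broker("store-42")
onprem.link(cloud)
onprem.link(store)
cloud.link(store)

received = []
cloud.subscribe("orders/created", lambda t, p: received.append(("cloud", p)))
store.subscribe("orders/created", lambda t, p: received.append(("store", p)))
onprem.publish("orders/created", "order 1001")
```

The publisher on the on-prem broker never names the cloud or store brokers; interest flows toward the publisher and events flow back along it. Production event meshes add wildcard subscriptions, guaranteed delivery, multi-hop routing and loop prevention, none of which this toy attempts.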

The second set of questions boils down to the management of events. As mentioned above, you need a way for developers to find what events are being produced, what information they carry and how to access them. Your architects need to understand application interactions, choreography and information flow. Your data governance team needs to know what systems produce and consume what information. This is where an event portal comes in. An event portal provides a single source of truth to understand and evolve everything about your events – the schemas of the data they carry and their relationships with applications across your entire enterprise.
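An event portal is a product in its own right, but the core bookkeeping described above (which applications produce and consume which events, what fields those events carry, and who is affected by a change) can be modelled simply. The `EventType` and `EventCatalog` classes below are hypothetical illustrations, not the API of any event portal. They treat a removed field or a changed field type as a breaking change and an added field as non-breaking, which is a simplifying assumption.

```python
from dataclasses import dataclass, field


@dataclass
class EventType:
    name: str
    version: int
    fields: dict[str, str]  # field name -> type name
    producers: set[str] = field(default_factory=set)
    consumers: set[str] = field(default_factory=set)


class EventCatalog:
    """Hypothetical single source of truth for event definitions and their users."""

    def __init__(self) -> None:
        self._events: dict[str, EventType] = {}

    def register(self, event: EventType) -> None:
        self._events[event.name] = event

    def add_consumer(self, event_name: str, app: str) -> None:
        self._events[event_name].consumers.add(app)

    def find_by_field(self, field_name: str) -> list[str]:
        """Discovery: which events carry a given piece of information?"""
        return sorted(e.name for e in self._events.values() if field_name in e.fields)

    def impact_of_change(self, event_name: str, new_fields: dict[str, str]) -> dict:
        """Report breaking field changes and the consuming apps they would affect."""
        current = self._events[event_name]
        removed = sorted(set(current.fields) - set(new_fields))
        retyped = sorted(
            f for f in current.fields.keys() & new_fields.keys()
            if current.fields[f] != new_fields[f]
        )
        breaking = bool(removed or retyped)
        return {
            "breaking": breaking,
            "removed": removed,
            "retyped": retyped,
            "affected_apps": sorted(current.consumers) if breaking else [],
        }


catalog = EventCatalog()
catalog.register(EventType("OrderCreated", 1, {"order_id": "string", "amount": "decimal"},
                           producers={"erp"}))
catalog.add_consumer("OrderCreated", "billing")
catalog.add_consumer("OrderCreated", "analytics")

# Proposed change: rename "amount" to "total".
report = catalog.impact_of_change("OrderCreated", {"order_id": "string", "total": "decimal"})
```

The same records answer the developer's question (what events exist and what they carry), the architect's (who talks to whom) and the governance team's (who consumes what), which is the point of keeping them in one place.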

With an event mesh seamlessly distributing events between applications, devices and user interfaces across cloud, on-prem and IoT environments, and an event portal making it easy to collaboratively manage all of those events and event-driven applications, it’s time for step 3.

Step 3: Alert and Inform

Now that your architecture is real-time and event-driven, and you’ve liberated data from closed systems and silos, you can interact with partners, suppliers and customers in an event-driven manner by alerting them about events of interest, and ideally receiving events from them. By eliminating batch-based ETL/FTP transfers, as well as the load and lag of constant API polling by your partners, event-driven interaction extends the benefits of real-time responsiveness beyond your IT systems.

Examples from our client base include:

- Manufacturers that interact in real-time with suppliers to maintain a more accurate and up to date view of their supply chain.

- Consumer packaged goods companies that immediately push updated catalogs to their distributors as changes are made to them.

- Companies that provide information dashboards to their own clients, who now want changes streamed to them in real-time so they can more easily integrate that data into broader dashboards and analytics.

For use cases such as these, you want to asynchronously push events to third-party applications, ideally without needing to support software connectors or libraries. This is where open standards play an important role, and why Solace supports several options:

- For low-rate event notifications, such as the catalog example above, a good option is REST with our webhook functionality. When an event is to be delivered, the Solace event mesh can issue an HTTP POST to the third party’s web server or API gateway, notifying them in a simple, interoperable manner.

- For higher-rate streaming event delivery, MQTT and AMQP are great options that can be used to deliver events in such a way that consumers can use open source or vendor client libraries to consume them.
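The webhook option comes down to an HTTP POST that carries the event. The sketch below shows what such a request could look like using only Python's standard library. The partner URL, the JSON envelope and the `X-Event-Topic` header are hypothetical choices for illustration, not Solace's delivery format. Building the request is kept separate from sending it so its shape can be inspected without network access.

```python
import json
import urllib.request


def build_webhook_request(url: str, topic: str, event: dict) -> urllib.request.Request:
    """Wrap an event in a JSON body and address it to a partner's webhook URL.

    The envelope ({"topic", "event"}) and the X-Event-Topic header are
    hypothetical conventions for this sketch, not a Solace wire format.
    """
    body = json.dumps({"topic": topic, "event": event}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json", "X-Event-Topic": topic},
    )


def deliver(req: urllib.request.Request, timeout: float = 5.0) -> int:
    """POST the event and return the HTTP status code.

    urlopen raises urllib.error.HTTPError for 4xx/5xx responses; a real
    deliverer would catch that and retry with backoff.
    """
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.status


# Build (but do not send) a catalog-change notification for a hypothetical partner.
req = build_webhook_request(
    "https://partner.example.com/hooks/catalog",
    "catalog/updated",
    {"sku": "12345", "price": 9.99},
)
```

Plain JSON over HTTP lets the partner receive events with nothing more than a web server or API gateway. At higher rates, one HTTP request per event becomes costly, which is where an MQTT or AMQP client subscribing to a topic is the better fit.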

Conclusion

These three steps approximate the journey we’ve been on with many of our clients. The key, as with most transformation projects, is to start small but with an end architecture in mind, get some experience and wins, and then learn, adapt, and take the next step. I hope this is useful to you and wish you good luck in your journey to becoming an event-driven enterprise!


Shawn McAllister