MakeUofT is Canada’s largest makeathon. Like a hackathon, it’s a place where projects come to life, but a makeathon focuses on hardware: students build something from scratch by integrating hardware and software.

The theme for MakeUofT 2020 was connectivity and machine learning. As a prime sponsor of the event, Solace challenged participants to make the best use of Solace PubSub+ Event Broker to stream events and information across cloud, on-premises, and IoT environments.

Alok Deshpande, a University of Toronto graduate in Electrical Engineering, took part in MakeUofT 2020 with his teammates and chose Solace’s technology for their project. Below, he shares how the team designed and developed it.

Inspiration for the Project

Coronavirus is all you hear about in the news right now, which is understandable given that it’s a worldwide pandemic. However, emergencies such as natural disasters are still happening, even if you don’t hear about them as much. Delays in notifying the authorities about them can cost lives and resources, especially at times like these.

Natural disasters in remote areas, in particular, can go unnoticed for personal and political reasons. For example, last June wildfires here in Canada were allowed to burn for extended periods because there weren’t enough staff available to monitor them. Satellite imaging helps with detection, but automatic, real-time notifications from disposable sensors in the field could clearly have provided additional support.

Sounds like a job for… Solace PubSub+ Event Broker!

The Telus-Telyou Project

Back in February, I worked with a team at MakeUofT 2020 to develop a proof of concept of a monitoring service for this purpose, which we called Telus-Telyou. The goal was to build connected sensor nodes that would automatically trigger warnings on connected endpoints, each serving a specific use. For example, in the case of fire detection, the endpoint might belong to a fire department.

There were three main requirements in this project:

- The nodes should transmit over a cellular connection to enable reliable, long-distance monitoring.

- Many nodes should be able to stream notifications to many endpoints.

- To keep the nodes low-power, events would be recorded in low resolution until an end user requested more detail.

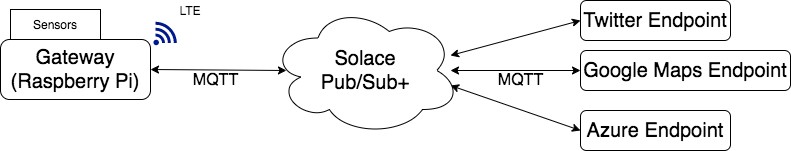

Fig. 1: General concept diagram

As shown in the diagram above, the connected nodes would transmit data to the cloud, to which multiple users could subscribe to receive notifications. Users could also publish messages to configure the nodes.

Our Design

Given the resources available at the makeathon, my team and I decided that the sensor data could simply be temperature, humidity, GPS readings, and photos from a camera. A gateway computer would collect these and transmit them over LTE.

On the other end, we chose three endpoints to simulate real-world clients in this proof of concept: social media (Twitter), mapping (Google Maps), and cloud computing (Microsoft Azure). We chose the cloud service in particular because it would let us integrate AI models that make predictions from the raw sensor feed, as described in the section below on extending the project. Mapping was, of course, included so that the end user could identify the location of the hazard.

Fig. 2: Diagram of the system architecture

Between the nodes and the endpoints, we placed an event broker to handle the messaging, which addressed the second and third requirements. After looking into some options (including Azure’s own broker), we decided that Solace PubSub+ Event Broker was the way to go because it wouldn’t tie the project to any particular service.
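PubSub+ Event Broker supports MQTT, and the Raspberry Pi code later in this post publishes to topics of the form `nodes/<node_ID>/<measurement>/<stream>`. Many-to-many delivery then comes from subscription filters with wildcards: `+` matches exactly one topic level and `#` matches everything below a level. The broker does this matching itself; the minimal sketch below re-implements the MQTT filter rules in plain Python only to show which endpoint would receive which message. The endpoint names and filters are hypothetical examples, not the project's actual subscriptions.

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT topic matches a subscription filter.

    '+' matches exactly one level; '#' (valid only as the last level) matches
    its parent level and everything below it. Simplification: the special rule
    for topics starting with '$' is ignored.
    """
    f_levels = topic_filter.split("/")
    t_levels = topic.split("/")
    for i, f in enumerate(f_levels):
        if f == "#":
            return True  # multi-level wildcard swallows the rest of the topic
        if i >= len(t_levels):
            return False  # filter has more levels than the topic
        if f != "+" and f != t_levels[i]:
            return False  # literal level mismatch
    return len(f_levels) == len(t_levels)


# Hypothetical endpoint subscriptions: each endpoint picks the slice of the stream it needs
subscriptions = {
    "fire_department": "nodes/+/temp/measure",   # every node's temperature
    "map": "nodes/+/lat/measure",                # every node's latitude
    "maintenance": "nodes/+/+/diagnostics",      # faults from any sensor on any node
    "node_3_dashboard": "nodes/3/#",             # everything from node 3
}

topic = "nodes/3/temp/measure"
receivers = [name for name, f in subscriptions.items() if topic_matches(f, topic)]
print(receivers)  # ['fire_department', 'node_3_dashboard']
```

Because publishers and subscribers only share topic names, adding a new endpoint means adding a subscription, with no change to the nodes.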

Building Telus-Telyou

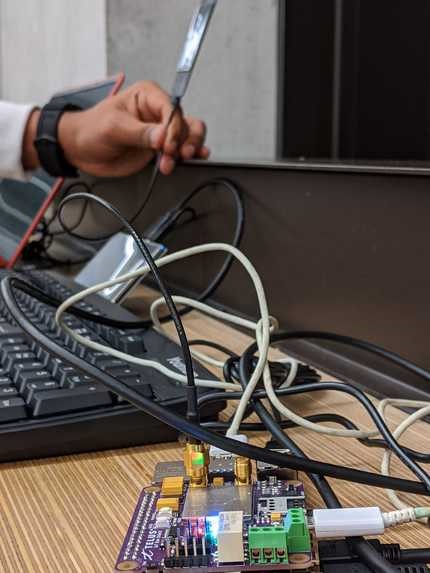

To physically build the project, we used a Raspberry Pi as the gateway, connected to a Telus LTE modem shield and a RaspiCam camera. My teammates interfaced the sensors on the HAT and the camera with the Pi using the provided APIs. We then configured the modem for a point-to-point (PPP) connection so that we could communicate with PubSub+ Event Broker over TCP.

Fig. 3: Hardware – Raspberry Pi and LTE shield

After this came the fun part – setting up the event broker!

I used the Paho MQTT client to set up the Raspberry Pi to continuously publish the temperature, humidity, and GPS data, since these are tiny payloads.

```python
import json

import numpy as np
import paho.mqtt.client  # paho-mqtt 1.x API (2.x changed the constructor and callback signatures)


class IoT_Client(paho.mqtt.client.Client):

    def __init__(self, node_ID):
        # clean_session=False asks the broker to keep this node's subscriptions across
        # reconnects; paho requires a non-empty client ID in that case (ID format is illustrative)
        super().__init__(client_id=f"node-{node_ID}", clean_session=False)
        self.publish_detailed = False
        self.node_ID = node_ID  # User-defined
        # A dictionary of requested measurements, with numeric keys and string identifiers as values
        self.sensor_data_enum = {idx: type_str for idx, type_str in enumerate(['temp', 'humidity', 'lat', 'longitude'])}

    def on_connect(self, client, userdata, flags, rc):
        # Subscribe on every (re)connect so requests for detailed data are received
        self.subscribe(f"nodes/{self.node_ID}/detailed_request", 0)

    def add_measurement(self, meas_name: str):
        # Keys run 0..n-1, so the next free key is the current length
        self.sensor_data_enum[len(self.sensor_data_enum)] = meas_name

    def getPubTopic(self, datatype_idx, event_stream):
        return f"nodes/{self.node_ID}/{self.sensor_data_enum[datatype_idx]}/{event_stream}"

    def getPhotoPubTopic(self, event_stream):
        return f"nodes/{self.node_ID}/camera/{event_stream}"

# ......

try:
    # Get sensor feed continuously. Could be modified to pause conditionally
    while True:
        # Get sensor data from wrapper. Input is the dictionary of requested measurements.
        # Returns sentinel NaN if a measurement type is absent or the measurements are faulty
        sensor_data, timestamp = getSensorData(iot_client.sensor_data_enum)

        # For each measurement type in the data requested: if faulty, publish diagnostics...
        for idx, val in enumerate(sensor_data):
            if np.isnan(val):  # NaN never equals itself, so `val == np.nan` is always False
                iot_client.publish(iot_client.getPubTopic(idx, 'diagnostics'), json.dumps({"error": 'Absent or faulty'}))
            else:
                # ...otherwise publish the measurement
                payload = {"node": iot_client.node_ID, "timestamp": timestamp, iot_client.sensor_data_enum[idx]: float(val)}
                payload = json.dumps(payload, indent=4)
                iot_client.publish(iot_client.getPubTopic(idx, 'measure'), payload)

        # .......
finally:
    # Runs however the loop exits (e.g. Ctrl+C), so the network loop and connection are closed
    iot_client.loop_stop()
    iot_client.disconnect()
```
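Since `getSensorData` isn't shown, one way to exercise the publishing logic without hardware or a broker is a stand-in that follows the same contract (one reading per requested measurement, NaN when absent or faulty). The sketch below is hypothetical: `fake_get_sensor_data` and `route_readings` are illustrative names, and `route_readings` only returns the `(topic, payload)` pairs the loop would publish. It also shows why the NaN check has to use `isnan`.

```python
import math
import time


def fake_get_sensor_data(sensor_data_enum, available):
    """Hypothetical stand-in for the project's getSensorData wrapper.

    Returns one reading per requested measurement (in key order) plus a timestamp;
    anything missing from `available` comes back as the NaN sentinel.
    """
    readings = [available.get(sensor_data_enum[idx], math.nan) for idx in sorted(sensor_data_enum)]
    return readings, time.time()


def route_readings(node_id, sensor_data_enum, readings, timestamp):
    """Mirror the publishing loop, returning (topic, payload) pairs instead of publishing."""
    messages = []
    for idx, val in enumerate(readings):
        name = sensor_data_enum[idx]
        if math.isnan(val):  # `val == math.nan` would always be False
            messages.append((f"nodes/{node_id}/{name}/diagnostics", {"error": "Absent or faulty"}))
        else:
            messages.append((f"nodes/{node_id}/{name}/measure",
                             {"node": node_id, "timestamp": timestamp, name: val}))
    return messages


enum = {0: "temp", 1: "humidity", 2: "lat", 3: "longitude"}
# Humidity is deliberately missing, simulating a faulty sensor
readings, ts = fake_get_sensor_data(enum, {"temp": 21.5, "lat": 43.66, "longitude": -79.39})
for topic, payload in route_readings(3, enum, readings, ts):
    print(topic, payload)
```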

I also set up the Raspberry Pi to subscribe to requests for photos: since photos are much larger messages, they should only be sent infrequently.

```python
import base64

# .......

# Set flag to publish more detailed information only when a request arrives
# (paho passes the IoT_Client instance as `client`)
def on_message(client, userdata, msg):
    client.publish_detailed = True

# ......

iot_client.on_message = on_message

# .....

# Get sensor feed continuously. Could be modified to pause conditionally
while True:
    # ......

    # Publish more detailed messages (just photo stream, for now)
    if iot_client.publish_detailed:
        iot_client.publish_detailed = False  # Clear flag once request acknowledged
        image_contents_arr = getImage()  # Wrapper to take image using RaspiCam and read from file
        # Raw bytes are not JSON-serializable, so Base64-encode them into an ASCII string
        im_b64 = base64.b64encode(bytes(image_contents_arr)).decode("ascii")
        im_payload = json.dumps({"node": iot_client.node_ID, "timestamp": timestamp, "camera": im_b64}, indent=4)
        iot_client.publish(iot_client.getPhotoPubTopic("measure"), im_payload)

    # .......
```
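`json.dumps` cannot serialize a `bytearray`, so a photo has to be turned into text before it goes into a JSON payload. A minimal sketch of the round trip between the Pi and a subscriber, assuming Base64 as the encoding (a common choice, not necessarily what the project's repository uses); `encode_photo_payload` and `decode_photo_payload` are hypothetical helper names:

```python
import base64
import json


def encode_photo_payload(node_id, timestamp, image_bytes):
    """Publisher side (on the Pi): pack raw image bytes into a JSON string."""
    return json.dumps({
        "node": node_id,
        "timestamp": timestamp,
        # Base64 maps arbitrary bytes to ASCII text that JSON can carry
        "camera": base64.b64encode(image_bytes).decode("ascii"),
    })


def decode_photo_payload(payload):
    """Subscriber side: recover the node ID, timestamp and raw image bytes."""
    msg = json.loads(payload)
    return msg["node"], msg["timestamp"], base64.b64decode(msg["camera"])


# A few placeholder bytes standing in for a JPEG file
fake_jpeg = b"\xff\xd8\xff\xe0 fake image data \xff\xd9"
payload = encode_photo_payload(7, 1581800000, fake_jpeg)
node, ts, image = decode_photo_payload(payload)
assert image == fake_jpeg
```

Base64 emits 4 characters for every 3 bytes, so each photo grows by roughly a third on the wire, one more reason to send photos only on request.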

Specifically, these requests would come from the end client, which would publish them based on the end user’s interaction (not shown here). For this use case, we assumed that the end user would review the sensor data and decide, based on how likely an emergency seemed, whether to request a photo stream.
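The end-client side isn't shown in the post, so the following is only a hypothetical sketch. It assumes the client highlights suspicious nodes for the user and, once the user confirms, publishes to the `nodes/<node_ID>/detailed_request` topic the Pi subscribes to. The thresholds, the payload body, and the helper names are all illustrative assumptions.

```python
import json
import math


def should_flag_node(temp, humidity, temp_threshold=45.0, humidity_threshold=20.0):
    """Hypothetical heuristic: flag hot, dry readings for the user's attention."""
    if math.isnan(temp) or math.isnan(humidity):
        return False  # an absent or faulty reading is a diagnostics issue, not an alert
    return temp >= temp_threshold and humidity <= humidity_threshold


def detailed_request(node_id):
    """Topic and payload an end client would publish to ask a node for a photo."""
    topic = f"nodes/{node_id}/detailed_request"
    # The node's on_message only sets a flag, so this payload is never inspected
    payload = json.dumps({"request": "camera"})
    return topic, payload


# Only after the user confirms, a connected paho client would send the request, e.g.:
#   client.publish(*detailed_request(node_id), qos=1)
```

Keeping the decision with the user matches the post's assumption; the heuristic only decides what to highlight.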

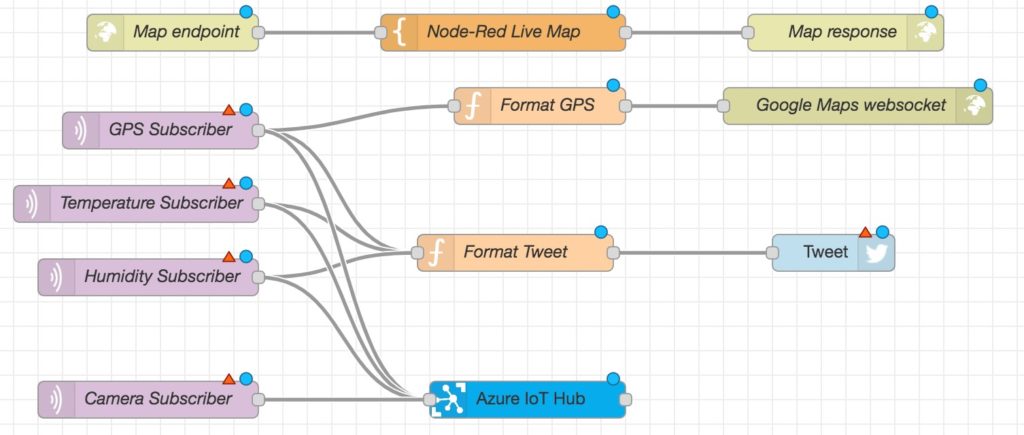

Finally, we used Node-RED to connect the Twitter, Azure, and Google Maps endpoints.

Fig. 4: Picture of the Node-RED flow

The following screenshot shows part of the system in action, as it publishes messages to Twitter when the temperature exceeds an arbitrary threshold:

Fig. 5: Image of Tweets
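In the project this filtering lives in the Node-RED flow. As a rough illustration only, the same threshold logic can be expressed in Python against the measurement payloads the Pi publishes on `nodes/<node_ID>/temp/measure`. The threshold value and the message wording are hypothetical, since the post only says the threshold was arbitrary.

```python
import json

TEMP_THRESHOLD = 40.0  # hypothetical value; the post only says the threshold was arbitrary


def tweet_for_measurement(payload, threshold=TEMP_THRESHOLD):
    """Return alert text if a temperature measurement exceeds the threshold, else None.

    `payload` is the JSON string published on nodes/<node_ID>/temp/measure.
    """
    msg = json.loads(payload)
    temp = msg.get("temp")
    if temp is None or temp <= threshold:
        return None  # not a temperature reading, or nothing to report
    return f"Warning: node {msg['node']} reported temperature {temp:.1f} at {msg['timestamp']}"
```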

Extension: Smart Public Camera

After the makeathon, we decided that this system could be greatly improved by leveraging the AI capabilities on Azure. This would spare end users from having to monitor the sensor feed constantly. It would also avoid running inference on the edge device, since many edge devices (unlike the Raspberry Pi) have limited memory.

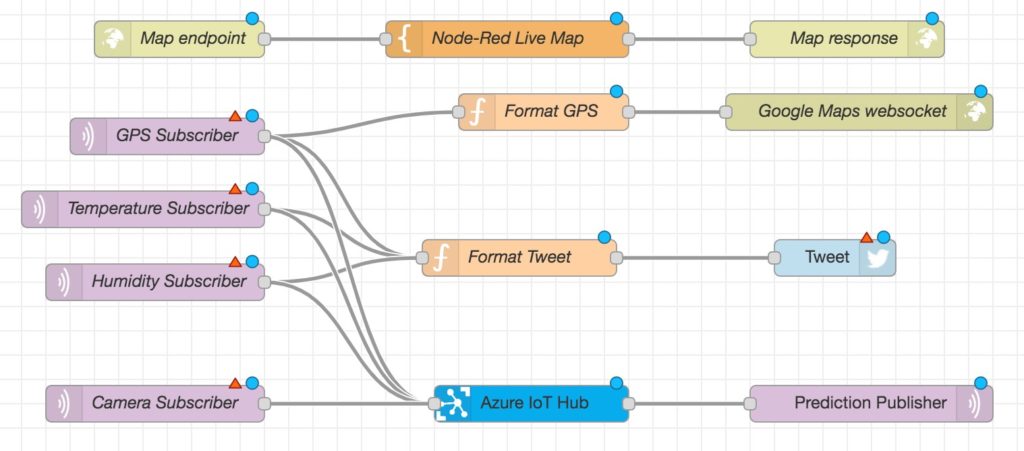

To achieve this, we set up a pipeline on Azure ML in which a Stream Analytics job consumes the messages from IoT Hub, passes them through a predictive model, and feeds the results back to IoT Hub. From there, the prediction based on the sensor data was published to a new topic called ‘/prediction’, as shown below. Other clients could then subscribe to this prediction.
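The Azure pipeline is configured in Azure rather than written as application code, but the consume, predict, republish pattern it implements can be sketched in plain Python. In this sketch `stub_model` is a hypothetical placeholder for the hosted model, and the prediction payload format is an assumption; only the ‘/prediction’ topic name comes from the post.

```python
import json
from typing import Callable, Tuple

PREDICTION_TOPIC = "/prediction"  # topic name used in the post


def to_prediction(measurement_payload: str, model: Callable[[dict], float]) -> Tuple[str, str]:
    """Consume one measurement message, score it, and build the prediction message."""
    reading = json.loads(measurement_payload)
    score = model(reading)  # stand-in for the hosted model's output
    out = {"node": reading["node"], "timestamp": reading["timestamp"], "prediction": score}
    return PREDICTION_TOPIC, json.dumps(out)


def stub_model(reading: dict) -> float:
    """Hypothetical placeholder model: 1.0 when a temperature reading is high."""
    return 1.0 if reading.get("temp", 0.0) > 40.0 else 0.0


topic, message = to_prediction('{"node": 1, "timestamp": 5, "temp": 50.0}', stub_model)
```

Carrying the node ID and timestamp through lets a subscriber to ‘/prediction’ match each prediction back to the reading that produced it.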

Fig. 6: Picture of the modified Node-RED flow

Unfortunately, this approach didn’t carry over to our original application, since we found no disaster-prediction AI models that could make use of our limited sensor data.

The alternative application we considered was civil emergencies, such as using cameras installed around a city to identify people who need help. Since we had a RaspiCam, we imagined it could be installed the same way and used to detect an SOS gesture. After finding a pre-built gesture detector on Azure, we fed it images of hand gestures taken with the RaspiCam and had the AI model predict whether the hand was raised.

The video below shows the model’s prediction, a bounding box drawn when the gesture is detected, superimposed on the camera feed. Incidentally, the choppiness (low frame rate) is due to a combination of the slow inference rate and the rate of MQTT publications.
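A rough, hypothetical model of why both rates matter: if publishing a frame and running inference on it happen one after the other, each displayed frame costs the sum of the two delays; if they overlap (a pipeline), the slower stage sets the pace. The timings in the example are illustrative, not measurements from the project.

```python
def effective_fps(publish_interval_s, inference_s, pipelined=False):
    """Upper bound on displayed frames per second.

    Sequential: each frame waits for the publish interval plus inference.
    Pipelined: the stages overlap, so the slower one is the bottleneck.
    """
    per_frame = max(publish_interval_s, inference_s) if pipelined else publish_interval_s + inference_s
    return 1.0 / per_frame


# Illustrative timings: one frame published every 0.5 s, 1.5 s of inference per frame
print(effective_fps(0.5, 1.5))                  # 0.5 frames per second
print(effective_fps(0.5, 1.5, pipelined=True))  # about 0.67 frames per second
```

Either way, speeding up only the MQTT publications cannot raise the frame rate past the inference limit.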

What We Learned

For many of us, this was our first IoT project, so it was fun to see how easily a physical device could be connected to the cloud and web services we were already familiar with. My team and I discovered how easy to use and versatile Solace’s PubSub+ Platform is for this kind of application. The starter examples in Python and Node.js helped us get up and running, and the Web Messaging demo (‘Try Me!’) was particularly useful for testing out the API. We also learned a lot about event-driven architecture from talking with Solace employees, who were very helpful. For our next project, we hope to try other Solace messaging platforms!

Check It Out Here

For more information, you can find our project on GitHub.

Alok Deshpande is a recent graduate from the University of Toronto in Electrical Engineering. His interests lie in embedded development and machine learning for IoT applications.