In the previous article (Event Driven Integration: Why now is the time to event enable your integration) I introduced how changes in data volumes, connectivity levels, consumption models and customer expectations are changing the way that we need to architect the flow of information. I explained how an event-driven approach can help address these external factors. In this article, I will dive deeper into what event-driven integration is, and what the benefits of adopting this approach are.

What is Event-Driven Integration?

Event-driven integration can be defined as a way of integrating systems that empowers components to share real-time business moments as asynchronous events using the principles of event-driven architecture (EDA). You can think of EDA as a blueprint, and event-driven integration as the way of implementing that blueprint. It combines technologies, design methodologies and best practices to produce a resilient and scalable implementation.

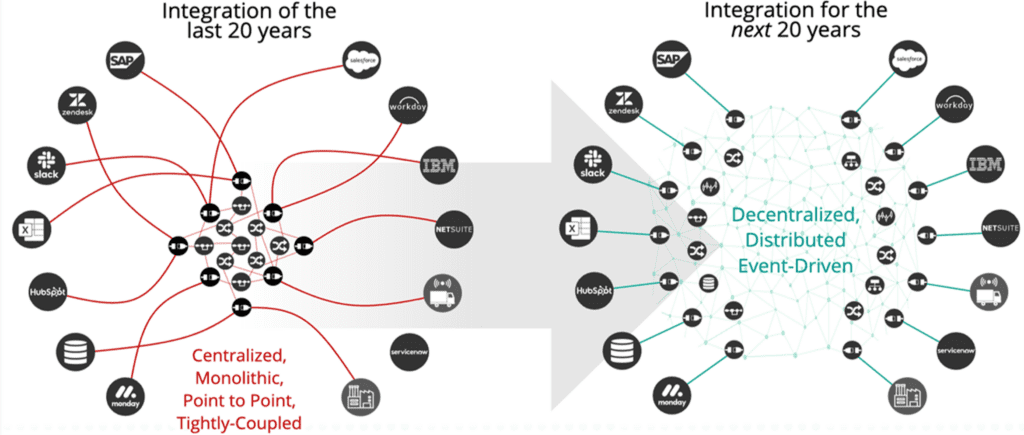

Traditional forms of integration such as ESB and iPaaS put integration components at the center, which has some downsides:

- Integration components are coupled into one deployable artefact, leading to scalability and agility challenges.

- Connectivity to remote data sources increases the risk of higher latency and network failures.

Event-driven integration looks at integration infrastructure with two different “lenses”:

- Core: the part of the system that focuses on facilitating data/event movement.

- Edge: the part of the system that handles event processing, e.g. connectors, transformers, content-based routers etc.

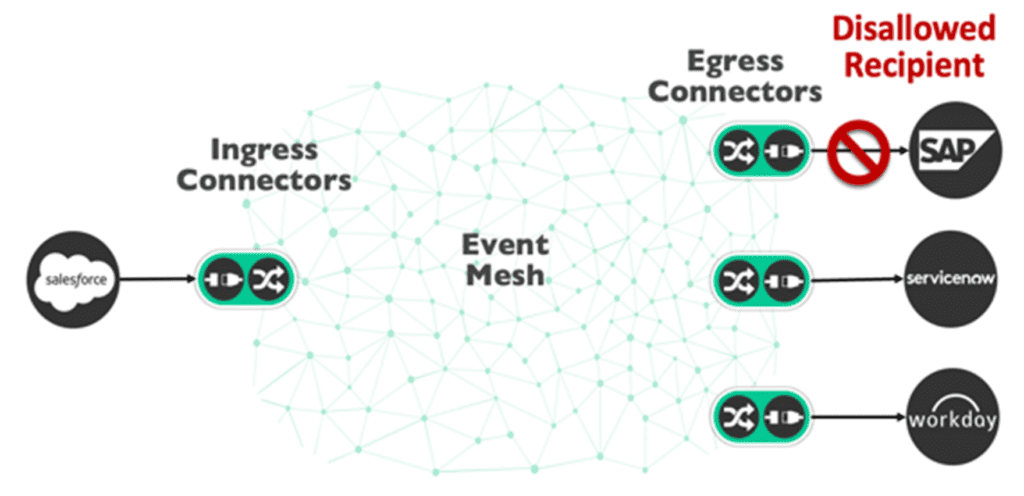

In essence, event-driven integration turns integration “inside out” by pushing complex integration processes to the edge while leaving the core free to move data across environments (cloud, on-prem) and geographies, as shown here:

This approach is focused on maximizing the flow of events and information while improving your system’s scalability, agility, and adaptability.

Event-Driven Integration’s “Core” Effect

In the previous piece, I indicated that developers usually opt for a more tightly coupled approach to integration because REST is the approach they’re most familiar with. This approach is basically a risk mitigation strategy: it deploys fewer assets, which eases capacity allocation concerns, and it reduces the external “network chatter” caused by unreliable protocols (e.g. HTTP) and networking infrastructure.

An event platform can mitigate some of the infrastructure risks by managing traffic more effectively. However, even with a reliable event infrastructure in place, if you follow the core event-driven integration tenet of moving complexity to the edge, which edge do you move it close to: the data source or the destination?

It’s better to decompose the complex integrations in the core into micro-integrations that you deploy and distribute at the edge. This way you can have micro-integrations close to both the source and destination of the data. This reduces network and connectivity risks and lets you deploy and reuse logic/processing components for many integration scenarios.

A well-designed event platform can scale from a single event broker to an event mesh containing multiple connected event brokers (I’ll dive into event mesh in the next chapter…stay tuned!) and can support a variety of event exchange patterns such as publish-subscribe, peer-to-peer, streaming, and request-reply.
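As a rough illustration of the publish-subscribe pattern mentioned above, here is a minimal sketch of a topic-based broker in Python. `ToyBroker`, `topic_matches`, the `/`-separated topic levels and the `*` and `>` wildcards are all assumptions made for this example; they are not the API of any particular event platform.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Handler = Callable[[str, dict], None]


def topic_matches(pattern: str, topic: str) -> bool:
    """Assumed wildcard rules: '*' matches exactly one level;
    '>' as the last level matches one or more remaining levels."""
    p_levels = pattern.split("/")
    t_levels = topic.split("/")
    for i, p in enumerate(p_levels):
        if p == ">" and i == len(p_levels) - 1:
            return len(t_levels) > i
        if i >= len(t_levels):
            return False
        if p != "*" and p != t_levels[i]:
            return False
    return len(p_levels) == len(t_levels)


class ToyBroker:
    """Hypothetical in-memory stand-in for a single event broker."""

    def __init__(self) -> None:
        self._subscriptions: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, pattern: str, handler: Handler) -> None:
        self._subscriptions[pattern].append(handler)

    def publish(self, topic: str, payload: dict) -> int:
        """Deliver to every matching subscription; return the delivery count."""
        delivered = 0
        for pattern, handlers in self._subscriptions.items():
            if topic_matches(pattern, topic):
                for handler in handlers:
                    handler(topic, payload)
                    delivered += 1
        return delivered


broker = ToyBroker()
seen = []
broker.subscribe("orders/*/created", lambda t, p: seen.append(("billing", t)))
broker.subscribe("orders/>", lambda t, p: seen.append(("audit", t)))
broker.publish("orders/eu/created", {"order_id": 1})
broker.publish("orders/eu/cancelled", {"order_id": 1})
```

The publisher only names a topic and never references its subscribers, which is the decoupling the rest of this section relies on.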

By decoupling assets, the event platform:

- Enables individual edge component scaling.

- Prevents errors in one component from impacting other components.

- Allows for individual evolution/change of components without impacting others.

The event platform makes an ideal “core” for integrating distributed systems as it enables the entire system to scale better and have a higher degree of agility, flexibility and resilience.

Edge Components

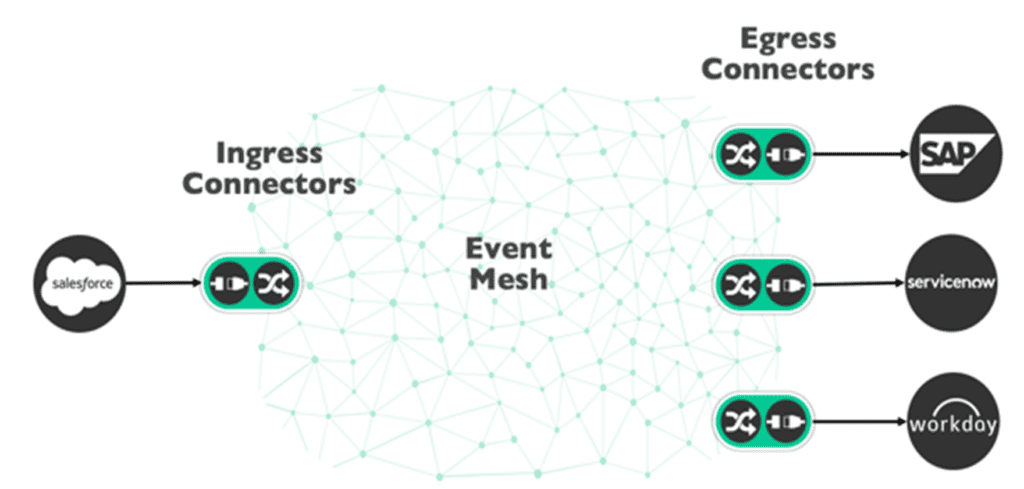

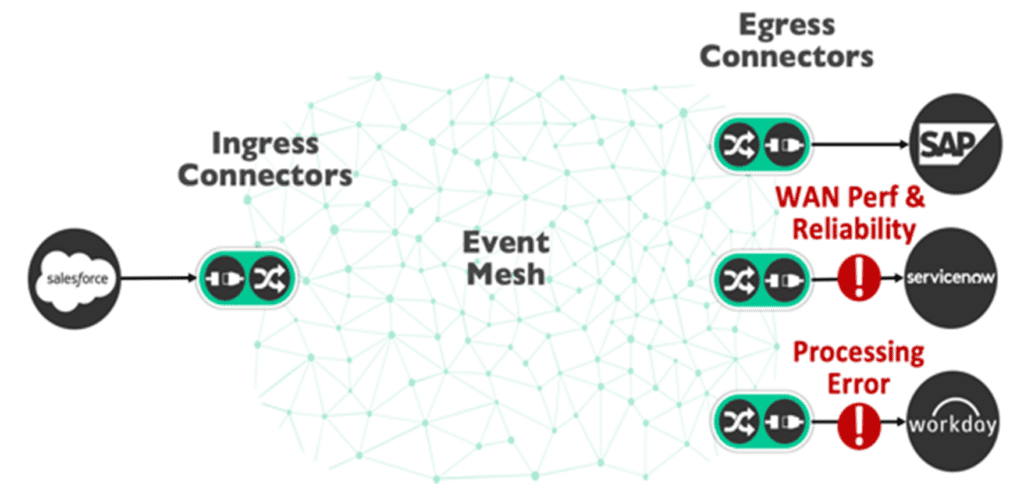

While the event distribution layer at the core gives event-driven integration the ability to streamline event flows, the edge components are what enable connectivity and event processing.

Connectors and event processors can be combined to create what many call “micro-integrations.” Think of micro-integrations as a type of microservice that is focused on changing data in motion. The type of change will be dictated by the business function the micro-integration supports and serves. Edge components perform the following functions:

- Ingress connectors retrieve data from data sources, transform the data to a domain-specific canonical event model and publish it on the mesh.

- Egress connectors subscribe to events on the mesh, transform them to the destination format, and update downstream data sources.

- Event processors include transformers, content-based routers and schema/content validators, and may contain any type of business logic.
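The three roles above can be sketched as a tiny micro-integration. This is an illustrative sketch only: the `Mesh` class, the `OrderEvent` canonical model, the topic names and the source field names (`ORD_NO`, `TOTAL`, `CCY`) are hypothetical, and delivery is synchronous and in-memory rather than going through a real event mesh.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class OrderEvent:
    """Hypothetical canonical event model for an orders domain."""
    order_id: str
    amount_cents: int
    currency: str


class Mesh:
    """Toy stand-in for the event mesh: exact-match topics, synchronous delivery."""

    def __init__(self) -> None:
        self._subs: Dict[str, List[Callable[[OrderEvent], None]]] = {}

    def subscribe(self, topic: str, handler: Callable[[OrderEvent], None]) -> None:
        self._subs.setdefault(topic, []).append(handler)

    def publish(self, topic: str, event: OrderEvent) -> None:
        for handler in self._subs.get(topic, []):
            handler(event)


def ingress_connector(mesh: Mesh, source_row: dict) -> None:
    """Ingress: source format -> canonical event -> publish on the mesh."""
    event = OrderEvent(
        order_id=str(source_row["ORD_NO"]),
        amount_cents=round(float(source_row["TOTAL"]) * 100),
        currency=source_row.get("CCY", "USD"),
    )
    mesh.publish("orders/received", event)


def validating_router(mesh: Mesh) -> Callable[[OrderEvent], None]:
    """Event processor: validate the event, then route it by content."""
    def handle(event: OrderEvent) -> None:
        if event.amount_cents <= 0:
            mesh.publish("orders/rejected", event)
        elif event.amount_cents >= 100_000:
            mesh.publish("orders/high-value", event)
        else:
            mesh.publish("orders/standard", event)
    return handle


def egress_connector(destination: list) -> Callable[[OrderEvent], None]:
    """Egress: canonical event -> destination format -> update the target."""
    def handle(event: OrderEvent) -> None:
        destination.append({
            "id": event.order_id,
            "total": f"{event.amount_cents / 100:.2f} {event.currency}",
        })
    return handle


mesh = Mesh()
warehouse, manual_review = [], []
mesh.subscribe("orders/received", validating_router(mesh))
mesh.subscribe("orders/standard", egress_connector(warehouse))
mesh.subscribe("orders/high-value", egress_connector(manual_review))

ingress_connector(mesh, {"ORD_NO": 17, "TOTAL": "19.99", "CCY": "EUR"})
ingress_connector(mesh, {"ORD_NO": 18, "TOTAL": "2500"})
```

Each function only knows the canonical event and a topic name, so any one of them could be replaced or redeployed closer to its data source without touching the others.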

Placing connectors (both ingress and egress) close to the data sources reduces connectivity and data access risks; this improves reliability, consistency and performance, and makes network configuration easier. Micro-integrations can be built using open-source frameworks or using off-the-shelf components provided by many iPaaS/Integration vendors like Boomi and MuleSoft.

In summary, one of the greatest features of moving integration to the edge is that you can combine any type of edge component to deliver on a particular business functional requirement. This flexibility ensures that you can focus on the success of the integration architecture as a whole, and not be hampered by a particular technology or platform.

Technical Benefits

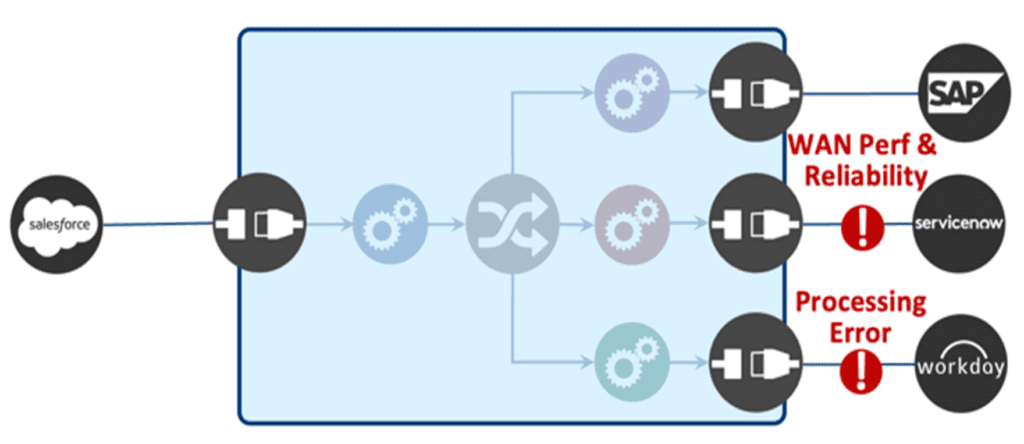

Traditional integration architecture looks something like this:

Traditional integrations usually poll for data via REST connectors, use a hard-coded set of mappings and transformations to determine routing, and use REST connectors to forward messages to recipients.

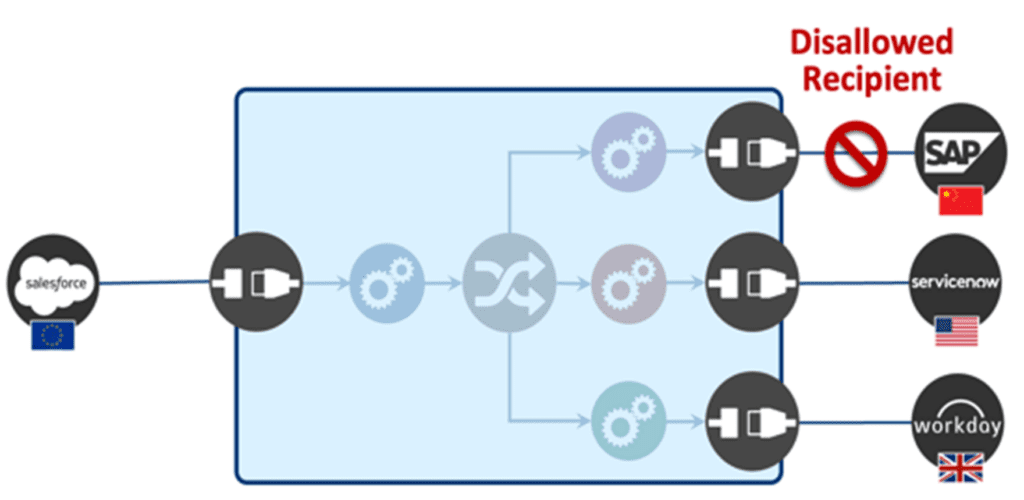

This approach usually leads to brittle connections between components in different clouds or geographies, and forces individual components to enforce ever-changing data privacy regulations with minimal knowledge.

By contrast, event-driven integration decouples all components, is fully event-driven, and leverages all the capabilities of an event mesh to enable better performance, scalability and efficiency. Many of the issues that event-driven integration solves are related to the high degree of coupling that traditional architectures engender.

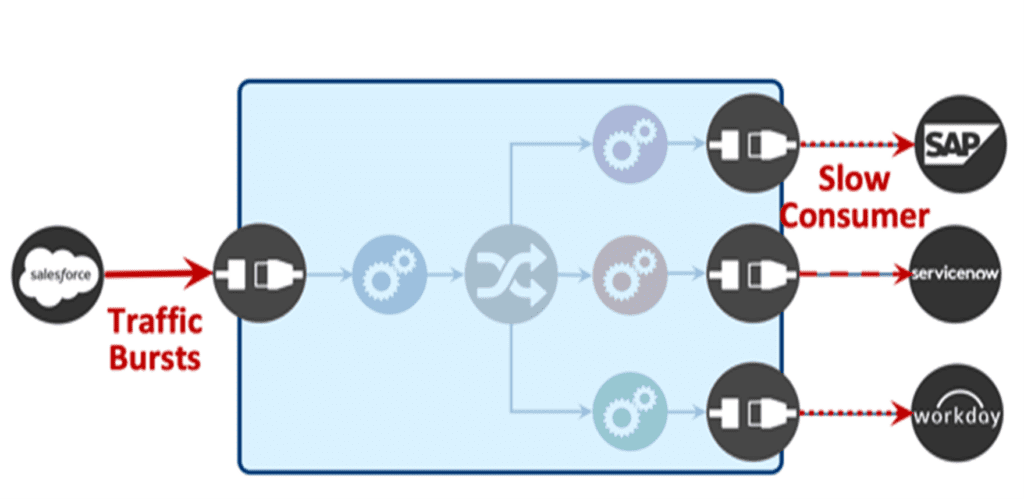

Shock Absorption

Speed mismatch is another term for the fast-producer, slow-consumer problem. Without shock absorption, consuming systems can be overwhelmed, and that can lead to dire consequences (e.g. lost orders, poor customer experience, etc.).

With event-driven integration, the event platform is able to buffer an event stream until subscribers are able to consume events at their own pace. The buffering (or shock absorption) is essential for guaranteeing the stability and resiliency of the architecture.
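The buffering behavior can be illustrated with a small, deterministic simulation. This is a sketch under simplifying assumptions: time advances in discrete ticks, the buffer is an unbounded in-memory `deque`, and the `simulate` function and its parameters are hypothetical names chosen for this example.

```python
from collections import deque
from typing import List, Tuple


def simulate(arrivals_per_tick: List[int], consumer_rate: int) -> Tuple[List[int], List[int]]:
    """Buffer bursty arrivals and let a slower consumer drain them.

    Returns the event ids in the order they were consumed and the
    backlog size at the end of every tick.
    """
    if consumer_rate <= 0:
        raise ValueError("consumer_rate must be positive")

    buffer = deque()
    consumed: List[int] = []
    backlog_history: List[int] = []
    next_id = 0
    tick = 0

    # Keep ticking until all arrivals are in and the buffer is drained.
    while tick < len(arrivals_per_tick) or buffer:
        arrivals = arrivals_per_tick[tick] if tick < len(arrivals_per_tick) else 0
        for _ in range(arrivals):
            buffer.append(next_id)  # producer publishes at its own speed
            next_id += 1
        for _ in range(min(consumer_rate, len(buffer))):
            consumed.append(buffer.popleft())  # consumer drains at its own pace
        backlog_history.append(len(buffer))
        tick += 1

    return consumed, backlog_history


# A burst of 10 events arrives in one tick; the consumer handles 3 per tick.
consumed, backlog = simulate([10, 0, 0, 0], consumer_rate=3)
```

In this run the backlog peaks right after the burst and then shrinks to zero, and every event is consumed in its original order. A production event platform would also need to bound or persist the buffer, which this sketch leaves out.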

Handling Lack of Availability

In a distributed architecture, not all components are available for processing at the same time, all the time. Event loss is also undesirable, as it can lead to many issues such as lost orders, missed cancellations, incomplete transactions, and an inaccurate understanding of inventory levels or the location of a delivery truck. This makes it imperative that event-driven integration guarantees event delivery even when consumers are temporarily unavailable.

The event platform can store events in the sequence of their emission and deliver/forward them to consumers when those consumers become available. This ensures that operations continue even when certain components fail or are unable to receive new messages because they’re disconnected or backed up.
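A minimal sketch of this store-and-forward behavior follows. `DurableQueue` is a hypothetical class written for this example; it keeps events in memory only, whereas a real event platform would persist them so they also survive a broker restart.

```python
from collections import deque
from typing import Callable, Deque, List, Optional


class DurableQueue:
    """Holds events in emission order until a consumer is connected."""

    def __init__(self) -> None:
        self._pending: Deque[str] = deque()
        self._consumer: Optional[Callable[[str], None]] = None

    @property
    def backlog(self) -> int:
        return len(self._pending)

    def publish(self, event: str) -> None:
        self._pending.append(event)
        self._drain()

    def connect(self, consumer: Callable[[str], None]) -> None:
        self._consumer = consumer
        self._drain()  # deliver everything that queued up while offline

    def disconnect(self) -> None:
        self._consumer = None

    def _drain(self) -> None:
        while self._consumer is not None and self._pending:
            event = self._pending[0]
            self._consumer(event)    # if this raises, the event stays queued
            self._pending.popleft()  # remove only after successful delivery


queue = DurableQueue()
received: List[str] = []

queue.publish("order-1")          # consumer is offline, event is stored
queue.publish("order-2")
queue.connect(received.append)    # backlog is delivered in order
queue.disconnect()
queue.publish("order-3")          # stored again while offline
```

Removing an event only after the consumer call returns is a simple stand-in for an acknowledgement: a consumer that fails mid-delivery sees the same event again on the next attempt.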

Error Handling

Hardware, network and even processing failures can occur frequently in distributed systems, and it is critical that these failures do not affect the system as a whole. The key is to handle these errors in ways that minimize the mean time to recovery (MTTR) and minimize disruption, while keeping the system as simple as possible.

Errors can typically be placed on an error handling queue, which can in turn be handled by a “repair station” that can address the root cause event, take remedial action (e.g. reinitiate the transaction if needed), and notify personnel about the cause and impact of the error.

Many distributed systems falter in the handling of distributed errors because of their inability to scale well and cover dispersed geographies uniformly. An event platform can make error handling much easier by addressing these issues.
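The error queue and repair station idea can be sketched as follows. All names here (`FailedEvent`, `process_with_error_queue`, `repair_station`, `charge`, `coerce_amount`) and the retry limit are hypothetical, chosen only to show the flow: failures are parked instead of stopping the stream, a repair step is attempted, and anything that still fails is escalated for human attention.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Deque, List, Tuple


@dataclass
class FailedEvent:
    event: dict
    error: str
    attempts: int


def process_with_error_queue(events: List[dict], handler: Callable[[dict], str],
                             error_queue: Deque[FailedEvent]) -> List[str]:
    """Process events; park failures on the error queue and keep going."""
    processed = []
    for event in events:
        try:
            processed.append(handler(event))
        except Exception as exc:
            error_queue.append(FailedEvent(event, str(exc), attempts=1))
    return processed


def repair_station(error_queue: Deque[FailedEvent], handler: Callable[[dict], str],
                   repair: Callable[[dict], dict],
                   max_attempts: int = 3) -> Tuple[List[str], List[FailedEvent]]:
    """Repair and retry failed events; escalate after max_attempts."""
    recovered, escalated = [], []
    while error_queue:
        failed = error_queue.popleft()
        try:
            recovered.append(handler(repair(failed.event)))
        except Exception as exc:
            failed.attempts += 1
            failed.error = str(exc)
            if failed.attempts >= max_attempts:
                escalated.append(failed)      # would trigger a notification
            else:
                error_queue.append(failed)    # try again later
    return recovered, escalated


def charge(event: dict) -> str:
    amount = event["amount"]
    if not isinstance(amount, int) or amount <= 0:
        raise ValueError(f"invalid amount: {amount!r}")
    return f"charged {event['order_id']} {amount}"


def coerce_amount(event: dict) -> dict:
    """Remedial action: amounts that arrived as numeric strings become ints."""
    return {**event, "amount": int(event["amount"])}


errors: Deque[FailedEvent] = deque()
incoming = [
    {"order_id": "A", "amount": 10},
    {"order_id": "B", "amount": "25"},   # repairable
    {"order_id": "C", "amount": "abc"},  # not repairable, gets escalated
]
processed = process_with_error_queue(incoming, charge, errors)
recovered, escalated = repair_station(errors, charge, coerce_amount)
```

The healthy event is processed immediately even though two others failed, which mirrors the goal of keeping individual failures from affecting the system as a whole.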

Evolution of Event-Driven Integration

Arriving at an optimal event-driven integration implementation does not happen overnight. It’s an evolutionary process that takes time and is measured in incremental milestones. The evolution impacts both the business and the technology landscapes.

From a business perspective, one of the first stages of successful event-driven integration is the rapid improvement of key user/customer experiences. Those experiences are further enhanced by the aggregation of other pieces of information that are being extracted from across various information silos. Access to a diverse set of information streams enables the creation of innovative new products and services that improve user experience and potentially help the business create new revenue streams by tapping into new markets.

From a technology perspective, one of the first benefits event-driven integration brings to fruition is the decoupling of components and the ability to serve as a “shock absorber” that gracefully deals with unexpected bursts of traffic. Another key benefit is scaling the system across multiple geographies and clouds. Once the core foundation is in place, you can implement various patterns of integration and publicize them to drive reuse and automation across your enterprise.

Business Impact and Benefits

Here are a few areas in which you can expect to benefit from event-driven integration.

Improved User Experience

Customer experience is a critical part of any business strategy because it leads to loyalty, a larger customer wallet share, and longer-term retention.

Achieving a great customer experience, however, is no mean feat, and there are many business and technical challenges encountered along the way.

A negative customer experience could be driven by online transactions taking too long to complete, lost or misdirected orders, or an e-commerce site slowing down or even crashing due to too much traffic.

There are many technical factors that could lead to a negative customer experience. For instance, traffic bursts could degrade performance and lead to instability. Slow or unavailable components could impact the operation of other connected components. Even adding new components to an existing data flow could lead to downtime and introduce unexpected errors.

These issues are experienced on all traditional integration platforms (iPaaS, ESB etc.).

Event-driven integration addresses these issues in a few ways:

- Being able to absorb incoming bursts of traffic would allow components to consume events at their own speed, guarantee event delivery, increase stability, and eliminate the need for either rate limiting or over-provisioning.

- Having the components decoupled from one another and connected to the core event platform would also make it easy to scale each component individually and make it easier and less risky to add new components, by removing the need to change the existing infrastructure.

From a business perspective, the adoption of event-driven integration would help ensure that users get consistent real-time information, and that they would not experience downtimes/outages or duplicate/lost orders.

From a technical perspective, a higher degree of decoupling would increase the rate of component reuse, eliminate the need for point-to-point connections, and drive a higher overall degree of reliability, stability and resiliency.

Information Consolidation and Availability

Having the right information at the right time and in the right context is the key to making the right decision and taking the correct action. The reality, however, is that in modern enterprises, information is dispersed across multiple systems.

From a business perspective, this can lead to business divisions in different geographies or clouds having out-of-sync, stale, or inconsistent data. It could also lead to embarrassing and costly data privacy violations, and even to customers being unable to get a single view of their own data and digital assets.

The technical causes for these experiences could be brittle connections between components in different clouds or geographies, routing logic being hard-coded into applications (or an iPaaS solution), or individual components trying to enforce ever-changing data privacy regulations with minimal external visibility.

To solve this problem of data silos, you need to create a unifying fabric across all systems and geographies. With event-driven integration, you could scale out the event platform by adding more geographically distributed event brokers and linking them to form an event mesh. Integration applications would then be able to reside at the edge, near the data sources, and connect each source or destination to the event mesh. Lastly, to guarantee data security, you could manage access control at the event path level.

One of the key benefits of adopting an event-driven strategy is the ability to aggregate event streams from multiple sources into a unified “truth stream”. Another such benefit is the ability to enforce data privacy rules at the infrastructure level by controlling who can publish where and who can consume what data. This would all culminate in the ability to automatically route business “moments” to interested components, no matter where they are.
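To make "who can publish where and who can consume what" concrete, here is a minimal sketch of topic-level access control. The `TopicAcl` class, the client names, the topic layout and the single-level `*` wildcard are assumptions for illustration; real event platforms define their own ACL models and syntax.

```python
from dataclasses import dataclass, field
from typing import Dict, List


def matches(pattern: str, topic: str) -> bool:
    """Assumed rule: same number of levels, '*' matches any single level."""
    p_levels, t_levels = pattern.split("/"), topic.split("/")
    return len(p_levels) == len(t_levels) and all(
        p == "*" or p == t for p, t in zip(p_levels, t_levels)
    )


@dataclass
class TopicAcl:
    """Default-deny access rules keyed by client name."""
    publish_rules: Dict[str, List[str]] = field(default_factory=dict)
    subscribe_rules: Dict[str, List[str]] = field(default_factory=dict)

    def can_publish(self, client: str, topic: str) -> bool:
        return any(matches(p, topic) for p in self.publish_rules.get(client, []))

    def can_subscribe(self, client: str, topic: str) -> bool:
        return any(matches(p, topic) for p in self.subscribe_rules.get(client, []))


acl = TopicAcl(
    publish_rules={"eu-order-service": ["orders/eu/*"]},
    subscribe_rules={
        "eu-billing": ["orders/eu/*"],
        "us-analytics": ["orders/us/*"],  # may not see EU order events
    },
)
```

Because the rules live in the infrastructure rather than in each application, a change in privacy requirements becomes a rule change in one place instead of a code change in every component.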

Faster Innovation

Businesses are always looking for new ways to drive revenue and improve profit margins, and the key to growth is innovation. Innovation can take many forms, ranging from offering service/product bundles at different price points to creating brand new digital product lines. Not innovating fast enough can lead to unsatisfied and impatient customers waiting for new and improved experiences.

An inflexible and coupled integration architecture can hamper innovation by requiring longer development times and higher costs to deliver new solutions; in addition, adding new features often breaks existing ones due to coupling.

Some of the technical causes that hamper agility stem from an inability to consume real-time data for more accurate decision making. That is typically because the system either has not been designed to support events or, if it has, the event sources often provide only siloed information and thus do not offer a complete view of a given situation.

Another reason could be inconsistent use of design patterns and coding standards. For example, payload schemas may not be reused across REST applications and event-driven applications, which limits asset reuse and architectural synergy. Lastly, the tooling and infrastructure provisioning processes could be inadequate and fail to support a streamlined digital asset management lifecycle.

Event-driven integration can improve innovation in a variety of ways. For instance, a decoupled architecture localizes the impact of changes so other components aren’t affected.

The use of a scalable event platform reduces the need for extensive scaling configuration and creates a seamless plug-and-play environment for both producers and consumers of information. It also makes it easy to access real-time data, facilitating a better user experience and more accurate decision making. Lastly, having a digital asset catalog that provides access to event definitions and event APIs facilitates discovery and reuse.

With these items in place, enterprises can accelerate innovation and better adapt to market shifts and demands by more quickly launching new products, creating new revenue channels, and breaking into new markets.

Conclusion

Event-driven integration presents a different way of building distributed systems. Its approach of turning traditional integration “inside-out” (i.e. putting complex integration processes at the edge while keeping the core focused on event and data movement) makes your system faster and more scalable, flexible, and resilient.

Because event-driven integration takes the traditionally larger integration components and transforms them into simpler “micro-integrations,” it makes the system more adaptable and better able to accommodate change.

There are both business and technical benefits to the approach put forth by event-driven integration, benefits that translate into easier ways to drive new sources of revenue as well as ways to reduce costs. Event-driven integration is a big milestone in the evolution of integration, a milestone that points the way to a path where technology can keep pace with both market demands and technology evolution.

Bruno has over 25 years of experience in IT, in a wide variety of roles (developer, architect, product manager, director of IT/Architecture), and is always looking to find ways to stimulate the creative process.

Bruno often takes unorthodox routes in order to arrive at the optimal solution/design. By bringing together diverse domain knowledge and expertise from different disciplines he always tries to look at things from multiple angles and follow a philosophy of making design a way of life. He has managed geographically distributed development and field teams, and instituted collaboration and knowledge sharing as a core tenet. He's always fostered a culture founded firmly on principles of responsibility and creativity, thereby engendering a process of continuous growth and innovation.
