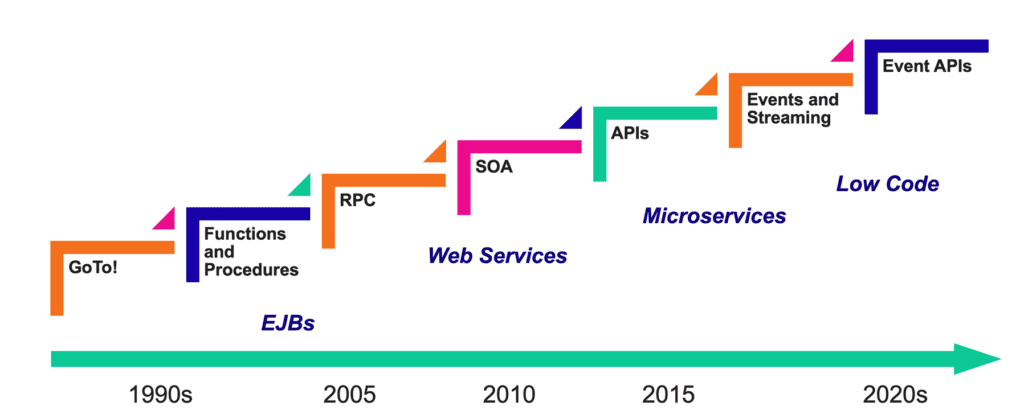

A History of Services and APIs

Enterprise application landscapes consist of interconnected and interrelated applications, and the way those applications are integrated has evolved steadily over the past few decades. Over time, we’ve observed a trend towards more modular, data model-focused integration components and an increased emphasis on real-time processing. From EJBs in the 1990s, through web services and service-oriented architecture (SOA) in the early 2000s, to the domain-specific microservices of today, each step has moved towards more efficient and effective integration solutions.

Real-time Updates and Events

As businesses move closer to consumers and the real world, the connections and interactions between their applications become increasingly complex, leading to issues such as component unavailability, scaling difficulties, and tight coupling.

In addition to API implementation and maintenance challenges, businesses face another issue: responding to and adapting to changes in their operating environment. With the rapid advancement of technology and widespread Internet access, consumers now expect quick responses from enterprises. These changes in business, real-life, and technology systems can be seen as events.

An event is essentially a significant occurrence that triggers processing within a system. Events can be classified into:

- Business events: Represent business transactions or changes in business state.

- System events: Represent system-level occurrences, such as the completion of a task.

- Technical events: Represent lower-level technical occurrences, such as a message being sent or received.

While these events arise organically, they must be captured, interpreted, and processed in a well-designed, organized way so that they are put to efficient use.
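The classification above can be sketched in code. This is a minimal illustration, assuming hypothetical names (`EventCategory`, `Event`, `group_by_category`) and made-up sample events; it is not taken from any particular product.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum


class EventCategory(Enum):
    """The three categories described in the text."""
    BUSINESS = "business"
    SYSTEM = "system"
    TECHNICAL = "technical"


@dataclass(frozen=True)
class Event:
    """Hypothetical event record: a name, a category, and a payload."""
    name: str
    category: EventCategory
    payload: dict
    occurred_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )


def group_by_category(events):
    """Group captured events by category so each kind can be handled separately."""
    grouped = {category: [] for category in EventCategory}
    for event in events:
        grouped[event.category].append(event)
    return grouped


# Illustrative events only; the names and payloads are assumptions.
captured = [
    Event("order_placed", EventCategory.BUSINESS, {"order_id": "A-1"}),
    Event("nightly_export_completed", EventCategory.SYSTEM, {"job": "export"}),
    Event("message_received", EventCategory.TECHNICAL, {"queue": "inbound"}),
]
grouped = group_by_category(captured)
```

Grouping by category is only one possible first step; the point is that a captured event carries enough structure (name, category, payload, timestamp) to be interpreted later.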

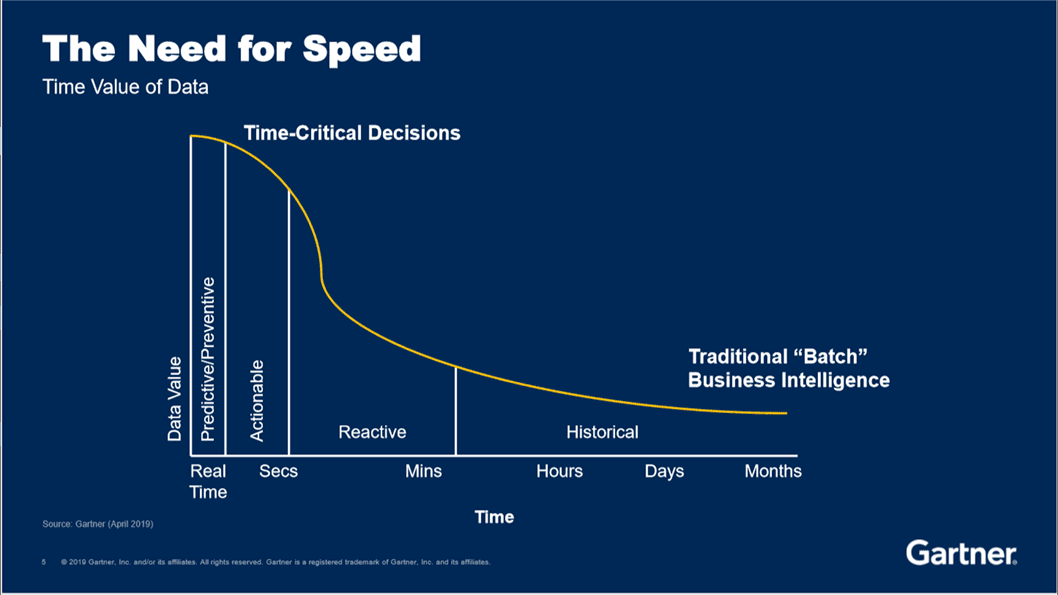

A Gartner study demonstrates that the value from data and events increases with the speed of their usage.

Source: Gartner “Stream Processing: The New Data Processing Paradigm” 9 April 2019, Sumit Pal

Businesses and architects need to process data and events efficiently to maximize their value. This has resulted in the widespread adoption of event-driven architecture across various industries.

Synchronous Microservices: Strengths and Weaknesses

When it comes to modern application architecture, microservices stand out. Most contemporary APIs are implemented as microservices: a collection of loosely coupled services, each providing a business capability. This architecture allows large and complex systems to be delivered and deployed smoothly. Although other API styles such as GraphQL and gRPC exist for building microservices, REST (Representational State Transfer) remains the preferred choice for most developers and architects.

A well-designed microservice should have the following architectural features:

- Facilitate flexible integration with new functionality through loose coupling

- Allow multiple distributions of data elements to improve processing efficiency

- Implement deferred execution to enhance response time, robustness, and performance

- Ensure dependable delivery for robustness in the face of faults and imbalanced speeds.

However, REST-based microservices may not fully meet these expectations and face challenges such as:

- Difficulties in inter-process communication

- Complex and unreliable state in case of failures

- Slow response time and negative user experience due to complicated orchestration

- Limited scalability and resource utilization

- Obstacles in adding new services, leading to a fragmented monolithic architecture

- A one-to-many communication pattern that is difficult to implement and sustain.

Event-Driven APIs to the Rescue

The limitations of synchronous microservices can be overcome through asynchronous interaction, event-driven architecture, and event-enabling traditional microservices. Together, these approaches take advantage of the constant flow of business and technical events by acting on them promptly.

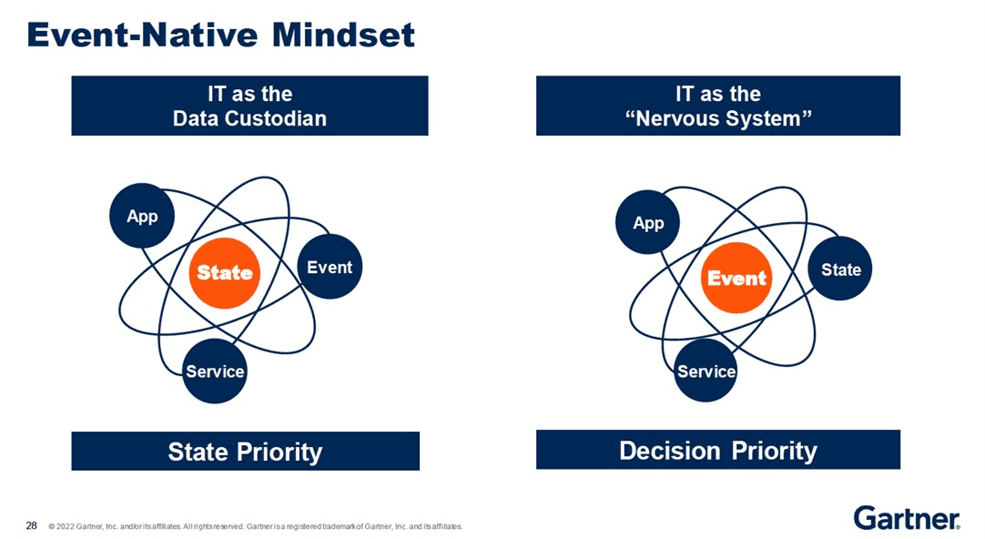

As awareness of the importance of events and event-driven architecture grows, architects and developers are exploring ways to integrate events into microservices. However, successful adoption also requires a change in mindset and approach from business stakeholders, product owners, and architects. This shift involves moving from a data-centric approach to one that uses events to drive business decisions and logic. Event-native adoption is necessary to fully leverage the benefits of events at every stage of the business.

Source: Gartner Symposium Presentation “Strategic Trends in Application Platforms and Architecture”, Yefim Natis, November 2022

Modern APIs are predominantly based on microservices, but events and event-driven architecture are becoming increasingly important. The future of APIs lies in combining the strengths of APIs and event-driven architecture to create event-driven APIs.

This prompts the question: what exactly are event API products? They are similar in concept to regular API products; the distinguishing factor is that event API products deliver real-time data instead of stored data. REST APIs are effective for basic request-reply interactions aimed at a single consumer, whereas event-driven APIs are ideal for disseminating time-sensitive information to multiple recipients.

Event-driven APIs deliver the following benefits:

- Scalability: Event-driven APIs allow systems to scale horizontally, as events can be processed by multiple subscribers in parallel. This makes it easier to handle high volumes of events.

- Real-time data: Event-driven APIs enable real-time data processing, so systems can respond to events as they occur instead of repeatedly polling for updates.

- Decoupled systems: Event-driven APIs promote loose coupling between systems. This means that systems can evolve independently, and changes in one system don’t necessarily impact the others.

- Flexibility: Event-driven APIs allow for more flexible and dynamic data flow between systems, as events can be generated and processed by different systems, at different times, in different ways.
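The one-to-many and decoupling properties listed above can be illustrated with a toy publish/subscribe sketch. `InMemoryBroker` and the topic name `orders/created` are assumptions made for this example; the class is a stand-in for a real event broker, and it delivers synchronously in-process, whereas a real broker would typically deliver asynchronously over the network.

```python
from collections import defaultdict


class InMemoryBroker:
    """Toy stand-in for an event broker (hypothetical, not a product API)."""

    def __init__(self):
        # Maps a topic string to the list of handlers subscribed to it.
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, handler):
        self._subscribers[topic].append(handler)

    def publish(self, topic, event):
        # The publisher does not know who, or how many, are listening.
        for handler in list(self._subscribers.get(topic, [])):
            handler(event)


broker = InMemoryBroker()
shipping_log, billing_log = [], []

# Two independent consumers register interest in the same topic.
broker.subscribe("orders/created", shipping_log.append)
broker.subscribe("orders/created", billing_log.append)

# One publish call reaches every subscriber.
broker.publish("orders/created", {"order_id": "A-1", "total": 42.0})
```

Adding a third consumer here means one more `subscribe` call; the publishing code does not change, which is the decoupling the list describes.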

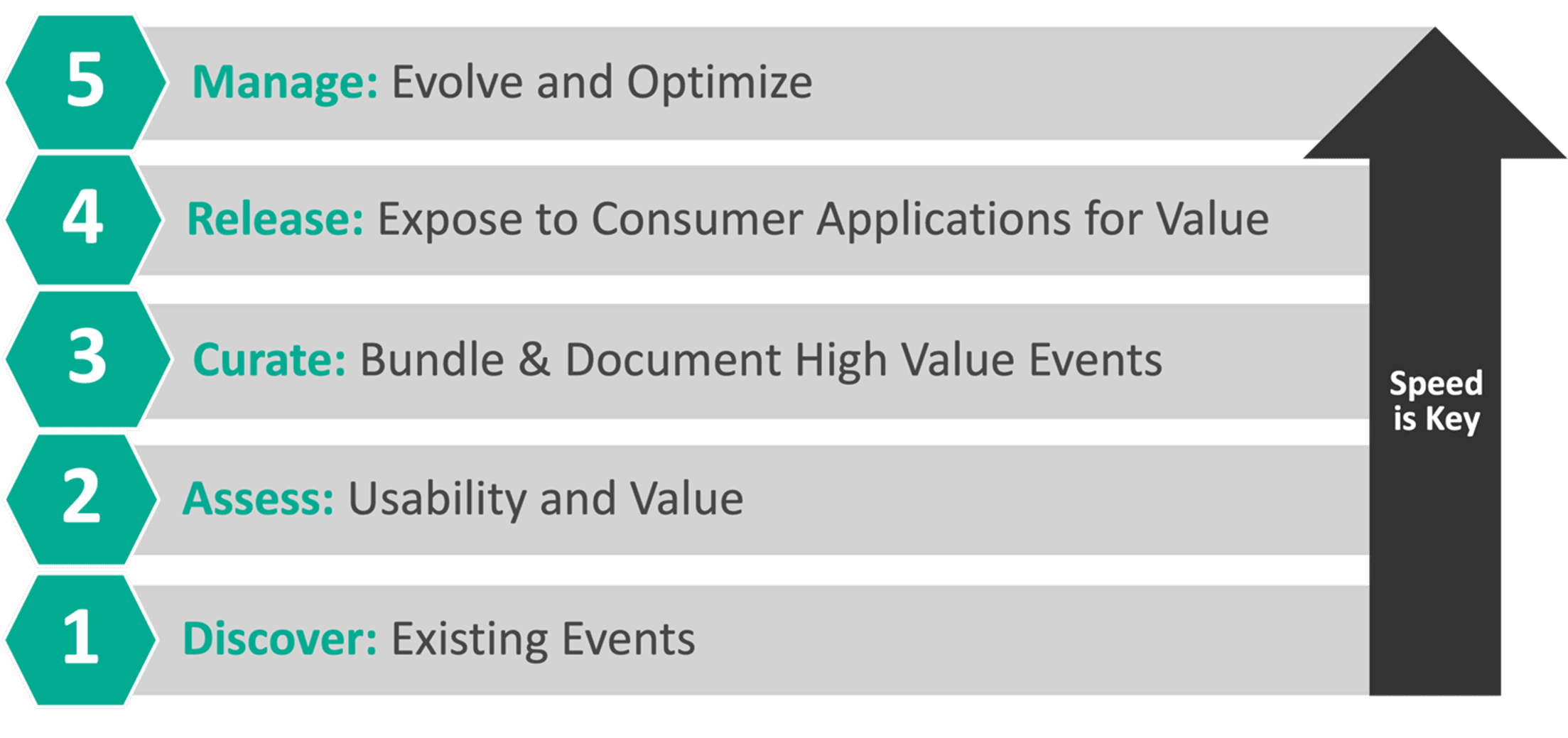

Event-Driven APIs: How to Ideate, Develop and Operate

Having discussed event-driven APIs and their benefits, the next step is to explore how to design them. As with any complex system, a well-planned process is essential to create a digital value chain.

At Solace, we follow a five-step process to achieve this:

1. Discovering Events

To improve a system, it is necessary to understand what currently exists. Thus, the first step is a discovery process to identify and document all relevant events. This includes analyzing the IT landscape, applications, and business processes to uncover:

- Data schemas to understand the transported data

- Topic addresses for accessing and utilizing events

- Application interfaces for producing, consuming, and processing events.
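As a rough sketch of what a discovery step might record, the three items above (schema, topic address, application interfaces) can be captured in a simple catalog entry. The names `CatalogEntry` and `EventCatalog`, and the sample applications and topics, are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    """One discovered event: its schema, its topic, and who touches it."""
    event_name: str
    topic: str
    schema: dict
    producers: list = field(default_factory=list)
    consumers: list = field(default_factory=list)


class EventCatalog:
    """Minimal in-memory catalog of discovered events (illustrative only)."""

    def __init__(self):
        self._entries = {}

    def register(self, entry):
        self._entries[entry.event_name] = entry

    def find_by_application(self, app_name):
        """Return the names of events an application produces or consumes."""
        return sorted(
            entry.event_name
            for entry in self._entries.values()
            if app_name in entry.producers or app_name in entry.consumers
        )


catalog = EventCatalog()
catalog.register(CatalogEntry(
    event_name="order_placed",
    topic="orders/created",
    schema={"order_id": "string", "total": "number"},
    producers=["web-shop"],
    consumers=["billing", "shipping"],
))
catalog.register(CatalogEntry(
    event_name="invoice_issued",
    topic="billing/invoices/issued",
    schema={"invoice_id": "string", "order_id": "string"},
    producers=["billing"],
))
billing_events = catalog.find_by_application("billing")
```

Here `billing_events` lists both events, because the hypothetical `billing` application consumes one and produces the other.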

2. Assessing Usability and Value

After discovering and cataloging all events, they must be evaluated for their potential value and usability. Large enterprises may generate a very large number of events, so it is important to categorize them as business, system, or technical events.

This classification helps to determine the actionable value and worthiness of exposing these events across the enterprise through event streams. Making event streams more accessible and widespread throughout the organization can improve integrated systems and increase synchronization among different parts of the organization.

3. Bundling and Documenting High Value Events

After identifying high-value events, the next step is to bundle them into a cohesive event API product. By focusing on a problem statement, different events can be combined and processed in a flexible way to deliver business value.

As with any API product, it should be easy to:

- Discover the event API product

- Document and distribute the event API product in a machine-readable format

- Manage the event API product

- Support the event API product with code generation and developer-focused tools.
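To make "machine-readable format" concrete, the sketch below bundles a few events into one product description and serializes it as JSON. The function `build_event_api_product` and the document layout are assumptions for illustration only; a real project would more likely use an established specification such as AsyncAPI than an ad hoc layout like this one.

```python
import json


def build_event_api_product(name, version, events):
    """Bundle event descriptions into a single product document.

    The layout (name / version / events) is hypothetical, not a standard.
    """
    return {
        "name": name,
        "version": version,
        "events": [
            {
                "name": event["name"],
                "topic": event["topic"],
                "schema": event["schema"],
            }
            for event in events
        ],
    }


# Illustrative high-value events; names, topics, and schemas are made up.
high_value_events = [
    {
        "name": "order_placed",
        "topic": "orders/created",
        "schema": {"order_id": "string", "total": "number"},
    },
    {
        "name": "order_shipped",
        "topic": "orders/shipped",
        "schema": {"order_id": "string", "carrier": "string"},
    },
]

product = build_event_api_product("order-tracking", "1.0.0", high_value_events)

# A machine-readable form that tools could index, validate, or generate code from.
document = json.dumps(product, indent=2, sort_keys=True)
```

Because the description is plain data, the same document could feed a developer portal, a code generator, or a validation step.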

4. Release the Event API Product to Generate Value

Here are a few steps to release the event API product:

- Test the API thoroughly to ensure it meets quality and performance standards.

- Create documentation that outlines how to use the API, including code examples and API reference.

- Set up a system to handle support and feedback from users.

- Integrate the API with existing systems and platforms.

- Launch the API and make it available for your target user base.

- Offer training and support to help users get started.

- Continuously monitor and improve the API based on user feedback.

5. Evolve and Optimize the Event API Product

As with any product, there is always room to improve: processes change and new use cases are identified. Managing the lifecycle of your event API product to keep up with these changes is crucial to finding and maintaining success.

Summary

Transitioning from synchronous to event-driven APIs modernizes the enterprise and unlocks real-time events and information for developers, architects, product owners, and business stakeholders. This evolution positions the enterprise to react quickly and effectively in a fast-paced world.


Hari Rangajaran
