What better way to kick off 2017 than by analyzing some upcoming banking regulations, right? OK, that might not sound like your idea of a good time, but there’s at least one worth talking about. It’s so huge, so impactful and so fundamental to the financial services industry that it presents a unique opportunity to reconsider technology design in a way that unifies back- and front-office functions.

Fundamental Review of the Trading Book, or FRTB, is due to hit in 2019, and its scope is so broad that many in the business jokingly refer to it as “Basel IV.” Not only that, but FRTB’s requirements are so explicit that they will definitely shape technology strategy. The timing is good, too: most banks are already actively looking for ways to cut cost and complexity with cloud and virtualization.

Among other things, FRTB will require banks to report their actual risk relative to their stated risk appetite, which will hopefully eliminate the need for the kind of bailouts it took to square things away in 2008. As part of that shift, the 20-year-old “Value at Risk” (VaR) measure of risk will be supplanted by Expected Shortfall (ES). Where VaR only gives the loss threshold that is exceeded with some small probability, ES averages the losses beyond that threshold, so it provides a much more accurate picture of performance under extreme but rare market conditions and is far more conservative than VaR. By establishing their ES, banks can set aside capital buffers by risk weighting, instead of through mark-to-market pricing.
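
To make the VaR/ES difference concrete, here is a minimal historical-simulation sketch in Python. It assumes a plain list of scenario P&L values and one simple discrete convention for the tail; real implementations differ on interpolation, scenario weighting and liquidity horizons. FRTB moves from a 99% VaR to a 97.5% ES, but the function names here are purely illustrative.

```python
from typing import List

def _losses_worst_first(pnl: List[float]) -> List[float]:
    # Losses are negative P&L, sorted so the biggest loss comes first.
    return sorted((-p for p in pnl), reverse=True)

def _tail_size(n: int, confidence: float) -> int:
    # Count of worst scenarios in the (1 - confidence) tail. The tiny epsilon guards
    # against products that land just below a whole number due to floating-point rounding.
    return max(1, int(n * (1 - confidence) + 1e-9))

def historical_var(pnl: List[float], confidence: float = 0.99) -> float:
    """VaR: the single loss sitting at the edge of the tail (a cut-off point)."""
    losses = _losses_worst_first(pnl)
    return losses[_tail_size(len(losses), confidence) - 1]

def expected_shortfall(pnl: List[float], confidence: float = 0.975) -> float:
    """ES: the average of every loss inside the tail."""
    losses = _losses_worst_first(pnl)
    k = _tail_size(len(losses), confidence)
    return sum(losses[:k]) / k

# 100 hypothetical daily scenarios: one disastrous day, a few bad ones, the rest small gains.
pnl = [-50.0, -8.0, -6.0, -4.0, -2.0] + [1.0] * 95
print(historical_var(pnl, 0.975))      # 8.0
print(expected_shortfall(pnl, 0.975))  # 29.0
```

At the same confidence level, VaR stops at the cut-off and never "sees" the 50-unit loss, while ES folds it into the average. That sensitivity to how bad the tail actually gets is what makes ES the more conservative measure.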

So where does the technology come in? To calculate ES under various simulated stress conditions, banks must model market data across all asset classes (e.g. credit, equities and FX) and across all risk factors (which can number in the thousands) as part of a process called the Internal Models Approach. Whereas Monte Carlo simulations (which are compute-intensive in their own right) have so far been used for revaluations, banks will now need to simulate risk over time series of up to 12 years, using algorithms to back-fill missing data points, in order to find the period of greatest stress. And they’ll need to run these simulations all the way down to the level of each trading desk to establish its P&L.
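
The core of that stress search can be sketched in a few lines. This is a deliberately simplified illustration under stated assumptions: gaps are back-filled by linear interpolation (one of many possible proxying methods, not necessarily what any bank or regulator uses), the P&L series has already been revalued, and the stressed period is taken to be a sliding window whose default of 250 trading days (roughly one year) is an assumption. All function names are hypothetical.

```python
import bisect
import math
from typing import List, Optional, Tuple

def backfill(series: List[Optional[float]]) -> List[float]:
    """Fill gaps (None) by linear interpolation between neighbouring observations.
    Leading/trailing gaps copy the nearest observation. An assumed method, for illustration."""
    known = [i for i, v in enumerate(series) if v is not None]
    if not known:
        raise ValueError("series has no observations")
    filled: List[float] = []
    for i, v in enumerate(series):
        if v is not None:
            filled.append(v)
            continue
        pos = bisect.bisect_left(known, i)  # index of the first observation after day i
        if pos == 0:
            filled.append(series[known[0]])
        elif pos == len(known):
            filled.append(series[known[-1]])
        else:
            lo, hi = known[pos - 1], known[pos]
            w = (i - lo) / (hi - lo)
            filled.append(series[lo] + w * (series[hi] - series[lo]))
    return filled

def expected_shortfall(pnl: List[float], confidence: float = 0.975) -> float:
    # Average of the worst (1 - confidence) share of losses (simple discrete convention).
    losses = sorted((-p for p in pnl), reverse=True)
    k = max(1, int(len(losses) * (1 - confidence) + 1e-9))
    return sum(losses[:k]) / k

def most_stressed_window(pnl: List[float], window: int = 250,
                         confidence: float = 0.975) -> Tuple[int, float]:
    """Slide a window over the P&L history; return (start index, ES) of the worst window."""
    if len(pnl) < window:
        raise ValueError("history is shorter than the window")
    best_start, best_es = 0, -math.inf
    for start in range(len(pnl) - window + 1):
        es = expected_shortfall(pnl[start:start + window], confidence)
        if es > best_es:
            best_start, best_es = start, es
    return best_start, best_es

# Hypothetical prices for one risk factor, with two missing days; one unit held long.
prices = backfill([100.0, 101.0, None, 99.0, 95.0, None, 97.0, 98.0])
pnl = [prices[t] - prices[t - 1] for t in range(1, len(prices))]
print(most_stressed_window(pnl, window=3, confidence=0.7))  # (1, 4.0)
```

Even this toy version recomputes ES at every window position for a single risk factor. Repeat that across thousands of risk factors, every asset class and every trading desk, and the scale of the compute problem becomes obvious.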

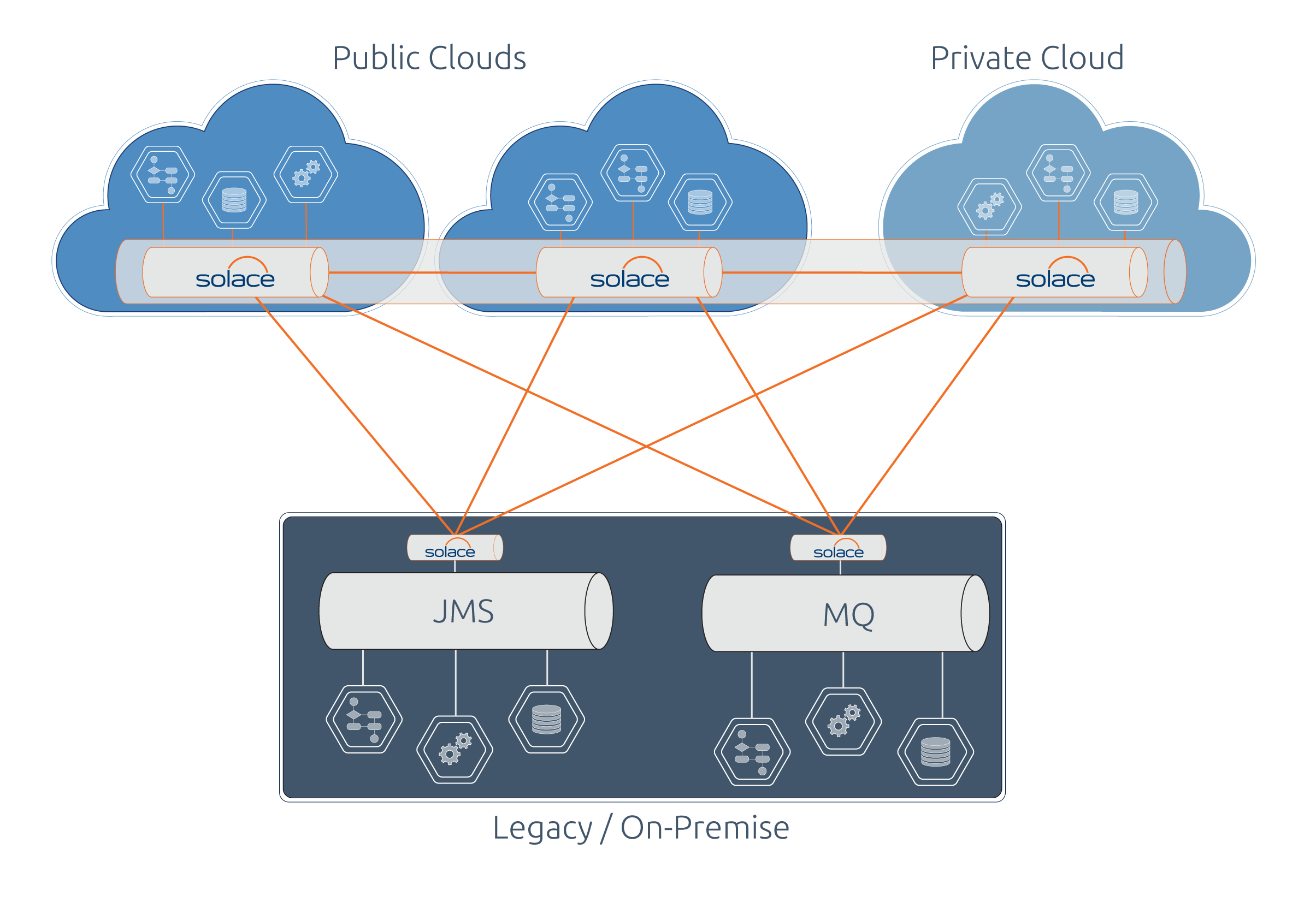

It’s a massively compute-intensive, data-intensive and storage-intensive requirement. In order to cost-effectively perform such simulations at scale, banks will realistically have to adopt a hybrid computing model that combines on-premise and cloud/virtualized systems. Some banks have already begun using such hybrid systems to do things like calculate XVAs and implement BCBS risk data aggregation. They run most compute cycles on-premise, and spin up additional processing in the cloud as needed during periods of market volatility. In essence, these banks are trading IT power to free up more capital.
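
The bursting pattern described above boils down to a simple capacity rule: fill on-premise slots first and rent cloud nodes only for the overflow. The sketch below assumes work arrives as a count of independent revaluation jobs and that each cloud node handles a fixed number of them. `plan_capacity` and `BurstPlan` are hypothetical names, not part of any cloud provider’s API.

```python
import math
from dataclasses import dataclass

@dataclass
class BurstPlan:
    on_prem_jobs: int   # jobs kept on owned hardware
    cloud_jobs: int     # overflow sent to the cloud
    cloud_nodes: int    # nodes to spin up for that overflow

def plan_capacity(pending_jobs: int, on_prem_slots: int, jobs_per_cloud_node: int) -> BurstPlan:
    """Fill on-premise slots first; round the overflow up to whole cloud nodes."""
    if pending_jobs < 0 or on_prem_slots < 0 or jobs_per_cloud_node <= 0:
        raise ValueError("invalid capacity parameters")
    on_prem = min(pending_jobs, on_prem_slots)
    overflow = pending_jobs - on_prem
    return BurstPlan(on_prem, overflow, math.ceil(overflow / jobs_per_cloud_node))

# A calm day fits on-premise; a volatile day with more scenarios to revalue bursts out.
print(plan_capacity(800, on_prem_slots=1000, jobs_per_cloud_node=64))
# BurstPlan(on_prem_jobs=800, cloud_jobs=0, cloud_nodes=0)
print(plan_capacity(3000, on_prem_slots=1000, jobs_per_cloud_node=64))
# BurstPlan(on_prem_jobs=1000, cloud_jobs=2000, cloud_nodes=32)
```

Keeping the steady-state load on owned hardware and paying for cloud capacity only on volatile days is the trade the paragraph above describes: spend on IT elasticity when it is needed rather than holding idle capacity year-round.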

In fact, a number of Solace customers are using real-time messaging to integrate transaction processing platforms, which have historically run on-premise, with centralized FRTB services in the cloud. They typically deploy appliances in the hub closest to the liquidity sources, and Virtual Message Routers (2018 Editor’s Note: the Solace Virtual Message Router is now referred to as Solace PubSub+) in the virtualized environment next to the modeling engines. Such a system meets all of their needs for guaranteed messaging (trade confirmations), slow-consumer handling (during ingestion into the big data lake) and fan-out (to multiple IMs), so architects and developers can focus on business logic and data modeling.
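
For readers less familiar with messaging, the three properties mentioned above (guaranteed delivery, slow-consumer handling and fan-out) can be illustrated with a toy in-memory broker. This is not the Solace API or how PubSub+ is implemented; the class names and the topic string are hypothetical, and a real guaranteed-messaging broker persists its spool to durable storage rather than keeping it in memory.

```python
from collections import defaultdict, deque
from typing import DefaultDict, Deque, List

class Subscriber:
    """A consumer that can hold at most `window` unacknowledged messages."""
    def __init__(self, name: str, window: int) -> None:
        self.name = name
        self.window = window
        self.in_flight: Deque[str] = deque()  # delivered, awaiting acknowledgement
        self.spool: Deque[str] = deque()      # held back because the consumer is busy

    def _pump(self) -> None:
        # Move spooled messages into flight, in order, while the consumer has room.
        while self.spool and len(self.in_flight) < self.window:
            self.in_flight.append(self.spool.popleft())

    def offer(self, msg: str) -> None:
        self.spool.append(msg)
        self._pump()

    def ack(self) -> str:
        # The consumer confirms the oldest message; only then is it released.
        # Raises IndexError if nothing is in flight.
        msg = self.in_flight.popleft()
        self._pump()
        return msg

class Broker:
    """Topic-based fan-out: every subscriber on a topic gets its own copy."""
    def __init__(self) -> None:
        self.topics: DefaultDict[str, List[Subscriber]] = defaultdict(list)

    def subscribe(self, topic: str, sub: Subscriber) -> None:
        self.topics[topic].append(sub)

    def publish(self, topic: str, msg: str) -> None:
        for sub in self.topics[topic]:
            sub.offer(msg)

broker = Broker()
engine = Subscriber("model-engine", window=10)  # fast consumer
lake = Subscriber("data-lake", window=1)        # slow consumer
broker.subscribe("trades/confirmed", engine)
broker.subscribe("trades/confirmed", lake)
for i in range(3):
    broker.publish("trades/confirmed", f"trade-{i}")
```

The slow data-lake consumer builds up a backlog in its own spool without holding back the fast modeling engine, and nothing is released until it has been acknowledged.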

FRTB’s risk management requirements are just one of the kinds of regulatory and commercial changes that will force financial firms to get better at capturing, distributing and processing massive amounts of information across interconnected datacenter and cloud environments. It seems clear to me that companies that want to keep up with such changes need to treat messaging as mission-critical technology, and make sure they put in place the most flexible, scalable data movement platform they can find. Next blog post: will we see a need for real-time ES?

Paul Nash
