Why Integrate Fabric and Solace?

The combination of Solace’s robust event streaming capabilities and Microsoft Fabric’s comprehensive analytics toolset opens up new possibilities for organizations looking to:

- Streamline real-time data processing

- Enhance decision-making with up-to-the-minute insights

- Unify data streams across multi-cloud environments

- Scale analytics operations effortlessly

- Power AI workflows

By pairing Solace’s Kafka Bridge with Fabric’s event streams feature, you can build a pipeline that carries events from a variety of sources into Microsoft Fabric for analysis.

In this guide, we’ll walk you step-by-step through the integration process, from setting up Solace Event Broker to configuring data flows in Microsoft Fabric Real-Time Intelligence. Whether you’re a data engineer, a solutions architect, or a business analyst, this tutorial will equip you with the knowledge to implement this powerful integration in your own environment.

Let’s dive in and unlock the potential of real-time event-driven analytics!

Key Technologies and Concepts

Solace Platform and Event-Driven Architecture

Solace Platform is an event-driven integration and streaming platform that lets you get data flowing between applications, devices, and people. You can use Solace Platform to build an event mesh that allows events to flow dynamically across environments and around the world. This breaks down data silos and enables truly distributed event-driven systems. The platform’s built-in Kafka Bridge is a simple solution for integration with Kafka-based systems like Microsoft Fabric Eventstream.

Microsoft Fabric and Real-Time Intelligence Eventstream

Microsoft Fabric unifies various data analytics tools, providing a comprehensive suite for data storage, processing, and analysis. Within Fabric, event streams play a key role in real-time analytics, offering a scalable, Kafka-compatible interface for ingesting and processing streaming data.

Real-Time Intelligence is a powerful service that empowers everyone in your organization to extract insights and visualize their data in motion. It offers an end-to-end solution for event-driven scenarios, streaming data, and data logs. The event streams feature (represented as an Eventstream item) in Fabric Real-Time Intelligence allows you to gather real-time data from various sources, transform it, and route it to multiple destinations. It excels at handling large volumes of real-time data while providing advanced stream processing capabilities. Its tight integration with other Fabric services enables complex analytics workflows that combine real-time data with historical insights.

The combination of Solace Platform and Microsoft Fabric’s event streams feature creates a powerful pipeline for real-time event streaming and analytics. This integration allows organizations to leverage Solace’s robust event management within Fabric’s rich analytics ecosystem, opening up new possibilities for immediate data-driven insights and decision-making.

The Integration Process

Prerequisites

Before beginning the integration process, ensure you have the following accounts set up:

- Solace Platform account

  - An active Solace Platform account

  - An active broker and permissions to manage Message VPNs and Bridges

  - A test non-exclusive queue, e.g. “hr/people/person/hired/v1”

- Microsoft Power BI or Fabric account

  - An active Power BI or Fabric account. If you don’t have one, start a free 30-day trial of Power BI Pro.

  - Access to Microsoft Fabric (https://app.fabric.microsoft.com/) with your account, through either a Microsoft Fabric subscription or a free Microsoft Fabric trial.

These accounts are essential for accessing the necessary services and resources for the integration. We’ll cover additional requirements and configurations in the subsequent steps of the integration process.

Step 1: Configure Microsoft Fabric and an eventstream

In this step, we’ll set up Microsoft Fabric and configure an eventstream to receive data from a Solace event broker.

- Access Microsoft Fabric:

  - Log in to the Microsoft Fabric portal (https://app.fabric.microsoft.com).

  - Select ‘Real-Time Intelligence’ from the homepage after logging in.

- Create a new Fabric workspace:

  - Click “Create a workspace”.

  - Provide a name for your workspace.

  - Under Advanced settings, choose a ‘License mode’. You may select ‘Trial’, ‘Fabric capacity’ with an F8 SKU, or ‘Premium capacity’ with a P1 SKU, depending on which you have access to.

  - Click “Review + create”, then “Create”.

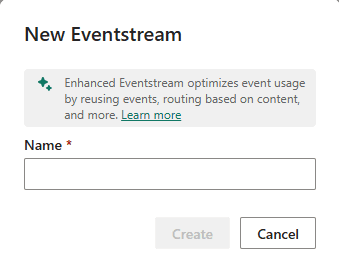

- Set up an eventstream:

- Click “Create” in the left sidebar.

- Select “Eventstream” under “Real-Time Intelligence”.

- Name your eventstream (e.g., “SolaceIntegrationStream”). To learn more, refer to “Create an eventstream in Microsoft Fabric” on Microsoft Learn.

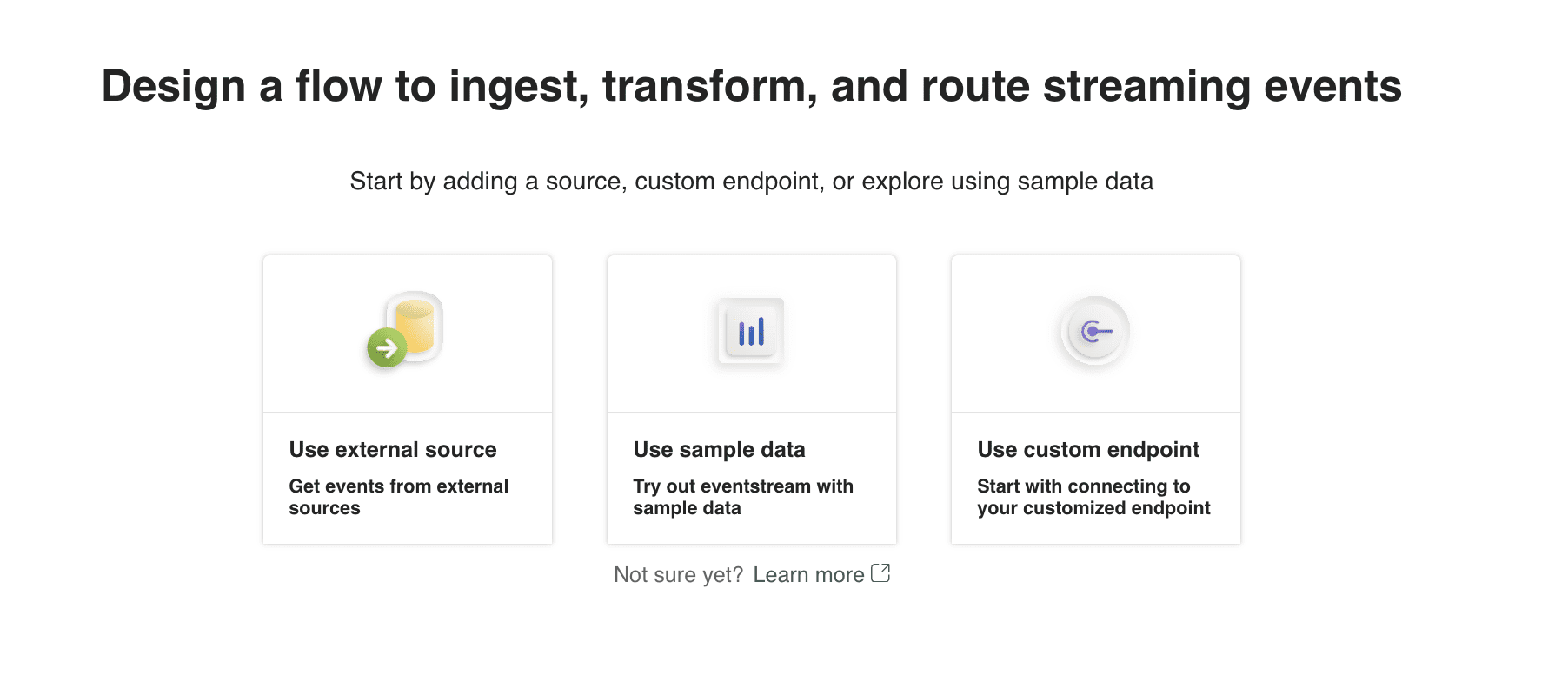

- Select “Use Custom Endpoint”.

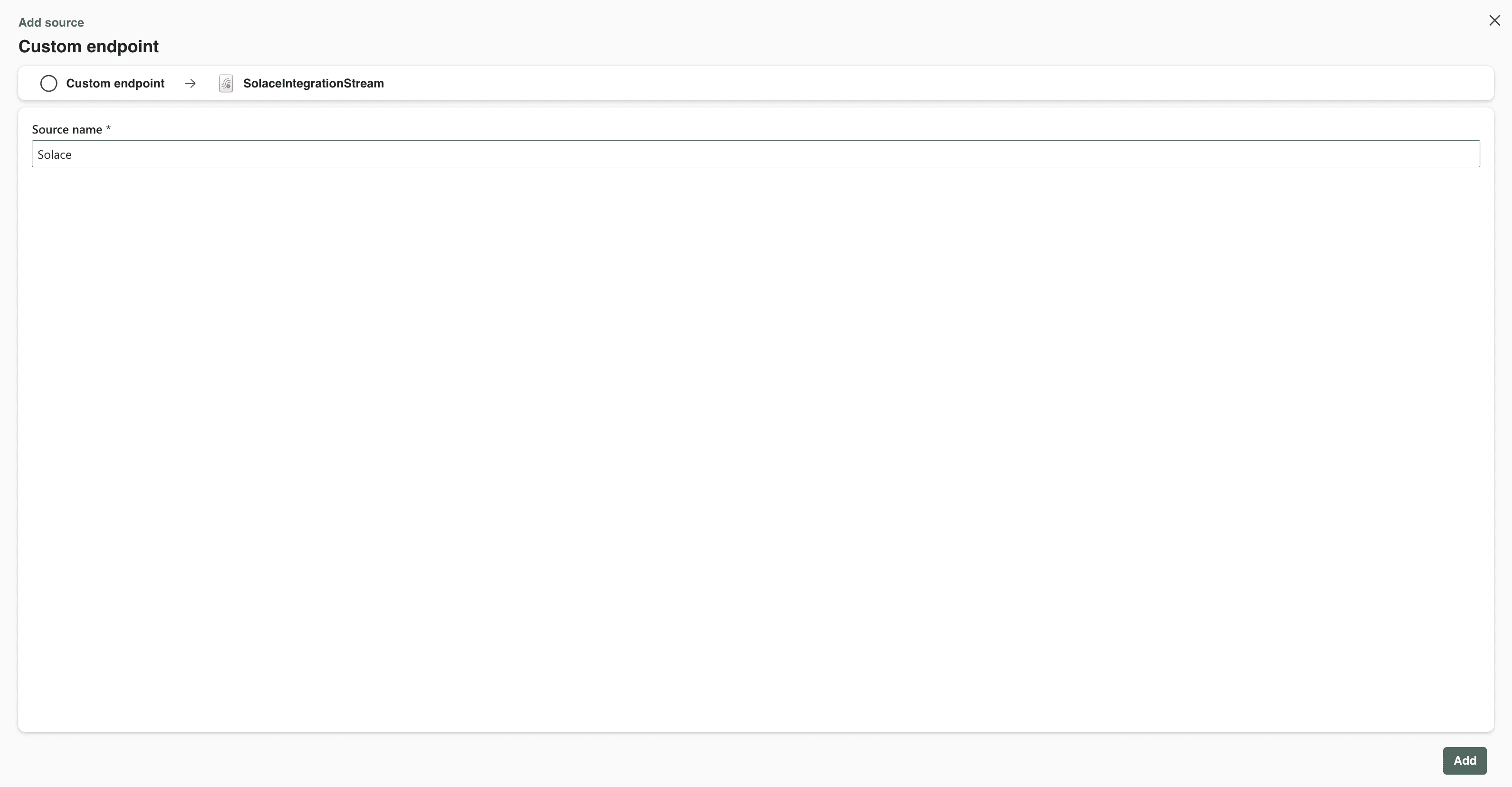

- Name your custom endpoint (e.g. “Solace”) and click Add.

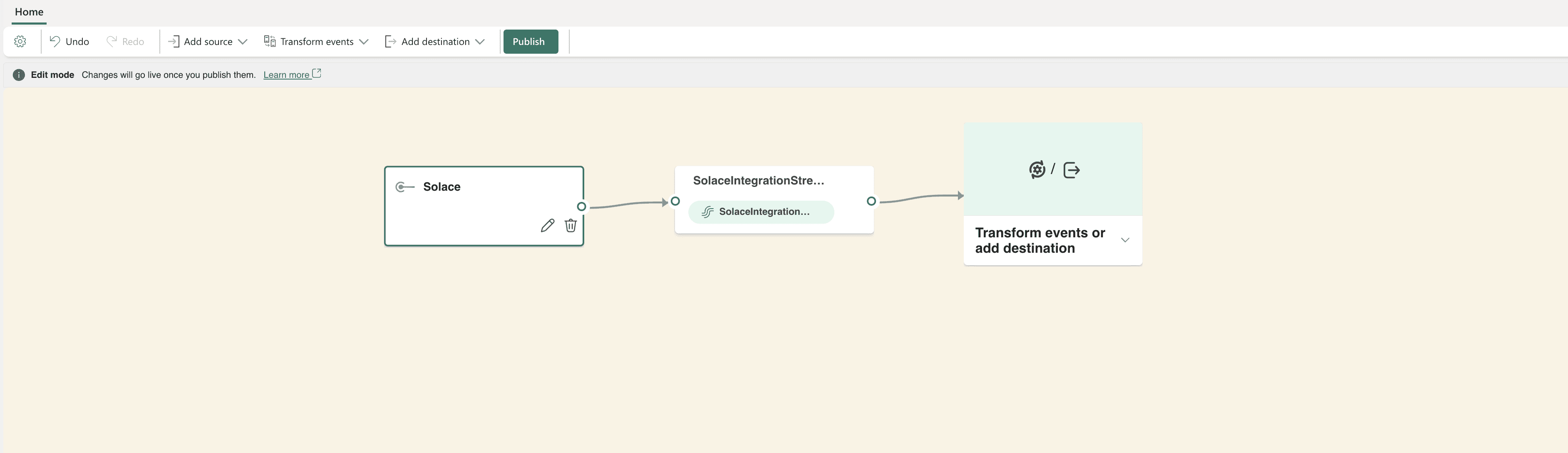

- Publish the event stream.

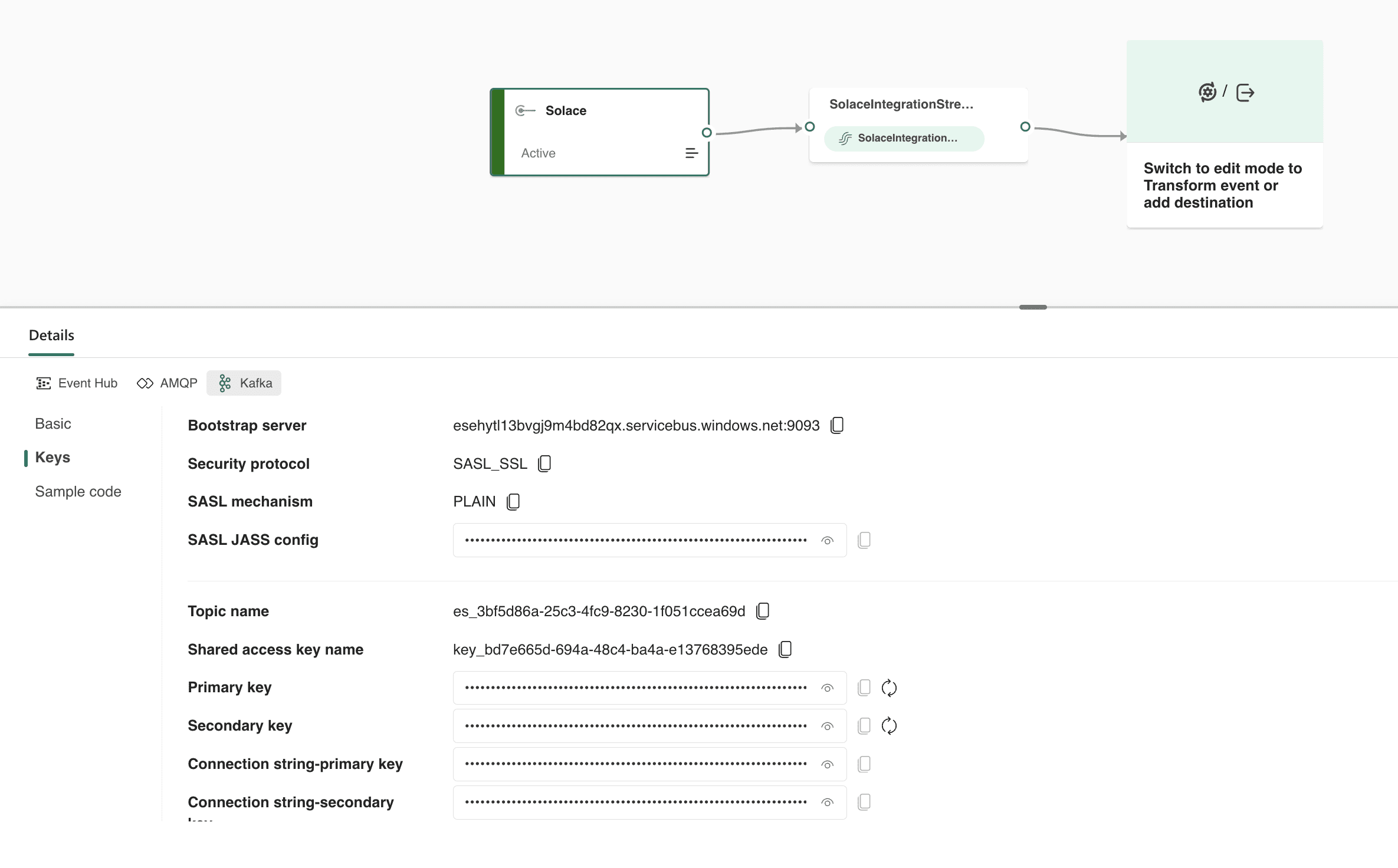

- Select the source and, in the bottom panel, select “Kafka”, then “Keys”.

- Note the Bootstrap Server URL, the Topic Name, and the “Connection String – Primary Key”. You will need all three for your broker configuration.

- Click “Sample code” and notice that it uses “$ConnectionString” as the username and the connection string itself as the password. This will become important later!
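To make the username and password convention concrete, here is a minimal sketch of the settings a Kafka client would need for the custom endpoint. It assumes a librdkafka-style client (for example, confluent-kafka) and SASL PLAIN over TLS, which is what Kafka-compatible Azure endpoints generally expect. The function name and the placeholder values are ours, not something Fabric provides, so check them against the “Sample code” panel in your own eventstream.

```python
def fabric_kafka_config(bootstrap_server: str, connection_string: str) -> dict:
    """Build Kafka client settings for a Fabric eventstream custom endpoint.

    The keys are librdkafka-style property names (an assumption about the
    client you use); the values come from the Kafka > Keys panel in Fabric.
    """
    if not bootstrap_server or not connection_string:
        raise ValueError("bootstrap server and connection string are required")
    return {
        "bootstrap.servers": bootstrap_server,
        "security.protocol": "SASL_SSL",
        "sasl.mechanism": "PLAIN",
        # Literal text, not a placeholder to substitute.
        "sasl.username": "$ConnectionString",
        # The full "Connection String - Primary Key" value.
        "sasl.password": connection_string,
    }


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    config = fabric_kafka_config(
        "example.servicebus.windows.net:9093",
        "Endpoint=sb://example.servicebus.windows.net/;SharedAccessKey=REDACTED",
    )
    print(config["sasl.username"])
```

The same two values, the literal username and the connection string as the password, are what you will enter in the Solace Kafka Sender in the next step.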

Your Microsoft Fabric environment is now set up and ready to receive events from Solace. In the next step, we’ll connect Solace to Fabric using the Kafka Bridge.

Step 2: Set up Solace Kafka Bridge

In this step, we’ll configure a Kafka Sender on an event broker in your Solace Cloud account and bind a queue to it, so that messages arriving on that queue are forwarded to your Fabric eventstream.

- Log in to your Solace Cloud account at https://console.solace.cloud/ or your dedicated SSO URL.

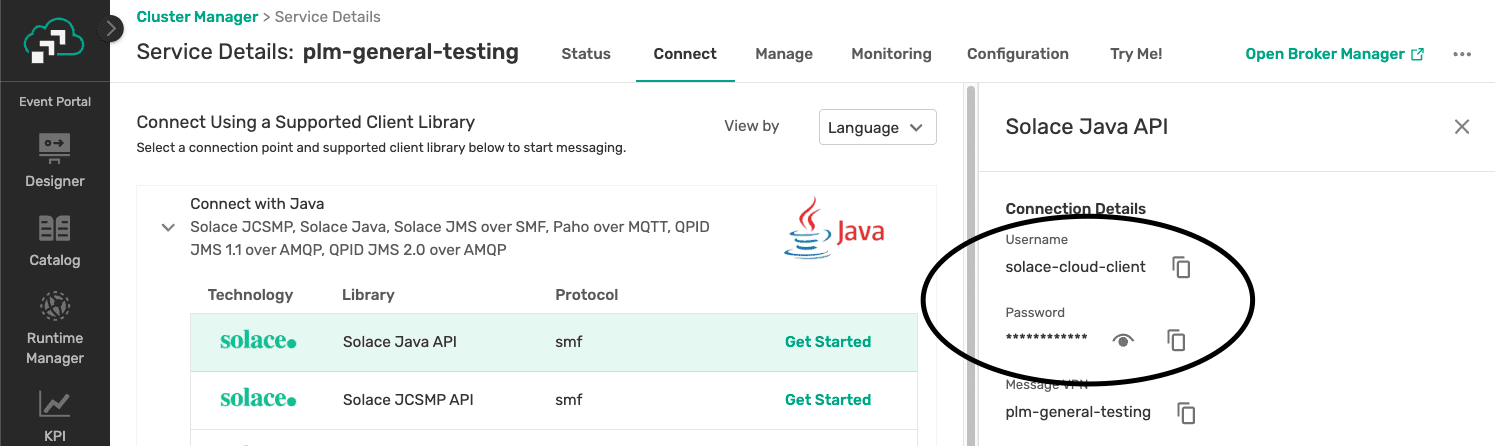

- Access your broker:

- Select “Cluster Manager” and the broker you would like to configure.

- Select Connect in the top menu and choose any connection method. Note the username and password; you’ll need them to test later.

- Select the Manage tab of your broker.

- Select “Message VPN”.

- Select “Bridges” in the left menu.

- Click “Kafka Bridges” on the top menu and then “Kafka Senders”.

- Select “+ Kafka Sender”.

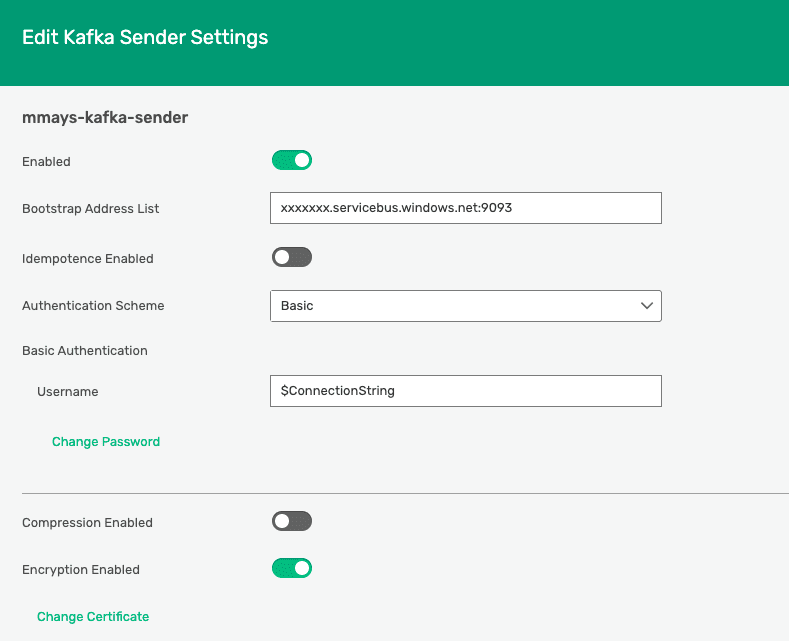

- Enable the Kafka Sender.

- Enter the Bootstrap Server URL you noted from your eventstream source configuration.

- Set Authentication Scheme to Basic.

- Enter the username “$ConnectionString”. This is not a variable; use this exact string as the username.

- Enter the password, which is the value of “Connection String – Primary Key” in Fabric.

- Save your new Kafka Sender!

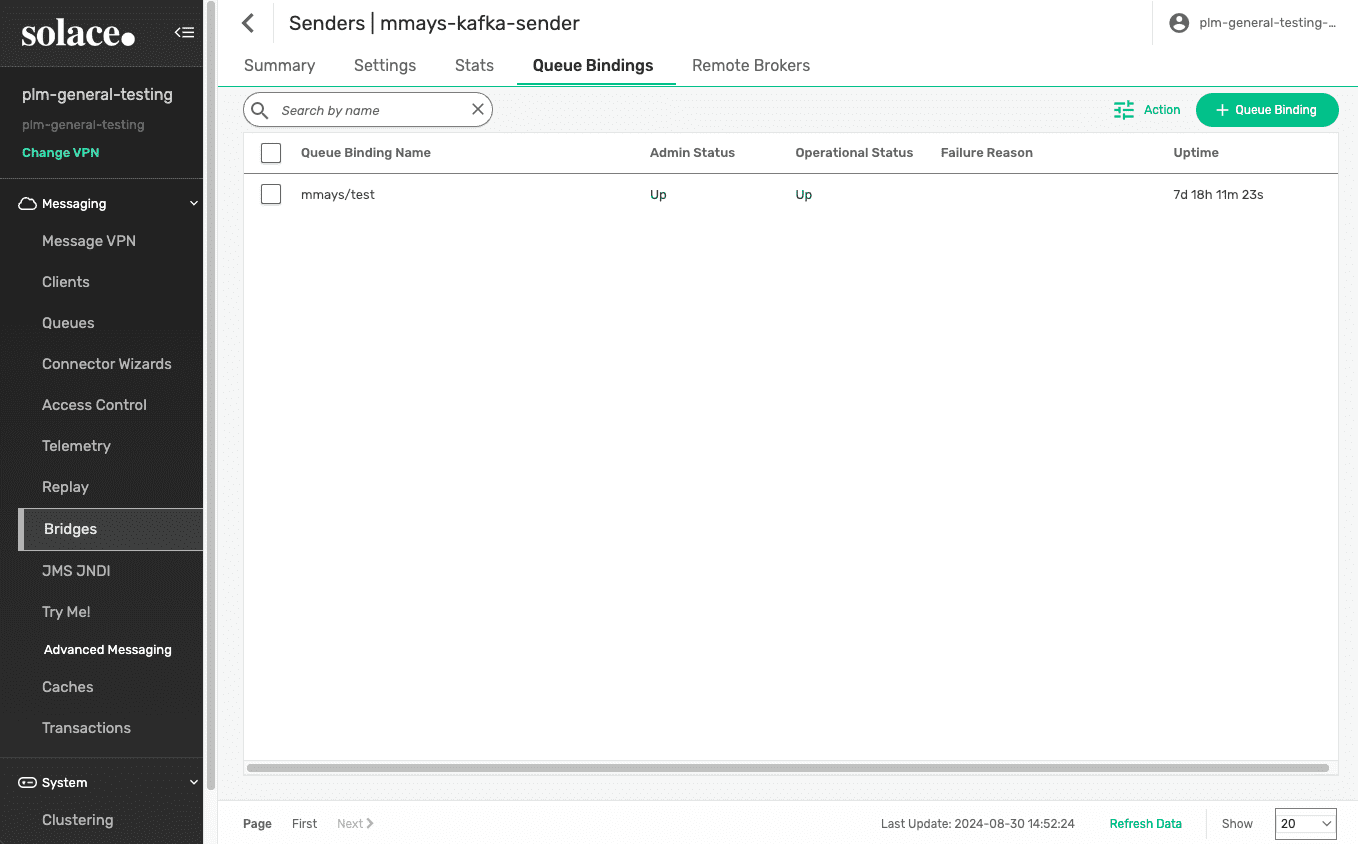

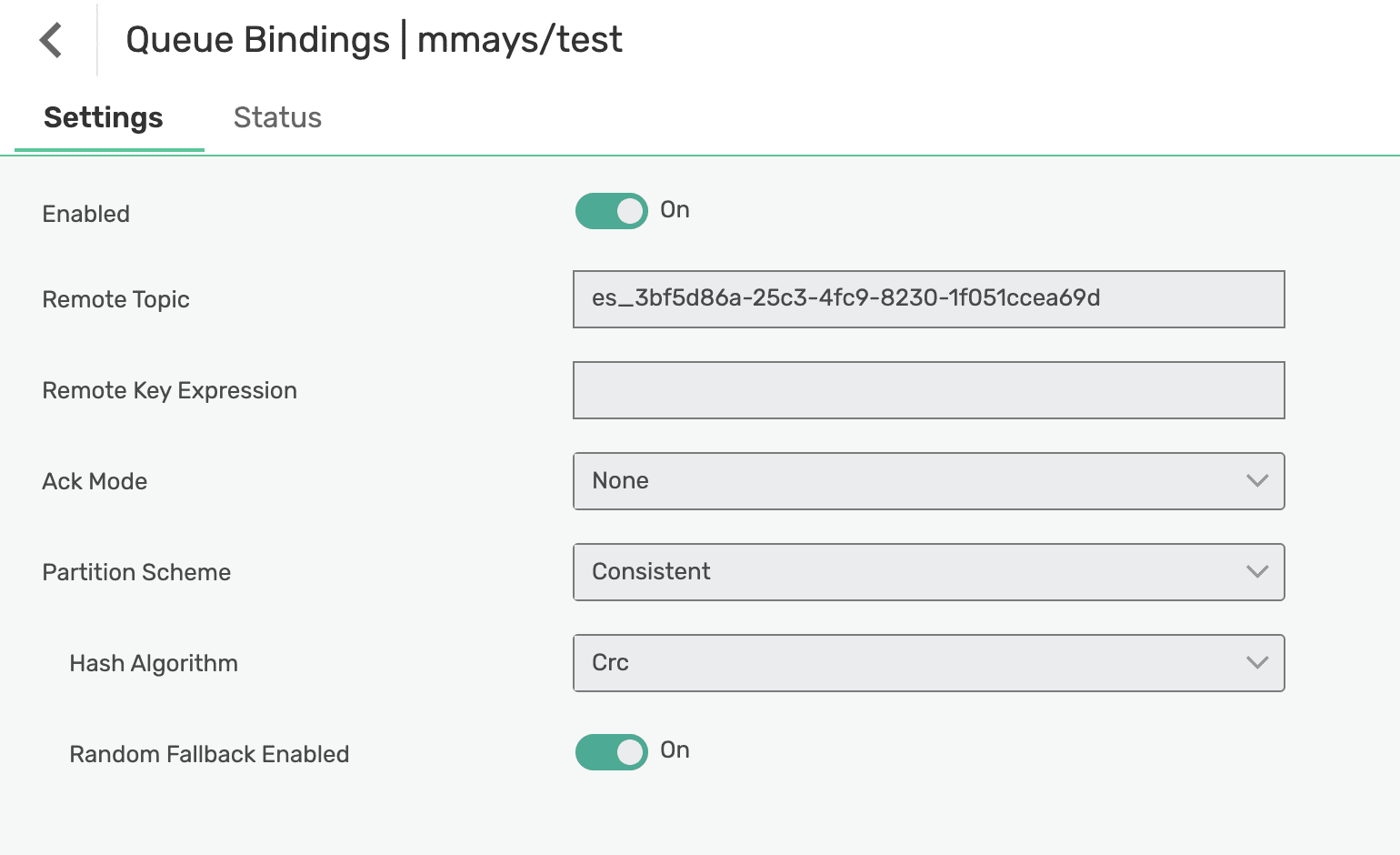

- Select Queue Bindings on the top menu of your new Bridge.

- Select “+ Queue Binding”.

- Select your Queue. (For testing purposes, make sure it is set to Non-Exclusive.)

- Set Remote Topic to the Topic Name you noted earlier from the Kafka settings of your custom endpoint in Fabric.

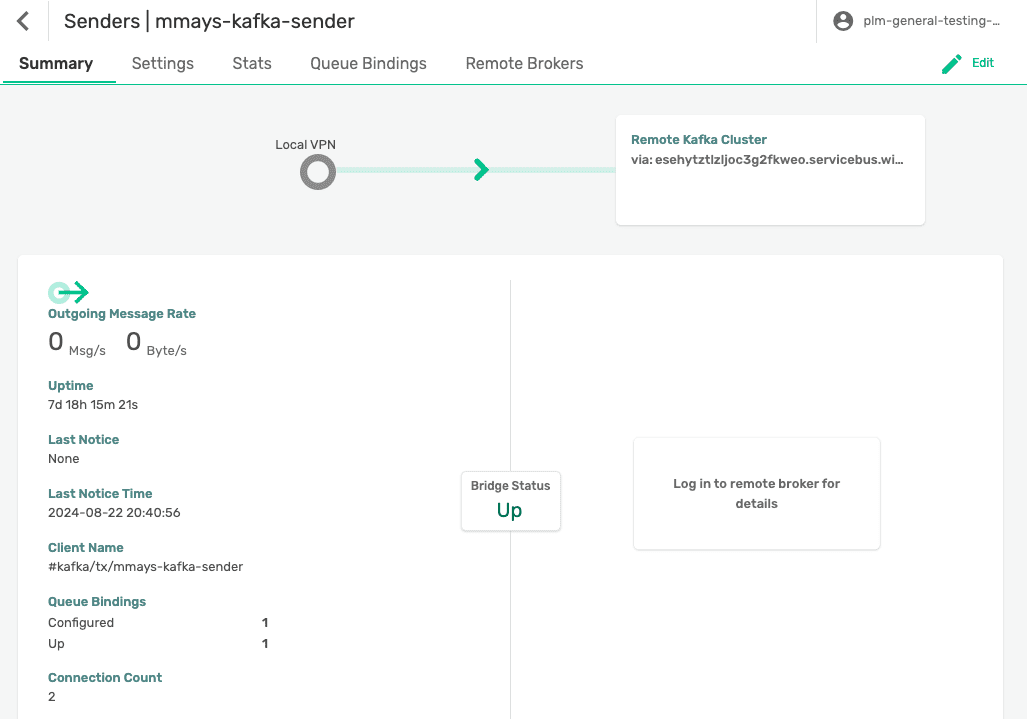

- On the summary tab, your Bridge should now be active with bound queues.
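If the Bridge does not come up, the settings above are the first things to recheck. The sketch below writes them down as a small checklist in Python. It does not call the broker or any Solace API: the class and field names are hypothetical and simply mirror the form fields described in this step.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class KafkaSenderSettings:
    # Illustrative field names that mirror the console form,
    # not Solace API attribute names.
    enabled: bool
    bootstrap_address: str
    auth_scheme: str
    username: str
    password: str
    queue_name: str
    remote_topic: str


def find_problems(s: KafkaSenderSettings, fabric_topic: str) -> List[str]:
    """Return a list of settings that differ from what this guide describes."""
    problems = []
    if not s.enabled:
        problems.append("Kafka Sender is not enabled")
    if not s.bootstrap_address:
        problems.append("Bootstrap Server URL is empty")
    if s.auth_scheme.lower() != "basic":
        problems.append("Authentication Scheme should be Basic")
    if s.username != "$ConnectionString":
        problems.append("Username must be the literal string $ConnectionString")
    if not s.password:
        problems.append("Password (the Fabric connection string) is empty")
    if not s.queue_name:
        problems.append("No queue is bound to the Kafka Sender")
    if s.remote_topic != fabric_topic:
        problems.append("Remote Topic does not match the Fabric Topic Name")
    return problems
```

An empty list means the values you recorded are consistent with this guide. It says nothing about whether the broker can actually reach Fabric, which is what the next step tests.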

You are now ready to test the connectivity from end to end!

Step 3: Test the Connection

- Use Solace’s Try Me! feature to publish a test message to your Solace topic.

  - Select Try Me! on the left sidebar.

  - Configure the Try Me! Publisher.

    - Keep the default URL but change the port to 443 instead of 1443.

    - Enter the broker username and password you noted earlier.

    - Enter your test queue, e.g. “hr/people/person/hired/v1”. This is bound to the remote topic you configured in the Queue Binding.

    - Select Persistent.

  - Configure the Try Me! Subscriber.

    - Connect with the same username and password.

    - Subscribe to the same queue.

  - Enter a test JSON message in the Publisher and send it. Make sure it is valid JSON, either an array of objects or line-separated objects. Example: [{"name":"John", "age":30, "car":null}]

  - Make sure your test message appears in the Try Me! Subscriber. Now let’s confirm it also made its way to Fabric!

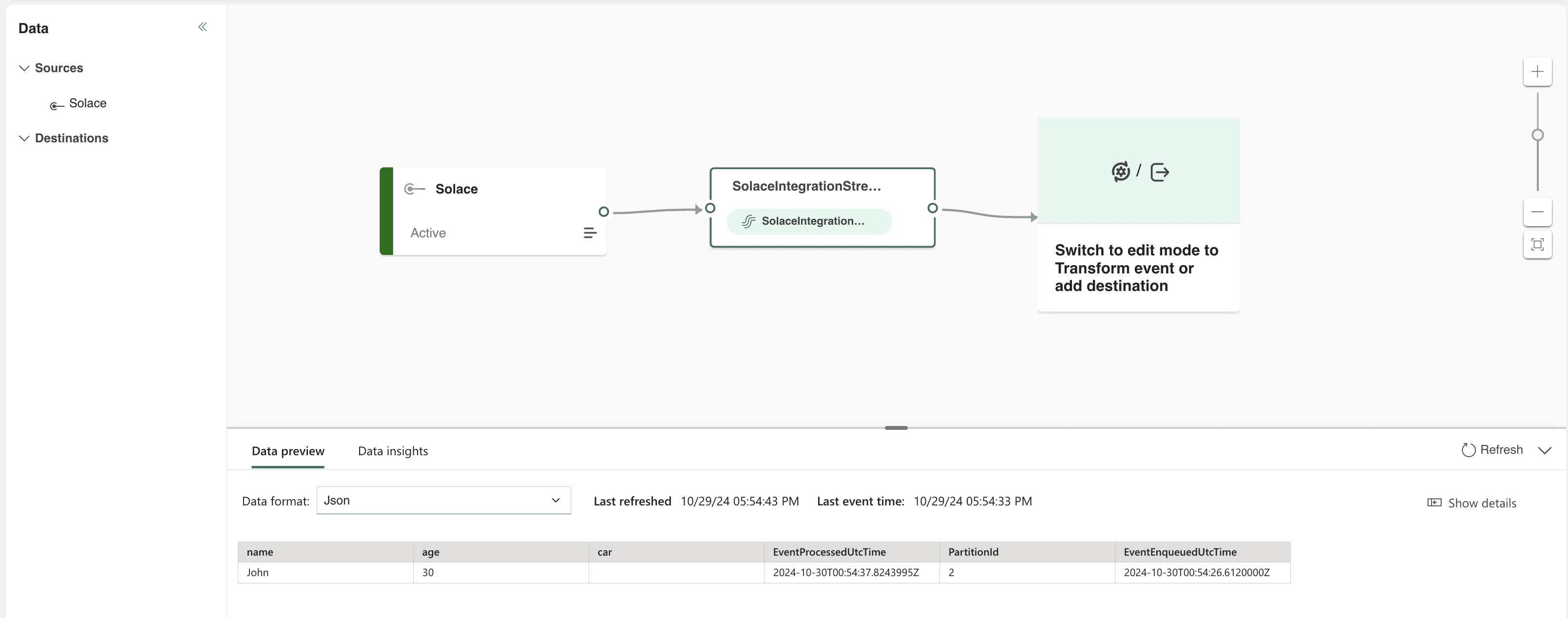

- Confirm receipt of the message in Fabric:

  - In Fabric, select your eventstream.

  - Select Data Preview and JSON.

  - Your message should appear in Fabric.

  - If you do not see your message, make sure your queue is non-exclusive, or close out the Try Me! test and try again. Also make sure your message is well-formed JSON, either an array of objects or line-separated objects.
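Malformed JSON is an easy mistake to make when pasting a test message, especially if an editor has turned straight quotes into curly ones. Here is a minimal sketch of a local check you could run before publishing. The function name is ours, and the rule it encodes (a JSON array of objects, or one JSON object per line) is simply our reading of the requirement stated above.

```python
import json


def is_valid_event_payload(text: str) -> bool:
    """True if text is a JSON array of objects or line-separated JSON objects."""
    text = text.strip()
    if not text:
        return False
    try:
        parsed = json.loads(text)
    except json.JSONDecodeError:
        # Not a single JSON document; try one object per line below.
        pass
    else:
        if isinstance(parsed, list):
            # Require at least one element, and every element must be an object.
            return bool(parsed) and all(isinstance(item, dict) for item in parsed)
        # A single object is the one-line case of line-separated objects.
        return isinstance(parsed, dict)
    try:
        lines = [line for line in text.splitlines() if line.strip()]
        return all(isinstance(json.loads(line), dict) for line in lines)
    except json.JSONDecodeError:
        return False


if __name__ == "__main__":
    print(is_valid_event_payload('[{"name":"John", "age":30, "car":null}]'))
    print(is_valid_event_payload('[{“name”:”John”}]'))  # curly quotes are not valid JSON
```

The first call accepts the example message from this step; the second rejects the same kind of message once its quotes have been converted to curly quotes.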

Your event broker should now be connected to Microsoft Fabric via the Kafka Bridge. Events published to the configured Solace topics will flow into your Fabric event stream, ready for further processing and analysis.

Processing, Analyzing and Visualizing Solace Events with Microsoft Fabric Real-Time Intelligence

When integrating Solace with Microsoft Fabric, an eventhouse can be used to handle and analyze the large volumes of real-time event data coming from Solace. Eventhouses in Real-Time Intelligence are specifically designed to efficiently manage real-time data streams, allowing organizations to ingest, process, and analyze data in near real-time.

Let’s consider a situation where a company is using Solace to stream various types of event data, such as IoT sensor readings, application logs, and financial transactions. As these events flow into Fabric, an eventhouse can handle this diverse, time-based streaming data. Eventhouse excels at processing structured, semi-structured, and unstructured data, making it ideal for the varied event types typically seen in Solace implementations. The data is automatically indexed and partitioned based on ingestion time, facilitating efficient querying and analysis.

Within the eventhouse, you can create KQL (Kusto Query Language) databases to store and query your Solace event data. These databases provide an exploratory query environment for data exploration and management. You can enable data availability in OneLake at either the database or table level, allowing for flexible data storage options. The eventhouse also offers unified monitoring and management across all databases, providing insights such as compute usage, most active databases, and recent events. This capability is particularly valuable when dealing with multiple event streams from Solace, as it allows you to optimize performance and cost across your entire event processing pipeline.

You can export Kusto Query Language (KQL) queries to a Real-Time Dashboard in Real-Time Intelligence as visuals and modify their queries and formatting as needed. This integrated dashboard improves data exploration, query performance, and visualization.

Conclusion

Integrating Solace Platform with Microsoft Fabric opens up powerful possibilities for real-time, event-driven data analytics. This guide has walked you through the key steps to establish this integration:

- Set up a Solace Event Broker and a Kafka Bridge with a queue binding.

- Configure Microsoft Fabric and an eventstream, preparing the environment to receive and process your event data.

- Connect Solace to Microsoft Fabric using the Kafka Bridge, enabling event flow between the two platforms.

You also learned how you can analyze and manage the large volumes of real-time event data in a Fabric eventhouse.

By leveraging the strengths of Solace Platform and Fabric, you can build scalable real-time data analytics solutions that can drive informed decision-making and enhance your organization’s agility in responding to events as they unfold.

Special thanks to Xu Jiang with Microsoft for reviewing this post and providing feedback.

Matt Mays is principal product manager for integrations at Solace where he is turning integration inside out by moving micro-integrations to the edge of event-driven systems. Previously, Matt worked as senior product manager at SnapLogic where he led several platform initiatives around security, resiliency and developer experience. Before that, Matt was senior product manager at Amadeus IT Group, where he launched MetaConnect, a cloud-native travel affiliate network SaaS that grew to serve over 100 airlines and metasearch companies.

Matt holds an MFA from San Jose State University's CADRE Laboratory for New Media and a BA from Vanderbilt University. When not working on product strategy, Matt likes to explore generative art, AR/VR, and creative coding.
