Yesterday I was joined by a few of the leaders of our product team to debut and demonstrate some of the really cool capabilities we’re adding to PubSub+ Platform. Our main goals these days are to make it easier for enterprises like yours to integrate applications in an event-driven manner, and to manage the resulting event mesh.

You can watch the webcast on demand here, and I’ll summarize the high points below.

One of the big pieces of news is that you can now purchase and deploy PubSub+ Platform within the Google Cloud Marketplace and the Red Hat Ecosystem Catalog, and from SAP via an OEM arrangement as SAP Integration Suite, Advanced Event Mesh. And of course we’re still in the AWS and Azure marketplaces.

Easier Integration of Kafka, MQ and More!

We’re always introducing new adapters and APIs that let you make other applications and infrastructure components (like ESBs, iPaaS environments and even other event brokers) part of the event mesh you build with our technology, but frankly we’re on fire right now! Here’s a summary of what we’ve been up to:

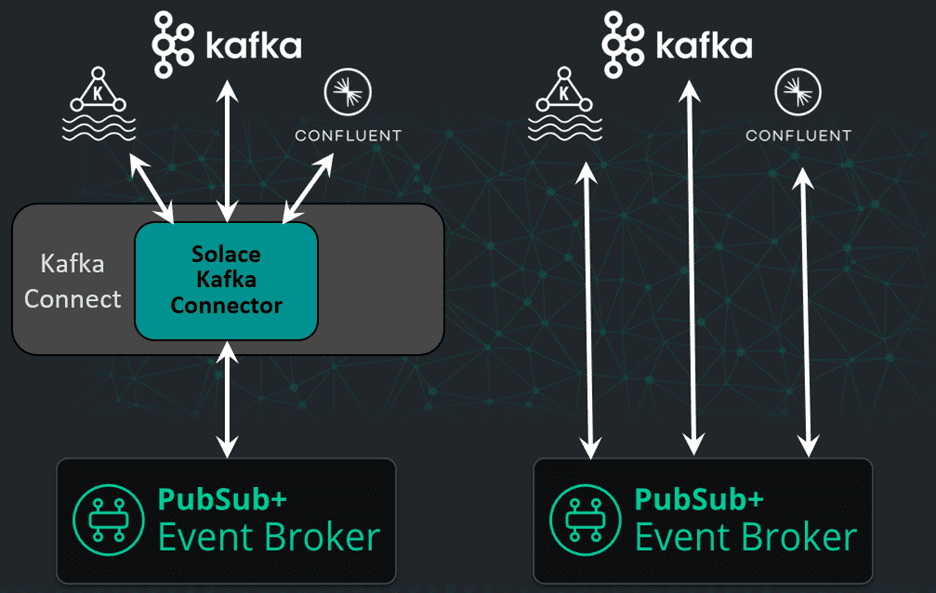

Fully Integrated Kafka Client Bridge

Our Kafka source and sink connectors are effective and popular, but they’re still separate components and you need a Kafka Connect infrastructure to run them. That’s why we’ve decided to bake Kafka connectivity into our event broker so you can connect Kafka clusters to your event mesh with simple configuration commands on the broker. This built-in integration reduces cost as well as architectural and operational complexity, and we’ll be releasing it early next year.

Open Source Connectors

The other big thing we’re introducing to help you integrate more assets into your event mesh is a set of open source connectors based on the Spring Cloud Stream Applications project. They’ll use our existing Spring Cloud Stream binders for publishers and consumers, and they’ll be standalone apps that you can run with or without Docker and deploy into Kubernetes.

These rich connectors will be HA-capable and generate metrics that help you understand their performance and utilization, either through a built-in display or integration with tools like CloudWatch, Datadog, Dynatrace and Elastic.

We’ll be launching the first of these connectors, for IBM MQ, very soon, with a connector for TIBCO EMS to follow. From there we’ll launch new connectors based on customer demand, with some likely candidates being Java Database Connectivity (JDBC), Debezium for change data capture, and (S)FTP. They’ll be available as open source, with the option to pay for support.

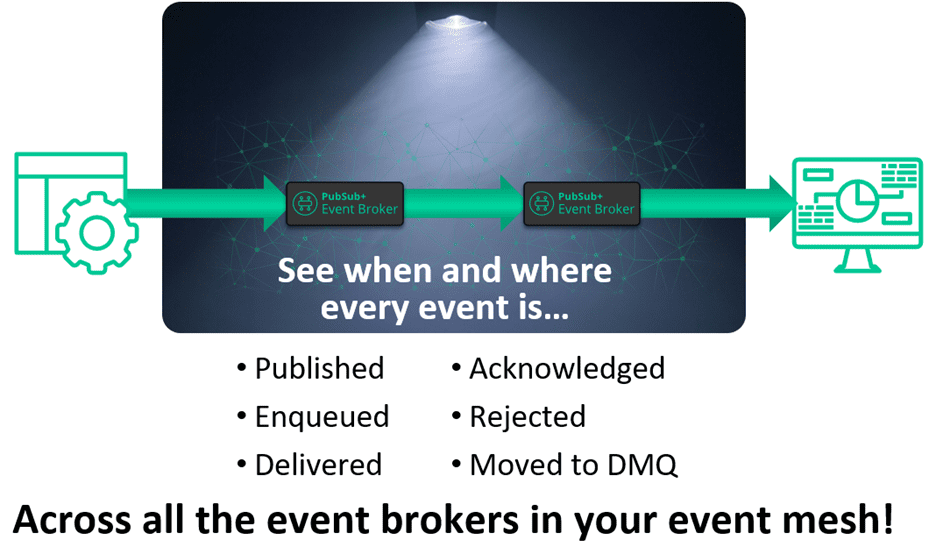

Distributed Event Tracing Shines Light on Your Event Mesh

We know that sometimes an event mesh can seem shrouded in mystery. Events go in, and they come out, but you don’t always have deep, detailed visibility into what happens along the way. In the case of failures, slowdowns or undelivered messages, this can leave you unable to answer some seemingly straightforward questions: Was that message published? Where did it go? Who got it? Who was supposed to but didn’t? Which microservice is too slow? And forget about the “why” that follows each of those!

We’re excited to announce that soon you’ll be able to get answers to those questions and more, thanks to the event tracing functionality we’re adding to PubSub+ Platform. Our distributed tracing functionality emits trace events in OpenTelemetry format so you can collect, visualize and analyze them in any OpenTelemetry-compatible tool such as Jaeger, Datadog, Dynatrace and many others.

You’ll be able to tell not just whether a given message was published but exactly when and by whom, where exactly it went, down to individual hops, and who received it and when…or why not!

This hot new distributed tracing functionality will let you observe events step by step so you can easily troubleshoot delivery and performance problems and ensure the completion of business transactions. You can watch Ed Funnekotter, VP of our core engineering team, demonstrate an early version of distributed tracing here; he showed how a retailer could quickly troubleshoot order status events that were not reaching its analytics application. The feature will be available as part of PubSub+ Platform in the fall of 2022.
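To make the idea concrete, here is a minimal sketch of how per-hop trace data can answer “where did it go?” and “who was supposed to get it but didn’t?”. It is not the PubSub+ trace format or the OpenTelemetry SDK: the `Span` fields, the span names and the helper functions are all hypothetical, chosen only to mirror the general OpenTelemetry idea that every step of one message’s journey is a span sharing a trace ID.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Span:
    """One step in a message's journey (hypothetical, simplified fields)."""
    trace_id: str             # shared by every span for the same message
    span_id: str
    parent_id: Optional[str]  # None for the root (publish) span
    name: str                 # assumed names: "publish", "receive", "deliver"
    actor: str                # publisher, broker, or consumer involved
    start_ms: int
    ok: bool = True


def path_of(spans, trace_id):
    """Return the spans of one trace ordered by start time."""
    return sorted((s for s in spans if s.trace_id == trace_id),
                  key=lambda s: s.start_ms)


def missing_consumers(spans, trace_id, expected):
    """Return expected consumers that have no successful 'deliver' span."""
    delivered = {s.actor for s in spans
                 if s.trace_id == trace_id and s.name == "deliver" and s.ok}
    return sorted(set(expected) - delivered)


# Made-up sample data: one order status event crossing two brokers.
spans = [
    Span("t1", "a", None, "publish", "order-service", 0),
    Span("t1", "b", "a", "receive", "broker-1", 2),
    Span("t1", "c", "b", "receive", "broker-2", 5),
    Span("t1", "d", "c", "deliver", "billing", 7),
    Span("t1", "e", "c", "deliver", "analytics", 9, ok=False),
]

hops = [f"{s.name}:{s.actor}" for s in path_of(spans, "t1")]
missing = missing_consumers(spans, "t1", ["billing", "analytics"])
print(hops)
print(missing)
```

In practice a tracing backend such as Jaeger does this grouping and visualization for you; the sketch only shows why a shared trace ID plus one span per hop is enough to reconstruct the journey.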


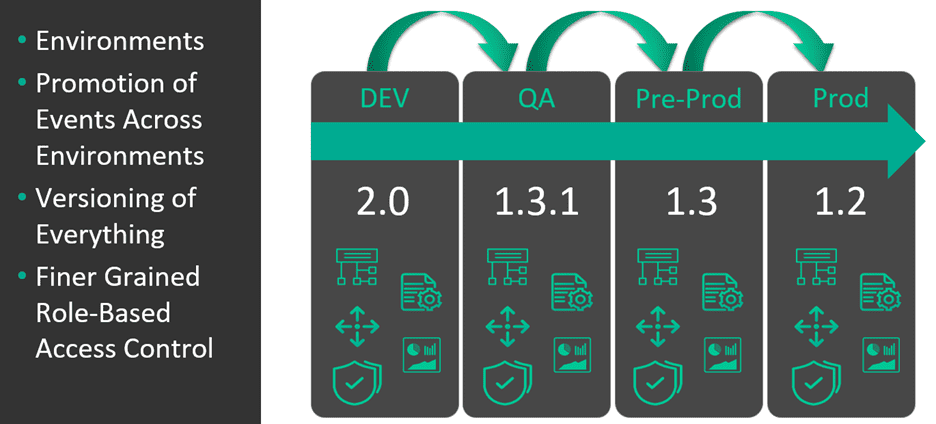

Event Lifecycle Management

The addition of sophisticated lifecycle management features to PubSub+ Event Portal, like finer-grained role-based access controls, object versions, lifecycle states and environments, will help you more effectively govern your event mesh to ensure consistent development practices and security.

Applications produce and consume different events as they evolve and are promoted along the software delivery path from dev to QA to pre-prod to prod. That means you need to do the same type of promotion with your definitions for events, schemas, and applications so everyone knows which version of each event or schema is available in which environment. This also requires that you be able to version these artifacts so you can have different versions of the same event or schema in dev, QA, pre-prod, and prod as you evolve or deprecate them. Without this type of lifecycle management, if you have a few hundred or a few thousand events across three or four environments, how can you possibly manage the interdependencies of shared schemas, events or applications without negative impacts?
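As a rough illustration of the bookkeeping this implies, the sketch below tracks which version of an event is available in which environment and promotes versions along the dev, QA, pre-prod, prod path. It is not Event Portal’s data model or API: the `Catalog` class, the state names and the `OrderStatus` event are assumptions made up for the example.

```python
from dataclasses import dataclass, field

# Promotion order taken from the delivery path described above.
ENVIRONMENTS = ["dev", "qa", "pre-prod", "prod"]


@dataclass
class EventVersion:
    name: str
    version: str
    state: str = "released"  # hypothetical states: "released", "deprecated"
    environments: set = field(default_factory=set)


class Catalog:
    """Hypothetical in-memory catalog of versioned event definitions."""

    def __init__(self):
        self._versions = {}

    def add(self, name, version):
        """Register a new version; it starts out available in dev only."""
        ev = EventVersion(name, version, environments={"dev"})
        self._versions[(name, version)] = ev
        return ev

    def promote(self, name, version):
        """Make the version available in the next environment on the path."""
        ev = self._versions[(name, version)]
        if ev.state == "deprecated":
            raise ValueError(f"{name} {version} is deprecated")
        for env in ENVIRONMENTS:
            if env not in ev.environments:
                ev.environments.add(env)
                return env
        return None  # already available everywhere

    def deprecate(self, name, version):
        self._versions[(name, version)].state = "deprecated"

    def available(self, env):
        """List (name, version, state) for everything visible in env."""
        return sorted((ev.name, ev.version, ev.state)
                      for ev in self._versions.values()
                      if env in ev.environments)


catalog = Catalog()
catalog.add("OrderStatus", "1.0.0")
for _ in range(3):                       # dev -> qa -> pre-prod -> prod
    catalog.promote("OrderStatus", "1.0.0")
catalog.deprecate("OrderStatus", "1.0.0")

catalog.add("OrderStatus", "2.0.0")      # new version, dev only
catalog.promote("OrderStatus", "2.0.0")  # now also in qa

print(catalog.available("prod"))
print(catalog.available("qa"))
```

Even in this toy form, the lookup by environment is what lets a team see that prod still carries only the deprecated version while the replacement is working its way through QA.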

Yesterday we unveiled a bunch of lifecycle management capabilities that will let you do exactly that, and Event Portal Product Manager Darryl MacRae demonstrated how they work. He showed how to work out why an order management system was publishing order status events without the customer ID: it was using a deprecated event. He then tested the new version of the application in the dev environment before promoting it to production. You can watch Darryl’s demo here.

Last but Not Least: Self-Managed Event Portal

I always love to close with “one more thing” à la Columbo, and this time it was the fact that we’ll soon let you run PubSub+ Event Portal either on-premises or in your own cloud.

Today PubSub+ Event Portal is only available as a service as part of PubSub+ Cloud. There are lots of advantages to that, of course, but for customers in heavily regulated industries, or where using a SaaS is not yet easy for some reason, it can be difficult or impractical to evaluate or use the software.

To address that, we are making Event Portal available for proof-of-concept use this summer as a Kubernetes microservices application that you can run in your own Kubernetes cluster, no matter where that cluster is deployed, so you can satisfy your regulatory requirements.

Conclusion

This was our second official product update, and I’d really like to know what you think! Do you find these updates useful? What would you like more of? Drop me a note at shawn.mcallister@solace.com or sound off in our developer community.

Shawn McAllister
