Google Cloud Bigtable via Cloud Functions

This guide describes how to integrate your event mesh with Google Cloud Platform (GCP) Bigtable by way of GCP Cloud Functions.

The easiest way to integrate with Bigtable is through Cloud Functions. Using a Cloud Function as the glue also lets you validate and pre-format messages before writing them to Bigtable.
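
As a rough illustration of that validation and pre-formatting step, here is a minimal sketch. It assumes the payload is published as strict JSON with double-quoted keys and the `stock` and `price` fields used in the test later in this guide. The helper name `validate_stock_message` and the specific rules are hypothetical, not part of the original function.

```python
import json


def validate_stock_message(raw: bytes) -> dict:
    """Parse and validate a payload shaped like {"stock": "aapl", "price": 180}."""
    try:
        message = json.loads(raw)
    except (UnicodeDecodeError, json.JSONDecodeError) as exc:
        raise ValueError(f"payload is not valid JSON: {exc}") from exc

    if not isinstance(message, dict):
        raise ValueError("payload must be a JSON object")

    stock = message.get("stock")
    price = message.get("price")
    if not isinstance(stock, str) or not stock.strip():
        raise ValueError("'stock' must be a non-empty string")
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(price, bool) or not isinstance(price, (int, float)):
        raise ValueError("'price' must be a number")
    if price < 0:
        raise ValueError("'price' must not be negative")

    # Pre-format: normalise the ticker so stored values are consistent.
    return {"stock": stock.strip().upper(), "price": float(price)}
```

Inside the Cloud Function you could call this on `request.data` and return a 4xx response when it raises `ValueError`. Note that a single-quoted payload such as `{'stock':'aapl','price':180}` is not strict JSON and would be rejected by this sketch.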

Features & Use Cases

Here is what you need to do to set up the integration:

-

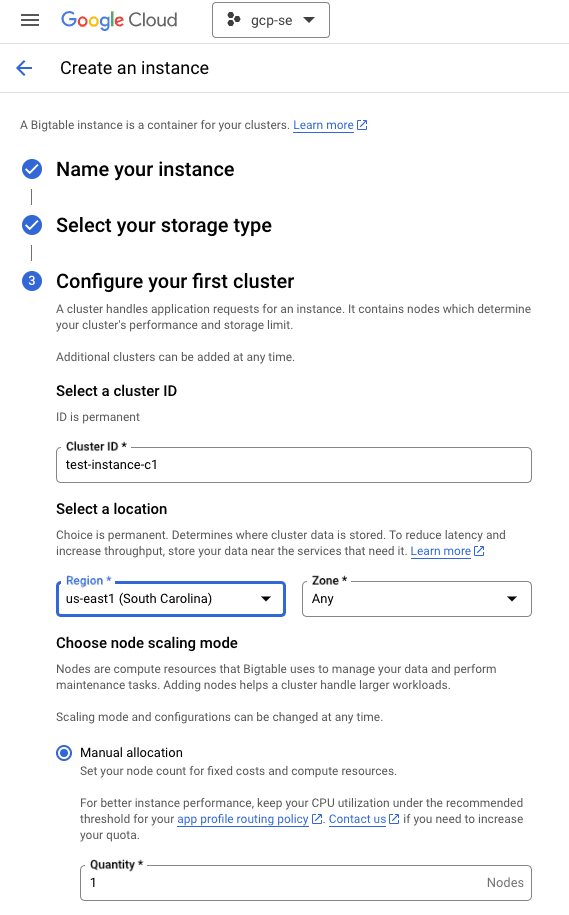

Create an instance of the BigTable, easy to do. No need to create the table yet.

-

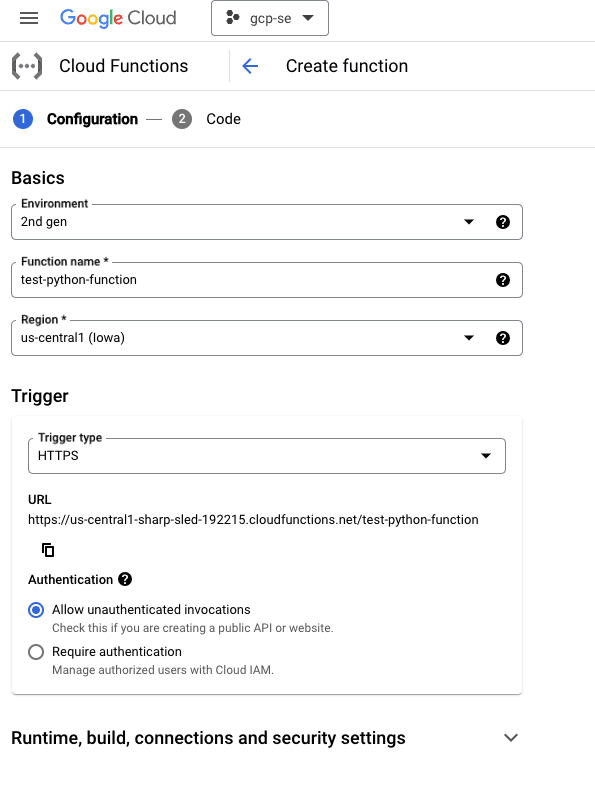

Once the instance is created, you can use the following Cloud Functions code. You will need to create a Cloud Function – set the trigger type to HTTPS and select “Allow Unauthenticated Invocations” option

- I used Python 3.12 for my function, but you can use any other supported language as well. Upload the code at the bottom of this page to Cloud Functions, and make sure requirements.txt lists the necessary libraries.

- Take a look at GCP's sample code as well: https://github.com/googleapis/python-bigtable/blob/main/samples/hello/main.py

- You will need to tweak the Bigtable schema as needed.

- Once the Cloud Function is set up, you can use our docs to set up an integration between Solace and Cloud Functions. This was super smooth and took less than a minute.

- Finally, send a message to a topic that is mapped to your Solace queue and wait for the message to be written to Bigtable.

- The test was done via PubSub+ Try Me, and the payload was: {'stock':'aapl','price':180}
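
For the schema-tweaking step above, one common adjustment is the row key. Bigtable sorts rows lexicographically by key, so a key that starts with a timestamp sends consecutive writes to the same part of the table. The sketch below shows one possible alternative, assuming reads are mostly per stock symbol. The helper name `build_row_key` and the `<stock>#<timestamp>` layout are illustrative assumptions, not something the sample code in this guide uses.

```python
import datetime


def build_row_key(stock: str, when: datetime.datetime) -> bytes:
    """Return a row key of the form b'<stock>#<UTC timestamp>'.

    Leading with the stock symbol groups each symbol's rows together,
    and the fixed-width timestamp keeps them in chronological order.
    """
    if when.tzinfo is None:
        raise ValueError("pass a timezone-aware datetime")
    stamp = when.astimezone(datetime.timezone.utc).strftime("%Y%m%dT%H%M%S.%fZ")
    return f"{stock.lower()}#{stamp}".encode()


example_time = datetime.datetime(2024, 1, 2, 3, 4, 5, 678, tzinfo=datetime.timezone.utc)
print(build_row_key("AAPL", example_time))  # b'aapl#20240102T030405.000678Z'
```

With keys like this, all rows for one symbol can be read with a prefix scan on `aapl#`. Whether that layout fits depends on your own query patterns.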

requirements.txt
----------------

    functions-framework==3.*
    google-cloud-bigtable

main.py
----------

    import datetime

    import functions_framework
    from google.cloud import bigtable
    from google.cloud.bigtable import column_family


    @functions_framework.http
    def hello_http(request):
        # Capture the message payload that is passed from Solace.
        # Make sure the incoming message format suits the data that needs to be written.
        # For this sample, the published message is a JSON-style payload, stored as-is:
        # {'stock':'aapl','price':180}
        data = request.data

        # Project information
        project_id = "<ENTER_YOUR_PROJECT_ID>"
        instance_id = "<ENTER_YOUR_INSTANCE_ID>"
        table_id = "<ENTER_YOUR_TABLE_ID>"

        # The client must be created with admin=True because it may create a table.
        client = bigtable.Client(project=project_id, admin=True)
        instance = client.instance(instance_id)

        # [START bigtable_hw_create_table]
        table = instance.table(table_id)

        # Column family GC policy: retain only the most recent 2 versions.
        max_versions_rule = column_family.MaxVersionsGCRule(2)
        column_family_id = "id"
        column_families = {column_family_id: max_versions_rule}

        if not table.exists():
            print("Creating the {} table.".format(table_id))
            print("Creating column family {} with Max Version GC rule...".format(column_family_id))
            table.create(column_families=column_families)
        else:
            print("Table {} already exists.".format(table_id))
        # [END bigtable_hw_create_table]

        # [START bigtable_hw_write_rows]
        # Use one UTC timestamp for both the row key and the cell.
        now = datetime.datetime.now(datetime.timezone.utc)
        column = "payload".encode()
        row_key = str(now).encode()
        row = table.direct_row(row_key)
        row.set_cell(column_family_id, column, data, timestamp=now)
        table.mutate_rows([row])
        # [END bigtable_hw_write_rows]

        # Returning a plain string sends it as the body of an HTTP 200 response.
        return "200"
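
The sample above ignores the return value of `table.mutate_rows(...)`. That call returns one status object per row, where a `code` of 0 means the mutation succeeded. A minimal sketch of checking those statuses follows. The helper name `failed_mutations` is hypothetical, and the `SimpleNamespace` objects are stand-ins so the snippet runs without a Bigtable instance.

```python
from types import SimpleNamespace


def failed_mutations(statuses):
    """Return (index, code, message) for every row mutation that did not succeed.

    Each status is expected to expose `.code` and `.message`; code 0 means OK.
    """
    return [(i, s.code, s.message) for i, s in enumerate(statuses) if s.code != 0]


# Stand-in statuses for illustration only; real ones come from table.mutate_rows(rows).
example = [
    SimpleNamespace(code=0, message=""),
    SimpleNamespace(code=4, message="deadline exceeded"),
]
print(failed_mutations(example))  # [(1, 4, 'deadline exceeded')]
```

In the function, you might call `failed_mutations(table.mutate_rows([row]))` and return a 5xx response when the list is not empty, so that the caller can see the write did not succeed.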