Here’s why you may need both

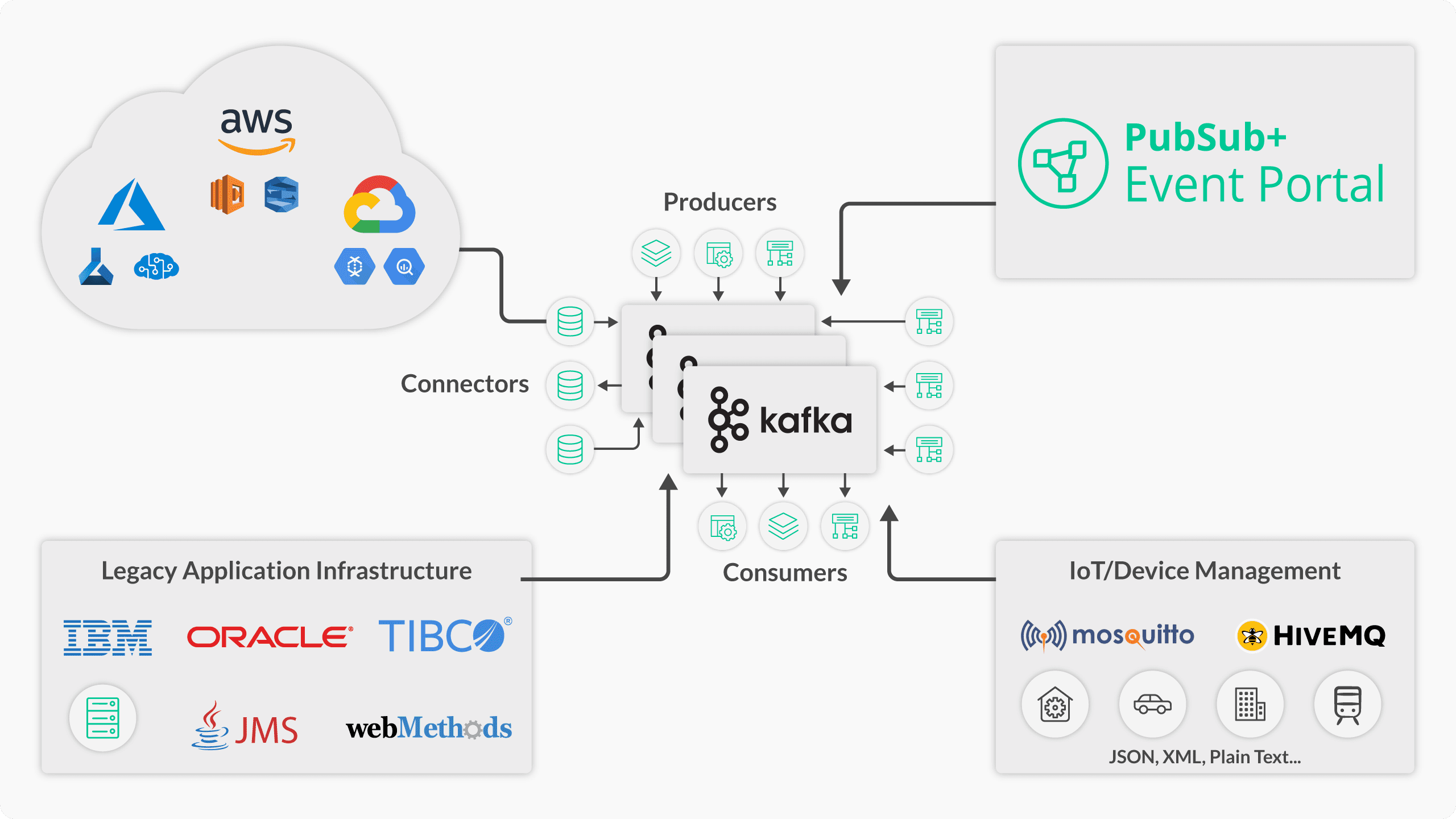

Solace and Kafka brokers

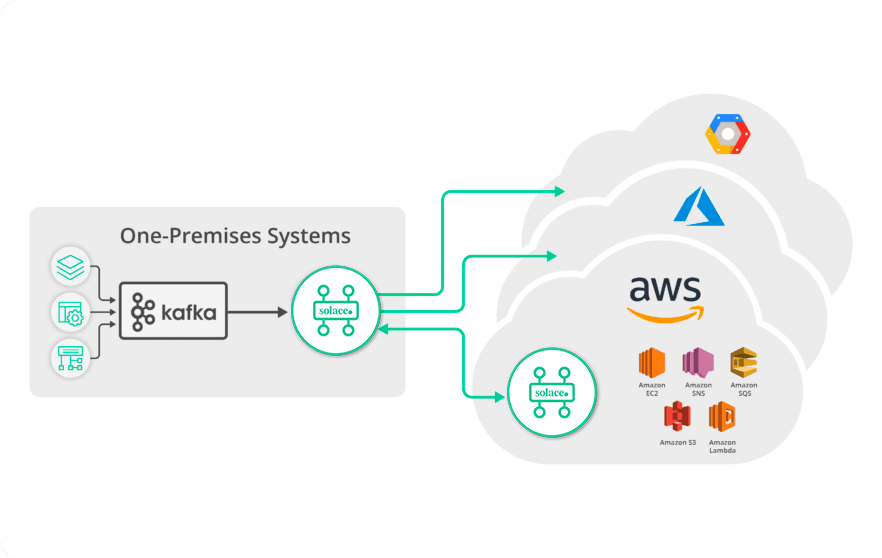

Running real-time analytics across the hybrid cloud?

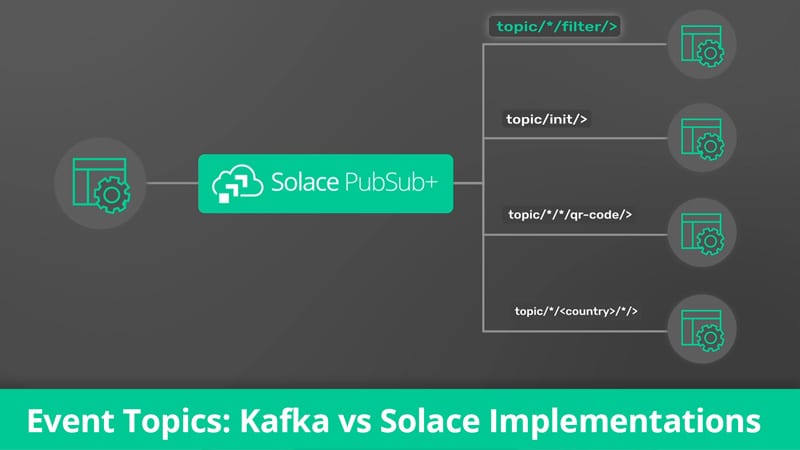

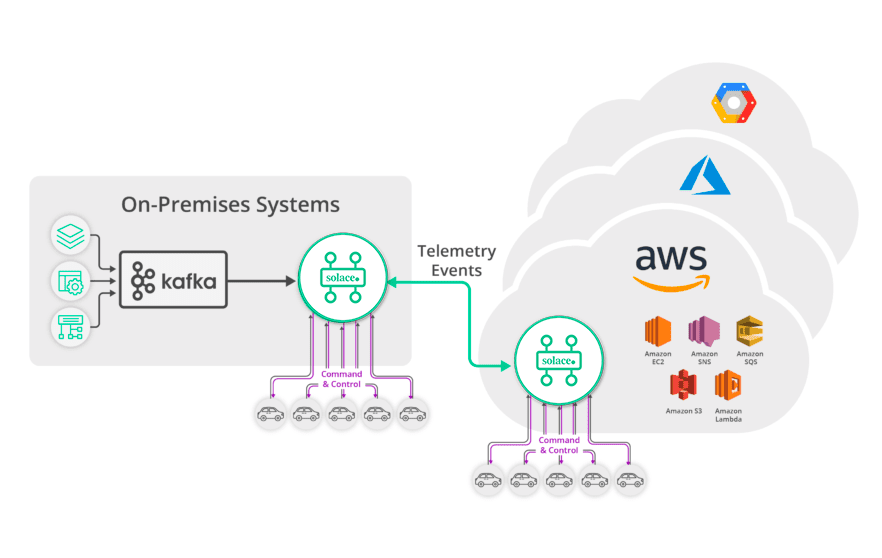

Connect a Kafka event stream to a Solace event broker natively or using external connectors to route a filtered set of information to a cloud analytics engine. Solace keeps bandwidth and consumption low by using fine-grained filtering to deliver exactly and only the events required.
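To give a rough sense of what fine-grained filtering means in practice, the sketch below models hierarchical topic matching in plain Python. It assumes a simplified form of Solace topic wildcards (`*` standing for exactly one whole level, `>` standing for one or more trailing levels). The topic names and the `matches` and `filter_events` helpers are hypothetical and are not part of any Solace or Kafka API.

```python
from typing import List


def matches(subscription: str, topic: str) -> bool:
    """Return True if a topic matches a subscription (simplified wildcard model)."""
    sub_levels = subscription.split("/")
    topic_levels = topic.split("/")
    for i, sub in enumerate(sub_levels):
        # '>' in the last position matches one or more remaining levels.
        if sub == ">" and i == len(sub_levels) - 1:
            return len(topic_levels) > i
        # The topic ran out of levels before the subscription did.
        if i >= len(topic_levels):
            return False
        # '*' matches any single level; anything else must match exactly.
        if sub != "*" and sub != topic_levels[i]:
            return False
    # Without a trailing '>', both must have the same number of levels.
    return len(sub_levels) == len(topic_levels)


def filter_events(subscription: str, topics: List[str]) -> List[str]:
    """Keep only the topics that the subscription would receive."""
    return [t for t in topics if matches(subscription, t)]


# Hypothetical event topics, e.g. derived from records in a Kafka stream.
events = [
    "orders/created/us/east",
    "orders/created/eu/west",
    "orders/cancelled/us/east",
    "inventory/updated/us/east",
]

# A cloud analytics consumer interested only in US order events.
us_orders = filter_events("orders/*/us/>", events)
print(us_orders)
```

In this model the analytics consumer receives two of the four events, so the other two never cross the link to the cloud.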

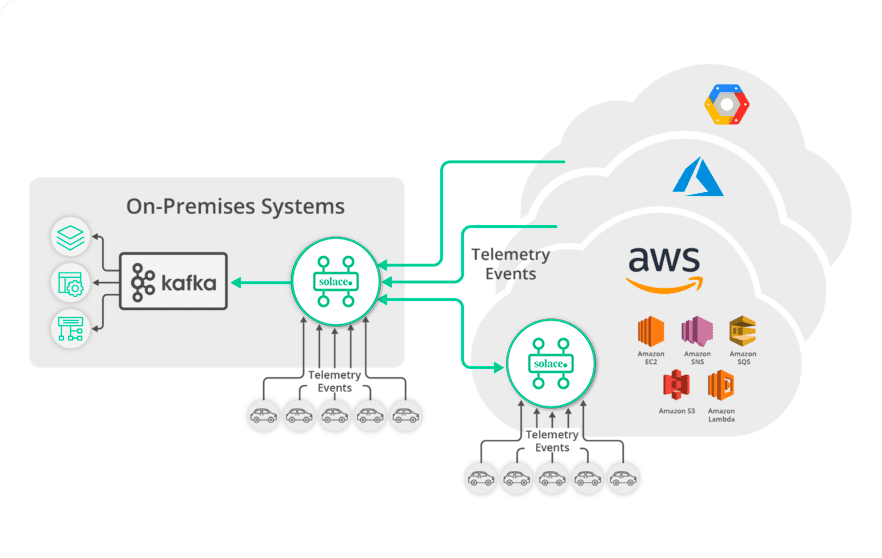

Want to ingest high-volume web and mobile device data into Kafka for aggregation and analytics?

Solace supports MQTT connectivity at massive scale, enabling reliable, secure and real-time communications with tens of millions of devices or vehicles so you can stream data to Kafka for aggregation or analytics. And Solace supports a variety of popular and open standard protocols and APIs, so you can stream events to Kafka from all your applications, running in all kinds of cloud and on-premises environments.

Trying to stream events recorded in Kafka to connected devices or vehicles?

In addition to supporting the inbound aggregation of events from millions of connected devices, Solace supports bi-directional messaging and the unique addressing of millions of devices through fine-grained filtering. For example, with Solace and Kafka working together you could send a tornado warning alert to a specific vehicle, or to all vehicles in or approaching the affected area. The Sink Connector allows Solace to deliver records placed in a single Kafka topic to whichever vehicles satisfy a given condition or topic subscription, whether that’s as general as being in the tri-county area or as specific as a single vehicle.
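The tornado warning example can be sketched as a mapping from fields in a Kafka record to a hierarchical destination topic. This is only an illustration of the idea under stated assumptions: the `alerts/<type>/<region>/<target>` topic layout, the `AlertRecord` fields, and both helper functions are hypothetical, and none of this is the Sink Connector's actual API.

```python
from typing import List, Optional, TypedDict


class AlertRecord(TypedDict):
    """Hypothetical shape of an alert record read from one Kafka topic."""
    alert_type: str
    region: str
    vehicle_id: Optional[str]  # None means "every vehicle in the region"


def destination_topic(record: AlertRecord) -> str:
    """Build the (assumed) topic an alert would be published to."""
    target = record["vehicle_id"] or "all"
    return f"alerts/{record['alert_type']}/{record['region']}/{target}"


def vehicle_subscriptions(region: str, vehicle_id: str) -> List[str]:
    """Subscriptions a vehicle would hold: its own alerts plus region-wide ones."""
    return [
        f"alerts/*/{region}/{vehicle_id}",
        f"alerts/*/{region}/all",
    ]


# One record addressed to a whole area, one to a single vehicle.
area_alert: AlertRecord = {
    "alert_type": "tornado", "region": "tri-county", "vehicle_id": None,
}
single_alert: AlertRecord = {
    "alert_type": "tornado", "region": "tri-county", "vehicle_id": "veh-1042",
}

print(destination_topic(area_alert))
print(destination_topic(single_alert))
print(vehicle_subscriptions("tri-county", "veh-1042"))
```

Under this layout, all alerts can sit in a single Kafka topic while the destination topic decides who receives each one. A vehicle that changes region would swap its subscriptions rather than the producer changing what it writes.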

Learn more about Solace Connectors for Kafka

Take me to Integration Hub

And if you need to discover, visualize, and catalog your Kafka event streams, then add Solace Event Portal

You may be thinking, “Event Portal sounds great, but we use Kafka brokers, not Solace.”

Well, we’ve got good news! Solace Event Portal has a flexible discovery agent that can discover your Kafka streams, and you don’t need Solace event brokers to use it.

With any large enterprise, there’s more at play than just Kafka-native applications. You’ve got cloud providers, legacy systems, and IoT devices, all with event streams that need to be managed. Solace Event Portal for Kafka can manage these heterogeneous event streams and make it easy for your enterprise to discover, visualize, catalog, and share your Apache Kafka event streams, including those from Confluent and Amazon MSK.
