Enterprise Integration is Changing

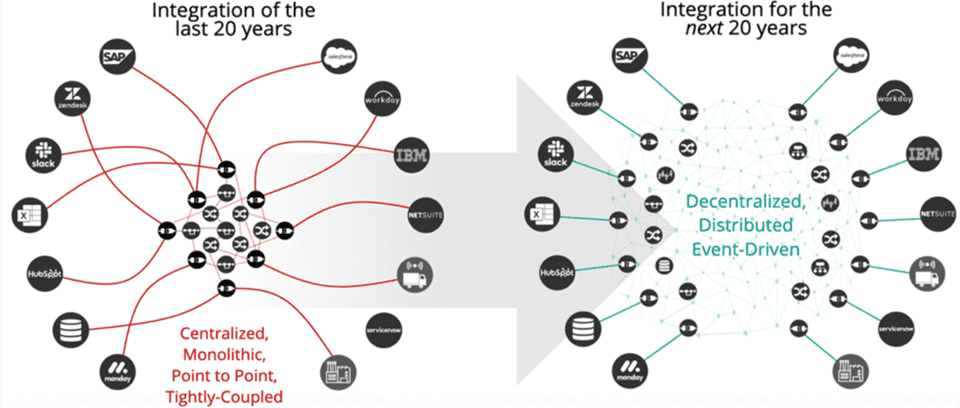

In the ever-evolving landscape of software and system architecture and integration, the transition from the enterprise service buses (ESBs) of the service-oriented architecture (SOA) era to today’s integration platforms as a service (iPaaS), and now to the modern event-driven integration paradigm, marks a significant shift. The differences between these eras are profound: modern approaches and business requirements have changed how systems communicate and interact.

To explain this fully, I will discuss the decline of ESBs and centralized integration; the ways in which decentralization and heterogeneous runtimes (cloud, multi-cloud, self-managed, on-prem) have left businesses with many integration tools; how businesses today face challenges in providing real-time customer and user experiences; and why we need a new way of thinking about the problem.

The Decline of ESBs and Centralized Integration

During the early 2000s, SOA and SOAP-based web services reigned supreme, and the major vendors of the time (IBM, BEA, Tibco) championed the deployment of ESBs for orchestrating communication between disparate systems. ESBs were meant to replace extract, transform and load (ETL) software as the central, monolithic hub of integration, but they turned into a similarly monolithic integration platform. Their limitations became apparent as systems grew in complexity: centralized integration led to bottlenecks, scalability challenges, and increased coupling between services. In addition, vendor lock-in, specialized skill sets, and centralized integration teams caused costs to rise and agility to decrease.

All the while, these same vendors also proposed the use of message-oriented middleware (MOM) with their ESBs. These integrations were extremely cumbersome for the integrator: the standards of the time were complex and not widely adopted, and the catalogs and registries were immature at best and mostly nonfunctional (think UDDI). As a result, this type of integration was viewed as complex and expensive, was used only in specific use cases and contexts, and rarely exposed its messages/events for discovery and reuse. It delivered asynchronous (and typically point-to-point) integration between applications, while failing to deliver on the promise of a real-time digital nervous system of business information.

- Summary: Integration was hard, expensive, and generally synchronous and service-specific.

Cloud, SaaS, De-Centralization and Integration

Over time, experts realized a few fundamental things that caused ESBs to slowly die out:

- SOAP was a tricky protocol that was inextricably tied to XML for message formatting, whereas REST offers a simpler way of exchanging information using standard HTTP methods.

- Cloud is here, and people want to create integrations and not have to run/manage the runtime, i.e. provide integration as a service.

- Software as a service (SaaS) based application integration should be easy and move more towards application/business integrations.

- Integration should be less technical and enable better business participation.

Thus, a brand-new technology category was created called the integration platform as a service (iPaaS). Gartner describes the iPaaS as “a suite of cloud services enabling development, execution and governance of integration flows connecting any combination of on premises and cloud-based processes, services, applications and data within individual or across multiple organizations.”

Some vendors (Mulesoft for example) took their ESBs and turned them into an iPaaS, while others created net-new implementations designed to meet the new integration requirements (Boomi, Jitterbit, etc).

Great, businesses had more modern integration tools to standardize on and streamline their integration practices…or not.

A big limitation of traditional iPaaS implementations is that they rely on polling to detect changes, leaving significant changes unnoticed for seconds, minutes, or even hours. As consumers demand more from enterprises, those delays degrade the user’s experience. In today’s business environment, making better decisions by simply integrating applications is not enough. To stay ahead of their competitors, enterprises must create better customer experiences and quickly make smart decisions in response to shifting market conditions. To achieve those goals, they must move beyond simply getting information from point A to point B, because time matters and context matters. But technology is not the only source of the problem…

Major changes were happening within organizations globally. Centralized IT teams and centers of excellence were largely being phased out, and LOB/domain/product teams were being given the freedom to choose which technologies would enable them to accomplish their work the fastest. Without the centralized gatekeeper, teams could deliver new capabilities faster and in a more agile way, but this led to a proliferation of tools and licenses (integration included) and the rise of “shadow IT”.

In addition, the lack of enterprise visibility led to duplicated work and redundant capabilities, which wasted money and resources and delayed the time to market of non-duplicative capabilities. One team would choose Camel, the next Boomi, yet another Mulesoft, and so on. Sometimes multiple teams would choose the same iPaaS but license it separately, forfeiting volume pricing incentives and increasing cost. There was also no “integrator of integrators”, so integration between teams/LOBs was done in point-to-point fashion and many different service catalogs began to dot the enterprise landscape.

The Modern, Real-time, Distributed Enterprise

Enterprises today are increasingly under the strain of many distributed legacy systems, cloud modernization and customers demanding better digital experiences. All three of these strains have historically resulted in complex, tightly coupled and orchestrated integrations, which are often ever-changing. Fundamentally, what integration requires at its core is data. Today’s data and integration challenges include:

- Businesses need to distribute system of record data across the enterprise and to business partners.

- The volume of data is increasing, data is getting more detailed, and it’s flowing from more and more source systems.

- Data, though, is often considered only after it’s stored and queryable via a web service, or stored in a data lake/warehouse on which analysis can be performed. This is too late in its lifecycle.

- Data sources are increasingly distributed, which can be a source of frustration when doing integration.

But how did all this data come to exist?

Data is created every time something happens in an enterprise. The speed with which systems are updated with this data varies widely, from daily/weekly batch jobs to real-time. In the past, systems were made to simply automate human activities, i.e. data was collected and turned in, and people counted/tabulated/reconciled the data and put the output into a system. In fact, the first instance of batch processing dates to 1890, when an electromechanical tabulator was used to record information for the United States Census Bureau. Census workers marked data cards (punch cards) and processed them in batches. Meanwhile in 2024, many businesses still rely on batch, even though we are now nearly always in a connected state.

Remember the statement that data is generated all over the place by distributed systems? Consider a large grocery retailer with hundreds of stores, several data centers with legacy systems, and Azure cloud and GCP for analytics. The need for data and changes to be processed by many systems, located all over the enterprise, is obvious. The question is: how do we make integration react as the business changes, and democratize these change events so that users can easily make use of them as they are produced? That is the premise and goal of event-driven integration.

Event Driven Integration is Here

Event-driven integration is an enterprise architecture pattern that leverages the best capabilities of event brokers and integration platforms and applies the methodology of microservices to integration. By combining the data transformation and connectivity of integration tooling with the real-time dynamic choreography of an event broker and event mesh, enterprises can ensure their integrations are reliable and secure, and offer the flexibility to stay agile.

Events are crucial counterparts to synchronous APIs, and business solutions can be composed of a blend of the two. This “composable architecture” is widely promoted by analysts and other industry experts. In the simplest terms, an event is something that happens. In IT terms, an event represents a change in the state of data, such as a sensor signaling a change in temperature, a field changing in a database, a ship changing course, a bank deposit being completed, or a checkout button being clicked in an e-commerce app.

Often, the sooner an enterprise knows about an event and can react, the better. When an event happens, an application, device or integration tool sends an event. An event is simply a communication of data that has had a change of state. At a technical level, an event is transmitted via a message containing the data and metadata such as the topic (i.e. a tag that provides context about the event).
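To make the "message containing the data and metadata" idea concrete, here is a minimal sketch of an event as a small data structure. The `Event` class, its field names, the example topic string and the choice of JSON for the wire format are all assumptions made for illustration, not the format of any particular broker.

```python
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any


@dataclass(frozen=True)
class Event:
    """A state change carried as a message: topic + payload + metadata."""

    topic: str                  # context tag, e.g. "bank/deposit/completed"
    payload: dict[str, Any]     # the data whose state changed
    occurred_at: str = field(   # metadata: when the change happened (UTC)
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_message(self) -> bytes:
        """Serialize for transmission (JSON is an assumption of this sketch)."""
        body = {
            "topic": self.topic,
            "occurred_at": self.occurred_at,
            "payload": self.payload,
        }
        return json.dumps(body).encode("utf-8")


# Hypothetical example: a completed bank deposit.
deposit = Event(
    topic="bank/deposit/completed",
    payload={"account": "ACC-123", "amount": 250.0},
)
wire_bytes = deposit.to_message()
```

The topic travels with the data, so anything that later routes or filters the message can do so without opening the payload.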

Source applications and systems that generate events/updates send them to an event-driven core (event mesh), and interested applications are then updated by consuming from that core. Source and target applications are thereby decoupled, and one-to-many event distribution becomes easy, fast and scalable. This contrasts with the tightly coupled, synchronous, point-to-point integrations that are most common today.
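The decoupling described above can be sketched with a toy in-memory broker. `InMemoryBroker`, the topic string and the two consumers are hypothetical; a real event broker adds networking, persistence and richer topic matching, none of which is modeled here.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[str, dict], None]


class InMemoryBroker:
    """Toy stand-in for an event broker: exact-match topics only."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, payload: dict) -> int:
        """Deliver to every subscriber and return how many received the event."""
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(topic, payload)
        return len(handlers)


broker = InMemoryBroker()
inventory_updates: list[dict] = []
analytics_updates: list[dict] = []

# Two independent consumers; the publisher knows about neither of them.
broker.subscribe("retail/order/created", lambda t, p: inventory_updates.append(p))
broker.subscribe("retail/order/created", lambda t, p: analytics_updates.append(p))

delivered = broker.publish("retail/order/created", {"order_id": "A-1001"})
```

Adding a third consumer is one more `subscribe` call; the publishing side does not change, which is the point of one-to-many distribution.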

Turning Integration “Inside out”

Traditional forms of integration such as ESB and iPaaS put integration components at the center, which has some downsides:

- Integration components are coupled into one deployable artefact, leading to scalability and agility challenges.

- Connectivity to remote data sources/targets can cause networking challenges and increases the risk of higher latency and network failures.

- Integrations are synchronous in nature, which can lead to challenges with robustness (cascading failures), scalability for data synchronization use cases, and performance.

- Integration flows are tightly coupled and orchestrated, leading to even more robustness problems and loss of agility as more integrations need to be developed.

Event-driven integration looks at integration infrastructure with two different “layers”:

- Core: refers to the part of the system that focuses on facilitating data/event movement.

- Edge: refers to the part of the system that has to do with event integration, i.e. connectors, transformers, content-based routers, etc.

In essence, event-driven integration turns integration “inside out” by pushing complex integration processes to the edge while leaving the core free to move data across environments (cloud, on-prem) and geographies via an event platform.

This approach is focused on maximizing the flow of events and information while improving your system’s scalability, agility, and adaptability.

An event platform can mitigate some of the infrastructure risks by managing and distributing data more effectively. The overall key, however, is to integrate as close to systems and applications as possible. Complex integrations can be decomposed into micro-integrations that you deploy and distribute at the edge. This way you can have small, single-purpose micro-integrations close to each source and target of the data. This reduces network and connectivity risks, simplifies network configuration and lets you deploy and reuse logic/processing components for many integration scenarios.

A well-designed event platform can scale from a single event broker to an event mesh containing multiple connected event brokers (I’ll dive into event mesh in the next chapter…stay tuned!) and can support a variety of event exchange patterns such as publish-subscribe, peer-to-peer, streaming, and request-reply.

Capabilities to look for in an Event-Driven Integration Platform

Hopefully at this point you understand the need for event-driven integration, but let’s discuss the key platform capabilities that you should be looking for. These capabilities are divided into two main component areas: 1) the integrations at the edge that connect source/destination systems, and 2) the core that is responsible for transporting and routing events and information.

Why You Need Micro-Integrations

Micro-Integrations revolutionize enterprise connectivity by breaking down monolithic integration flows into small, manageable, purpose-built components. Each micro-integration includes a connector for data flow between an event broker or event mesh and other systems. They can also include functions such as payload transformation, data enrichment, and header modification. By focusing on specific, narrowly defined tasks, micro-integrations facilitate easier design, modification, and scalability, reducing the risk and complexity typically associated with traditional integration methods. Essential for event-driven architectures, micro-integrations ensure your enterprise systems communicate in real-time, enhancing overall operational efficiency and adaptability.
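As a rough illustration of the anatomy described above (a connector plus optional transformation and enrichment steps), the sketch below wires one source to a publish function. Every name in it (`rename_fields`, `add_region`, `run_micro_integration`, the `ORD_NO`/`STORE` columns, the region table and the topic layout) is hypothetical and stands in for whatever your integration tooling provides.

```python
from typing import Callable, Iterable

Record = dict
Step = Callable[[Record], Record]


def rename_fields(record: Record) -> Record:
    """Payload transformation: map source column names to event field names."""
    return {"order_id": record["ORD_NO"], "store_id": record["STORE"]}


def add_region(record: Record) -> Record:
    """Data enrichment: look up a region for the store (hypothetical table)."""
    regions = {"S-01": "east", "S-02": "west"}
    return {**record, "region": regions.get(record["store_id"], "unknown")}


def run_micro_integration(
    source_rows: Iterable[Record],
    steps: list[Step],
    publish: Callable[[str, Record], None],
) -> None:
    """Read from one source, apply each step in order, publish one event per row."""
    for row in source_rows:
        event = row
        for step in steps:
            event = step(event)
        topic = f"retail/order/created/{event['region']}/{event['store_id']}"
        publish(topic, event)


published: list[tuple[str, Record]] = []
run_micro_integration(
    source_rows=[{"ORD_NO": "A-1001", "STORE": "S-01"}],
    steps=[rename_fields, add_region],
    publish=lambda topic, event: published.append((topic, event)),
)
```

Because the unit does one job for one source, changing the enrichment table or swapping the source connector touches only this component.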

Tenets of Micro-Integrations

Micro-integrations differ from traditional integrations and connectors in a few unique ways.

Like microservices, micro-integrations are small, single-purpose capabilities that are independently scalable. Their goal is to be as loosely coupled as possible, so that changes and enhancements can be made without causing other micro-integrations to fail. The term “microservice architecture” describes a particular way of designing software applications as suites of independently deployable services. Common characteristics include organization around business capability, automated deployment, intelligence in the endpoints, and decentralized control of programming languages and data.

All these same core tenets are directly applicable to micro-integrations. For example, micro-integrations are built around a given business case that will provide value to the organization. They are all independently deployable, are placed as close to the source and target system as possible (intelligent endpoints) and support decentralized control of data. In fact, I would claim the primary outcome of micro-integrations is data centric in that it liberates and democratizes your data, making it available in motion while being easily exposed, understood and shared.

Benefits and Advantages of Micro-Integrations

As a key element of event-driven integration, micro-integrations offer the following advantages:

- Greater Agility: Micro-integrations make it easy to change the way applications and services interact with one another by letting developers reuse them and the events they produce in new ways. Each performs one function for one source or target application, which makes it easier to change with less effort, risk and potential impact on other systems integrated into the same flow. They are also deployed in a loosely coupled manner, which facilitates changes at one integration point without affecting any others.

- Faster Data Distribution: Micro-integrations offer higher performance than traditional integration solutions, while simplifying networking configuration, because they are deployed close to source and target applications. Micro-integrations provide fast streaming capabilities to and from fast data sources/targets such as messaging systems, databases (via CDC), analytics engines and data lakes.

- Better/Easy Scalability: Micro-integrations facilitate scalability in two ways:

  - First, updates from a source system can be delivered to an almost infinite number of target systems without performance impact by using many target micro-integrations around the event broker/mesh.

  - Second, you can easily and dynamically support an increasing amount of information/traffic flowing to or from a single application by deploying more instances of a given micro-integration, i.e. horizontally scaling at the micro-integration level.

- More Flexibility: Different technologies can be used at the source or target sides depending on best fit technology, commercial reasons or technology evolution, but still adhere to the same overall integration architecture.

Already have Integration Tools and Want Flexibility in Choice?

Micro-integrations are not technology dependent. While Solace does offer tooling you can use to create micro-integrations, you can also use whatever integration tooling your company has in place. For example, you may have one or more iPaaS solutions within your company, along with SAP, Apache Camel, Spring Integration and many others. The key is to use whatever tool best matches your needs, skills and budget. To be a micro-integration, however, it must conform to the tenets I explained above.

We at Solace want to make embracing and creating micro-integrations easy. To that end, Solace has developed several ways you can develop micro-integrations within your organization. These include our own PubSub+ Micro-Integrations, SAP via the Solace Integration Services (IS) Flow Generator, Salesforce via Solace Mulesoft Flow Generator, and our Solace Boomi Connector.

Core Capabilities Needed in an Event-Driven Integration Platform

Now that you understand the tenets, benefits and tooling options for your edge integrations, let’s shift attention to the core capabilities of the event platform. Just as in fitness, without a strong core your ability to perform will be severely limited; the same is true of event-driven integration.

There are four key capabilities to consider when evaluating your event platform core:

- Event Routing/Filtering

- Event Federation

- Lossless Delivery and Resilience

- Self-Service Access and Governance

I’ll discuss each of these capabilities in the following sections in an implementation-agnostic fashion.

Event Routing/Filtering

With event-driven integration, micro-integrations can collaborate by asynchronously reacting to events, instead of synchronously polling for changes or relying on an orchestration layer. To get the correct event to the right micro-integration and end-system/component, there needs to be a way to asynchronously deliver events. The event broker performs exactly this task. Sounds simple but it requires more thought.

A micro-integration usually needs a subset of the event stream. For instance, a micro-integration for a warehouse pick management system only needs “new order” events that are in its delivery zone. Moreover, if an order is cancelled, this warehouse pick management system needs to receive the right cancellation event in order to act and put back items already picked. The cancellation event therefore needs to flow to the same target micro-integration that processed the original “new order” event, in order to maintain the proper sequence of processing.

Typically, you are faced with a choice between routing on static, predefined topics, like those provided by log-based brokers such as Kafka, Azure Event Hubs, and Pulsar, and routing on dynamic, smart topics provided by smart event brokers such as Solace, ActiveMQ, and RabbitMQ.

Log-based Broker Routing/Filtering

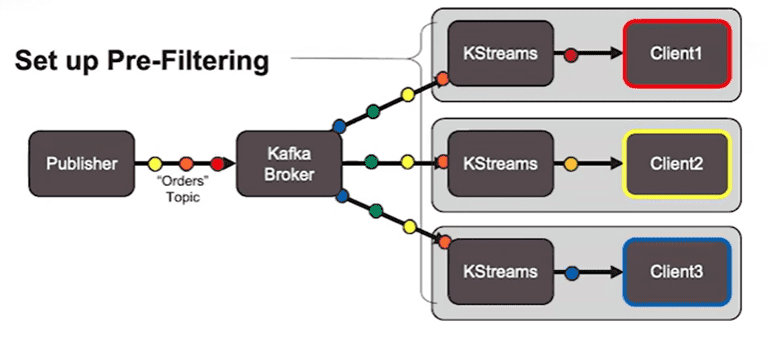

A log-based event broker cannot provide filtering and in-order delivery at the same time, because events from different topics are written to different log files within the broker. This means that architects need to design coarse-grained topics that contain all the events, which then need to be filtered on the consumer side. The cost of this approach can be measured in latency, complexity, performance, scaling, and network and application costs.

Scenario 1: Pre-filtering

A log-based broker like Kafka will send all the events in a stream, and a tool like Kafka Streams (KStreams) can be used to filter them on the client side.
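The cost of consumer-side filtering is easy to see in a small simulation. This is plain Python rather than Kafka or Kafka Streams code; `filter_on_client`, the `zone` field and the sample events are invented for the warehouse example and only illustrate that every event must reach the consumer before it can be discarded.

```python
from typing import Iterable

OrderEvent = dict


def filter_on_client(
    stream: Iterable[OrderEvent], zone: str
) -> tuple[list[OrderEvent], int]:
    """Return the events this consumer needs and how many it had to discard."""
    kept: list[OrderEvent] = []
    discarded = 0
    for event in stream:  # each event has already crossed the network
        if event["zone"] == zone:
            kept.append(event)
        else:
            discarded += 1
    return kept, discarded


# One coarse-grained topic holding every order event, in publish order.
orders_topic = [
    {"type": "new_order", "order_id": "A-1", "zone": "north"},
    {"type": "new_order", "order_id": "A-2", "zone": "south"},
    {"type": "cancelled", "order_id": "A-1", "zone": "north"},
    {"type": "new_order", "order_id": "A-3", "zone": "east"},
]

kept, discarded = filter_on_client(orders_topic, zone="north")
```

Ordering between the "new order" and its cancellation survives because both sit in the same stream, but half of the traffic in this sample was delivered only to be thrown away.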

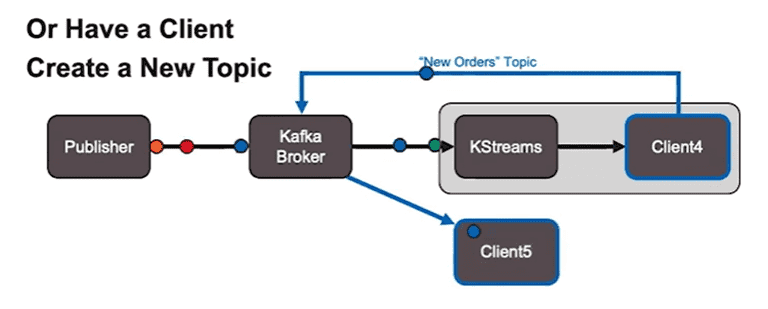

Scenario 2: Client creates new topic

As you can see, filtering becomes a complex and bespoke solution depending on the use case and consuming client needs.

Smart Topic Routing/Filtering

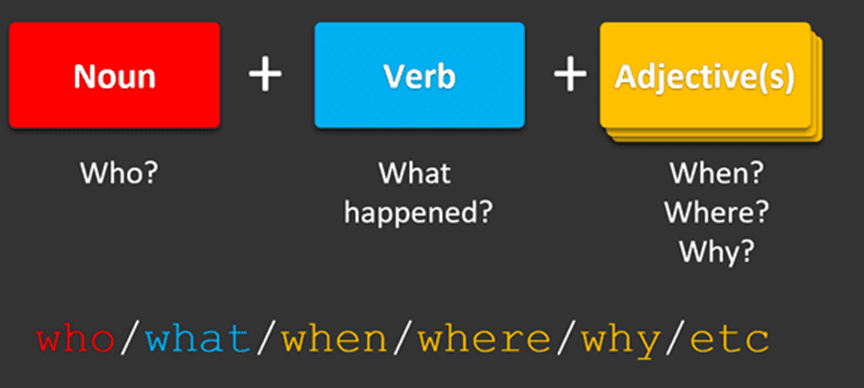

An event’s topic could alternatively be defined via a topic taxonomy, making it easy to publish and consume and to describe to the business and other stakeholders. It also enables routing and filtering without orchestration or other processing intervention.

A simple way to explain this is to think of a topic as a sentence structure with a noun, a verb and adjectives. In practice, the noun contains the company/division plus a business object; the verb contains the action that occurred to the data or resource and may contain a version; and the adjectives cover the rest of the key event information.

The target micro-integration can subscribe to any part of the topic and receive exactly what it needs. The term used for this is Smart Topics. These Smart Topics are set by the event publisher and contain context about the event that consumers can filter upon.

A hierarchical topic structure using noun, verb, adjective as the model, where the levels define different attributes and can be used for subscribing, routing, and access control.

This type of filtering is important in operational use cases, since integrations need to act discretely on the events they need to perform their integration function, which is typically a subset of the entire stream. This is an important construct that works seamlessly with how events should be federated across the enterprise.
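The noun/verb/adjective taxonomy and subscription-based filtering can be sketched as follows. The wildcard syntax used here (`*` for exactly one level, `>` for one or more trailing levels) is an assumption loosely modeled on conventions found in hierarchical-topic brokers; check your broker's documentation for its actual rules. `build_topic`, `matches` and the example topic levels are hypothetical.

```python
def build_topic(noun: str, verb: str, *adjectives: str) -> str:
    """Join the noun, verb and adjective levels into one hierarchical topic."""
    return "/".join([noun, verb, *adjectives])


def matches(subscription: str, topic: str) -> bool:
    """Level-by-level match with '*' (one level) and a trailing '>' (the rest)."""
    sub_levels = subscription.split("/")
    topic_levels = topic.split("/")
    for index, sub_level in enumerate(sub_levels):
        if sub_level == ">":
            # Only valid as the last level; needs at least one level left to match.
            return index == len(sub_levels) - 1 and len(topic_levels) > index
        if index >= len(topic_levels):
            return False
        if sub_level != "*" and sub_level != topic_levels[index]:
            return False
    return len(sub_levels) == len(topic_levels)


# noun = company + business object, verb = action + version, adjectives = the rest
topic = build_topic("acme/order", "created/v1", "north", "store42")

# A warehouse system for the north zone wants every order action in its zone.
north_zone = matches("acme/order/*/v1/north/>", topic)
south_zone = matches("acme/order/*/v1/south/>", topic)
```

With this subscription, the same consumer would receive both the "created" and the matching "cancelled" event for its zone, and nothing from other zones, without any filtering code of its own.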

- Conclusion: Leverage smart topics for event-driven integration

Event Federation

Medium to large enterprises leverage applications and systems that are deployed in a variety of public clouds, private clouds, self-managed infrastructures and even stores, warehouses, factories, and distribution centers. In addition, they are using more and more SaaS services provided by third parties. The fact that your enterprise is largely distributed is a shock to no one. Since events provide the highest possible value of your data (time value of data), the question becomes: how do you distribute them across your enterprise so that your micro-integrations can function?

Event brokers typically support this in one of two ways: 1) via configured replication (similar to database replication) or 2) via dynamic event routing. Choosing the right event federation strategy is important to properly support ubiquitous self-service access to data AND simplify your infrastructure operations. Let’s investigate further:

Configured Replication

Log-based event brokers typically support configured replication. For example, within the Kafka ecosystem, MirrorMaker and Replicator move events between Kafka clusters by configuring which predefined topics and associated events should be copied. This allows topics to be replicated to other clusters in a reliable and fault-tolerant manner. Data is replicated whether or not there are active consumers on the topics, so it is copied whether it is needed or not.

This has a benefit for cases such as consistency and continuity of operations in the event of a disaster. In cases where data needs to be federated based on usage patterns and needs, it becomes expensive and onerous for administrators to keep the configuration of what to replicate in sync with consumer usage patterns. Since log-based brokers store historical events within topics, it makes sense that their replication pattern mirrors that of databases.

Dynamic Event Routing

As previously mentioned, the challenge for the modern enterprise is how to move events across an infrastructure that is not just geographically dispersed but also exists in separate and heterogeneous clusters in a way that is efficient, scalable, and economical.

Smart topic-based event brokers think of routing more like networking than databases. What’s important to them is the ability to route data (like packets) in the quickest, most efficient way possible. Since event-driven integration is founded on the publish and subscribe integration pattern, we can leverage subscriptions to indicate how the data should be distributed through the enterprise. If there are no durable or non-durable subscriptions to a given event, it is dropped at the first broker. Conversely, if there are subscribers distributed throughout the enterprise, the data will flow to the broker(s) where those applications are connected, and only to those brokers. This architecture also helps when network failures occur, because applications can stay connected and working despite network outages.

Dynamic event routing is enabled by something called an event mesh: a network of event brokers designed to smoothly and dynamically transport events across any environment. It lets critical applications and systems integrate in a reliable and timely manner by delivering the event data they need to process the interactions and transactions that drive the business.
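The subscription-driven behavior described above can be sketched with a toy model. `EventMesh`, the broker names and the prefix-based matching are all assumptions of this sketch; a real event mesh propagates subscriptions between brokers using its own protocol and topic syntax.

```python
class EventMesh:
    """Toy mesh: an event flows only to brokers that have a matching subscriber."""

    def __init__(self, broker_names: list[str]) -> None:
        self._subscriptions: dict[str, set[str]] = {
            name: set() for name in broker_names
        }

    def subscribe(self, broker: str, topic_prefix: str) -> None:
        """Record that a client connected to `broker` wants this topic prefix."""
        self._subscriptions[broker].add(topic_prefix)

    def route(self, origin: str, topic: str) -> list[str]:
        """Brokers the event is delivered to; empty means dropped at `origin`."""
        if origin not in self._subscriptions:
            raise KeyError(f"unknown broker: {origin}")
        return sorted(
            name
            for name, prefixes in self._subscriptions.items()
            if any(topic.startswith(prefix) for prefix in prefixes)
        )


mesh = EventMesh(["store-edge", "azure", "gcp", "datacenter"])
mesh.subscribe("gcp", "retail/order/")                 # analytics consumer
mesh.subscribe("datacenter", "retail/order/created")   # legacy order system

order_targets = mesh.route("store-edge", "retail/order/created/north")
inventory_targets = mesh.route("store-edge", "retail/inventory/adjusted")
```

Nothing is configured per topic by an administrator: the set of destinations follows from who has subscribed, and an event nobody wants never leaves the broker it was published to.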

- Conclusion: Dynamic event routing via an event mesh best supports event-driven integration

Availability and Data Loss Avoidance

Integration is all fun and games until something goes wrong: network problems, application failures, CrowdStrike updates (too soon?). Micro-integration outages can cause a dizzying multitude of scenarios in which event/data loss can occur.

Lossless Delivery

For operational use cases, lossless delivery is almost always a requirement because the next operational integration step cannot happen if a message is lost. Lossless delivery is also critical for applications and systems themselves, like banking, where the cost of a lost event isn’t just measured in the event itself but in the revenue impact on the organization, which in stock trading or retail scenarios can be very high.

In very high throughput and complex environments, this is very difficult to achieve. This is where guaranteed messaging comes in. Event brokers need to enable guaranteed/lossless delivery between micro-integrations, applications and microservices at scale without significant performance loss. That scale must also accommodate bursty traffic, such as the spikes that occur during Black Friday types of events.

The event broker provides the shock absorption and guaranteed delivery and supports persistent messaging and replay that enable lossless delivery.

Guaranteed messaging can be used to ensure the in-order delivery of a message between two micro-integrations, even in cases where the receiving micro-integration is offline, or there is a failure within the network.

Two key points about guaranteed messaging:

- It keeps events across event broker restarts by storing them to persistent storage.

- It keeps a copy of the event until successful delivery to all clients and downstream event brokers in the same event mesh have been verified.
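The two points above (persist before accepting, delete only after acknowledgment) can be sketched with a toy durable queue. `GuaranteedQueue` and its JSON spool file are assumptions for illustration; a production broker uses a far more efficient and robust message spool, and handles many consumers and downstream brokers.

```python
import json
import os
import tempfile
from typing import Optional, Tuple


class GuaranteedQueue:
    """Toy durable queue: events stay spooled on disk until acknowledged."""

    def __init__(self, spool_path: str) -> None:
        self._spool_path = spool_path
        self._pending: dict = {}
        if os.path.exists(spool_path):  # recover state after a restart
            with open(spool_path, encoding="utf-8") as spool:
                self._pending = {int(k): v for k, v in json.load(spool).items()}
        self._next_id = max(self._pending, default=0) + 1

    def _persist(self) -> None:
        with open(self._spool_path, "w", encoding="utf-8") as spool:
            json.dump(self._pending, spool)

    def publish(self, event: dict) -> int:
        """Store the event on disk before returning its message id."""
        msg_id = self._next_id
        self._next_id += 1
        self._pending[msg_id] = event
        self._persist()
        return msg_id

    def next_unacked(self) -> Optional[Tuple[int, dict]]:
        """Oldest unacknowledged event, so redelivery preserves order."""
        if not self._pending:
            return None
        msg_id = min(self._pending)
        return msg_id, self._pending[msg_id]

    def ack(self, msg_id: int) -> None:
        """Consumer confirmed processing; only now is the copy deleted."""
        self._pending.pop(msg_id, None)
        self._persist()


spool_file = os.path.join(tempfile.mkdtemp(), "spool.json")
order_queue = GuaranteedQueue(spool_file)
order_queue.publish({"order_id": "A-1", "type": "new_order"})
order_queue.publish({"order_id": "A-1", "type": "cancelled"})

restarted = GuaranteedQueue(spool_file)  # simulate a broker restart
first_id, first_event = restarted.next_unacked()
restarted.ack(first_id)
second_id, second_event = restarted.next_unacked()
```

If the consumer had crashed before calling `ack`, the same event would be handed out again on the next `next_unacked` call, which is why consumers of guaranteed messages are usually written to tolerate redelivery.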

Resilience

Event-driven integration needs resilience to a variety of different problems and outage types. Event brokers, thankfully, have been built for reliability in the manner of network (or physical) layer technology rather than application-layer technology. What we mean by this is that, unlike applications that depend on external databases, in-memory caches, web servers and the like, event brokers are simple in that they depend only on a data store for events and the state of those events.

Because of this lack of moving parts, high availability (HA) and disaster recovery (DR) are also dramatically simplified. More importantly, however, the integration components can be made smaller and simpler, because it’s the responsibility of the broker to take care of HA and DR requirements for data and events in transit.

For disaster recovery, events and their delivery states should be propagated to another broker cluster to a) ensure continuity of operations and b) seamlessly take over event processing, without dealing with a backlog of duplicate, already-processed events. Again, this dramatically simplifies off-nominal scenarios and adds robustness and resilience to your integrations.

- Conclusion: Make sure you evaluate which event broker best supports lossless delivery and resilience for your event-driven integration platform.

Self Service Access and Governance

It’s easy to get narrowly focused on the runtime aspects of event-driven integration. After all, if the runtime integration and core are not technically feasible, there is no integration! To focus only on the runtime, however, would be a mistake. The API world has shown us time and again that if you don’t think about how your end users (typically developers and architects) will get access to APIs, then it’s all for naught. In addition, deciding who should have access to APIs and managing changes to them are examples of where governance provides value. The same is true with event-driven integration!

As we create events at runtime with micro-integrations, these events (and corresponding data payloads) need to be defined and placed into a searchable repository so that they can be found, understood and reused. Without this capability, developers, architects and product managers are constantly scouring out-of-date documentation OR calling meetings (as if we need more of those) to ask where data is available and how they can get access. But it goes beyond just having definitions in a repository! How can you get developers to follow the journey of discovering events that have value, getting approval for access, and provisioning the entitlements to the event broker so they can get access in minutes, not days or months? That’s what you need to be looking for to properly enable your integration teams to rapidly create micro-integrations!
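The discover, request, approve and provision journey can be sketched as a tiny in-memory catalog. `EventCatalog`, `CatalogEntry`, the team names and the owner-approves rule are hypothetical and only illustrate the workflow; they do not describe any product's API.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    topic: str
    description: str
    owner: str
    approved_consumers: set[str] = field(default_factory=set)


class EventCatalog:
    """Toy searchable repository of events with an approval step for access."""

    def __init__(self) -> None:
        self._entries: dict[str, CatalogEntry] = {}
        self._pending: set[tuple[str, str]] = set()

    def register(self, entry: CatalogEntry) -> None:
        self._entries[entry.topic] = entry

    def search(self, keyword: str) -> list[str]:
        """Discover: topics whose name or description mentions the keyword."""
        needle = keyword.lower()
        return sorted(
            entry.topic
            for entry in self._entries.values()
            if needle in entry.topic.lower() or needle in entry.description.lower()
        )

    def request_access(self, topic: str, team: str) -> None:
        self._pending.add((topic, team))

    def approve(self, topic: str, team: str, approver: str) -> None:
        """Approve: only the owning team may grant access (sketch assumption)."""
        entry = self._entries[topic]
        if approver != entry.owner:
            raise PermissionError(f"{approver} does not own {topic}")
        if (topic, team) not in self._pending:
            raise LookupError(f"no pending request from {team} for {topic}")
        self._pending.discard((topic, team))
        entry.approved_consumers.add(team)

    def can_consume(self, topic: str, team: str) -> bool:
        """Provision check: would the broker entitlement be granted?"""
        entry = self._entries.get(topic)
        return entry is not None and team in entry.approved_consumers


catalog = EventCatalog()
catalog.register(
    CatalogEntry(
        topic="retail/order/created",
        description="Emitted when a customer order is placed",
        owner="orders-team",
    )
)
found = catalog.search("order")
catalog.request_access("retail/order/created", "warehouse-team")
before = catalog.can_consume("retail/order/created", "warehouse-team")
catalog.approve("retail/order/created", "warehouse-team", approver="orders-team")
after = catalog.can_consume("retail/order/created", "warehouse-team")
```

In practice the final step would also push the entitlement to the event broker automatically, so that approval and runtime access stay in sync.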

Without some control and guard rails, however, teams could be improperly designing events, consuming events they shouldn’t, and simply not following best practices. This is where governance comes in. The term “governance” has a negative connotation because of tooling and processes that were overly complex and cumbersome to use. To avoid repeating that, you should be looking for capabilities to:

- Apply role-based access controls on events to protect them from unauthorized view and change.

- Support automation-facilitated approval processes for runtime access to prevent event/data leakage.

- Support domain-driven design.

- Provide best practices on smart topic design.

- Curate events into high value event APIs and products that are thereby exposed across the enterprise and/or to interested 3rd-party partners and users.

- Support event platform infrastructure provisioning mechanisms that can be chained into your own application DevOps pipelines.

- Conclusion: Prioritize self-service access and governance.

Solace PubSub+ Platform for Event-Driven Integration

Solace was founded in 2001 to provide event-broker technology. Over the years, it expanded its portfolio with products that combine event-broker technology with event management to help enterprises adopt event-driven applications, EDA and now event-driven integration. Numerous customers in a variety of industries—including financial services, automotive, gaming, food and beverage and retail—use Solace to power their enterprises. Solace works with customers to facilitate the adoption of event-driven methodology and assist them with an event-driven integration platform to facilitate modern, real-time integration needs.

To learn more about Solace and its unique approach to event-driven integration, please visit: Solace for Event-Driven Integration.

As Solace’s Field CTO, Jonathan helps companies understand how they can capitalize on the use of event-driven architecture to make the most of their microservices, and deploy event-driven applications into platform-as-a-service (PaaS) environments running in cloud and on-prem environments. He is an expert at architecting large-scale, mission-critical enterprise systems, with over a decade of experience designing, building and managing them in domains such as air traffic management (FAA), satellite ground systems (GOES-R), and healthcare.

Based on that experience with the practical application of EDA and messaging technologies, and some painful lessons learned along the way, Jonathan conceived and has helped spearhead Solace’s efforts to create powerful new tools that help companies more easily manage enterprise-scale event-driven systems, including the company’s event management product: Solace Event Portal.

Jonathan is highly regarded as a speaker on the subject of event-driven architecture, having given presentations as part of SpringOne, Kafka Summit, and API Specs conferences. Jonathan holds a BS in Computer Science from Florida State University, and in his spare time he enjoys spending time with his family and skiing the world-class slopes of Utah, where he lives.