Machine learning is a branch of artificial intelligence: the use of computer algorithms (machines) that automatically adapt and, in theory, improve their operation (learn) by building a model from sample data, making predictions about the outcome of a particular action they decide to take, and factoring the actual result into similar decisions they make in the future. Machine learning algorithms help computers perform remarkably sophisticated tasks such as detecting fraud, recognizing speech and images, recommending products to consumers, and controlling equipment and vehicles like self-driving cars.

Running a machine learning algorithm or program on your data is only part of the puzzle. Once you get the signal or result, you need to act on it, typically in combination with data coming in from other sources. Finally, once you've processed the output, you may want to signal the result to a number of microservices that may be geographically distributed. This is where you can combine the power of machine learning with event-driven architecture to deliver the result to all interested parties.

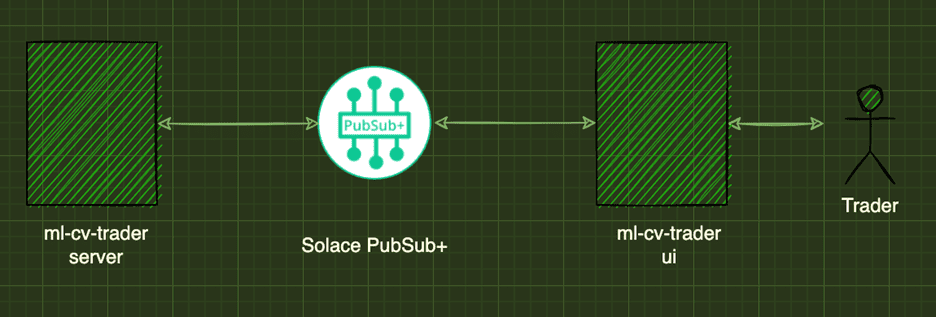

To demonstrate this concept, I built an interactive demo called “ml-cv-trader”, which is short for machine learning-computer vision-trader. The application simulates a one-minute trading session during which you use hand signals to buy, sell, or hold a portfolio of stocks. The machine learning component uses a TensorFlow.js-backed library to recognize the hand signal and map it to a buy, sell, or hold action, and the result is then sent to the server side for processing. In this blog post, I'll outline the technology and the architecture that make this demo work.
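To make the gesture-to-trade step more concrete, here is a minimal TypeScript sketch of turning hand-tracking predictions into a trading action. It is not the demo's actual code: the `Prediction` shape, the label strings (`open`, `closed`, `point`), which gesture means buy, sell, or hold, and the 0.6 confidence threshold are all hypothetical choices made for illustration.

```typescript
// Hypothetical shape of one detection returned by a hand-tracking model.
interface Prediction {
  label: string; // assumed label names such as "open", "closed", "point"
  score: number; // model confidence in [0, 1]
}

type TradeAction = "BUY" | "SELL" | "HOLD";

// Hypothetical mapping from gesture label to trading action.
// A Map avoids accidental matches on inherited object keys like "toString".
const GESTURE_TO_ACTION = new Map<string, TradeAction>([
  ["open", "BUY"],
  ["closed", "SELL"],
  ["point", "HOLD"],
]);

// Return the action for the most confident recognized gesture whose score
// meets the threshold, or null if no usable gesture was detected.
function toTradeAction(predictions: Prediction[], minScore = 0.6): TradeAction | null {
  let bestAction: TradeAction | null = null;
  let bestScore = -Infinity;
  for (const p of predictions) {
    const action = GESTURE_TO_ACTION.get(p.label);
    if (action === undefined || p.score < minScore) continue; // unknown or weak
    if (p.score > bestScore) {
      bestScore = p.score;
      bestAction = action;
    }
  }
  return bestAction;
}
```

Predictions that don't correspond to a trading gesture (a detected face, say) are simply ignored, so only a confident, recognized hand signal produces an action.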

The Stack

Here is the stack I used to build the demo:

- Svelte – The Svelte JavaScript framework has become my go-to framework for programming interactive UIs. Among many other things, what's great about this framework is that a Svelte app is compiled down to plain JavaScript at build time, so the resulting web apps are less bloated.

- Tailwind CSS – Tailwind is a utility-first CSS framework that lets you configure your CSS rather than “code” it, all without the bloat of other CSS frameworks.

- Node.js – A Node server acts as the back end for this project, responsible for sending data to the Svelte front end and responding to requests.

- Solace PubSub+ Event Broker – An enterprise-grade event broker with its roots in low-latency messaging, which makes it ideal for this demonstration.

The Architecture

Here is the high-level architecture that I’ll break down and explain below:

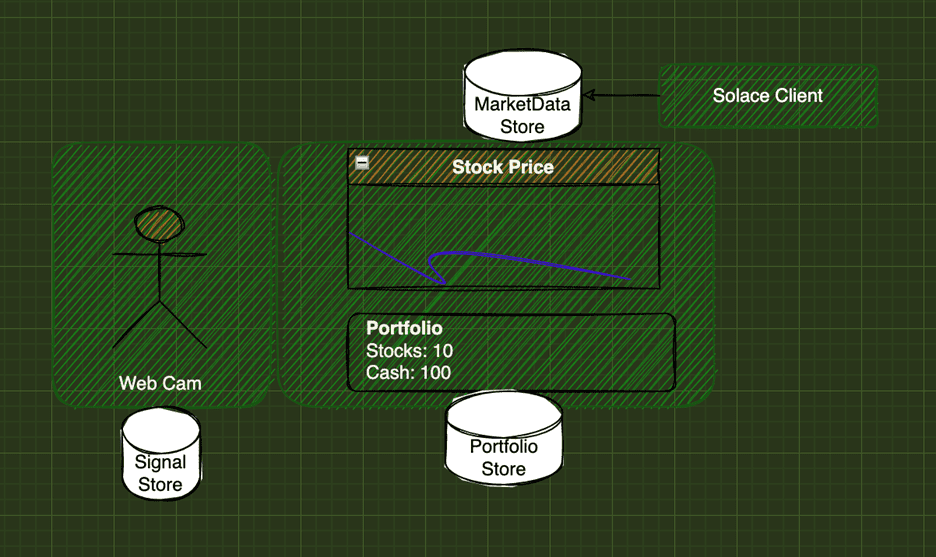

User Interface

A lot takes place in the UI: listening to market data events, detecting the gestures captured by the webcam (via the handtrack.js library), and sending event streams to the event broker. The Svelte JavaScript framework is key to the front end, binding everything together. The UI consists of multiple Svelte components developed in isolation, and by making extensive use of Svelte stores, I enabled the different components to share state. This diagram shows some of the interactions between the components and their associated Svelte stores:
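Svelte's store contract is small: a store is an object with a `subscribe` method that immediately calls the subscriber with the current value and returns an unsubscribe function, and writable stores add `set` and `update`. So that the example runs without a Svelte build, the sketch below re-implements a minimal `writable`; in a real Svelte app you would `import { writable } from "svelte/store"` instead. The `Holdings` store and its fields are hypothetical, not taken from the demo.

```typescript
type Subscriber<T> = (value: T) => void;
type Unsubscriber = () => void;

interface Writable<T> {
  subscribe(run: Subscriber<T>): Unsubscriber;
  set(value: T): void;
  update(updater: (value: T) => T): void;
}

// Minimal stand-in for Svelte's writable(): a subscriber is called immediately
// with the current value and then again after every set() or update().
function writable<T>(initial: T): Writable<T> {
  let value = initial;
  const subscribers = new Set<Subscriber<T>>();

  const set = (next: T): void => {
    value = next;
    subscribers.forEach((run) => run(value));
  };

  return {
    subscribe(run) {
      subscribers.add(run);
      run(value);
      return () => {
        subscribers.delete(run);
      };
    },
    set,
    update: (updater) => set(updater(value)),
  };
}

// Hypothetical shared state: written by a gesture component, read by a portfolio view.
interface Holdings {
  cash: number;
  shares: number;
}
const holdings = writable<Holdings>({ cash: 1000, shares: 0 });

// Portfolio view: record every value it is notified of.
const seen: Holdings[] = [];
const unsubscribe = holdings.subscribe((h) => {
  seen.push(h);
});

// Gesture component: a BUY of one share at a hypothetical price of 100.
holdings.update((h) => ({ cash: h.cash - 100, shares: h.shares + 1 }));
unsubscribe();
```

Because both components hold a reference to the same store rather than to each other, they can be developed in isolation. Inside a Svelte component you would normally read such a store with the `$holdings` auto-subscription syntax instead of calling `subscribe` by hand.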

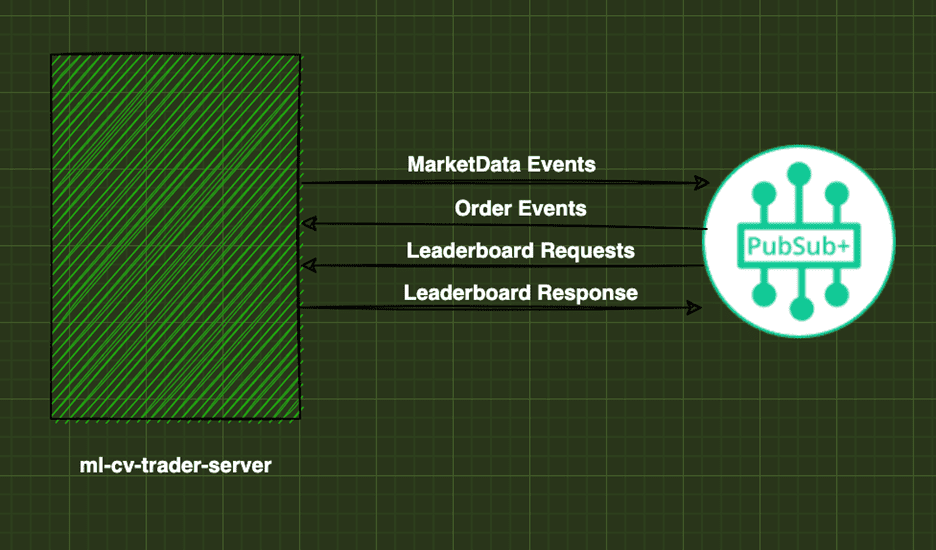

Back End

The back end consists of a Node.js server that has a few responsibilities:

- Produce market data events

- Save trading session results

- Return query results for the leaderboard
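The three back-end responsibilities can be sketched roughly as follows. This is a hypothetical illustration rather than the demo's server code: prices follow a simple random walk, the `market/data/<symbol>` topic naming is assumed, the `publish` callback stands in for whatever broker client call the server actually makes, and session results live in an in-memory array instead of a real data store.

```typescript
interface MarketDataEvent {
  topic: string;
  symbol: string;
  price: number;
  timestamp: number;
}

// Stand-in for the broker client's publish call (hypothetical).
type Publish = (event: MarketDataEvent) => void;

// Returns a tick function that moves each price by a random step of at most
// maxStepPct percent in either direction and publishes one event per symbol.
function makeTicker(
  prices: Map<string, number>,
  publish: Publish,
  random: () => number = Math.random,
  maxStepPct = 1,
): () => void {
  return () => {
    const now = Date.now();
    for (const [symbol, price] of prices) {
      const stepPct = (random() * 2 - 1) * maxStepPct;
      // Round to cents and keep the price strictly positive.
      const next = Math.max(0.01, Number((price * (1 + stepPct / 100)).toFixed(2)));
      prices.set(symbol, next);
      publish({ topic: `market/data/${symbol}`, symbol, price: next, timestamp: now });
    }
  };
}

// Session results and leaderboard, kept in memory for this sketch.
interface SessionResult {
  player: string;
  portfolioValue: number;
}
const results: SessionResult[] = [];

function saveSession(result: SessionResult): void {
  results.push(result);
}

// Highest portfolio values first.
function leaderboard(top: number): SessionResult[] {
  return [...results]
    .sort((a, b) => b.portfolioValue - a.portfolioValue)
    .slice(0, top);
}
```

On the server, something like `setInterval(makeTicker(prices, publishToBroker), 250)` would emit four updates a second; `publishToBroker` is a hypothetical wrapper around the broker client.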

Solace PubSub+ Event Broker

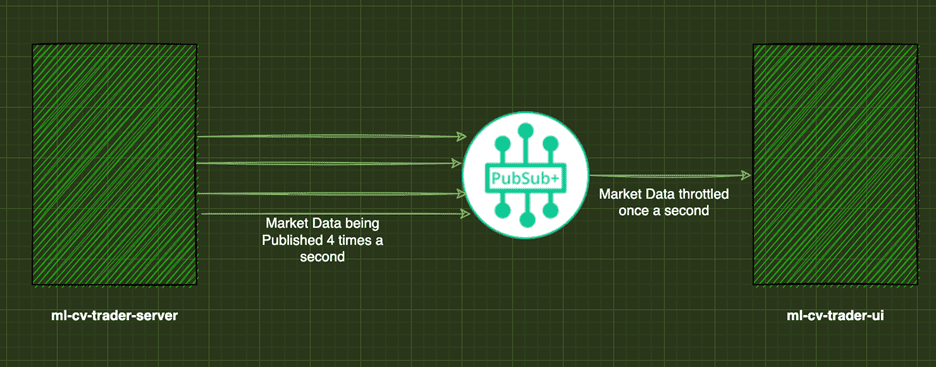

Solace PubSub+ Event Broker is an enterprise-grade event broker with many distinctive features, including a native WebSocket streaming protocol that lets you use it directly from HTML5 web apps, fine-grained filtering of event streams, an event mesh, and access control lists that restrict who can publish or subscribe to which data.
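Fine-grained filtering relies on hierarchical topics and wildcard subscriptions. The sketch below is a simplified model of Solace's topic-matching rules, written only to illustrate the idea and not the broker's implementation: levels are separated by `/`, a level ending in `*` matches any level that begins with the preceding text (so a bare `*` matches any single level), and `>` as the final level matches one or more remaining levels. The `market/data/...` topic names are hypothetical.

```typescript
// Simplified model of Solace topic-subscription matching:
//  - levels are separated by "/"
//  - a level ending in "*" matches any level starting with the text before "*"
//    (a bare "*" therefore matches any single level)
//  - ">" as the last level matches one or more remaining levels
function matches(subscription: string, topic: string): boolean {
  const sub = subscription.split("/");
  const top = topic.split("/");
  for (let i = 0; i < sub.length; i++) {
    const level = sub[i];
    if (level === ">" && i === sub.length - 1) {
      return top.length > i; // at least one more topic level must remain
    }
    if (i >= top.length) return false; // topic is shorter than the subscription
    if (level.endsWith("*")) {
      if (!top[i].startsWith(level.slice(0, -1))) return false;
    } else if (level !== top[i]) {
      return false;
    }
  }
  return sub.length === top.length; // no unmatched trailing topic levels
}
```

For example, a client subscribed to `market/data/*` would receive `market/data/ACME` but not `market/data/ACME/bid`, while a `market/>` subscription would receive both.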

Another distinctive feature of the Solace PubSub+ Event Broker is the ability to throttle event streams to subscribers using a feature called “eliding”. In this demo, the back-end server sends data updates four times a second, but we want the front-end client to receive updates at a lower frequency, in this case once a second. With eliding, the Solace PubSub+ Event Broker throttles a fast stream going to a client that doesn't need it at the full rate.
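Eliding is configured on the broker rather than coded in the application, but a small model helps show what a subscriber ends up receiving. The TypeScript sketch below is a simplified approximation, not Solace's implementation: per topic, it delivers at most one update every `intervalMs`, holds back anything that arrives too soon, and, when several updates arrive within one interval, keeps only the newest. The `Update` and `Delivery` types, the `elide` function, and the topic name are hypothetical.

```typescript
interface Update {
  topic: string;
  time: number; // arrival time in ms
  payload: number;
}
interface Delivery extends Update {
  deliveredAt: number;
}

// Simplified per-topic eliding model: at most one delivery per topic every
// intervalMs; updates arriving in between are held and only the newest survives.
function elide(updates: Update[], intervalMs: number): Delivery[] {
  // Group updates by topic, in arrival order.
  const byTopic = new Map<string, Update[]>();
  for (const u of [...updates].sort((a, b) => a.time - b.time)) {
    const list = byTopic.get(u.topic) ?? [];
    list.push(u);
    byTopic.set(u.topic, list);
  }

  const out: Delivery[] = [];
  for (const list of byTopic.values()) {
    let lastSent = -Infinity;
    let pending: Update | null = null;
    for (const u of list) {
      // The held update's interval expired before this one arrived: release it.
      if (pending !== null && lastSent + intervalMs < u.time) {
        lastSent += intervalMs;
        out.push({ ...pending, deliveredAt: lastSent });
        pending = null;
      }
      if (u.time - lastSent >= intervalMs) {
        // Enough time has passed: deliver now; any older held update is dropped.
        out.push({ ...u, deliveredAt: u.time });
        lastSent = u.time;
        pending = null;
      } else {
        pending = u; // a newer update replaces the held one
      }
    }
    if (pending !== null) out.push({ ...pending, deliveredAt: lastSent + intervalMs });
  }
  return out.sort((a, b) => a.deliveredAt - b.deliveredAt);
}
```

Feeding this model eight updates spaced 250 ms apart on one topic (the four-per-second rate described above) with `intervalMs = 1000` delivers only the first, fifth, and eighth updates, at 0 ms, 1000 ms, and 2000 ms. Each topic is throttled independently, so a slow-moving symbol is not delayed by a busy one.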

Conclusion

Generating interesting insights about your data is only part of what machine learning offers; to reap the real rewards, you need to be able to react to those insights in real time. Using an event mesh to distribute them is the best way to do so.

If you want to look at the code behind this application, visit the following GitHub repos:


Thomas Kunnumpurath