When deploying your kdb+ estate, you may have struggled to replicate your kdb+ instances efficiently around the globe or in the cloud. There are many strategies you can use, such as chained tickerplants or duplicated feedhandler processes, but all of them add complexity. In this post, I will explain why you might want to replicate kdb+ instances and show how you can do it natively with q and Solace PubSub+.

Why replicate your kdb+ instances?

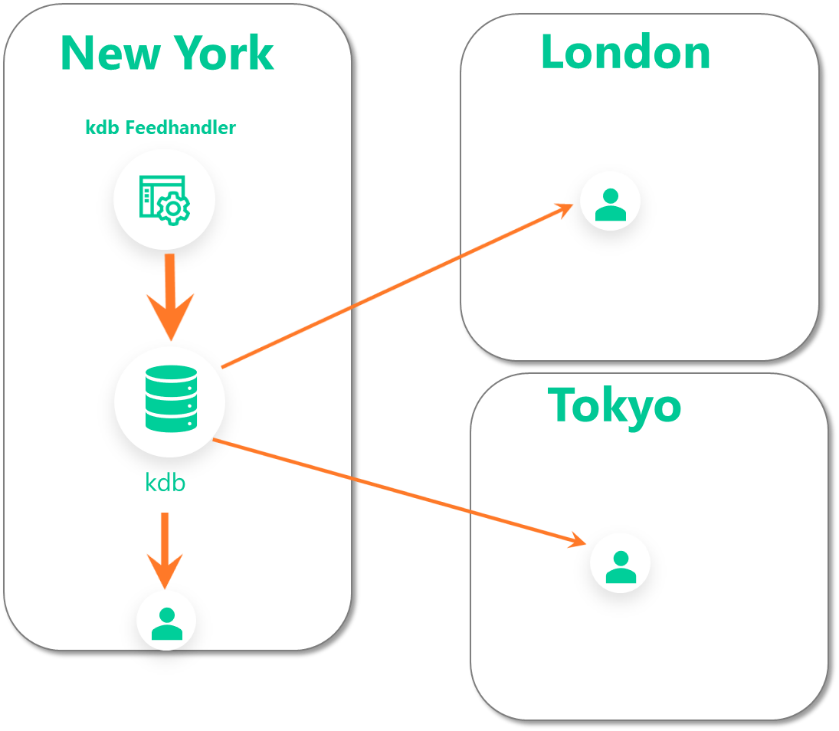

Your initial deployment of kdb+ applications may look something like the image above: your feedhandler pushes data into a kdb+ instance in New York, and users across the globe query that instance. As your user base grows, you may scale out your kdb+ instances horizontally, but every query and response still has to cross the WAN, so remote users keep paying the latency cost and this architecture scales poorly.

There are a few issues we would like to solve here by replicating your kdb+ instances across the globe:

- Reduce latency – If you have users querying from many locations, you want to minimize the time it takes for results to reach each of them.

- Reduce load on your kdb+ infrastructure – The longer a query takes to execute and deliver its results, the longer it ties up your kdb+ instance and the less responsive that instance becomes for everyone else.

- Improve resiliency – Keeping all your kdb+ infrastructure in a single location increases the chance that a single failure affects your entire user base.

Considerations for your kdb+ replication stack

Whichever methodology you use to replicate your kdb+ instances, you want to ensure the following:

- WAN latency does not affect the operation of your kdb+ instances. A WAN outage or a latency spike across your WAN links should not degrade the performance of your local kdb+ instances.

- Native integration with q. Rather than relying on infrastructure replication techniques such as SRDF, you would want the replication technique to be natively integrated with q.

- Control what is being replicated. Because the replication is implemented natively in q, you can write logic that decides exactly what is replicated, or even enrich the data as it is replicated.
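As an illustration of that control, here is a minimal q sketch that filters an update down to an allow-list of syms and tags each row with its source region before it would be handed to the publisher. The names `replicateSyms`, `prepareForReplication`, and `srcRegion`, and the sample data, are hypothetical and not part of the original implementation.

```q
/ Hypothetical allow-list: only these syms are replicated to other regions
replicateSyms:`AAPL`MSFT

/ Hypothetical helper: keep only allow-listed syms and add a column recording the source region
prepareForReplication:{[region;d]
  update srcRegion:region from select from d where sym in replicateSyms
  }

/ Made-up update, shaped like what a tickerplant subscriber receives for the quote table
d:([]time:3#.z.P;sym:`AAPL`IBM`MSFT;bid:100 50 200f;ask:101 51 201f;bsize:10 20 30i;asize:11 21 31i)

/ IBM is dropped; the AAPL and MSFT rows gain srcRegion=`NY
r:prepareForReplication[`NY;d]
```

In practice, a function like this would run inside the tickerplant subscriber's `upd` before the data is serialized and sent.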

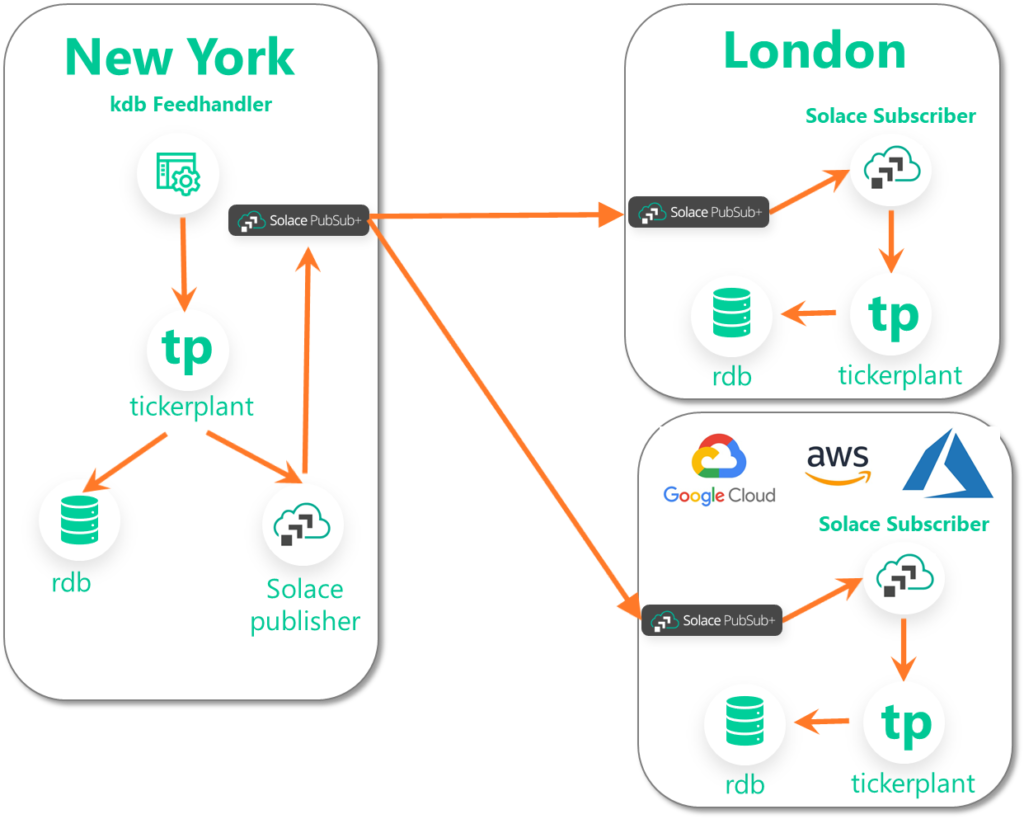

Replicating your kdb+ data with Solace PubSub+

As discussed in the previous post, the Kx Fusion Interface lets you send messages natively from q to Solace PubSub+. By combining this with a subscription to your tickerplant, you can send updates to a Solace PubSub+ Event Broker in one region, have Solace PubSub+ forward the messages to brokers in another region, and then push the updates into the tickerplant in that region. A further benefit of this pattern is that it extends to the public cloud.

Creating an Event Mesh

Creating an event mesh (a cluster of interconnected brokers) sounds complicated, but it is straightforward with Solace PubSub+. Follow the instructions in this video to link brokers together.

A sample implementation

Consider a single table quote with the following schema:

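| quote column | Type | Meaning |
|---|---|---|
| time | timestamp | quote time |
| sym | symbol | instrument symbol |
| bid | float | bid price |
| ask | float | ask price |
| bsize | int | bid size |
| asize | int | ask size |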

```q
quote:([]time:`timestamp$();sym:`symbol$();bid:`float$();ask:`float$();bsize:`int$();asize:`int$())
```
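To sanity-check the schema, you could insert a sample row and inspect the column types with `meta`. The sym, prices, and sizes below are made-up values for illustration only.

```q
/ Empty quote table, using the schema above
quote:([]time:`timestamp$();sym:`symbol$();bid:`float$();ask:`float$();bsize:`int$();asize:`int$())

/ Insert one made-up row; each value must match its column type (float prices, int sizes)
`quote insert (.z.P;`AAPL;189.5;189.6;100i;200i)

/ meta lists each column's type character: p=timestamp, s=symbol, f=float, i=int
meta quote
```

Getting these types right matters later, because the subscriber has to cast the decoded JSON values back to exactly these types before publishing to the remote tickerplant.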

To set up a process that subscribes to your tickerplant and publishes onto Solace PubSub+, you could do something like this:

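```q
/ Called by the tickerplant as upd[t;d] for each published update.
/ Publishes one JSON message per distinct sym on the topic solace/kdb/<table>/<sym>.
/ Assumes a Solace session has already been initialized, as described in the previous post.
/ Topics are cast to symbols (assumed to be what .solace.sendDirect expects).
sendToSolace:{[t;d]
  if[not 98h=type d;:(::)];
  d:update `g#sym from d;
  s:exec distinct sym from d;
  topics:{[t;s] `$"/" sv ("solace/kdb";string t;string s)}[t] each s;
  json:{[d;s] .j.j select from d where sym=s}[d] each s;
  .solace.sendDirect'[topics;json]
  }

upd:sendToSolace;

/ Subscribe to all syms of the quote table on the local tickerplant (port 5010, the kdb+tick default, is an assumption)
tp:hopen `::5010;
tp(".u.sub";`quote;`);
```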

In a nutshell, the code above takes the table name and the distinct syms in each update, constructs one well-defined topic per sym (for example, solace/kdb/quote/AAPL), and publishes that sym's rows as a JSON array onto Solace PubSub+.
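To see what the publisher would emit without a broker, the sketch below runs the same topic and JSON construction used in `sendToSolace` over a small sample update and returns the (topics; payloads) pair instead of sending it. The helper name `buildMessages` and the sample data are hypothetical.

```q
/ Hypothetical helper mirroring sendToSolace, but returning (topics;payloads) instead of publishing
buildMessages:{[t;d]
  s:exec distinct sym from d;
  topics:{[t;s] "/" sv ("solace/kdb";string t;string s)}[t] each s;
  json:{[d;s] .j.j select from d where sym=s}[d] each s;
  (topics;json)
  }

/ Made-up update: two AAPL rows and one MSFT row
d:([]time:3#.z.P;sym:`AAPL`MSFT`AAPL;bid:1 2 3f;ask:1.5 2.5 3.5;bsize:10 20 30i;asize:11 21 31i)

/ m 0 holds one topic per distinct sym; m 1 holds the matching JSON payloads
m:buildMessages[`quote;d]
```

Here `m 0` is `("solace/kdb/quote/AAPL";"solace/kdb/quote/MSFT")`, and the first payload is a JSON array containing both AAPL rows. Grouping by sym means a subscriber can use topic wildcards to pick up only the instruments it cares about.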

In the other region, set up a Solace PubSub+ subscriber using something like the following code:

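```q
/ Handle to the tickerplant in this region (port 5010, the kdb+tick default, is an assumption)
h:hopen `::5010;

/ Solace callback: decode the JSON payload and publish the rows to the local tickerplant.
/ Every row is stamped with the local receive time; (count j)#.z.P gives one timestamp per row.
onmsg:{[dest;payload;dict]
  j:.j.k "c"$payload;
  h(".u.upd";`quote;((count j)#.z.P;exec `$sym from j;exec "f"$bid from j;exec "f"$ask from j;exec "i"$bsize from j;exec "i"$asize from j))
  }

.solace.setTopicMsgCallback`onmsg;
.solace.subscribeTopic[`$"solace/kdb/quote/>";1b];
```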

The code above subscribes to all messages whose topic starts with solace/kdb/quote/ and pushes their rows into the quote table through the local tickerplant.
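The decoding step inside `onmsg` can be exercised on its own. In this sketch, a JSON payload like the publisher's is converted to bytes (assumed to be how the Solace callback delivers it), decoded with `.j.k`, and cast back into a typed column list ready for `.u.upd`. The helper name `toColumns` and the sample rows are hypothetical.

```q
/ Hypothetical helper: payload bytes -> typed column list for .u.upd.
/ .j.k turns a JSON array of objects into a table: strings stay strings and numbers become floats,
/ so sym is cast back to symbol and the sizes back to int.
toColumns:{[payload]
  j:.j.k "c"$payload;
  ((count j)#.z.P;`$j`sym;"f"$j`bid;"f"$j`ask;"i"$j`bsize;"i"$j`asize)
  }

/ Simulate a payload: serialize two made-up rows and convert the JSON string to bytes
sample:([]sym:`AAPL`AAPL;bid:1 3f;ask:1.5 3.5;bsize:10 30i;asize:11 31i)
payload:"x"$.j.j sample

colList:toColumns payload
```

Each element of `colList` is a vector of the same length, which is the column-list shape a tickerplant's `.u.upd` expects for a multi-row update.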

Conclusion

Using Solace PubSub+ with kdb+ gives you a low-touch, frictionless way to build a robust replication strategy for your kdb+ stack. The Solace PubSub+ Event Broker is also free to use as a Docker container. You can find all the code referenced above in the kdb-tick-solace repo.

kdb+ Solace Integration Repo by solacese



Thomas Kunnumpurath
