If you look around, you might think the matter of real-time IoT data streaming has been settled. You get real-time alerts about stock prices, an alert from the pizza shop when your pie goes in the oven, when it’s ready, and when it’s on its way, and even a goal scored by your favourite team pops up on your phone.

It feels like so many things we used to wait for are now near-instant. All the niggles have been (or are quickly being) ironed out. But is that really the case? No.

When you read stats like “by 2025 we can expect to see a total of 30 billion IoT devices, up 50% from 20 billion in 2020,” you are awed, but what is it worth? Why do “things” around us have to get “internet-enabled,” especially since the things we do are getting “faster” anyway? That’s where the power of IoT, data streaming, and their ecosystems comes in.

Real-Time Data Streaming – A Business Opportunity

IoT is creating new opportunities for businesses, individuals, and society as a whole. For example, in the case of autonomous vehicles, people with varying levels of ability will be able to travel and commute with a greater degree of independence. Concurrently, the car manufacturers will be able to offer in-car services for maintenance and entertainment.

If you take an even broader perspective, the insurance companies appear convinced that autonomous vehicles will be safer than human-driven cars. This will lower their risk and enable them to expand their coverage at a lower premium. The same goes for a city or highway division, which can potentially make commuting faster (and safer) by governing speed limits in real-time and better managing traffic density with smart, autonomous public transit options.

People like us benefit from the outcome, but it is a series of complex analyses and decisions at the system level that makes it all happen. This is where real-time data streaming between IoT systems comes into play.

To give you a deeper perspective, I interviewed our resident IoT expert – Vats Vanamamalai. Vats has extensive experience in two lifeline sectors – Telecom and Energy. Here is a transcript of what we talked about:

It seems like organizations in almost every industry can articulate their problems, a vendor can build a custom package for them, and that’s it. Is there a problem with that approach?

Vats: Yes. The challenge with point solutions is that they focus on closed-loop problems. For example, imagine an actuator that’s connected to a thermometer in a refinery, where any change in temperature triggers action by the actuator. There is little room or need for decoupling there. But when you look at the refinery as a whole, the health of the actuator matters, and so does the number of times it was activated. You need to record what’s going on, and your IT and operations components need to be able to communicate and track the activities that interest you.

Can you explain what you mean by “interest” here? What’s to gain by simply observing what’s going on?

Vats: Say events are propagated to several systems that are interested in them. Some of those systems might be on site and need to dispatch a technician if three incidents of high temperature occur, while other systems need information about those incidents so they can do more complex modeling and analysis. Such capabilities are typically leased in the cloud, but the action still has to happen at the edge.
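To make that concrete, here is a minimal in-process sketch of the pattern Vats describes: one temperature event is delivered to an on-site system that dispatches a technician after three high readings, and to a second system that simply keeps every reading for later analysis. `TinyBus`, `OnSiteDispatcher`, `CloudArchive`, the topic name, and the 90 °C limit are all hypothetical names and values chosen for illustration; a real deployment would use an actual event broker rather than a Python class.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Event = Dict[str, object]


class TinyBus:
    """In-process stand-in for an event broker (illustration only)."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable[[Event], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[Event], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: Event) -> None:
        # Every interested system receives its own copy of the event.
        for handler in self._subscribers.get(topic, []):
            handler(event)


class OnSiteDispatcher:
    """Acts at the edge: dispatches after N high-temperature incidents."""

    def __init__(self, limit_c: float, incidents_before_dispatch: int = 3) -> None:
        self.limit_c = limit_c
        self.incidents_before_dispatch = incidents_before_dispatch
        self.high_count = 0
        self.dispatches: List[str] = []

    def on_reading(self, event: Event) -> None:
        if float(event["temp_c"]) > self.limit_c:
            self.high_count += 1
            if self.high_count == self.incidents_before_dispatch:
                self.dispatches.append(str(event["sensor"]))
                self.high_count = 0  # start counting again after a dispatch


class CloudArchive:
    """Keeps every reading so heavier modeling can happen elsewhere."""

    def __init__(self) -> None:
        self.history: List[Event] = []

    def on_reading(self, event: Event) -> None:
        self.history.append(event)


def run_demo():
    topic = "refinery/unit1/temperature"  # hypothetical topic name
    bus = TinyBus()
    dispatcher = OnSiteDispatcher(limit_c=90.0)
    archive = CloudArchive()
    bus.subscribe(topic, dispatcher.on_reading)
    bus.subscribe(topic, archive.on_reading)
    for temp in [85.0, 95.0, 96.0, 88.0, 97.0]:
        bus.publish(topic, {"sensor": "thermo-1", "temp_c": temp})
    return dispatcher, archive
```

The publisher never learns who is listening. Adding a third interested system is one more `subscribe` call, with no change to the sensor side.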

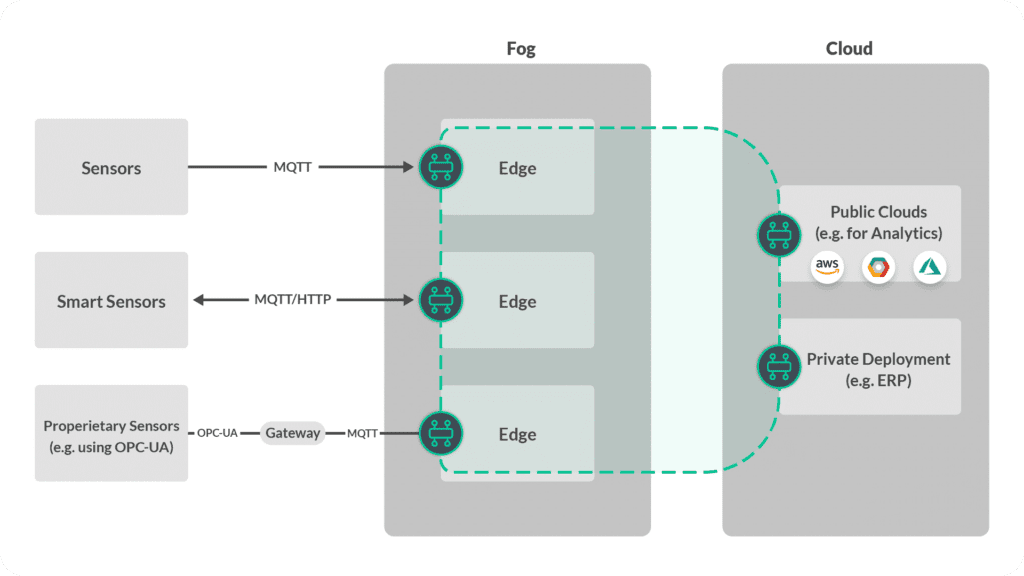

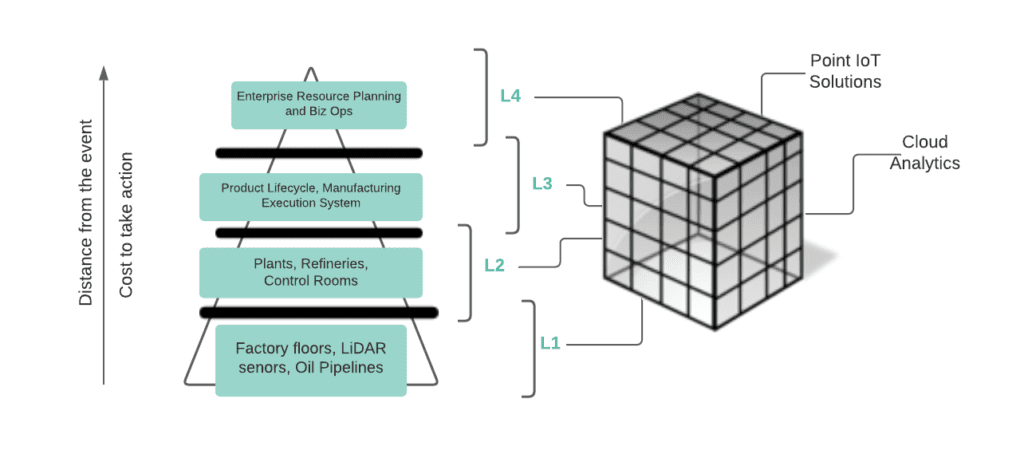

I often say, infer in the cloud and act at the edge. As an example, here is how an event mesh organizes the levels of industrial IoT.

That looks straightforward. Is there something architects tend to underestimate in terms of complexity?

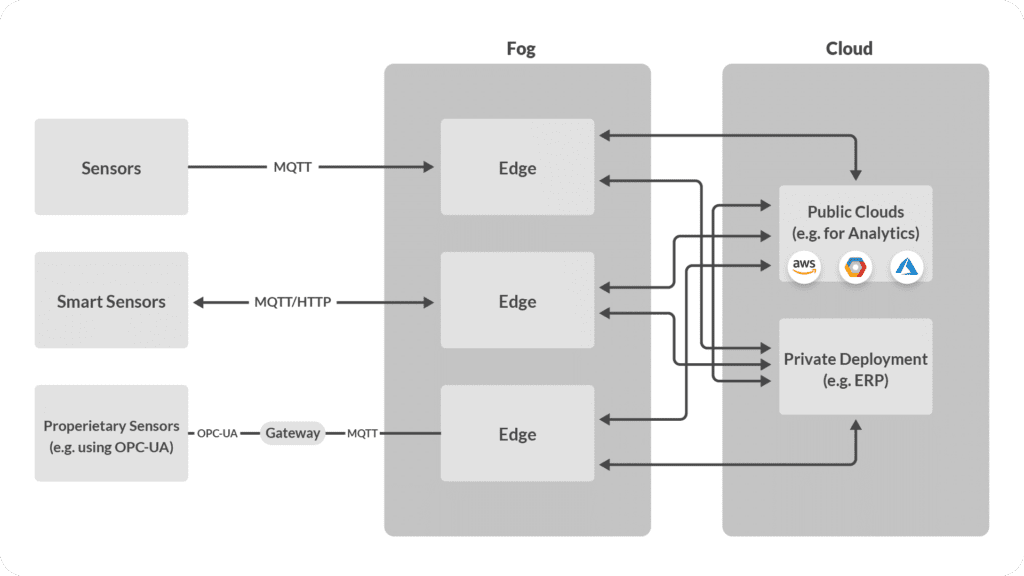

Vats: Yes, let me take the event mesh out of the picture and show you what can potentially lie right under it.

As applications become more widely distributed as microservices across on-premises and multi-cloud environments, new bottlenecks are appearing.

The edge layer has a lot to share for analytics purposes, but it is hard to manage because you need to bridge the traffic from datacenters or on-prem applications to your core, which might be running in a public cloud environment. In the case of an oil extraction and processing operation, the data might end up travelling thousands of miles before it means anything to the business. Now imagine trying to leverage the best-of-breed capabilities of several commercial off-the-shelf solutions in this setup. Some are bespoke point solutions from GE or Bosch, the machine learning solutions come from Google Cloud, and the data archiving works best in AWS, for example.

It’s clear that, now more than ever, you need a solution for efficient data distribution among large numbers of consuming applications at the edge, in the cloud, or across hybrid cloud.

Can you explain this handshake of IT and OT systems, and what’s still to solve there?

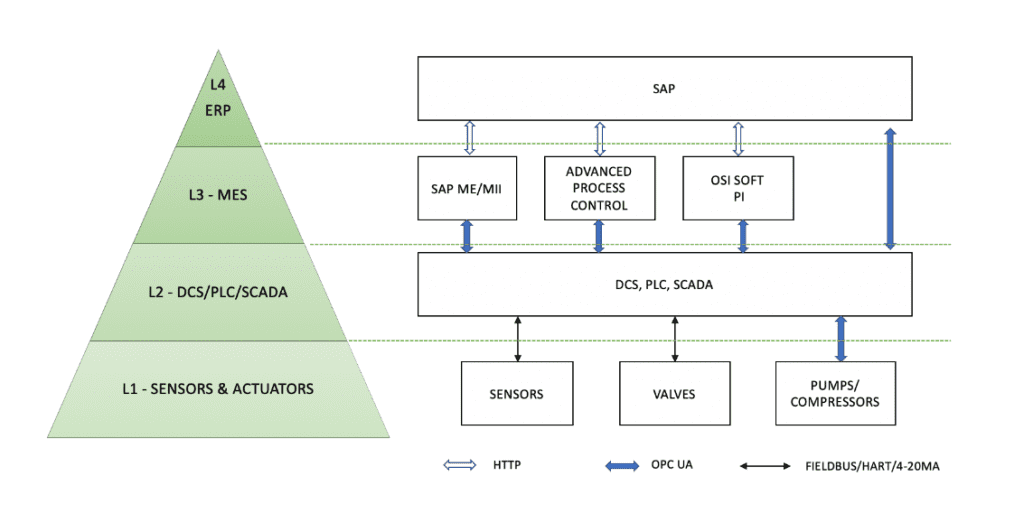

Vats: Sure. Let’s zoom in on Industry 4.0. It has parallels in other sectors, so all architects should recognize the need for their IT and OT systems to blend at some level:

Data exchange between OT and IT systems is continuous, and discrete process manufacturing industries – such as oil and gas, energy production, chemical plants, and car manufacturing – follow guidelines specified by ISA/ANSI 95. These specs define automated interfaces between applications and industrial control systems, and define four levels into which technologies and business processes fit. Here is how L1 to L4 stack up:

Level 4 systems are often integrated and exchange data with systems in level 2 and level 3. There is a lot of tight coupling here, with modules and code that fit this and only this reference architecture.

Recent Industry 4.0 and IIoT trends spurred by cloud computing, analytics, and human-machine interfaces have enabled more efficient ways of solving problems in asset utilization, equipment monitoring, safety/health, and predictive maintenance. These solutions often require data exchange among microservices distributed across cloud and on-premises environments.

As the requirements for Industry 4.0 and IIoT evolve, the tight coupling and synchronous polling architecture of OPC UA impact the performance of the source PLC/DCS systems, which are otherwise designed for process control.
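A rough way to see why polling hurts the source system is to count reads. The sketch below assumes every consuming application polls the PLC directly once per cycle, and compares that with a single gateway reading once per cycle and letting a broker handle the fan-out. `CountingPlc` and both functions are hypothetical stand-ins rather than part of any OPC UA library, and the numbers are only illustrative.

```python
class CountingPlc:
    """Stand-in for a PLC that counts how often it is asked for a value."""

    def __init__(self) -> None:
        self.reads = 0

    def read_temperature(self) -> float:
        self.reads += 1
        return 72.5  # fixed dummy value; only the read count matters here


def direct_polling(plc: CountingPlc, consumers: int, cycles: int) -> int:
    """Each consumer polls the PLC itself on every cycle."""
    for _ in range(cycles):
        for _ in range(consumers):
            plc.read_temperature()
    return plc.reads


def via_gateway(plc: CountingPlc, consumers: int, cycles: int):
    """One gateway reads per cycle; the broker delivers to all consumers."""
    delivered = 0
    for _ in range(cycles):
        plc.read_temperature()   # the only load placed on the PLC
        delivered += consumers   # fan-out happens downstream of the PLC
    return plc.reads, delivered
```

With 20 consumers over 60 cycles, `direct_polling` puts 1,200 reads on the PLC, while `via_gateway` puts 60 reads on it and still delivers 1,200 values. The load on the PLC stops growing with the number of consumers.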

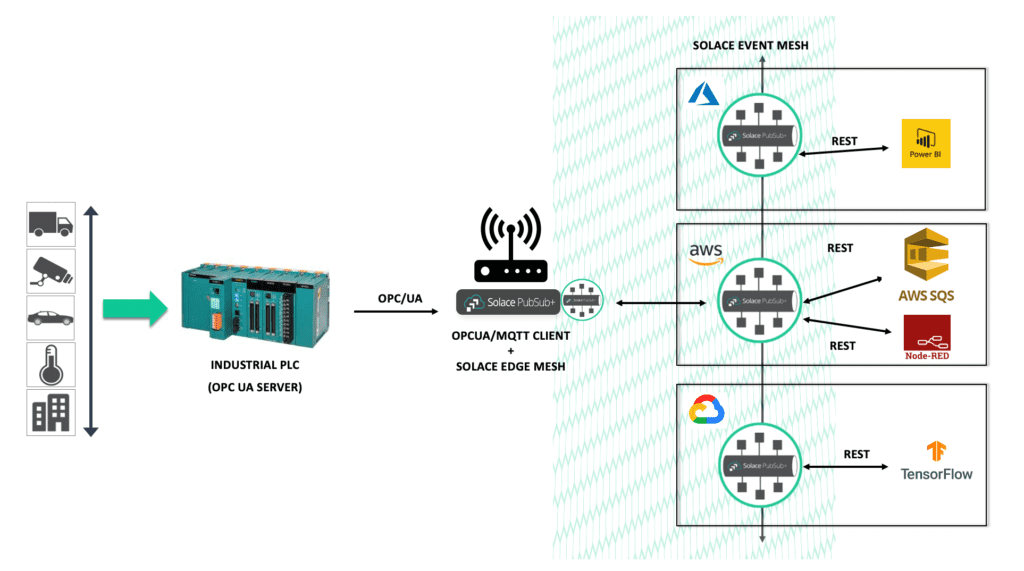

Here is a real-life example: With an event mesh in place, the PLC/DCS data doesn’t have to be integrated in a bespoke point-to-point manner with each of the consuming applications. Instead, it can fan out sensor OPC event streams via an OPC UA-MQTT gateway into an event broker. PubSub+ Event Broker can then make process control data available asynchronously to a large number of consuming applications in a decoupled way across the event mesh.

Event-Enabling Industry 4.0 and IIoT Data Streams from the Edge Using OPC UA and an Event Mesh

An event mesh coupled with OPC UA eliminates data bottlenecks and event-enables IIoT and Industry 4.0 data at the edge and across cloud applications.

In the illustration below, the PLC publishes sensor streams over OPC UA to an edge gateway, where the OPC UA streams are converted to MQTT streams and published to a Solace edge broker. The edge broker is bridged into a larger event mesh made up of PubSub+ event brokers deployed on multiple public and partner clouds.
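The conversion step at the edge gateway can be sketched as a small mapping from an OPC UA reading to an MQTT topic and payload. This is only an illustration under stated assumptions: the `OpcReading` type, the `to_mqtt` function, the `site/opcua/...` topic layout, and the JSON field names are all made up for this example, and the node ID is assumed to use the string form `ns=<n>;s=<identifier>`. Actually publishing the result would be done with an MQTT client library, which is left out here.

```python
import json
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class OpcReading:
    node_id: str    # assumed string NodeId, e.g. "ns=2;s=Unit1.Temperature"
    value: float
    timestamp: str  # ISO 8601 timestamp supplied by the source


def to_mqtt(reading: OpcReading, site: str) -> Tuple[str, bytes]:
    """Map one OPC UA reading to a (topic, payload) pair for MQTT."""
    # Use the part after ";s=" and turn dotted names into topic levels.
    identifier = reading.node_id.split(";s=", 1)[-1]
    levels = identifier.replace(".", "/").lower()
    topic = f"{site}/opcua/{levels}"  # hypothetical topic layout
    payload = json.dumps(
        {"node_id": reading.node_id, "value": reading.value, "ts": reading.timestamp},
        sort_keys=True,
    ).encode("utf-8")
    return topic, payload


reading = OpcReading("ns=2;s=Unit1.Temperature", 93.4, "2021-03-01T12:00:00Z")
topic, payload = to_mqtt(reading, site="refinery-a")
```

Putting the plant hierarchy into the topic, rather than only into the payload, lets downstream consumers select the streams they care about by topic alone.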

In this example, the consuming applications are distributed across three public clouds: Power BI on Azure Cloud, TensorFlow ML on Google Cloud, and Node-RED and SQS on AWS Cloud.
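Each of those consumers typically wants only part of the stream, and MQTT-style topic filters are one way to express that. The sketch below is a simplified matcher for the two MQTT wildcards (`+` for exactly one level, `#` for all remaining levels); it ignores special cases such as topics beginning with `$`. The topic names and the filters assigned to each consumer are hypothetical.

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """Simplified MQTT topic-filter match supporting '+' and '#'."""
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(filter_levels):
        if level == "#":
            return True  # matches everything from this level down
        if i >= len(topic_levels):
            return False  # filter is longer than the topic
        if level != "+" and level != topic_levels[i]:
            return False
    return len(filter_levels) == len(topic_levels)


# Hypothetical filters for the three kinds of consumers.
subscriptions = {
    "dashboard": "refinery-a/opcua/+/temperature",  # one metric, every unit
    "ml-training": "refinery-a/#",                  # everything from one site
    "alerts": "refinery-a/opcua/unit1/pressure",    # one exact stream
}

event_topic = "refinery-a/opcua/unit1/temperature"
receivers = sorted(
    name for name, flt in subscriptions.items() if topic_matches(flt, event_topic)
)
```

For the event topic above, `receivers` ends up as `["dashboard", "ml-training"]`: the pressure-only subscription is not disturbed, and the publisher did nothing to make that happen.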

Conclusion

I hope you found my chat with Vats about real-time IoT data streaming useful and feel excited about the prospects of implementing event-driven architecture. An easy way to think of an event mesh in the context of IoT is this: An event mesh is an architectural construct where you can connect your IoT/services/platform and offload all the heavy work of real-time data streaming.

Technically speaking, here is what you gain:

- Dynamic, bi-directional event streaming across hybrid- and multi-cloud environments.

- Easy translation and communication between diverse devices, applications (new and old) and integration technologies.

- Fast, reliable and secure event streaming.

From a business perspective, this real-time data streaming between a wide variety of IoT “things” will help power use cases like:

- Preventive maintenance of machines and devices

- Real-time visibility and control of transportation, logistics and supply chains

- Smart transit and other smart city initiatives

- Next-gen product lifecycle management

Learn more about our value proposition for IoT use cases and how to bring event-driven thinking to your enterprise.

Gaurav Suman