This blog post is part of a series on modernizing your post-trade infrastructure with an event-driven approach using PubSub+ Platform. In previous posts, my colleague Vidya described how event-driven architecture addresses the challenges associated with post-trade transaction processing in capital markets, and Victor explained why you need an event-driven post-trade system to harness the power of reference data.

Now I’d like to give you an example of how the Solace PubSub+ Platform has been deployed to distribute trade events to multiple post-trade systems at several of our customers. I’m going to describe a common use case seen across capital markets customers and how Solace PubSub+ Event Broker has been used to solve the associated challenges.

The post-trade processing environment is complex, with a wide range of systems, components, and business relationships, and a plethora of events flowing between them. The challenge is to ensure that the various events generated in this landscape get to where they need to be, because timely dissemination of post-trade data to downstream systems is critical. For example, if stock and cash position events are not routed to consumers in real time, the organization is exposed to settlement risk.

PubSub+ event brokers have been deployed at investment banks, prop trading firms, hedge funds, and stock exchanges to simplify the distribution of data between trading systems in the front office and the back-office post-trade systems that handle settlement, reporting, market surveillance, data warehousing, and more. Our event brokers excel at handling slow and disconnected consumers without affecting online consumers, making them a strong choice for the integration fabric between trading systems and downstream post-trade systems.

Trade Distribution in Capital Markets

A simple capital market landscape (for stock exchanges) consists of a front office, a middle office, and a back office.

The front office is responsible for trading and the flow of orders. It is where all the action starts, with orders placed by clients via order entry or FIX gateways. The heart of the front office is the matching engine, which aggregates outstanding client orders and decides how to fill them by matching buy and sell orders to form a trade. The matching engine generates very high volumes of trades with latency measured in microseconds.

Trades generated in the front office are aggregated and distributed to post-trade systems in the middle and back offices, which are responsible for the post-trade processing activities, such as performing settlements, reporting, calculating risk, warehousing data, performing trade surveillance, etc.

Challenge of Distributing Events Reliably at this Volume

How do you ingest the deluge of data generated in the front office and distribute it to the various interested systems in the back office in a fast, robust, and secure manner? The main requirements are as follows:

- Distribute the post-trade data to multiple downstream systems.

- Maintain the sequence of trade messages, with no gaps.

- Support a high throughput of incoming trade messages with low latency.

- Ensure that any spikes in the incoming trade data don’t impact downstream systems.

- Any downstream system that’s slow or unable to consume trades should not impact the others.

- Be highly available and fault tolerant.

- Provide a high degree of management visibility and control.

Acme Exchange’s Post-Trade Distribution Platform

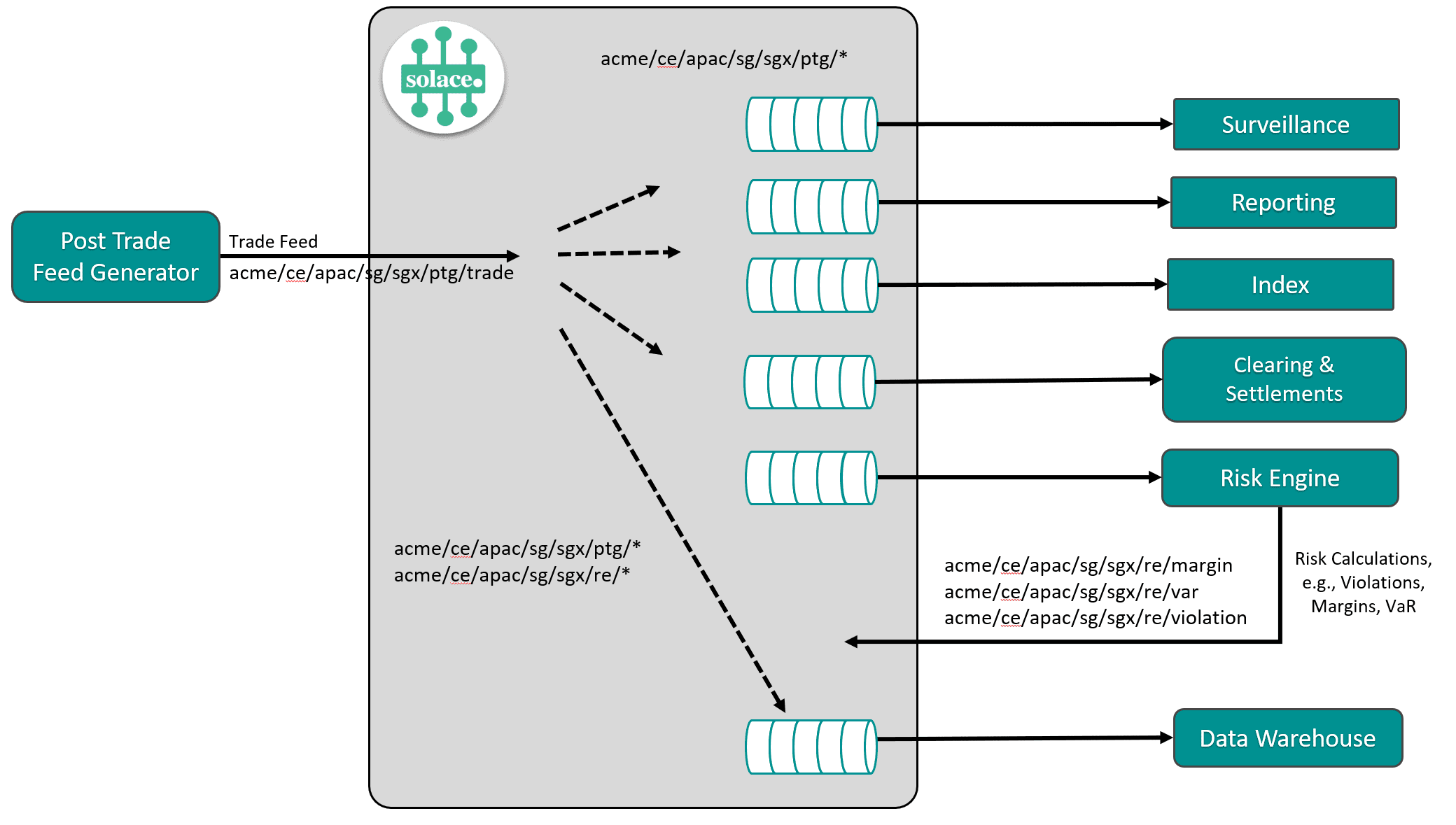

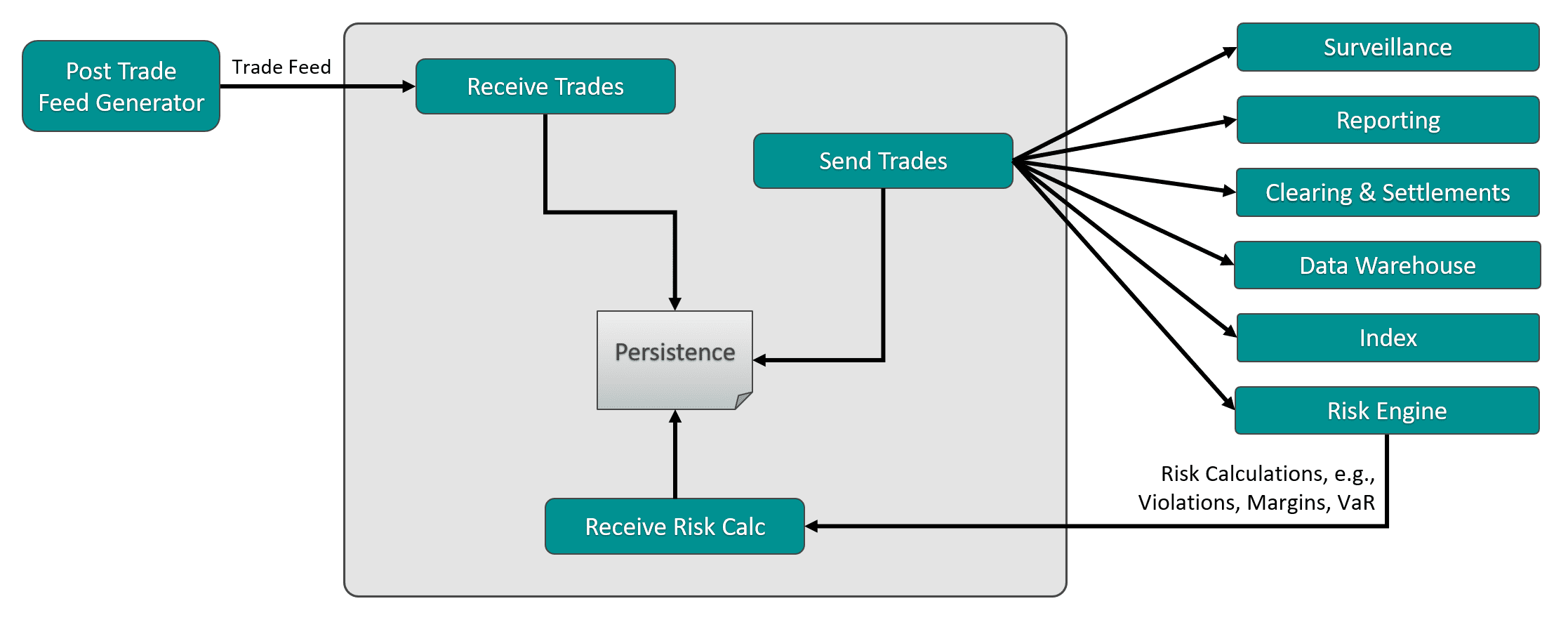

One customer, which we’ll call Acme Exchange, wanted to distribute trade data generated in the front office to systems in the back office for post-trade processing. They’d built an in-house system that received trade data from a post-trade generator and distributed it to the various downstream processes. Here’s a highly simplified representation:

Figure 1: Acme’s trade distribution platform

The system used TCP socket connections to receive trade data from the post-trade distribution engines. Similarly, it established an outgoing TCP socket connection with each downstream system that needed that data. To make sure the processes could withstand network disruptions on upstream and downstream connections, incoming trade data was written to an intermediate persistent store. While most data flows consisted of ingesting trade data and distributing it to downstream systems, in some cases downstream systems published data back to the system for further distribution. For example, the risk engine calculated margins, violations, and VaR, and those results needed to be distributed. Each downstream system in turn fed other downstream systems, which are not shown for simplicity.

This system met the requirements described above, but suffered from some notable issues:

- Applications were linked via point-to-point connections, and the tight coupling led to design limitations and network and operational complexities.

- To support more trades, they had to run multiple instances, which resulted in operational complexity and support challenges.

- There was a lack of deterministic high availability. If the active instance failed, failover to the standby instance took an unpredictable amount of time.

- Acme runs a DR site and replicated trade data to it with synchronous database replication, but their failovers didn’t always meet the required RTO and RPO.

- It took them 1-3 months to onboard new downstream systems.

- It took a dedicated team to monitor the system and keep it running.

Implementing an Event-Driven Solution

With rising global trade volumes and market volatility, exchanges are looking to modernize their post-trade distribution by adopting an event-driven approach. Acme’s architects wanted to adopt modern, agile approaches like asynchronous event-driven design, enable cloud integration to take advantage of cloud services such as machine learning, and use container orchestration platforms such as Kubernetes to dynamically scale and manage workloads. So when they came across Solace’s event distribution platform, they decided to replace their homegrown system with it as part of their digital transformation initiative to build a modern post-trade distribution system.

Replacing their homegrown system with Solace event brokers required the architects at Acme to use an event-driven methodology to integrate their applications. The first step was to integrate the applications with the event broker using event-driven publish-subscribe messaging, effectively decoupling their upstream and downstream systems.

The post-trade feed gateway publishes trade events to the event broker: each trade event is published on a topic, and the event payload consists of the trade message. The topic describes the event and identifies what it represents. More on that later.

They translated each data flow to a Solace topic and published messages on that topic to the broker as guaranteed messages. (Guaranteed messaging is Solace’s implementation of persistence, used to ensure that no message accepted by the Solace event broker is ever lost.)

Solace’s guaranteed messaging also acts as an efficient shock absorber in front of the downstream post-trade systems: the event broker absorbs bursts of post-trade messages and plays them out to consumers at a rate they can accept. This is done by creating a queue for each consumer system. Solace’s topic-to-queue mapping feature lets each queue attract messages matching a set of topic subscriptions, so each consumer receives only the types of trade messages it is interested in, providing fine-grained filtering.
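To make the topic-to-queue mapping and shock-absorber idea concrete, here is a minimal Python sketch. It is a toy in-memory model, not the Solace API: the `Broker` class, its methods, and the queue names are hypothetical, and subscriptions are exact-match only, with wildcards left out for brevity. Each queue gets its own copy of every matching message, so a slow consumer simply leaves messages waiting in its own queue without holding up the others.

```python
from collections import deque


class Broker:
    """Hypothetical toy model of topic-to-queue mapping (not the Solace API).

    Subscriptions are exact topic matches only; wildcards are omitted.
    """

    def __init__(self):
        self.queues = {}         # queue name -> deque of (topic, payload)
        self.subscriptions = {}  # queue name -> set of subscribed topics

    def create_queue(self, name, topics):
        self.queues[name] = deque()
        self.subscriptions[name] = set(topics)

    def publish(self, topic, payload):
        # Fan out: copy the message into every queue whose subscriptions match.
        for name, topics in self.subscriptions.items():
            if topic in topics:
                self.queues[name].append((topic, payload))

    def consume(self, name, max_messages):
        # Each consumer drains its own queue at its own pace; the rest stays queued.
        q = self.queues[name]
        return [q.popleft() for _ in range(min(max_messages, len(q)))]


broker = Broker()
broker.create_queue("settlement", ["acme/ce/apac/sg/sgx/ptg/trade"])
broker.create_queue("risk", ["acme/ce/apac/sg/sgx/ptg/trade"])
broker.create_queue("surveillance", ["acme/ce/apac/sg/sgx/re/violation"])

# A burst of five trades arrives at once.
for i in range(1, 6):
    broker.publish("acme/ce/apac/sg/sgx/ptg/trade", {"trade_id": i})

fast = broker.consume("settlement", max_messages=5)  # keeps up with the burst
slow = broker.consume("risk", max_messages=2)        # slower consumer
print(len(fast), len(slow), len(broker.queues["risk"]))  # 5 2 3
print(len(broker.queues["surveillance"]))                # 0
```

The slow "risk" consumer still has three trades buffered in its queue, while "settlement" is fully caught up and "surveillance" never received trades it did not subscribe to.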

The Solace event broker dynamically filters the message stream and delivers only those that satisfy a given recipient’s topic subscriptions. The messages are delivered in a persistent manner, so if the consumer goes down, messages are spooled on the broker. This allows the consumer to continue processing messages when they reconnect with no loss of trade data.
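The store-until-acknowledged behaviour described above can be illustrated with a small Python sketch. It assumes a hypothetical `SpooledQueue` class and a simple acknowledge-by-sequence-number scheme; it models the idea that a message leaves the queue only once the consumer acknowledges it, not how the broker actually implements guaranteed messaging.

```python
from collections import OrderedDict


class SpooledQueue:
    """Hypothetical toy durable queue: a message is removed only after it is acked."""

    def __init__(self):
        self.spool = OrderedDict()  # seq -> payload, kept in publish order
        self.in_flight = set()      # seqs delivered on the current connection, not yet acked
        self.next_seq = 1

    def enqueue(self, payload):
        # Messages are spooled whether or not a consumer is currently connected.
        self.spool[self.next_seq] = payload
        self.next_seq += 1

    def deliver(self, max_messages):
        # Hand out the oldest spooled messages not already in flight, in order.
        batch = []
        for seq, payload in self.spool.items():
            if len(batch) == max_messages:
                break
            if seq not in self.in_flight:
                self.in_flight.add(seq)
                batch.append((seq, payload))
        return batch

    def ack(self, seq):
        # Only an acknowledgement removes a message from the spool.
        del self.spool[seq]
        self.in_flight.discard(seq)

    def reconnect(self):
        # Unacked messages from the lost connection become eligible for redelivery.
        self.in_flight.clear()


q = SpooledQueue()
for trade_id in (101, 102, 103):
    q.enqueue({"trade_id": trade_id})

first = q.deliver(max_messages=2)  # consumer receives 101 and 102
q.ack(first[0][0])                 # acks 101, then goes down before acking 102
q.enqueue({"trade_id": 104})       # arrives while the consumer is down

q.reconnect()
replay = q.deliver(max_messages=10)
print([p["trade_id"] for _, p in replay])  # [102, 103, 104]
```

On reconnect the consumer picks up exactly where it left off: the unacknowledged trade 102 is redelivered first, followed by everything spooled while it was away.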

Let’s look at an example of the topic hierarchy and topic flows for this use case. The topic hierarchy chosen for this use case was as follows:

acme/<asset class>/<region>/<country>/<exchange>/<source system>/<event type>

- asset class: Cash Equities, Futures, Options, ETF, Bonds, etc.

- region: Americas, EMEA, APAC

- country: ISO country code

- exchange: ISO exchange code

- source system: the system publishing the events

- event type: a description of the event

Applying this hierarchy to the original flows, post-trade data would be published to the topic below, taking the asset class as “Cash Equities” (ce), the source system as PTG (post-trade feed generator), and the country as Singapore (sg), as that’s where I’m writing this from:

- acme/ce/apac/sg/sgx/ptg/trade
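Keeping every publisher on the same hierarchy is easier if topics are built in one place. The helper below is a hypothetical Python sketch: `build_topic` and `LEVELS` are illustrative names, and lower-casing each level and rejecting `/` (which would create an extra level) and the wildcard characters `*` and `>` are assumed conventions for this example, not requirements stated by Solace.

```python
# Hypothetical helper: the level order follows the acme/... hierarchy above.
LEVELS = ("asset_class", "region", "country", "exchange", "source_system", "event_type")


def build_topic(**levels: str) -> str:
    parts = ["acme"]
    for name in LEVELS:
        value = levels[name]  # KeyError if a level is missing
        # Assumed convention: no empty levels, no '/', no wildcard characters.
        if not value or any(c in value for c in "/*>"):
            raise ValueError(f"invalid value for {name}: {value!r}")
        parts.append(value.lower())  # assumed convention: lower-case levels
    return "/".join(parts)


topic = build_topic(
    asset_class="ce", region="apac", country="SG",
    exchange="sgx", source_system="ptg", event_type="trade",
)
print(topic)  # acme/ce/apac/sg/sgx/ptg/trade
```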

Consumers that want to receive this trade data can simply create a queue with a subscription to the same topic, adding wildcards as appropriate.
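The following Python sketch illustrates how a queue’s wildcard subscriptions select messages, based on a simplified reading of Solace’s wildcard rules: `*` as a whole level matches exactly one level, `*` at the end of a level (for example `v*`) matches any level starting with that prefix, and `>` as the last level matches one or more remaining levels. The `matches` function is hypothetical; in practice the broker does this matching, and edge cases may differ from this sketch.

```python
def matches(subscription: str, topic: str) -> bool:
    """Hypothetical, simplified Solace-style wildcard matching."""
    sub_levels = subscription.split("/")
    topic_levels = topic.split("/")
    for i, sub in enumerate(sub_levels):
        if sub == ">" and i == len(sub_levels) - 1:
            # '>' as the final level matches one or more remaining levels.
            return len(topic_levels) > i
        if i >= len(topic_levels):
            return False
        level = topic_levels[i]
        if sub == "*":
            continue  # '*' on its own matches exactly one level
        if sub.endswith("*"):
            # Prefix wildcard, e.g. 'v*' matches 'var' and 'violation'.
            if not level.startswith(sub[:-1]):
                return False
            continue
        if sub != level:
            return False  # literal level must match exactly
    return len(sub_levels) == len(topic_levels)


trade = "acme/ce/apac/sg/sgx/ptg/trade"
print(matches("acme/*/apac/sg/sgx/ptg/trade", trade))  # True: any asset class
print(matches("acme/ce/apac/>", trade))                # True: everything under APAC cash equities
print(matches("acme/ce/apac/sg/sgx/re/>", trade))      # False: risk-engine events only
```

A settlement queue might subscribe to the exact trade topic, while a data-warehouse queue could use a coarse subscription such as `acme/>` to capture everything.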

The risk engine consumes post-trade messages from the Solace event broker, performs calculations (such as margin, violations, and VaR), and publishes the results back to the event broker on the topics below for distribution to all the downstream systems:

- acme/ce/apac/sg/sgx/re/margin

- acme/ce/apac/sg/sgx/re/violation

- acme/ce/apac/sg/sgx/re/var
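Here is a hypothetical Python sketch of the risk engine’s consume, calculate, and publish loop. The margin rate, position limit, trade fields, and `publish` callback are all illustrative assumptions; the calculations are placeholders rather than real margin or violation models, and VaR is omitted. The point is only that results go back out on the `re` topics listed above.

```python
from typing import Callable

MARGIN_RATE = 0.10          # hypothetical flat margin rate
POSITION_LIMIT = 1_000_000  # hypothetical notional limit per account
BASE = "acme/ce/apac/sg/sgx/re"


def on_trade(trade: dict, exposures: dict, publish: Callable[[str, dict], None]) -> None:
    """Handle one trade event and publish placeholder risk results."""
    notional = trade["price"] * trade["quantity"]
    account = trade["account"]
    exposures[account] = exposures.get(account, 0.0) + notional
    # Placeholder margin: a flat percentage of accumulated exposure.
    publish(f"{BASE}/margin", {"account": account, "margin": exposures[account] * MARGIN_RATE})
    if exposures[account] > POSITION_LIMIT:
        # Placeholder violation: exposure above the hypothetical limit.
        publish(f"{BASE}/violation", {"account": account, "exposure": exposures[account]})


published = []
exposures = {}
sink = lambda topic, payload: published.append((topic, payload))
on_trade({"account": "A1", "price": 50.0, "quantity": 15_000}, exposures, sink)
on_trade({"account": "A1", "price": 50.0, "quantity": 6_000}, exposures, sink)
print([t.rsplit("/", 1)[1] for t, _ in published])  # ['margin', 'margin', 'violation']
```

In a real deployment `publish` would send guaranteed messages to the broker; here it just collects them in a list.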

Consumers can once again add the appropriate topic subscriptions to receive the messages they need. I highly recommend checking out our blog for more on best practices for designing a Solace topic hierarchy.

Acme’s trade distribution using Solace PubSub+ Platform as an event broker for distribution to downstream systems now looks like this:

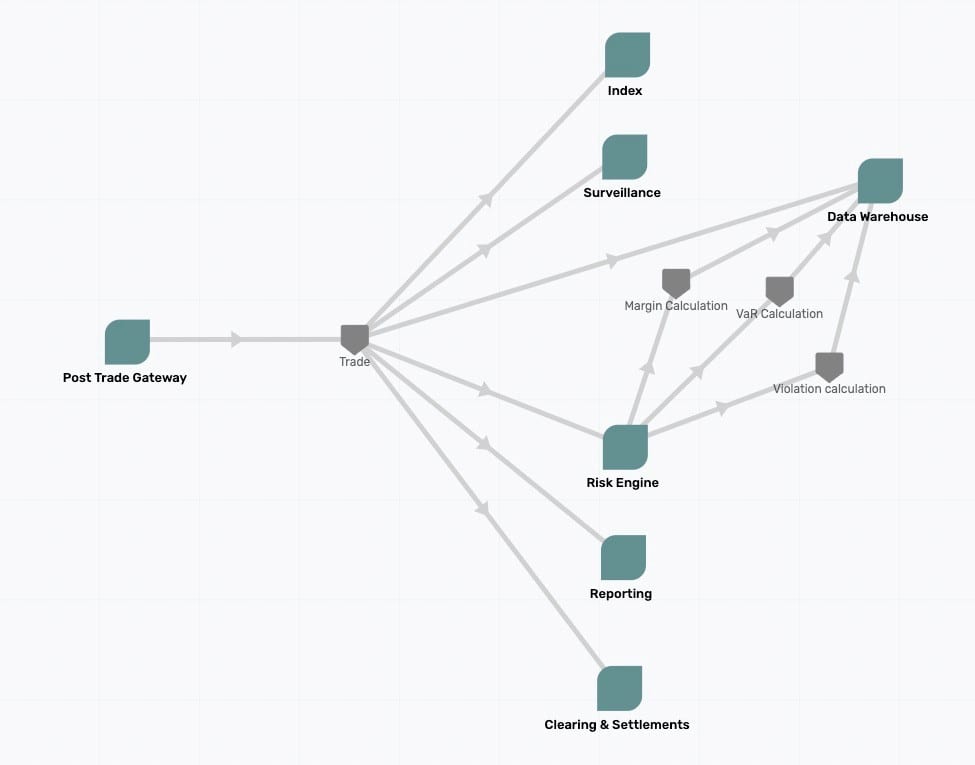

Visualizing and Managing Your Events Using an Event Portal

While the above diagram provides a simple illustration of the post-trade event distribution flow, as you onboard more downstream consumers it becomes harder to answer common questions such as:

- Which applications produce a particular event?

- Which applications consume a particular event?

- Is there a central repository for discovering existing events?

- Which events currently exist and which are deprecated?

- What is the relationship between applications and events?

PubSub+ Event Portal allows you to answer all these questions. You can model the flows between the various applications in the post-trade landscape via Solace brokers using the portal. For those familiar with REST API design, an event portal is analogous to the developer portal of the API world. Using PubSub+ Event Portal, you can design your event-driven architecture for your applications, as well as store all your events, topics and their schemas. The portal will help you visualize the relationships between applications and events, making event-driven applications and microservices easier to design, deploy, and evolve.

You can also use the event catalog to store a list of all the current events available on your broker. If a new downstream system needs to be onboarded to Solace PubSub+ Platform, its team can search the event portal for the event topics they are interested in, create and configure a queue via the console, and start streaming events in a matter of seconds!

I’ve modelled the event-driven flow described above in PubSub+ Event Portal:

Wrapping Up

The immediate benefits of adopting Solace PubSub+ Event Broker for post-trade distribution include:

- Central event backbone: Using Solace as the central event distribution backbone for the trade message flow to post-trade systems lets you offload the “heavy lifting” of event streaming and persistence to the event brokers. Client applications connecting to Solace PubSub+ Event Broker no longer each need to implement message sequencing, network disconnect recovery, logging, and monitoring, because these are delegated to the event broker. This allows Acme developers to concentrate on adding business value to their applications and improving customer experience.

- Dynamic event distribution: Using Solace topic routing and topic-to-queue mapping as discussed, trade messages published to the broker are dynamically routed to every consumer queue whose topic subscriptions match. Consumers can apply wildcards on their queue’s topic subscriptions, making the filtering as coarse-grained or fine-grained as required. New downstream consumers can be onboarded quickly, providing greater agility.

- High-speed persistence: Solace guaranteed messaging provides automatic message sequencing, persistence, and network disconnect handling to ensure zero loss of trade messages.

- Throttling: The shock absorber capabilities of the event broker allowed the post-trade system to handle any spikes in the incoming trade data, without any impact to the downstream systems.

- Stability and performance: PubSub+ is a purpose-built event distribution platform with deterministic performance characteristics even when processing millions of messages per second and in the face of misbehaving client applications.

Taking an event-driven approach to post-trade distribution offers these additional benefits:

- Real-time processing of post-trade data shortens the time between trade execution and settlement processing.

- Enabled Acme to consolidate their estate, reduce cost, and achieve operational simplicity.

- The Solace broker is cloud-ready and can be deployed on any cloud, enabling Acme to use public cloud providers’ machine learning services.

- Enabled further application modernization, acting as a catalyst to transform their downstream systems to a microservice-based architecture.

- Allowed Acme to build an event mesh that shares data across lines of business so they can build more innovative products for their business users.

Enterprises are transforming their applications to be event driven, and this is rapidly spreading across various industries. Any enterprise has a lot to gain from moving towards an event-driven architecture – greater agility, better flexibility, reduced time to market, and improved customer experience. PubSub+ Platform and PubSub+ Event Portal can be instrumental in this journey of envisioning and implementing a modern post-trade system.

Further Reading

- Blog Post: Event-Enabling Post-Trade Transaction Processing for Capital Markets

- Blog Post: Why You Need a Modernized Post-Trade System to Harness the Power of Reference Data in Financial Markets

- Solution Brief: Modernize and Future-Proof Post-Trade Processing with an Event Mesh

- Success Stories: C3 Post Trade

You can try PubSub+ Event Portal free! Sign up for your 60-day trial.


Shrikanth Rajgopalan
