Capital markets firms typically organize their operations into three core functions: front, middle, and back office. The front office is the revenue generation machine, whereas the middle and back office handle client servicing and settlements. The middle and back office do not generate revenue directly, but they are very significant functions. Any irregularities and inefficiencies here expose the institution to various forms of risk, such as the compliance risks that can arise from inadequate controls on financial transactions and the reputational risks that can arise from inefficient handling of transactions.

In typical front-to-back-office transaction processing across various asset classes, front office is typically lean and agile (achieving low latency and higher throughput) whereas middle office and back office are burdened with taking care of all the heavy lifting required to settle the trades as well as satisfying customers and regulators – a tough job indeed! Trades get executed in micro/milliseconds within front office, whereas the settlement cycles are still T+2 / T+3 for most of the markets. The reason? The complexities and business processes involved in transaction processing.

In this post, I will share our experiences working with customers in the middle office and back office transaction processing domain. We are seeing a strong desire among solutions architects, operations teams, and business stakeholders to improve straight-through processing (STP) by minimizing exceptions. Many of these exceptions occur due to incorrect or missing set-up of reference data, such as trades halting in STP because of:

- Missing accounts, sub-accounts;

- Missing / incorrect settlement instructions;

- Missing instruments; and,

- Allocation failures due to incorrect charges on client trades.

These challenges are a result of data (events) not reaching intended recipients in time. This also adds a high reconciliation burden on the operations team.

We see a paradigm shift in this domain. Business is demanding higher efficiencies and fewer faults and exceptions in post-trade transaction processing platforms. IT architects are looking at adopting more modern and agile approaches, such as asynchronous event-driven design, cloud adoption, and Infrastructure as Code (IaC). They are choreographing agile IT processes that respond to business events proactively, instead of orchestrating IT processes that are called to respond reactively. All of these efforts are ultimately about making post-trade transaction processing event-driven.

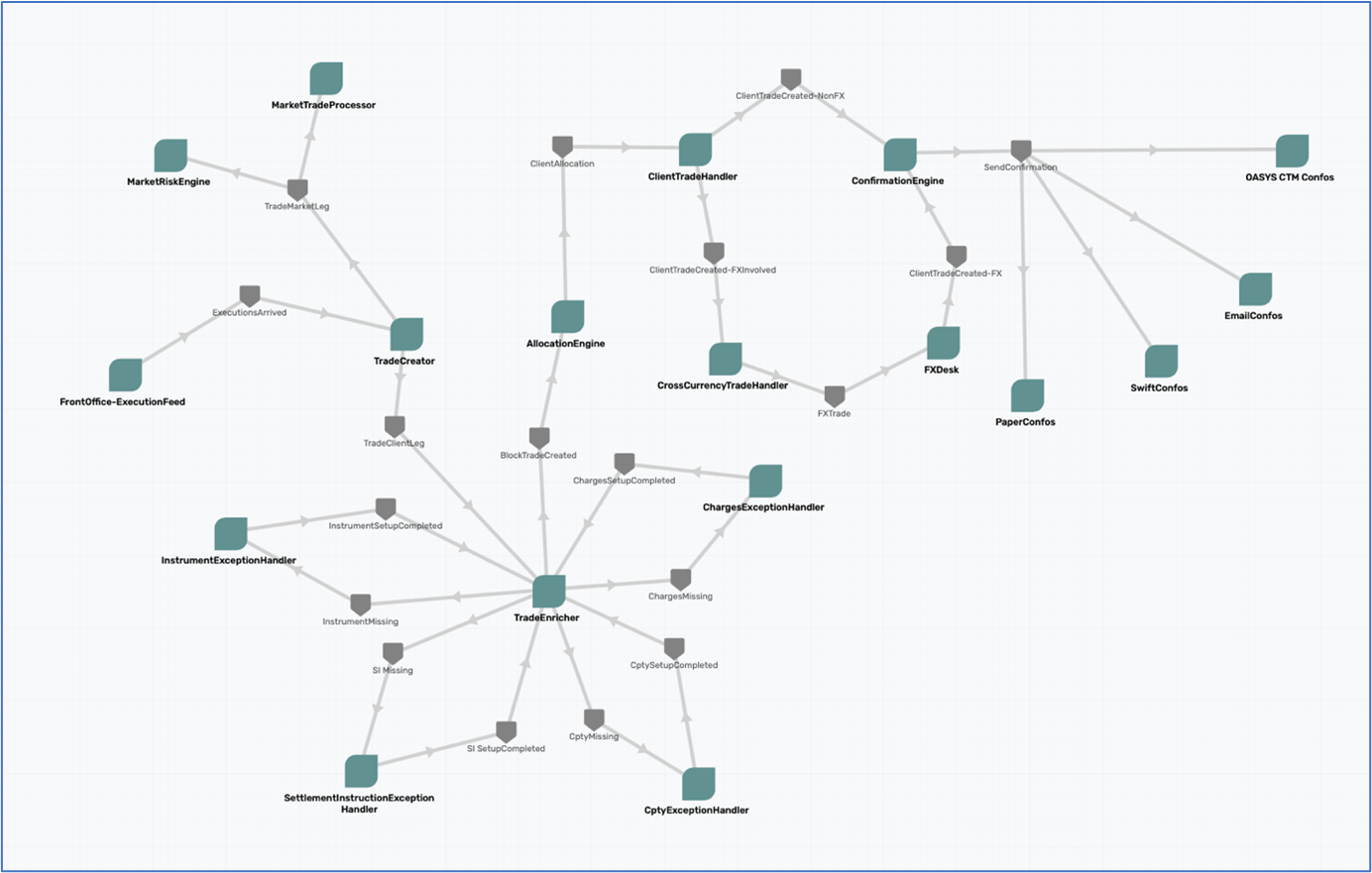

We are working with clients who are on this journey and who have been modernizing their legacy platforms towards event-driven architecture. We have been using the following Solace PubSub+ Event Portal domain model as a nice view of actors and events in the transaction processing world. Please note that while this is a simple generic model, components of it would be applicable to most of the organizations handling global post-trade transaction processing.

Let’s briefly look at some of the components, the typical challenges faced, and how Solace PubSub+ addresses them:

Trade Creation

Trade creation is a coupling point between front office and middle office. This is an area of typical impedance mismatch, with an extremely fast and lean front office handing over executions to a slower middle office.

Most traditional implementations use relational databases, legacy messaging systems, and/or file transfer as a handoff mechanism for executions from front office to middle office. This becomes a bottleneck during volatile market conditions. When the market is roaring, front office is back-pressured by middle office because the middle office is not built to keep up with that kind of volume. As an architect, would you size for the peak? This is a typical sizing conundrum. PubSub+ provides a fast spool where front office can drop executions at the rate it wants to without being back-pressured by the downstream middle office. In this way, it effectively acts as a shock absorber in the middle.
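To make the shock-absorber idea concrete, here is a toy simulation in plain Python. It is not Solace code and the numbers are made up for illustration: `arrivals_per_tick` stands for fills published by front office in each time slice, and `drain_per_tick` for the fixed rate at which middle office can consume them. The spool simply absorbs the difference.

```python
def simulate_spool(arrivals_per_tick, drain_per_tick):
    """Return the spool depth after each tick.

    The publisher is never blocked: every arrival is accepted, and the
    consumer drains at most `drain_per_tick` messages per tick.
    """
    depth = 0
    depths = []
    for arrivals in arrivals_per_tick:
        depth += arrivals                    # front office drops its fills
        depth -= min(depth, drain_per_tick)  # middle office drains what it can
        depths.append(depth)
    return depths


# A burst of 900 fills per tick for two ticks against a consumer sized for 300.
burst = [100, 900, 900, 100, 100, 100]
print(simulate_spool(burst, drain_per_tick=300))
```

The depth rises during the burst and falls once the market calms down, which is why the consumer can be sized for sustained load rather than for the peak, as long as the spool is large enough to hold the backlog.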


Most of the post-trade transaction processing entities are centralized in a region and that regional entity services various regional execution venues such as exchanges, dark pools and OTC desks. For example, a global bank would have their trading execution operations in each significant country, but they most often will have a regional transaction processing entity in NY (for Americas), London (EMEA) and Singapore (APAC).

Solace topic routing is the key component for routing global executions appropriately.

For example:

Let’s consider the topic definition for Front Office to Trade Creation microservice:

acme/v1/<asset class>/<region>/<country>/<exchange>/<source>/<system>

v1 ➡ version of the topic (refer to the topic best practices)

Asset Class ➡ {Cash Equity, Futures, Options, ETF, Bond…}

Region ➡ {Americas, EMEA, APAC}

Country ➡ ISO Country code

Exchange ➡ ISO Exchange Code

Source ➡ {DMA, DSA, etc.}

System ➡ FO OMS / EMS System name

Solace topic routing and topic-to-queue mapping help in architecting event distribution by using this metadata.

Note: a direct market access (DMA) order goes straight into the market without any changes to the client order. Direct strategy access (DSA) orders will be routed to the Algo Server for strategy execution. Subject to the algo strategies offered by the broker firm, a DSA order can result in many “slices” of child orders to the market.

Using the above convention:

- A DMA order execution fill for an SGX equities order will be published on the topic acme/v1/eq/apac/SG/SGX/DMA/xyz

- A DSA order execution fill for a BSE equities order will be published on the topic acme/v1/eq/apac/IN/BSE/DSA/abc
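As a minimal sketch of how a publisher might assemble topics under this convention, the helper below joins the levels in the documented order. The `FillTopic` class and its validation rules are hypothetical, written for illustration only; they are not part of any Solace API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FillTopic:
    """Hypothetical value object for the front-office fill topic levels."""
    asset_class: str
    region: str
    country: str
    exchange: str
    source: str
    system: str
    root: str = "acme"
    version: str = "v1"

    def to_topic(self) -> str:
        levels = [self.root, self.version, self.asset_class, self.region,
                  self.country, self.exchange, self.source, self.system]
        for level in levels:
            # A published topic should be concrete: no empty levels,
            # no level separators, and no wildcard characters.
            if not level or any(ch in level for ch in "/*>"):
                raise ValueError(f"invalid topic level: {level!r}")
        return "/".join(levels)


print(FillTopic("eq", "apac", "SG", "SGX", "DMA", "xyz").to_topic())
print(FillTopic("eq", "apac", "IN", "BSE", "DSA", "abc").to_topic())
```

Keeping topic construction in one place makes it harder for individual publishers to drift from the agreed hierarchy.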

The key point to note here is this: unlike other messaging / event brokers, topics are not created by issuing any command; they are just annotations on the event message, and whoever is subscribing to that topic or a matching wildcard will receive the message / event. But what happens if the subscriber application is not online? Will it lose the event? That’s where topic-to-queue mapping comes to help. As I said earlier, topic routing will help in dynamically segregating the flow to different microservices. We could use the above example to see how this can be achieved.

Let’s say we have one microservice with logic for creating the equity trades for DMA orders, and another microservice for creating DSA orders. To handle these fills, we could create a separate queue for each service: TradeCreateFromDMA and TradeCreateFromDSA.

- TradeCreateFromDMA subscribes to acme/v1/eq/*/*/*/DMA/*

- TradeCreateFromDSA subscribes to acme/v1/eq/*/*/*/DSA/*

These queues will spool the DMA and DSA equity fills across all regions, countries, and exchanges. Imagine the volume these queues will have, because each microservice is handling the global load.
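The toy in-memory broker below sketches the topic-to-queue idea: each queue carries a subscription, and fills are spooled into every matching queue whether or not a consumer is attached. `ToyBroker` and `matches` are hypothetical names for illustration, not the Solace API, and the matcher is deliberately simplified to handle only a whole-level `*` wildcard.

```python
from collections import deque


def matches(subscription: str, topic: str) -> bool:
    """Simplified matching: '*' stands for exactly one whole topic level."""
    sub_levels = subscription.split("/")
    topic_levels = topic.split("/")
    return len(sub_levels) == len(topic_levels) and all(
        s == "*" or s == t for s, t in zip(sub_levels, topic_levels)
    )


class ToyBroker:
    def __init__(self):
        self._queues = {}  # queue name -> (subscription, spool)

    def add_queue(self, name: str, subscription: str) -> None:
        self._queues[name] = (subscription, deque())

    def publish(self, topic: str, payload: dict) -> None:
        # The publisher only knows the topic; the queue mapping does the routing.
        for subscription, spool in self._queues.values():
            if matches(subscription, topic):
                spool.append((topic, payload))

    def depth(self, name: str) -> int:
        return len(self._queues[name][1])

    def receive(self, name: str):
        return self._queues[name][1].popleft()


broker = ToyBroker()
broker.add_queue("TradeCreateFromDMA", "acme/v1/eq/*/*/*/DMA/*")
broker.add_queue("TradeCreateFromDSA", "acme/v1/eq/*/*/*/DSA/*")

# No consumer is connected yet; the fills wait in the queues.
broker.publish("acme/v1/eq/apac/SG/SGX/DMA/xyz", {"fill_id": 1})
broker.publish("acme/v1/eq/apac/IN/BSE/DSA/abc", {"fill_id": 2})
broker.publish("acme/v1/eq/apac/SG/SGX/DMA/xyz", {"fill_id": 3})

print(broker.depth("TradeCreateFromDMA"), broker.depth("TradeCreateFromDSA"))
```

When the DMA microservice comes online it calls `receive` and gets the fills in the order they were spooled.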

Fast forward as the volume grows and you figure out the microservice is getting overwhelmed with the load, so you want to scale out the microservice. If you are using a modern platform like Kubernetes, it’s fairly straightforward to increase the pods to handle the increased load. Because Solace queues support round-robin dispatch, the scale-out would be seamless.

Taking it to the next level, customers also use PubSub+ Event Portal’s RESTful API (read more in this blog post) to probe the queue depth. Once it crosses a high watermark threshold, they invoke the scale-out function of the microservice deployment platform (Kubernetes, for example) to spin up more instances of the pod automatically. Once the spool decreases under low watermark, they instruct the platform to shrink the pods. This is runtime elastic scaling in action using Solace queues and REST API.
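The watermark logic itself is small. The sketch below shows only the decision step, under assumed threshold values; `desired_replicas` is a hypothetical helper, and fetching the queue depth over REST and calling the deployment platform's scaling API are deliberately left out.

```python
def desired_replicas(queue_depth: int, current: int, *,
                     high_watermark: int, low_watermark: int,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Decide the next replica count from the spooled message count.

    Scaling out above the high watermark and scaling in only below the
    low watermark leaves a band in between where nothing changes, which
    avoids flapping when the depth hovers around a single threshold.
    """
    if low_watermark >= high_watermark:
        raise ValueError("low_watermark must be below high_watermark")
    if queue_depth > high_watermark:
        return min(current + 1, max_replicas)
    if queue_depth < low_watermark:
        return max(current - 1, min_replicas)
    return current


# Assumed thresholds, for illustration only.
limits = dict(high_watermark=10_000, low_watermark=1_000)
print(desired_replicas(12_000, 2, **limits))  # backlog building up
print(desired_replicas(5_000, 3, **limits))   # inside the band
print(desired_replicas(500, 3, **limits))     # backlog drained
```

A control loop would call this on a timer with the latest depth and apply the result only when it differs from the current replica count.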

Let’s extend the above example. You may want to have different rules for aggregating executions for different markets. In this scenario, you could simply create market specific queues, subscribe to the right topics, and then have the market-specific microservice consume the fills from the corresponding queue.

So the above queue and subscription would look like:

- TradeCreateFromDMAForSG subscribes to acme/v1/eq/*/SG/*/DMA/*

- TradeCreateFromDMAForHK subscribes to acme/v1/eq/*/HK/*/DMA/*

- TradeCreateFromDMAForUS subscribes to acme/v1/eq/*/US/*/DMA/*

- TradeCreateFromDMAForUK subscribes to acme/v1/eq/*/UK/*/DMA/*

One important note here is that you are changing the way fills are routed to different microservices without affecting the FO fills publisher at all. It continues to publish on the established topic model, while on the consuming end solutions architects are free to route the events the way they want and scale the load on microservices in or out.

Just to emphasize the power of topic routing, let’s consider a new regulation has come in that requires you to report the daily average execution prices along with a bunch of other aggregated data on executions. We can simply create a queue:

- ExecutionFillsAnalyticsReporting subscribes to acme/v1/>

Note: read more about using wildcards

This will spool all the executions globally, which can then be fed into your compliance engine to derive the necessary reports.
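To illustrate the fan-out, the sketch below works out which queues would receive a copy of a given fill when market-specific queues and the analytics queue coexist. It approximates Solace wildcard matching with regular expressions (a trailing `*` in a level as a within-level wildcard, and `>` at the last level as "one or more remaining levels"); this is a simplified model for illustration, and `subscription_to_regex` and `queues_for` are hypothetical helpers.

```python
import re


def subscription_to_regex(subscription: str) -> re.Pattern:
    """Translate a subscription into a regex (simplified wildcard model)."""
    levels = subscription.split("/")
    parts = []
    for i, level in enumerate(levels):
        if level == ">" and i == len(levels) - 1:
            parts.append(".+")                               # one or more levels
        elif level.endswith("*"):
            parts.append(re.escape(level[:-1]) + "[^/]*")    # within one level
        else:
            parts.append(re.escape(level))                   # literal level
    return re.compile("/".join(parts))


QUEUE_SUBSCRIPTIONS = {
    "TradeCreateFromDMAForSG": "acme/v1/eq/*/SG/*/DMA/*",
    "TradeCreateFromDMAForHK": "acme/v1/eq/*/HK/*/DMA/*",
    "ExecutionFillsAnalyticsReporting": "acme/v1/>",
}


def queues_for(topic: str) -> list[str]:
    """Return the names of all queues whose subscription matches the topic."""
    return sorted(
        name for name, sub in QUEUE_SUBSCRIPTIONS.items()
        if subscription_to_regex(sub).fullmatch(topic)
    )


print(queues_for("acme/v1/eq/apac/SG/SGX/DMA/xyz"))
print(queues_for("acme/v1/eq/apac/IN/BSE/DSA/abc"))
```

The SGX fill lands in both the Singapore trade-creation queue and the analytics queue, while the BSE DSA fill reaches only analytics; the publisher is unchanged in either case.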

So what Solace offers here is a total decoupling between how applications are producing the events and how consumers are consuming them in a fan-out fashion.

To summarize, Solace enables:

- Topic-based event routing to multiple queues for processing the same event;

- Microservices to be scaled in/out using queues;

- Easily adding new applications that wiretap events already flowing on the bus; and,

- Elastic scale in/out.

Reference Data Synchronization

Every organization has a distinct setup of their reference data team. Some have a single global store of reference data, others choose to have a regional hub – one for each region (Americas, EMEA, APAC). Either way, there is a need to distribute events to multiple applications, regionally or globally.

Solace Dynamic Message Routing (DMR) helps build a global event mesh to distribute data to applications from a central reference data store. Golden copy/reference data teams struggle with distributing data to the intended recipients. The common approach is to use files to distribute the data or to use legacy messaging technologies which do not have robust topic-based distribution semantics. This causes delayed sync of the data or inconsistent records, which cause exceptions in straight-through processing.

As mentioned earlier, a central team manages all the reference data and is responsible for notifying the create, update, delete (inactive) events on the data. Timely dissemination of this information is vital for STP and the overall client experience. Let’s say a client’s settlement instruction or confirmation email address is updated. It’s expected that the change is notified to confirmation and settlement modules so they act on the right information.

Solace topic routing builds a backbone to distribute the reference data events to all intended recipients in real-time (locally as well as globally using DMR). The rich topic hierarchy can give semantics to the payload and can be used to regionally route the traffic based on subscriptions.

For example:

Let’s consider the following topic scheme for reference data publication:

acme/v1/<asset class>/<data type>/<region>/<operation>

Asset class ➡ {Equities, Fixed Income, Commodities}

Data type ➡ {Account, Subaccount, Instrument, Settlement Instruction, Confirmation Instruction}

Region ➡ {Americas, EMEA, APAC}

Operation ➡ {Create, Update, Delete}

The reference data application will publish the events appropriately on the right topic, and applications such as trade enrichers, confirmation engines, and allocation engines will subscribe to the right set of topics to receive the reference data events. Solace takes care of fanning-out the events to multiple applications based on their entitlements with the help of Access Control Lists, OBO subscription, and in-order guarantees.
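On the consuming side, a subscriber can read the data type and operation straight from the topic and keep a local copy in sync. The sketch below assumes lowercase level values such as `settlement_instruction` and `emea`, and a payload carrying an `id` field; those values, and the `parse_ref_data_topic` and `apply_event` helpers, are assumptions for illustration rather than anything prescribed by the scheme above.

```python
from typing import NamedTuple


class RefDataTopic(NamedTuple):
    asset_class: str
    data_type: str
    region: str
    operation: str


def parse_ref_data_topic(topic: str) -> RefDataTopic:
    """Split acme/v1/<asset class>/<data type>/<region>/<operation>."""
    levels = topic.split("/")
    if len(levels) != 6 or levels[:2] != ["acme", "v1"]:
        raise ValueError(f"unexpected reference data topic: {topic!r}")
    return RefDataTopic(*levels[2:])


def apply_event(cache: dict, topic: str, payload: dict) -> None:
    """Apply one create/update/delete event to a local reference data cache."""
    meta = parse_ref_data_topic(topic)
    key = (meta.data_type, payload["id"])
    if meta.operation in ("create", "update"):
        cache[key] = payload
    elif meta.operation == "delete":
        cache.pop(key, None)
    else:
        raise ValueError(f"unknown operation: {meta.operation!r}")


cache: dict = {}
base = "acme/v1/eq/settlement_instruction/emea"
apply_event(cache, f"{base}/create", {"id": "SI-1", "custodian": "Bank A"})
apply_event(cache, f"{base}/update", {"id": "SI-1", "custodian": "Bank B"})
print(cache[("settlement_instruction", "SI-1")]["custodian"])
```

This is also why in-order delivery matters: if the update were applied before the create, the cache would end up holding the stale record.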

Trade Enrichment

Trade enrichment is when the trade created in the trade creation domain has references to counterparties and other instruments which need to be enriched (for the confirmations details, transaction charges levied to counterparty, and settlement instructions). This is an area where most of the STP breaks occur.

For example:

- a missing instrument would cause a transaction to halt

- a missing charge set-up on block / sub-account would cause a transaction to halt

- even worse: an updated charge did not get reflected / synced with the trade enrichment microservice and therefore calculates the wrong charge (expect client complaints)

The client onboarding and reference data team is responsible for sourcing and maintaining the golden copy of the data required for key areas of STP. Quite often the data from the golden copy store does not sync with post-trade transaction processing components, which leads to STP exceptions. PubSub+ provides a reliable event distribution backbone for in-order delivery of the golden copy data events.

As shown in the diagram, the enrichment process handles multiple data points and may raise exceptions if the necessary data is not available.

For example, InstrumentExceptionHandler may raise an event indicating that the details for the instrument ID on the trade are missing in the reference data. The reference data application will subscribe to an appropriate topic to be notified if such an exception occurs. The reference data operations team will perform the necessary actions and provision the missing instrument, which will publish an event notifying the exception handler to resume the trade enrichment process.

Note: an event-driven microservice enables the application to act on the event instead of querying and polling for the outcome.

This same principle applies for other reference data elements, such as settlement instructions and charges.
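The park-and-resume flow described for missing instruments can be sketched as follows. This is an in-memory illustration only: `TradeEnricher`, the exception topic name, and the payload fields are all hypothetical, and the `published` list stands in for publishing to the broker.

```python
class TradeEnricher:
    """Parks trades with unknown instruments and resumes them on an event."""

    # Hypothetical topic for instrument exceptions; not from the original model.
    EXCEPTION_TOPIC = "acme/v1/eq/exception/instrument/missing"

    def __init__(self, instruments: dict):
        self.instruments = dict(instruments)  # instrument id -> details
        self.parked = {}                      # instrument id -> waiting trades
        self.published = []                   # stand-in for broker publishes
        self.enriched = []

    def on_trade(self, trade: dict) -> None:
        instrument = self.instruments.get(trade["instrument_id"])
        if instrument is None:
            # Park the trade and raise an exception event instead of polling.
            self.parked.setdefault(trade["instrument_id"], []).append(trade)
            self.published.append(
                (self.EXCEPTION_TOPIC, {"instrument_id": trade["instrument_id"]})
            )
            return
        self.enriched.append({**trade, "instrument_name": instrument["name"]})

    def on_instrument_created(self, instrument: dict) -> None:
        # Reference data published the missing instrument: resume parked trades.
        self.instruments[instrument["id"]] = instrument
        for trade in self.parked.pop(instrument["id"], []):
            self.on_trade(trade)


enricher = TradeEnricher({"INS-1": {"id": "INS-1", "name": "Acme Corp"}})
enricher.on_trade({"trade_id": "T1", "instrument_id": "INS-2"})
print(len(enricher.parked["INS-2"]), len(enricher.enriched))

enricher.on_instrument_created({"id": "INS-2", "name": "Beta Ltd"})
print(enricher.enriched)
```

The enrichment service never queries the reference data store in a loop; it reacts once when the exception is raised and once when the create event arrives.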

Allocation Engine

The allocation engine takes care of block trade allocation to sub-accounts for the counterparty. Depending on the counterparty maturity, there may be multiple ways in which allocation processes occur. It could be done over DTCC’s Omgeo-based allocation and matching process, or there could be standing instructions provided by the counterparty. In either case, there will be a separate microservice handling each type of allocation based on capacity set-up (and this should also be tied to the right topic hierarchy). The allocations workflow creates client trades which need to be confirmed and settled.

Confirmation Engine

The confirmation engine handles various confirmation mechanisms based on the client’s static reference data. The engine may be composed of different microservices each handling a specific way of confirmation, such as Omgeo, email, SWIFT, or more traditional paper-based confirmation. A well-built topic hierarchy with “confirmation type” as a topic segment will enable routing the confirmations to appropriate microservices by Solace.

To conclude, the key highlights and recommendations for modernizing your post-trade transaction processing functionality are:

- Consider modern microservices patterns and encapsulate business functionality at the appropriate granularity;

- Think through your data model and spend time building patterns for event-driven communication using appropriate topic hierarchies;

- Use PubSub+ Event Portal to choreograph events among microservices. This will be your one-stop-shop to create, update and govern events, topics and schemas;

- Leverage Solace monitoring capabilities to keep tabs on how events are flowing, and to identify slow consumers so that you can scale in / out manually or automatically; and,

- Enforce entitlements using Solace’s rich and granular access control lists and subscription management.

If done properly, I am confident your organization will gain the data distribution visibility it needs to have fewer exceptions in the STP flow, higher compliance, and better throughput.

Related Reading:

- Blog Post: Why You Need a Modernized Post-Trade System to Harness the Power of Reference Data in Financial Markets

- Solution Brief: Modernize and Future-Proof Post-Trade Processing with an Event Mesh

- White Paper: How to Modernize Your Payment Processing System for Agility and Performance

- Success Stories: C3 Post Trade and Grasshopper


Vidyadhar Kothekar
