The Fundamental Review of the Trading Book (FRTB), aka Basel 4, has been the hot topic in the banking industry since its introduction in 2016. The implementation of FRTB, along with regional variants, requires a fundamental shift in the way banks evaluate risk and structure and run their trading business.

The regulation draws a clear distinction between the trading book and the banking book, and covers trading risk more completely when determining the capital an organization must hold against those risks. It is much more prescriptive than previous regulations because its aim is to reduce systemic risk within the financial system. That should be good for the organizations that fall under its umbrella, so it is better seen as a business benefit than as a chore.

While banks have been considering the implementation and impact of FRTB, change in general has continued:

- On the world stage we have seen an increasing impact of climate change and a rise in political populism.

- From a business and technology perspective, we have seen FinTech’s rise and the changing focus of the hyper-scalers.

- On the regulatory front, we have seen additional regulatory tests such as the Comprehensive Capital Analysis and Review (CCAR) and Global Market Shock (GMS), as well as more general regulations: GDPR governs what personal data you can hold and how you are allowed to use it, and data residency regulations govern where you are allowed to store that data.

In a world that is constantly changing, it is now necessary to treat the evolving regulatory landscape as a core part of the business. Basel 4 needs to be live in 2023, and Basel 5 and 6 will follow. It therefore makes sense to treat regulatory change as “business as usual” and for organizations to architect their systems strategically to accommodate it, so they can reduce the impact on their business when new regulations are introduced.

Making the right choices in the implementation of FRTB can help optimize the amount and cost of capital that must be held. The right implementation choices can also provide improved efficiencies, services and capabilities that can be used across the organization, making it more adaptable and flexible to change, whether driven by market forces or regulation.

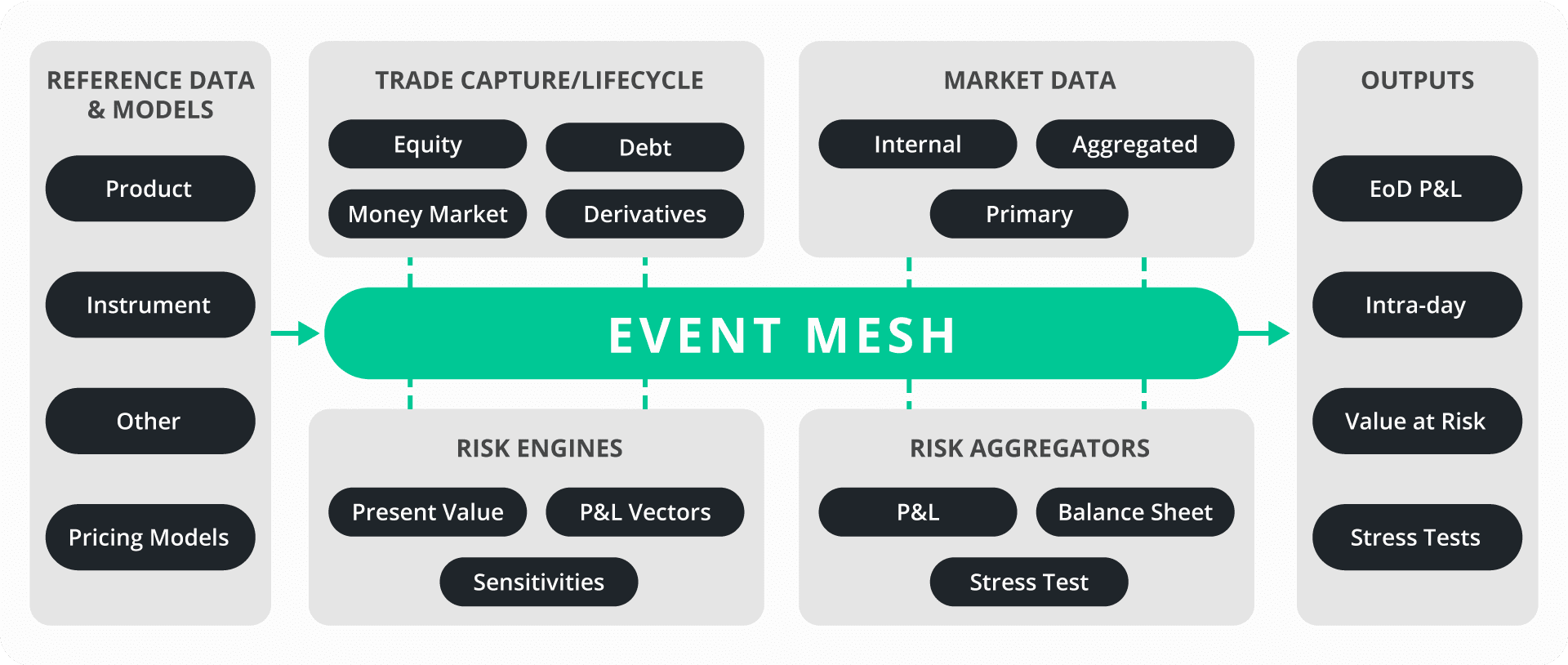

Calculating Risk – Segmenting and Coupling

Prior to the Basel 4 (FRTB) proposal, the approach to calculating risk was essentially a bank-wide choice. If a bank was approved to use the internal model approach (IMA), it used IMA to calculate all trading risk; if not, it used the standardised approach (SA).

FRTB, set out in the 2015 publication (revised 2019), changes the way the SA and IMA risk models are calculated. The changes make the calculated risks more relevant to market events, and they make SA fairer and a credible alternative to IMA.

Within FRTB, the SA calculation is mandatory because it gives regulators a common measure for comparing organizations. IMA is an option, but no longer a bank-wide one: it is now chosen on a per-trading-desk basis. A bank must provide evidence (back-testing) and seek approval for every trading desk for which it wishes to use IMA.

In addition to having to handle the more complex calculations, the ‘per-desk’ segmentation forces banks to look at the structure and size of their trading desks. Optimizing desks allows banks to optimize the capital they are required to hold. However, it creates a coupling, or dependency, between the front office (business) and risk and finance. There is also a possibility that desks may move between IMA and SA as trading patterns change. The result is that the optimization of desks is unlikely to be a one-time activity.

Removing or reducing the effects of this coupling is a key implementation requirement to maintain flexibility and keep regulatory costs to a minimum.
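One way to picture the per-desk choice is to hold each desk's approach as data rather than wiring it into the calculation code. The following is a minimal sketch under stated assumptions: the desk names, the charge figures and the `Desk` structure are all hypothetical, and the selection rule is an illustration only, not the regulatory aggregation formula.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class Desk:
    """Hypothetical per-desk record; all figures are illustrative."""
    name: str
    sa_charge: float              # SA is calculated for every desk
    ima_charge: Optional[float]   # None when no internal model has been run
    ima_approved: bool            # approval granted and back-testing passed


def approach_for(desk: Desk) -> str:
    """IMA applies only to desks that are approved and have an IMA result."""
    if desk.ima_approved and desk.ima_charge is not None:
        return "IMA"
    return "SA"


def applied_charge(desk: Desk) -> float:
    """Return the charge from whichever approach applies to this desk."""
    if approach_for(desk) == "IMA":
        assert desk.ima_charge is not None
        return desk.ima_charge
    return desk.sa_charge


desks = [
    Desk("rates", sa_charge=120.0, ima_charge=90.0, ima_approved=True),
    Desk("fx", sa_charge=80.0, ima_charge=70.0, ima_approved=False),
    Desk("credit", sa_charge=150.0, ima_charge=None, ima_approved=False),
]

summary: Dict[str, Tuple[str, float]] = {
    d.name: (approach_for(d), applied_charge(d)) for d in desks
}
```

Because the approach is derived from desk data, a desk that loses or gains approval changes its result without any change to the calculation code, which is the kind of decoupling the section argues for.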

Regulatory Overlap – Services vs Silos

While the reach of FRTB is significant in terms of its impact on the organization, it overlaps with other regulations from both a data input and a calculation perspective. Therefore, when implementing regulatory solutions, including those deployed for FRTB, it makes sense for organizations to avoid deploying regulatory silos.

Instead, organizations should seek to reuse capabilities where possible and build capabilities that can serve multiple requirements. Examples of this include the calculation of P&L vectors, differing liquidity horizons, sensitivities, etc. Building and reusing capabilities favours centralized services for data distribution, normalization, compute, calculations, and storage. This enables organizations to realize economies of scale, reducing capital expenditure and reducing impact on return on equity (ROE).

In addition, the provision of reusable, flexible, adaptable services, that allow users to ‘self-serve’ using role-based access control (RBAC), gives the organization the ability to plug-in new calculations and new data feeds and support future requirements without extensive rearchitecting or infrastructure reconfiguration.

Data Storage & Compute

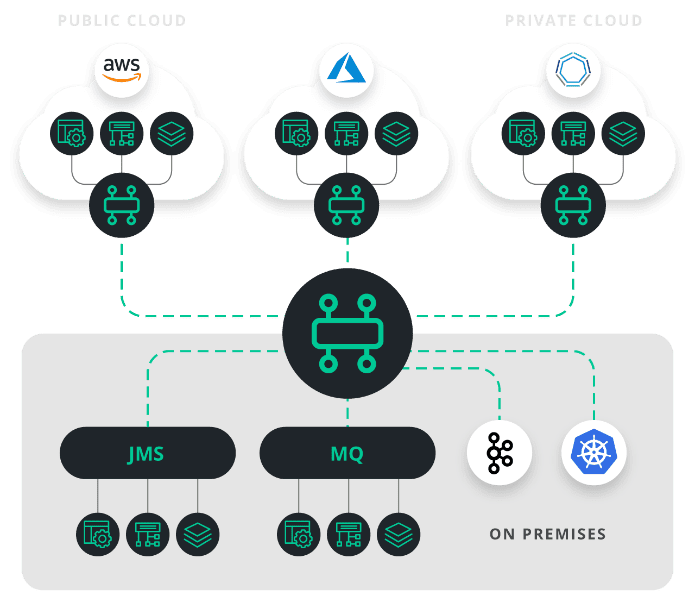

The volume of data, along with the computing power needed to run calculations on that data, is another FRTB challenge that can cause organizations problems. Moving the storage and the workload to the cloud seems an obvious choice, but it can bring its own problems, including data transport, data security, cloud provider choice and data residency.

The inclusion of multiple environments, spread between on-premises and perhaps multiple clouds to satisfy data residency requirements, complicates the data transport requirement. Minimizing the complication of moving data between environments while preserving the flexibility to include new distribution requirements, by providing a self-serve capability with RBAC, will speed up deployments and minimize costs in this area.

Data Quality is Key

The calculations and optimizations required for FRTB compliance, and for other regulations, rely on high quality data. This is typically where financial institutions struggle. All banks have a large quantity of data, but how many can be sure of the quality of that data?

A large proportion of the complaints from regulators, and of the fines handed out by the FCA for failing to properly report MiFID II transactions, stem from poor quality data.

Adding to the data problem, FRTB specifies that back-testing should include data with a time horizon that stretches back 10 years. Do banks have this much high-quality data to support the requirement? If not, do banks buy additional data from vendors, or do they pool data with other organizations to fill in the gaps?

Adding new sources, and/or pooling data, is not enough in itself. Without a good data governance strategy, the action simply compounds the problem.

The data volume and data quality issues are amplified by the fact that organizations will use external data points to derive many internal data points that are used in the risk process. What is the quality of the derived data?

Organizations need to provide much more focus on data governance on an end-to-end basis for all flows. The data, information and event flows involved cover all sources within the organization including market data, reference data and pricing data. Quality must be maintained at all points in the flows through acquisition, validation, normalization, and processing.
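To make "quality at every point in the flow" concrete, a validation step can return a list of issues for each record so that bad data is quarantined rather than passed downstream. This is a minimal sketch only: the `PriceTick` fields, the five-minute staleness limit and the individual rules are assumptions chosen for illustration, not rules taken from FRTB or from any particular product.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List, Optional


@dataclass
class PriceTick:
    """Hypothetical market data record."""
    instrument_id: str
    price: Optional[float]
    currency: str
    observed_at: datetime
    source: str


def validate(tick: PriceTick, now: datetime,
             max_age: timedelta = timedelta(minutes=5)) -> List[str]:
    """Return the quality issues found; an empty list means the tick passes."""
    issues: List[str] = []
    if not tick.instrument_id:
        issues.append("missing instrument_id")
    if tick.price is None:
        issues.append("missing price")
    elif tick.price <= 0:
        # Illustrative rule for an equity-style price.
        issues.append("non-positive price")
    code = tick.currency
    if len(code) != 3 or not code.isalpha() or not code.isupper():
        issues.append("currency is not a 3-letter upper-case code")
    if tick.observed_at > now:
        issues.append("timestamp in the future")
    elif now - tick.observed_at > max_age:
        issues.append("stale observation")
    return issues


now = datetime(2023, 1, 2, 12, 0, tzinfo=timezone.utc)
good = PriceTick("XS0000000001", 101.25, "USD", now - timedelta(minutes=1), "vendor-a")
bad = PriceTick("", -1.0, "usd", now - timedelta(hours=1), "vendor-b")

good_issues = validate(good, now)
bad_issues = validate(bad, now)
```

Recording the `source` alongside each issue is what allows quality to be traced back to the point in the flow where it degraded.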

Realizable Benefits of Rearchitecting for FRTB

The realizable benefits of rearchitecting for FRTB compliance are reusable for current and future regulatory projects. The rationalization, simplification, and governance of the deployed infrastructure should provide:

- A rationalized front-office and risk infrastructure

- The ability to realize hybrid and multi-cloud deployments where desirable

- The ability to offer “market-data as a service” and “reference data as a service”

- An enterprise-wide “standard data model”

- Data governance with a searchable data/event catalog

- Data lineage capabilities

- RBAC-controlled self-service ability to manage and control consumption and production of data/events

- A design-to-code toolchain option

- A selectable event tracing capability (for non-repudiation)

The desirable attributes and deliverables listed above rely on the ability to transport data, in a controlled manner, between data producers and data consumers, no matter where they reside in the organization and no matter how they are deployed, while simultaneously being able to ascertain and prove the quality and lineage of the data being exchanged. This is key to enabling future flexibility and proving conformance.

Data governance is the hard part, but it is a key component of a successful implementation. Organizations should put enough weight on data governance and controlled access to events/data to be able to satisfy the regulators about the quality of their submissions. This will ease the regulatory burden in the long run and mitigate the risk of fines for incomplete reporting due to data quality issues.

Solving Data Governance and Data Transport for FRTB+ and Hybrid and Multi Cloud

Solace has a proven track record of helping organizations realize effective event-driven architectures (EDA) spanning multiple locations, including private and public cloud. For example, Solace has spent the last 10+ years helping financial organizations define and control data flows for highly available, global networks in application domains such as price distribution, FX and FI. It has also helped them collect distributed data from on-premises trading systems for market risk and P&L systems that started on premises and are now moving to the cloud.

The Solace solution provides effective controlled data governance and data movement throughout an organization whether local or global in nature. From the Solace viewpoint, making EDA effective means providing:

1. An enterprise grade resilient, robust, secure, and highly available capability.

2. Data governance tools.

3. The ability to classify, catalog and search for data/events/applications.

4. The ability to control access to events/data/applications in a fine-grained manner rather than coarse ‘firehose’ topics.

5. The ability to audit/discover deployed data/event flows.

6. An event transport layer that learns and intelligently routes events/data between publishers and subscribers.

7. The support for all quality of service guarantees and all communication exchange patterns.

8. Support for all deployment environments whether on-premises or in private/public cloud with the ability to centrally manage and monitor the distributed infrastructure.

9. Rich integration of third-party products.

10. Tooling to support auto-code generation for developers via AsyncAPI.

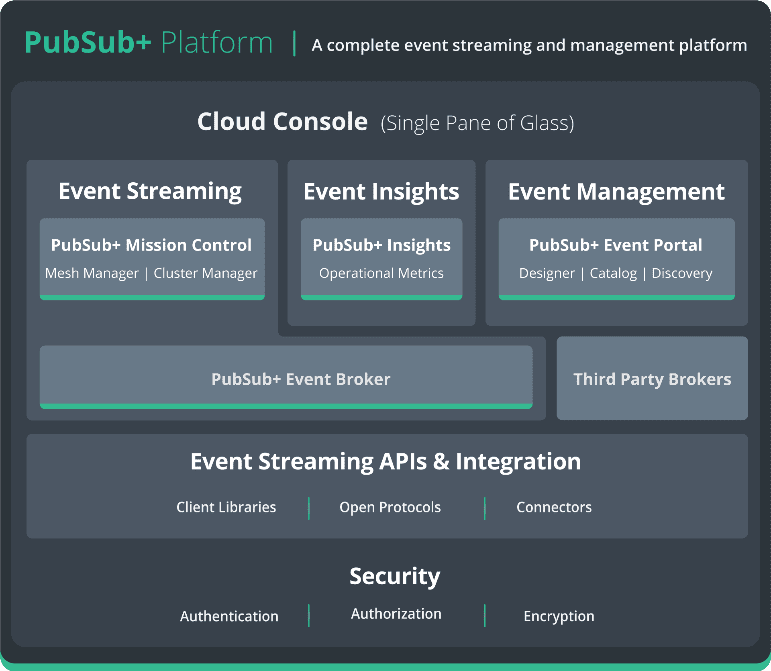

Enabling effective EDA is the role of Solace PubSub+ Platform.

The Solace platform is enterprise grade, covering item 1 in the list of deliverables. Building on its enterprise heritage, Solace provides comprehensive capabilities for authentication and authorization, as well as data encryption at rest and in motion, as part of the base platform.

Event Management and Data Governance

In terms of Event Management, PubSub+ Event Portal provides the centralized catalog, data discovery and data governance capability, covering items 2 through 5 and item 10.

PubSub+ Event Portal enables:

- Data Architects to define, version, and life cycle manage data schemas.

- Enterprise architects to define, version, and life cycle manage events and to bind schemas onto events.

- Enterprise architects to define, version, and life cycle manage application components/microservices.

- Enterprise architects to bind events to application components/microservices and define their relationship (publish/subscribe)

- Developers to download application definitions in AsyncAPI format and to put the definition through code generation tools to automatically produce skeleton code to which they add the business logic.

- Schemas, events, and application components to be grouped into application domains.

- App teams to define event API products, which are shared ‘external’ events that other parties can access.

- RBAC to limit access to application domains, events, and schemas.

- Users to search the catalog to find existing schemas/events of interest and encourage proper reuse of events – increasing the ROI of the events being produced.
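The design-to-code step in the list above can be illustrated with a toy generator that turns a channel definition into handler stubs. This is a sketch only: the document below is hand-written in the general layout of an AsyncAPI 2.x file rather than exported from Event Portal, the channel names and `operationId` values are hypothetical, and real projects would normally use existing AsyncAPI code generation tooling instead of `generate_skeleton`.

```python
from typing import Any, Dict, List

# Hypothetical application definition in the AsyncAPI 2.x layout.
ASYNCAPI_DOC: Dict[str, Any] = {
    "asyncapi": "2.6.0",
    "info": {"title": "Risk Sensitivities Service", "version": "1.0.0"},
    "channels": {
        "risk/sensitivities/{deskId}": {
            "publish": {"operationId": "sensitivity_update"},
        },
        "marketdata/normalized/{instrumentId}": {
            "subscribe": {"operationId": "normalized_price"},
        },
    },
}

# Template for one stub; the developer replaces the body with business logic.
STUB = '''def {name}(payload):
    """Handle the '{operation}' operation on channel '{channel}'."""
    raise NotImplementedError("add business logic here")
'''


def generate_skeleton(doc: Dict[str, Any]) -> str:
    """Emit one Python stub per operation found in the document's channels."""
    stubs: List[str] = []
    for channel, item in sorted(doc["channels"].items()):
        for operation in ("publish", "subscribe"):
            if operation in item:
                stubs.append(STUB.format(
                    name=item[operation]["operationId"],
                    operation=operation,
                    channel=channel,
                ))
    return "\n\n".join(stubs)


skeleton_code = generate_skeleton(ASYNCAPI_DOC)
```

The generated text is ordinary Python source, so the stubs stay aligned with the catalog definition and only the business logic is written by hand.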

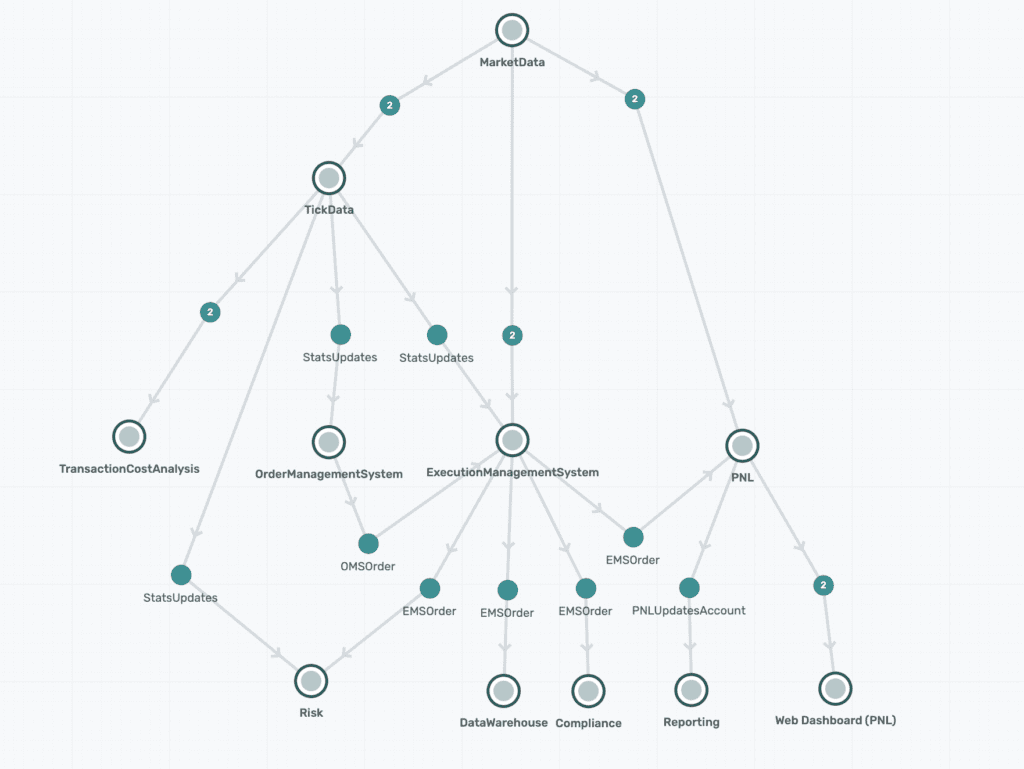

PubSub+ Event Portal also enables visualization of application interaction via the events they produce and consume. This makes it easy to determine where events originate and terminate and simplifies life cycle management.

The tracking of event producers and consumers is a key part of data quality and data lineage within the EDA. Combining good use of ‘meta-data’ with objects in the catalog can help users pick the correct event and data to consume. It can help prevent chained consumption, where ‘App Team C’ takes a feed from ‘App Team B’ rather than ‘App Team A’ because ‘App Team C’ does not know that the data attribute it needs originates from ‘App Team A’. In this case ‘App Team A’ is the “Golden Source”, whereas ‘App Team B’ provides “derived data”. Most organizations will have experienced the result: ‘App Team A’ makes a change that breaks ‘App Team C’, and because the dependency is unknown, it causes an outage that should have been avoided.
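The chained-consumption problem can be sketched as a walk over catalog metadata. The example below assumes a hypothetical, acyclic catalog in which each event records its producer and the events it is derived from. The event names and the `EVENTS` and `SUBSCRIPTIONS` structures are illustrative and do not reflect the Event Portal data model.

```python
from typing import Dict, List, Set

# Hypothetical catalog: event -> producer and the upstream events it derives from.
EVENTS: Dict[str, Dict] = {
    "trade/booked": {"producer": "App Team A", "derived_from": []},
    "trade/enriched": {"producer": "App Team B", "derived_from": ["trade/booked"]},
}

# Hypothetical record of which events each team consumes.
SUBSCRIPTIONS: Dict[str, List[str]] = {
    "App Team B": ["trade/booked"],
    "App Team C": ["trade/enriched"],
}


def golden_sources(event: str, catalog: Dict[str, Dict]) -> Set[str]:
    """Follow derived_from links back to events with no upstream (assumes no cycles)."""
    upstream = catalog[event]["derived_from"]
    if not upstream:
        return {event}
    found: Set[str] = set()
    for parent in upstream:
        found |= golden_sources(parent, catalog)
    return found


def hidden_dependencies(subscriptions: Dict[str, List[str]],
                        catalog: Dict[str, Dict]) -> Dict[str, List[str]]:
    """List, per consumer, the golden-source producers it depends on only indirectly."""
    report: Dict[str, List[str]] = {}
    for app, events in subscriptions.items():
        producers: Set[str] = set()
        for event in events:
            for source in golden_sources(event, catalog):
                if source not in events:
                    producers.add(catalog[source]["producer"])
        if producers:
            report[app] = sorted(producers)
    return report


dependency_report = hidden_dependencies(SUBSCRIPTIONS, EVENTS)
```

Here the report flags that ‘App Team C’ depends on ‘App Team A’ without subscribing to it directly, which is the unknown dependency described above.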

The ability to define, release and version event API products, i.e., which events are shared as a documented event API, enables teams to use and document their internal schemas and events and keep these private and separate from the external events other teams can use.

For example, this could be useful in the Central Market Data Service or the Reference Data Service.

Here the Market Data team will have several different sources to integrate, normalize and cache. All the internal event flows should be hidden as these will include raw data from the sources and only the normalized public events should be available to external parties. In this case the Market Data team defines as many events as it needs but only makes the normalized events that clients should be able to consume available via an API Product.

Likewise, a Reference Data Service may use many internal APIs for documenting, and interconnecting, data cleaning and data normalization flows, etc., and may expose an external API through which all areas of the bank can request initial data and receive updates as changes occur.

It is not difficult to see the advantage for central services, or capabilities, such as market data, reference data, pricing data, P&L risk, sensitivities, compliance, etc.: they can define and life cycle manage their external interfaces while allowing other teams with sufficient access to self-serve. This drives flexibility and agility to adapt and grow.

Solace PubSub+ Platform itself provides external APIs for integration with other systems. These APIs facilitate integration with trouble ticket/alerting systems and with planning and billing systems. They also enable integration with data governance and lineage systems, such as Solidatus, which look after the databases and application components that make up the storage layer. Combining the platform capabilities allows the organization to provide data lineage for a complete end-to-end view of a data flow.

PubSub+ Mission Control and PubSub+ Insights

PubSub+ Mission Control and PubSub+ Insights manage the deployment of the event brokers that form a distributed event mesh covering hybrid multi-cloud if/when required. An event mesh covers items 6 through 8 in the deliverables list above, and enforces item 4.

An event mesh powered by PubSub+ learns its routing table from the subscriptions that it receives. This means that new flows can be facilitated by agreement between the publisher and the consumer without needing administration actions to create the topic on the infrastructure. The event/data flows can originate on any event broker and terminate on any event broker within the mesh. This arrangement enables organizations to move from on-premises to hybrid cloud to multi-cloud by simply adding more brokers and connecting them into the mesh, allowing the mesh to grow and stretch as desired.

As the mesh learns its routing from the subscriptions it receives it is simple to support moving workloads between locations. Redeploying the application in the new location will cause the application subscriptions to be injected in the new location. The routing tables of the mesh will adjust and the required data for the application will now flow to the new location. This makes moving workloads to alternative sites for business reasons, or to facilitate business continuity, relatively simple.
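The idea of routing learned from subscriptions can be shown with a toy model. This is a simplified sketch, not Solace's implementation: the `Mesh` class, the broker names and the application name are hypothetical, and the matcher supports only a whole-level `*` wildcard and a trailing `>` wildcard in `/`-delimited topics.

```python
from typing import Dict, List, Tuple


def matches(subscription: str, topic: str) -> bool:
    """Match a topic against a subscription with '*' (one level) and trailing '>'."""
    sub_levels = subscription.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(sub_levels):
        if level == ">" and i == len(sub_levels) - 1:
            # '>' must cover at least one remaining topic level.
            return len(topic_levels) > i
        if i >= len(topic_levels):
            return False
        if level != "*" and level != topic_levels[i]:
            return False
    return len(sub_levels) == len(topic_levels)


class Mesh:
    """Toy mesh: routing is derived purely from where applications subscribe."""

    def __init__(self) -> None:
        self._apps: Dict[str, Tuple[str, List[str]]] = {}

    def connect(self, app: str, broker: str, subscriptions: List[str]) -> None:
        # Connecting (or reconnecting elsewhere) injects the app's subscriptions.
        self._apps[app] = (broker, list(subscriptions))

    def destinations(self, topic: str) -> List[str]:
        """Brokers that currently need to receive events published on this topic."""
        return sorted({
            broker
            for broker, subs in self._apps.values()
            if any(matches(s, topic) for s in subs)
        })


mesh = Mesh()
mesh.connect("var-engine", "on-prem-london", ["risk/*/sensitivities"])
before_move = mesh.destinations("risk/fx/sensitivities")

# Redeploying the same application in a new location moves the flow with it.
mesh.connect("var-engine", "cloud-frankfurt", ["risk/*/sensitivities"])
after_move = mesh.destinations("risk/fx/sensitivities")
```

No topic was created administratively in either location. The destination set changed only because the application's subscriptions appeared on a different broker.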

All Solace brokers support high availability deployment, mitigating broker failure and guaranteeing that persistent events will not be lost. Solace PubSub+ Mission Control provides centralized management of the event brokers and the mesh. Mission Control enables operators to add new brokers in new environments and easily connect them to the event mesh. Mission Control also enables resizing of the event brokers when expansion is needed.

Solace PubSub+ Insights is a metrics and alerts gathering tool that monitors broker alerts and captures statistics from the mesh. This enables users to confirm that the flows on the mesh are as expected from an event rate and a byte rate viewpoint and to plot trend lines for capacity planning. As capacities are approached it is possible to scale Solace brokers to restore headroom for the service being supported by the mesh.
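The trend-line idea can be illustrated with an ordinary least-squares fit over evenly spaced rate samples. This is a generic sketch rather than a description of how PubSub+ Insights works: the sample values, the capacity figure and both function names are hypothetical.

```python
from typing import List, Optional, Tuple


def linear_trend(samples: List[float]) -> Tuple[float, float]:
    """Least-squares slope and intercept for samples taken at periods 0, 1, 2, ..."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples to fit a trend")
    mean_x = (n - 1) / 2
    mean_y = sum(samples) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(samples))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def periods_until_capacity(samples: List[float], capacity: float) -> Optional[float]:
    """Periods after the last sample until the trend reaches capacity, if it ever does."""
    slope, intercept = linear_trend(samples)
    if slope <= 0:
        return None  # flat or falling trend never reaches the limit
    period_at_capacity = (capacity - intercept) / slope
    return max(0.0, period_at_capacity - (len(samples) - 1))


# Hypothetical weekly peak event rates (events per second) for one broker.
weekly_peak_rates = [100.0, 110.0, 120.0, 130.0]
weeks_left = periods_until_capacity(weekly_peak_rates, capacity=200.0)
```

A straight-line fit is the simplest possible model. Flows with seasonality or step changes would need something richer, but the principle of projecting headroom from observed rates is the same.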

Event Streaming APIs and Integration

Solace has always provided a rich set of APIs and adopted standards where available, e.g., JMS, MQTT and AMQP, so that getting events and data on to and off the mesh is as simple as possible with as few dependencies as possible.

As an extension of this philosophy Solace also provides a rich set of integration tools that allow integration with third party services, whether native cloud services, analytics tools, AI frameworks, databases, Kafka, IBM MQ, etc.

Heterogeneous Event Mesh

Solace also supports event brokers from other event streaming platforms within the modelling available in PubSub+ Event Portal. This is because other technologies, such as Apache Kafka, are often already deployed as part of the big data services that an organization might leverage for risk and compliance projects. Ripping out Kafka and substituting Solace event brokers in these cases does not make much sense, but it is also undesirable for data governance and data lineage to stop at the Solace/Kafka boundary.

Today Solace supports discovery and design of Kafka (Apache, Confluent, MSK) events, schemas, and application components in PubSub+ Event Portal. Other brokers will be supported according to demand and community effort.

Summary

Implementing FRTB forces some fundamental changes on banks and financial institutions. FRTB has some overlap with other regulations and regulatory changes in the future are guaranteed to arise. As there is regulatory overlap, organizations should avoid deploying regulatory silos and instead deploy services/capabilities that facilitate and encourage reuse. This will increase organizational agility and flexibility and ultimately reduce the cost of adapting to change whether the change is required by regulation or desired by customers.

The deployment of reusable capabilities is eased by adopting a set of common/standard data models with which parties can exchange information. Data governance and lineage are required to control and enforce the standards and ensure data quality does not degrade over its life cycle. Poor data quality is the biggest problem that organizations face, and it is the largest cause of complaints and fines from regulators.

Solace has a track record of helping organizations architect for a changing future, including more than 60% of the largest Tier 1 banks, where Solace is deployed across Front Office, Middle Office, and Back Office. Architecting for change minimizes the risk and cost of change, so organizations can better satisfy their customers in an evolving marketplace.

Solace enables organizations to catalog, govern, and manage the life cycle of events/data that flow within the organization, providing secure access control and lineage capabilities across hybrid and multi-cloud deployments. By providing a comprehensive data governance capability, Solace enables organizations to control the quality of data throughout its life cycle.

PubSub+ is a comprehensive EDA platform that enables organizations to easily manage event/information flows in heterogeneous environments with minimum effort, allowing focus on the business problem and customer experience. Deploying Solace can ease the route to FRTB and future regulations.

Mathew Hobbis